Wow, number 4 looks like a great ancestor! It seems to have a lot of potential for obstacle avoidance AND your future mapping bot (at least as far as detecting boundary walls goes).

I'm probably asking for trouble here, but would it be illegal, immoral and possibly fattening to apply the "long distance factor" as a multiplier after the step fitnesses have been summed? It seems like that would help to separate the travellers from the spinners.

Oops, on further consideration that would penalize the bot for rounding the obstacle and returning to its starting location 🙁

Anything seems possible when you don't know what you're talking about.

> Wow, number 4 looks like a great ancestor! It seems to have a lot of potential for obstacle avoidance AND your future mapping bot (at least as far as detecting boundary walls goes).

Yes, I thought so. If it was a race car, it was taking the apex like a Boss! Most bots I see doing obstacle avoidance are very twitchy and indecisive, swaying back and forth. The few wall followers I've seen likewise keep oscillating along the entire length, while this one damped out the oscillations within two cycles and was straight as an arrow the rest of the time. It just needs to get the lead out.

> I'm probably asking for trouble here, but would it be illegal, immoral and possibly fattening to apply the "long distance factor" as a multiplier after the step fitnesses have been summed? It seems like that would help to separate the travellers from the spinners.

I'm really starting to feel dense here. I think you see something clearly that I'm just not latching on. #4 has a Fitness=0.0288 while the Idiot Child that was the best Genome had a Fitness=0.0452. Note that if it was running at full speed in wide open space the Fitness would equal 1.0!

The point being... if I sum up the distance traveled, this idiot child is still winning out. If I do the difference at the end from the start point, it would be based on whether the last step was near or far from the start point.

Oh... is this what you mean? Maybe a running sum... every step's distance from the start is summed, and the result is applied as a multiplying factor at the end. That way something that lapped the island should fare better than something playing idiot child. That might work!!! If that isn't where you were heading... use more fattening words. 🤣
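One way to read the running-sum idea, as a minimal Python sketch. The function name, the `arena_scale` normalisation and the two sample paths are assumptions made up for illustration; nothing here is taken from the simulator itself.

```python
import math

def distance_weighted_fitness(step_fitnesses, positions, arena_scale):
    """Mean step fitness multiplied by the mean distance from the start.

    positions[0] is taken as the start point. arena_scale is a
    hypothetical constant used only to keep the factor near 0..1.
    """
    x0, y0 = positions[0]
    mean_dist = sum(math.hypot(x - x0, y - y0) for x, y in positions) / len(positions)
    mean_step = sum(step_fitnesses) / len(step_fitnesses)
    return mean_step * (mean_dist / arena_scale)

def circle(radius, steps):
    """Points on a circle that passes through the origin at step 0."""
    return [(radius * (1 - math.cos(2 * math.pi * i / steps)),
             radius * math.sin(2 * math.pi * i / steps))
            for i in range(steps)]

# A fast spinner on a tiny circle vs. a slower bot on a big loop.
spinner = distance_weighted_fitness([0.9] * 100, circle(5, 100), arena_scale=1000)
lapper = distance_weighted_fitness([0.5] * 100, circle(400, 100), arena_scale=1000)
print(f"spinner={spinner:.4f} lapper={lapper:.4f}")
```

With this weighting the big loop outscores the tight circle even though its per-step fitness is lower. The same factor would also favour a bot that simply parks far from the start, and it depends on the arena size through `arena_scale`.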

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

No, I don't think I have a clear concept of a superior fitness calculation. I'd like to see something that rewards a bot that covers a lot of distance but my above strategy is flawed. It seems that distance travelled itself is not enough since if one bot travels 1000 units perfectly straight in one direction it would seem to be preferable to a bot that just spins in a 10 unit circle 33 times.

Similarly, calculating the distance from the start to the end point isn't sufficient because a bot that travels out and around the island and then returns to its starting location would score less than a bot that runs directly into a wall and just kept pushing into it for the rest of the experiment.
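The two flawed measures above can be compared side by side. This is a small Python sketch with made-up paths; reading "10 unit circle" as a circle of diameter 10 is an assumption, as is the 300-unit run of the wall-pusher.

```python
import math

def path_length(points):
    """Total distance travelled along the path."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def net_displacement(points):
    """Straight-line distance from the first point to the last."""
    return math.dist(points[0], points[-1])

# Traveller: 1000 units in a straight line.
straight = [(float(i), 0.0) for i in range(1001)]

# Spinner: 33 laps of a circle of diameter 10 (assumed interpretation).
spin = [(5 * math.cos(math.radians(k)), 5 * math.sin(math.radians(k)))
        for k in range(33 * 360 + 1)]

# Wall-pusher: drives 300 units into a wall, then stays there.
pusher = [(float(i), 0.0) for i in range(301)] + [(300.0, 0.0)] * 50

for name, pts in [("straight", straight), ("spin", spin), ("pusher", pusher)]:
    print(f"{name:8s} path={path_length(pts):7.1f} net={net_displacement(pts):7.1f}")
```

Path length alone cannot tell the traveller from the spinner, and net displacement alone ranks the wall-pusher above any bot that completes a loop and returns to its start.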

However, any other strategy I imagine seems to depend on the size and/or shape of the enclosure. For instance, using the ratio of the farthest distance from the starting point that the bot attained. You'd think that would penalize spinners and reward explorers but it would seem to bias the mutation towards success in the "home" enclosure and might not transfer well to new environments.

IDK, is there some way of combining distance with "straightness" ? I suppose that "straightness" is partly expressed by the difference in wheel speed.


Yes, this is the problem I couldn't see a solution to when you first brought it up. I'm still going to try the average distance from start, just to see what kind of effect it has. But I think you're right: if it crams into the furthest corner, it scores big. It is also only valid if it is learning in the same arena, so results can't be compared with those learned in, say, the Auditorium versus the ACAA arena.


Here is the run (First 20 Generations) using a distance factor. As we surmised, it did get rid of all of the high-speed-tight-circle Genomes and it did give some interesting results.

Unfortunately, it rewarded Genomes that were slow as mud and ended up at the extreme top corner of the arena at the end of 300 steps. I wasn't predicting this ahead of time, but it makes perfect sense in hindsight.

@robotbuilder, I believe your solution would be far less compute-intensive than calculating distance at every time step, but it will lead to the same basic conclusion and still have the limitation of not working across different arenas: the apples-and-oranges scenario. That matters especially because we want the robot to continue learning after it is cut loose in the world.

In my (future) acid test of the mapping robot, I'll have it map that entire school that is about 100 meters by 30 meters. It'll need to adapt using some other technique besides size or distance.

I need to digest all that I've seen, but I think the ACAA method of rewarding speed and deducting for differential speed is the right strategy.

Fitness = ((Vl + Vr) / 2) * (1 - (Vl - Vr) / 2)...


Yeah, 7, 9 and 13 looked pretty good but didn't seem to lead anywhere in the long run.

Robot builder's extrema sounds promising at first reading. At least it sounds like it'll reward explorers.


Hi @inq,

Just a 'throwaway' comment ... which may be best thrown away ...

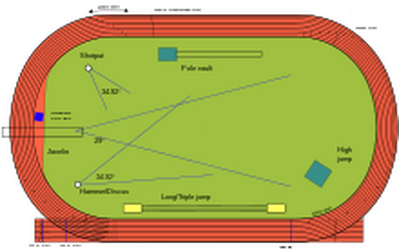

As you effectively have an oval race track with the ACAA, perhaps it should be treated a bit like one, in which an athlete gets credit for crossing the finish line and, in a multi-lap race, gets a 'credit' for completing a lap each time they cross the line in the forward direction.

As a reminder a running track looks like this (from https://en.wikipedia.org/wiki/Running_track)

Consider having one (or maybe more than one) virtual line across the track to indicate a lap (half a lap if you have two lines, etc.). Then modify the fitness evaluation so that crossing a line in the forward direction adds a 'bonus' to the subsequent calculated fitness values, whilst crossing it in the reverse direction subtracts the 'bonus'.

Thus the 'idiot' that spins in a tight circle, never crossing the 'lap' line, can accrue points for covering distance, but not the bonus. Hence, if the bonus is worth a 'long' distance, a bot covering a lap will be rewarded more highly than the small-circle runner.

It might be necessary to have several 'lap' lines to encourage it around the track... e.g. a bit like a 400m running track could be divided into 4 X 100m segments, so bonus credit (and debit) is given for crossing each of the 100m lines.

Whilst it makes sense for the lines to be separated by roughly equal distances, they do not have to be precisely equal.

Obviously, this implies the decision to add (or subtract or no change) a bonus is made each time the fitness value is re-evaluated.

A refinement could substitute the bonus increment for the points accrued by distance covered between any two adjacent (part-)lap events. This would remove the points added by 'meandering' and credit only the 'lap' bonus, favouring the shortest path. Obviously, this could involve some messy accounting and may not be worthwhile.
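A rough Python sketch of the virtual lap-line check, for anyone who wants to try it. The gate representation (a line segment whose point order defines "forward"), the function names and the simplification of adding the bonus once per crossing are all assumptions for illustration, not a worked-out scheme.

```python
def cross(o, a, b):
    """2D cross product of vectors o->a and o->b."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def gate_crossing(prev, curr, gate_a, gate_b):
    """Return +1, -1 or 0 for one step prev->curr across the gate a->b.

    +1 means the bot moved from the right-hand side of a->b to the
    left-hand side (taken here as "forward"); -1 is the reverse.
    """
    side_prev = cross(gate_a, gate_b, prev)
    side_curr = cross(gate_a, gate_b, curr)
    if side_prev * side_curr >= 0:
        return 0  # stayed on one side (or only touched the line)
    # The step must pass between the gate's end points, not beyond them.
    if cross(prev, curr, gate_a) * cross(prev, curr, gate_b) > 0:
        return 0
    return 1 if side_curr > 0 else -1

def lap_bonus(path, gates, bonus):
    """Sum of signed bonuses over every step and every gate."""
    total = 0.0
    for prev, curr in zip(path, path[1:]):
        for gate_a, gate_b in gates:
            total += bonus * gate_crossing(prev, curr, gate_a, gate_b)
    return total

# Gate across a lane from (0, 10) to (0, 0); forward is towards +x.
gate = ((0, 10), (0, 0))
print(lap_bonus([(-1, 5), (1, 5)], [gate], bonus=10.0))           # forward once
print(lap_bonus([(-1, 5), (1, 5), (-1, 5)], [gate], bonus=10.0))  # forward, then back
```

A bot that dithers back and forth over a line nets nothing, and a tight spinner that never reaches a line never sees the bonus. The gate positions are knowledge about the arena, so the scheme is tied to a particular track.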

Perhaps such manipulations are 'cheating' -- I don't think they are -- but I am not a lawyer for AI bots.

Just a crazy thought, best wishes, Dave

You've all come up with some good suggestions. And I may need to try these. My main resistance to these is they require a state of the global environment... either distance from some starting point, min/max limits of travel distance or having pre-known milestones.

If... if... we can assume that all the learning can be done up-front and never addressed again, then these should work. However, the moment the bot is put into a different environment, the fitness equations of the new arena are totally different. I'm thinking that mixing learning from one place with that of another is not a good thing.

At the moment, I'm still trying to go with the concept that all the information necessary to avoid an obstruction can be observed at the instant. I mean... we humans certainly don't need to know the distance from home we've traveled or the size of the room we're in. So I'm going with that simplistic model... until I've exhausted all ideas.

I had an epiphany this morning that this being a very non-linear problem already, why should I expect to solve it with a single, smooth, closed-form equation like:

fitness = V * (1 - Math.Pow(dv, 0.2)) * s;

- V = (Vl + Vr) / 2; -1 <= V <= 1

- dv = Math.Abs(Vl - Vr) / 2; 0 <= dv <= 1

- s = closest sensor distance 0 <= s <= 1

- Vl, Vr = +/- speed of wheels / Max speed
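To get a feel for how the closed-form equation behaves, here it is transcribed into Python using the definitions above. The sample wheel speeds are arbitrary test points chosen for illustration.

```python
def step_fitness(vl, vr, s):
    """fitness = V * (1 - dv^0.2) * s, with all inputs normalised.

    vl, vr: wheel speeds in -1..1; s: closest sensor distance in 0..1.
    """
    v = (vl + vr) / 2        # mean speed, -1..1
    dv = abs(vl - vr) / 2    # differential speed, 0..1
    return v * (1 - dv ** 0.2) * s

cases = {
    "full speed, straight, open space": (1.0, 1.0, 1.0),
    "gentle curve": (1.0, 0.9, 1.0),
    "spin in place": (1.0, -1.0, 1.0),
    "full speed reverse": (-1.0, -1.0, 1.0),
}
for name, (vl, vr, s) in cases.items():
    print(f"{name:34s} {step_fitness(vl, vr, s):+.3f}")
```

The 0.2 exponent is harsh on even small wheel-speed differences: a 10% difference between the wheels already drops the score to roughly 0.43. Because V is signed here, straight-line reverse scores negative.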

I'm experimenting using the same kind of logic we humans would do in the situation. It's not working quite yet, but in my last life-time run, the best Genome did attempt to move around the arena. In all previous tests at least 50% of the better fitness Genomes all spun around in tight ovals. This is the last run's piece-wise fitness function:

    // Arbitrary distance constant, TBD. This might need to be
    // a function... we need more distance if we're traveling
    // fast.
    double critDistance = 100;  // mm
    critDistance /= ToFRange;   // Normalize it.

    double fitness = s > critDistance ?
        // If over critical distance, we only want to encourage
        // what it's doing. We optimize on speed and discourage
        // spinning to encourage it to speed up.
        V * (1 - Math.Pow(dv, 0.2)) :
        // Otherwise see if we're heading for or away from an
        // obstruction.
        (_shortestToF - _lastShortestToF > 0 ?
            // We're pulling away from the obstruction, we're doing
            // the right thing... keep doing it. (Forward or Backward)
            Math.Abs(V) * (1 - Math.Pow(dv, 0.2)) :
            // Otherwise, we need to be discouraging what it's doing.
            -Math.Abs(V));

    // Remember this reading for the next step's comparison.
    _lastShortestToF = _shortestToF;


Hi @inq,

> I'm experimenting using the same kind of logic we humans would do in the situation.

That sums up why I suggested the lap bonus idea.

And also implies a general problem I have had at the back of my mind for a while. Some goals, like standing up, or moving in a straight line are 'immediate' and 'continuous' in that at any one moment, the bot is either achieving or failing, so that they are likely to be capable of being expressed as a simple expression like:

IF (X > Y) THEN

..{you are doing the right thing, carry on}..

ELSE ....{you are not doing the right thing, do this instead}..

(where X & Y are summaries of multiple sensor outputs and their boundary/goal values, etc, as required)

But something like:

"Do two laps of the arena, avoiding the obstacles and the walls, as quickly as possible"

is a bit more tricky, (especially if you refrain from using an appropriate algorithm, like wall following, on the basis it is too specific to that particular environment).

And I suggest part of the difficulty involves expressing the goal in a simple manner that doesn't assume an LLM AI machine interpreting it, and that isn't expressly coded as how to do it.

More specifically, to cover two laps, the bot (or runner, assuming the runner cannot continually see the entirety of the lap and is not familiar with the task) must do an arbitrarily large number of operations before it gets any indication that it is doing the required 'thing' from its sensors.

I suggest, that some of the AI demonstrations, e.g. the 'dog' that learns to stand up, has at least some immediate feedback from its sensors measuring the direction that gravity is pulling.

Dogs racing on a track have a 'hare' to follow for similar immediate feedback. Whilst I have never been interested in dogs racing, I suggest that in the absence of the hare (and any other real-time target or instructions), it would take a long time for the dogs to complete a (say) 4 lap race, unless perhaps they had been specially taught to do it, over a long period of time, as a 'party trick'.

----------

That does not mean I am in any way recommending my lap bonus model ... it was only a simple interpretation of what you would 'tell' a runner to do when outlining the aim of a race.

I was just suggesting the need for a 'term' in the fitness expression, which continuously rewarded moves in the desired direction(s) and 'punishes' those in the 'wrong' direction(s). Hence, the 'idiot' that runs in a small circle would only accrue a small 'payment' at any one time, as the second half of each circle would offset any 'payments' from the first half.

How you encode such a reward principle is a personal choice.

Best wishes, Dave

> If... if... we can assume that all the learning can be done up-front and never addressed again, then these should work. However, the moment the bot is put into a different environment, the fitness equations of the new arena are totally different. I'm thinking that mixing learning from one place with that of another is not a good thing.

Why is the fitness requirement entirely different? If it is in the form of "don't hit a wall" or "follow a wall" then the requirement, the goal, is the same even if you keep changing the layout.

It is a requirement to be able to transfer learning from one situation to another without the need to relearn from the start.

Once an ANN has learned to recognize an object it can continue to do so even if the object changes size or position or is even partially obscured by other objects or slightly different in some way.

So the robot has to be exposed to many different layouts and the weights need to be adjusted to handle them all and hopefully layouts not yet encountered.

In a hard coded solution like an algorithm to map a house from the lidar readings the robot is able to learn new layouts although the method of learning the layouts is the same algorithm.

> ... we humans certainly don't need to know the distance from home we've traveled or the size of the room we're in.

But we do need to know what direction to take to get home. And we really do know the size of a room we are in, we just don't know the size in exact measurements.

There is so much data pouring into the human brain that it uses "attentional networks" to filter out anything that is extraneous to its current goal/s.

The output of the two neuron robot simulator pays attention to all the inputs so the actual path is controlled by all the walls not just the one being followed. It is like trying to pour water in one glass based on the position of two glasses.

Another thought I had was this. We see a stable fixed world even though the actual inputs to the eyes are continually changing. So in the case of the simulated robot there is a continuous change of input values, just like the eye, but there is no stable interpretation of those values to use to avoid or follow walls or navigate.

As the simulated robot zooms around it is just seeing a crazy world as we might see when someone is taking a video while waving it all over the place. Making a stable input requires the data be directed to constructing a stable world in short term memory. Most of our behaviors are based on that stable constructed world "out there" not on our immediate inputs.

I suspect the wasp is constructing a stable layout in memory when it flies around its nest so it can find its way back later. In other words there are some (most?) things the simple 2 neuron brain can never learn to do.

There are some things it can learn to do using genetic algorithms such as moving around without hitting anything (and that may appear to be wall following) and in that sense it is an example of how to use a genetic algorithm to learn something.

Gents,

Check out this article. In the second experiment there are some visualizations on the activation of hidden nodes while the robot moves around. It's interesting how some nodes are more active during specific behaviors.

Tom

To err is human.

To really foul up, use a computer.


Thank you for this reference. As this is the second paper I've seen using the same bot and arena, and it is about 20 years newer, it cleared up some of my assumptions... some I guessed right, some wrong. It has significantly improved the results. I need to do a more exhaustive Internet search using the principal's name and the bot's name to see if I can find others. 😉

Here are some observations of the changes made.

- My first thought was to reset values to be as close to the ACAA bot as I could, within the constraints of mine being virtual and their results being real-world only. I made these changes one at a time to see how each affects the behavior of the bot. Fortunately, doing it in the virtual world allows this, since a lifetime can be completed in under 30 seconds, whereas their real-world bot takes over two days to run the same lifetime.

- Infrared Sensor Range - This paper indicated the practical range of the infrared sensors was only 4-5 cm whereas I had estimated them to be 12 cm. This made for a very manic bot using all my other current modifications (fitness equation, etc). It tended to do a loop in the upper right corner before continuing around the track and it wobbled more within the lane.

- Genome Size - In the first paper there was no guidance on how they implemented the ANN. In this paper they confirmed that the Genome had 20 elements. This is the size of the Weights matrix, so I had that correct. As time went on, I found that adding a Bias layer improved results, which adds 2 elements in my later tests. I've configured this so that I can revert to their model. I did not see any significant changes, but I'll be exploring this more.

- Fitness Equation - Although I was showing promise with a piece-wise-logical function, it was troubling that I should have to go to this trouble. The whole idea is for AI to figure these out for itself. I reverted back to their equation of using the square-root function. I retain the distance versus intensity component.

- 0.0 <= V <= 1.0 - This was the most troubling, as the wording in the first paper claimed that their equation did not constitute a bias for traveling forward or backward and that the bot simply learned that it had better vision forward. Yes, the bot has better vision forward, but forcing the equation into a 0 to 1 range causes negative speeds to have a fitness below 0.5 and positive speeds above 0.5. This obviously causes a preference to move forward. The second paper corrected this wording. I adjusted the V value to the 0-1 range accordingly.

- I noticed it eliminated the circling behavior almost completely from the populations. Going from about 90% traveling in tight circles, ovals and eye shapes to about 5%.

- However, it did add a new bad behavior about 90% of the time: the bot would start out, travel various distances and finally slow down to zero. This was always without hitting a wall.

- Velocity Determination - In their bot, they use their wheel encoders to determine the speed of the bot. It was not stated in either paper whether the wheels overcome friction and continue to turn when stuck against a wall. Not knowing the strength of their motors, I couldn't answer this question. However, in my virtual bot, I have programmed there to be zero speed when hitting a wall, and the feedback assumes this.

- Mutation Probability - They use a huge percentage of 20%. Most of my readings had it closer to 3%. I've set it to 20%.

- Bot Fitness - They confirmed in this second paper that the fitness is an average of all the step fitnesses.

- Misc Settings - Their step time is a longish 330 milliseconds versus 100 that I've been using. They only use 80 steps in a trial run and do this for expedience of their test. Before... I used 100ms and 300 or 1000 steps. I've set both to be consistent with theirs.

- Reality - The biggest hurdle in transferring a Genome (or even a Generation) to the real bot, and having it give a meaningful initial advantage, is how well the virtual bot models reality. I have incorporated inertia, but it is an incomplete model. It only models a finite acceleration of each motor. It does not take into account things like:

- It's easier to increase the speed of a bot spinning around like a top than to accelerate forward / reverse. IOW, I did not incorporate polar inertia versus linear inertia.

- I've noticed that the stepper motors can slow down a bot far faster than they can accelerate a bot. I'm wanting to quantify this in tests at some point. Another ToDo list item.

- Noise - To improve real-world simulation, I incorporated a random noise in the return value of the distance measurements. It was interesting to see that this caused some bots to be useful that weren't with zero noise. As mentioned above about 90% of the bots ended up slowing down to zero somewhere on the course without hitting a wall. With noise added, this same behavior occurred, but the bot jitters around turning back and forth and moving small increments based on the variations in "what it's seeing." A small fraction of the bots were able to get out of this jam and continue on.
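A minimal Python sketch of the sensor-noise idea from the last bullet. The post only says "random noise", so the Gaussian distribution, the `sigma` value and the clamping to the normalised 0..1 range are assumptions for illustration.

```python
import random

def noisy_reading(true_distance, sigma, rng):
    """Normalised distance reading (0..1) with additive noise, clamped.

    Gaussian noise is an assumed choice, not taken from the simulator.
    """
    return min(1.0, max(0.0, true_distance + rng.gauss(0.0, sigma)))

rng = random.Random(42)  # seeded so a run can be repeated

# A bot that has stalled at a fixed distance still "sees" a changing world.
readings = [noisy_reading(0.30, 0.02, rng) for _ in range(5)]
print([round(r, 3) for r in readings])
```

Since the network's outputs depend on the readings, a stationary bot with noisy inputs gets slightly different wheel commands each step, which fits the jitter described above.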

Failure to Identify a Good Bot

Before these changes and even after, the most troubling thing is that the fitness value does not identify the best working bot! Not one lifetime run has resulted in the best Genome being of any use at all. No best Genome has even succeeded completing one lap. I don't see this mentioned in either of their papers. They show graphs of the up/downs of the best and average fitness, but they don't talk about actual Genome selection and being useful in the real-world in relation to these metrics.

This is troubling for two reasons.

- How does a computer select a Genome to be used in a practical sense?

- How does the on-board computer take advantage of on-going learning in the real world? The best fitness value is more likely to result in a dysfunctional bot than help improve it. I guess ChatGPT is showing some of this dysfunction, so I don't believe it is an inherent limitation of my current implementation.

Early on I had the ability to play back the best Genome overall and the best Genome out of each generation. Because the former has never been useful, I started to doubt that even the best of a generation identifies a bot worth looking at. IOW, I was only visually looking at the best of each generation (most of which failed), while a good choice might be hiding at 80% of the best Fitness.

I decided to take up @davee's suggestion and implement a lapping function. However, this does not feed into the Genetic Algorithm selection/mating (as yet). It is simply used to identify all Genomes, out of the entire lifetime (8000 Genomes typical), that are capable of going around the ACAA track. Further study is called for.

VBR,

Inq


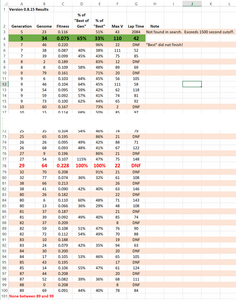

The results are somewhat better. However, the biggest change is not in the actual analysis: searching for any Genome that can lap the course has given far better results than the Best Genome or even the Best Genome of a Generation. The Best Genome did not even finish a lap. Almost all of the Best Genomes of a Generation also DNF'd. If you note the percentage-of-best columns in the following spreadsheet, you'll see that the truly good lapping Genomes have Fitness values that are mere fractions of those of the Genomes that were considered best.
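The search described above amounts to a filter over the whole lifetime rather than a sort by fitness. A small Python sketch, where the `GenomeResult` record, its field names and the three sample rows are hypothetical, made-up values and not results from the run:

```python
from dataclasses import dataclass

@dataclass
class GenomeResult:
    generation: int
    fitness: float
    laps: int  # laps completed during playback (hypothetical field)

def lapping_genomes(results, min_laps=1):
    """Return (result, percent_of_best_fitness) for every lapping Genome.

    Sorted by laps completed, most first; fitness is only reported,
    never used for selection.
    """
    best = max(r.fitness for r in results)
    lappers = sorted((r for r in results if r.laps >= min_laps),
                     key=lambda r: r.laps, reverse=True)
    return [(r, 100.0 * r.fitness / best) for r in lappers]

# Made-up numbers purely to exercise the function.
sample = [
    GenomeResult(generation=12, fitness=0.30, laps=0),  # "best" by fitness, DNF
    GenomeResult(generation=5, fitness=0.10, laps=2),
    GenomeResult(generation=7, fitness=0.15, laps=1),
]
for result, pct in lapping_genomes(sample):
    print(f"gen {result.generation}: {result.laps} laps, {pct:.0f}% of best fitness")
```

Keeping the lap count out of the fitness value, as here, matches the current setup in which lapping is used only to find candidates and does not feed selection or mating.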

Here is a screen-shot and if you're really interested, the spreadsheet of the results.

And here is the best lapping Genome with a Fitness value a mere 33% of the "Best". It was found in only Generation 5. It is achieving a speed of 110 mm/s which is still not reaching the 204 hard limit I'm imposing.
