Test 3 - Using the Carrot and the STICK

In the previous test, I noticed the preference for driving backwards. As I don't have eyes in the back of my head and don't run backwards, I don't see why it would be unreasonable to encourage my bot to prefer moving forwards.

In this test, I simply changed the Velocity (V) term so that it ranges over -1 <= V <= 1. The fitness of a Genome is the sum of the fitness values at each time slice, so this stick discourages moving backward only by reducing that total. It still permits the bot to back up and bounce off a wall, but it doesn't reward it for hauling long distances backwards. The equations look like:

- V /= MaxSpeed; // -1 to 1

- dv /= 2.0 * MaxSpeed; // 0 to 1

- i /= ToFRange; // 0 to 1

- fitness = V * (1 - Math.Sqrt(dv)) * i;
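To make the "stick" concrete, here is a minimal Python sketch of the per-slice calculation and the Genome total. It is not the simulator's code: the function names are made up for illustration, and the constants 204 and 305 are borrowed from the speed and distance ranges quoted later in the thread, so treat them as assumptions.

```python
import math

MAX_SPEED = 204.0   # assumed wheel speed limit (mm/s), quoted later in the thread
TOF_RANGE = 305.0   # assumed maximum sensor distance, quoted later in the thread


def slice_fitness(v_left, v_right, nearest):
    """Fitness for one time slice; it goes negative when the bot reverses."""
    v = (v_left + v_right) / 2.0 / MAX_SPEED          # -1 to 1
    dv = abs(v_left - v_right) / (2.0 * MAX_SPEED)    # 0 to 1
    i = nearest / TOF_RANGE                           # 0 to 1
    return v * (1.0 - math.sqrt(dv)) * i


def genome_fitness(slices):
    """Sum the per-slice fitness over a whole run of (v_left, v_right, nearest)."""
    return sum(slice_fitness(*s) for s in slices)
```

A slice spent at full speed forward in open space scores 1, the same slice in reverse scores -1, so a long reverse run simply cancels out forward progress in the total.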

This accomplished the goal in that you don't see the predominance of driving backwards into a wall. Again, the best Genome fitness occurred early in the life cycle at Generation 30. All subsequent generations failed to improve on it.

Generation: 30

1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.7173 1.2635 1.2635

1.2635 1.2635 1.2635 1.2635 0.9770 1.2635 0.1936 1.2635 1.2635 1.2635

1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635

1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635

1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635

1.2635 1.2635 1.2635 1.2635 1.3418 1.2635 1.2635 1.2635 1.2635 1.2635

1.2635 1.2635 1.2635 0.8401 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635

1.2635 1.2635 1.4312 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635 1.2635

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

Test 4 - Why the Square-Root

This is where I came to the conclusion that the square-root term used in the ACAA fitness equation is purely a weighting factor placed on the difference in speed between the two wheels. Since dv lies between 0 and 1, taking the square-root makes it larger, so the term becomes more significant. This is borne out in the video below, where I simply take out the square-root function.

- V /= MaxSpeed; // -1 to 1

- dv /= 2.0 * MaxSpeed; // 0 to 1

- i /= ToFRange; // 0 to 1

- fitness = V * (1 - dv) * i;

By removing the square-root in this test, this term has less significance compared to the straight-ahead speed and the distance from the walls. Watching this life-cycle in relation to the previous ones, you'll certainly note the predominance of it turning around and around.
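A quick numeric check of why the square-root matters, as a Python sketch (the helper name `turn_factor` is hypothetical). It compares the multiplier `1 - sqrt(dv)` from Test 3 with the `1 - dv` used here, for a few normalized wheel-speed differences.

```python
import math


def turn_factor(dv, use_sqrt):
    """Multiplier applied to the fitness for a normalized speed difference dv (0 to 1)."""
    penalty = math.sqrt(dv) if use_sqrt else dv
    return 1.0 - penalty


# Print dv, the factor with the square-root, and the factor without it.
for dv in (0.01, 0.04, 0.25, 1.0):
    print(dv, turn_factor(dv, True), turn_factor(dv, False))
```

At dv = 0.25 the square-root version halves the fitness while the plain version only removes a quarter of it, which is consistent with the bot being much happier to circle in this test.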

Generation: 12

1.1440 0.6066 1.5575 1.0842 0.4777 0.7988 0.8154 1.1669 1.5532 1.0394

2.9073 1.0586 0.7424 1.3885 2.3031 2.5132 1.1486 0.8975 1.4054 0.8009

2.3031 0.7988 0.9228 1.4144 1.0056 0.7314 0.3855 0.7086 0.8769 0.7988

0.6928 1.4828 0.8466 1.0764 0.7757 2.0389 1.9213 0.7988 1.0408 1.0221

0.7988 1.4167 0.7988 0.9394 1.0586 1.5344 1.4902 0.7988 1.3983 0.7988

0.8466 1.0957 1.1121 1.0221 3.7784 1.5099 2.1916 0.7988 0.6389 0.8154

2.0847 1.1425 0.4679 1.0222 1.1242 0.7988 2.2826 1.4813 0.7251 1.5852

0.7001 0.7988 2.6794 0.7424 1.0957 2.1447 0.7988 0.3957 5.2352 0.8009


To infinity and beyond! Buzz Lightyear

By this time, I'd seen hours of the robot dancing on the screen and realized that there was nothing cosmic about scaling the input parameters V, dv and i to be in some strict range. All they do is produce the fitness value for each time slice, which is summed into the total fitness of a Genome. Even after that... all the total is used for is to compute a Genome's relative superiority over the other Genomes, at which point it gets to potentially produce more children with at least some of its hopefully good traits. So, for now, I've taken out the normalizing altogether. Now the equations are simply

- V /= MaxSpeed; // -1 to 1

- dv /= 2.0 * MaxSpeed; // 0 to 1

- i /= ToFRange; // 0 to 1

- fitness = Math.Pow(V, A) * (1 - Math.Pow(dv, B)) * Math.Pow(i, C);

Where A, B and C can be tuned to the desired characteristics. For terms between 0 and 1, an exponent < 1 makes the term more significant and an exponent > 1 makes it less significant.
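One wrinkle with the tunable form is that V can be negative. As far as I know, C#'s `Math.Pow` returns NaN for a negative base with a fractional exponent, and Python's `**` produces a complex number in the same case. The Python sketch below works around that with a sign-preserving power. `signed_pow` and `tuned_fitness` are hypothetical names, and keeping the sign of V is my assumption about the intended behaviour, not something stated in the post.

```python
import math


def signed_pow(x, p):
    """Raise |x| to the power p and put the sign of x back on the result."""
    return math.copysign(abs(x) ** p, x)


def tuned_fitness(v, dv, i, a=1.0, b=0.5, c=1.0):
    """V^A * (1 - dv^B) * i^C with v in -1..1 and dv, i in 0..1."""
    return signed_pow(v, a) * (1.0 - dv ** b) * i ** c
```

With the defaults (A = 1, B = 0.5, C = 1) this reduces to the Test 3 equation, so the earlier tests are just special cases of the exponents.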


Would it be possible to substitute some expression of (actual distance travelled)/(potential distance travelled) instead of the difference in wheel speed? I'm thinking that you'd want to install a preference for longer runs rather than short segments in a continuous turn.

This ratio would be self-normalizing and still be -1<= X <=1.

Anything seems possible when you don't know what you're talking about.

> Would it be possible to substitute some expression of (actual distance travelled)/(potential distance travelled) instead of the difference in wheel speed? I'm thinking that you'd want to install a preference for longer runs rather than short segments in a continuous turn.
>
> This ratio would be self-normalizing and still be -1<= X <=1.

I'm not sure if I already have that or I'm not quite on the same page as you are.

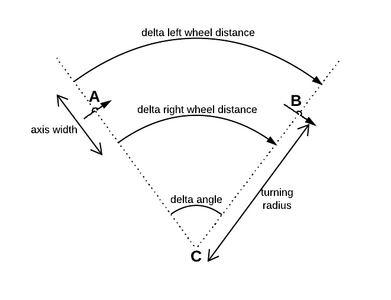

Velocity is being used since the two motors are controlled by telling them what speed to go and using the diameter to get distance traveled by each wheel. Then it uses this kind of geometry to get the updated location and orientation.

The actual code used is:

    // Calculate left and right distance that each wheel should
    // move along the ground. Use the average speed over the interval.
    double sL = (_output[0, 0] + _input[0, 0]) * ts / 2000.0;
    double sR = (_output[1, 0] + _input[1, 0]) * ts / 2000.0;

    // to determine the updated location and orientation
    // of the bot.
    if (Math.Abs(sL - sR) < 1.0e-6)
    {
        // basically going straight
        location = new PointD(
            _location.X + (double)(sL * Math.Cos(_orientation)),
            _location.Y + (double)(sR * Math.Sin(_orientation)));
        orientation = _orientation;
    }
    else
    {
        double R = _track * (sL + sR) / (2 * (sR - sL));
        double wd = (sR - sL) / _track;

        location = new PointD(
            (double)(_location.X + R * Math.Sin(wd + _orientation) -
                R * Math.Sin(_orientation)),
            (double)(_location.Y - R * Math.Cos(wd + _orientation) +
                R * Math.Cos(_orientation)));
        orientation = _orientation + (double)wd;

        const double PI2 = (double)Math.PI * 2;
        while (orientation < 0) orientation += PI2;
        while (orientation > PI2) orientation -= PI2;
    }

As far as encouraging it to go straight versus turning, the fitness calculation should be doing that.

V = average speed = (Vleft + Vright) / 2;

dv = difference in speed = Math.Abs(Vleft - Vright);

fitness = V * (1 - dv); // fitness increases with average speed, but decreases as the difference in speed increases.

I might be missing something in your suggestion. If, after seeing these code snippets, you still think your idea is not being addressed, please take a second stab at it.

VBR,

Inq


I think the only difference is that I was suggesting calculating the absolute distance (i.e. the square root of the sum of squared differences of the original and final positions) whereas it seems that you're calculating the length of the ARC followed by the centre of the bot.

It's likely that at a small enough time slice the deviation between the two is negligible and you'd still have to do your calculations anyway to determine the bot's new location and orientation.

The only substantial gain I was thinking about was if you consider the bot turning in a circle at high speed then it may be moving when you look at the distance around the arc followed, but the physical distance is much smaller. I can't remember how long you said your time slice was (300 ms?) so I think the absolute distance will build up slower during any kind of turning motion.

It was an attempt to prefer longer changes in absolute position (i.e. straighter lines) than purely based on the distance that the turning wheels experienced.
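To put rough numbers on the arc-versus-absolute-distance question, here is a small Python sketch. It uses the same differential-drive geometry as the code posted earlier in the thread. The function name and the 150 unit track used in the example are assumptions for illustration only.

```python
import math


def arc_and_chord(s_left, s_right, track):
    """Return (path length along the arc, straight-line displacement) for one slice.

    s_left and s_right are the distances rolled by each wheel during the slice,
    and track is the distance between the wheels, all in the same units.
    """
    arc = (s_left + s_right) / 2.0        # distance travelled by the bot's centre
    turn = (s_right - s_left) / track     # change in heading, in radians
    if abs(turn) < 1.0e-9:
        return abs(arc), abs(arc)         # straight line: both measures agree
    radius = arc / turn
    chord = abs(2.0 * radius * math.sin(turn / 2.0))
    return abs(arc), chord
```

For example, `arc_and_chord(10.2, 20.4, 150.0)` (one wheel at half the speed of the other for a 100 ms slice) gives a chord within a fraction of a percent of the arc. So within a single slice the two measures barely differ, and the difference only becomes visible when the displacement is taken over many slices.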


> I think the absolute distance will build up slower during any kind of turning motion.

Yes, the distance travelled is the change in position of the robot, not the distance the wheels have travelled.

I use a similar routine to convert the two speed outputs of the neural net into motor actions, giving the change in direction and distance travelled, and thus compute the new position ox,oy of the robot and the new direction theta to draw on the screen.

@inq's real robot uses stepper motors so it should be fairly accurate whereas my real robot uses PWM and relies on the encoders to see how far the wheels have actually rotated.

In theory the real robot should be able to compute its change in position and orientation by reading the encoder values per unit of time regardless of the actual speeds of the motors. With stepper motors you should be able to navigate just by sending the right number of steps to each wheel per unit of time.

I don't need to calculate the actual velocity as I simply used area covered in a given amount of seconds as the fitness factor without hitting a wall. Probably not a good measure if it was following a very long wall but did indicate it had moved around the whole layout. If it hit a wall it did not reproduce.

    sub moveRobot()
        dim as single w = 150 ' width of wheel
        dim as single expr1

        if (SL=SR) then
            ' Moving in a straight line
            ox = ox + SL * cos(theta)
            oy = oy + SL * sin(theta)
        else
            ' Moving in an arc
            expr1 = w * (SR + SL)/ 2.0 / (SR - SL)
            ox = ox + expr1 * (sin((SR - SL) / w + theta) - sin(theta))
            oy = oy - expr1 * (cos((SR - SL) /w + theta) - cos(theta))

            ' Calculate new orientation
            theta = theta + (SR - SL) / w

            ' Keep in the range -PI to +PI
            if (theta > PI) then
                theta = theta - (2.0*PI)
            end if
            if (theta < -PI) then
                theta = theta + (2.0*PI)
            end if
        end if
    end sub

> The only substantial gain I was thinking about was if you consider the bot turning in a circle at high speed then it may be moving when you look at the distance around the arc followed, but the physical distance is much smaller. I can't remember how long you said your time slice was (300 ms?) so I think the absolute distance will build up slower during any kind of turning motion.

I'm getting the gist and I see your point. It could be traveling max speed of one wheel and some fraction of that on the other causing a pretty high fitness value, yet it wouldn't be covering any real ground. I'm not quite sure I'm seeing a way to code that... yet.

BTW... the ACAA paper uses 300 ms, as that was the best they could do for collecting all the data from their infrared sensors. I'll be using 100 ms, as that is what I can get the ToF to do.


> I'm getting the gist and I see your point. It could be traveling max speed of one wheel and some fraction of that on the other causing a pretty high fitness value, yet it wouldn't be covering any real ground. I'm not quite sure I'm seeing a way to code that... yet.

In the other post, I suggested (actual distance travelled)/(potential distance travelled). If you calculate the distance from [X0,Y0] (the cart center's original position) to [speed*time,speed*time] to [Xf,Yf] you'd get a value which expresses the movement in terms of its fraction of the largest potential distance which might've been travelled in that time period.

I don't know if that's reasonable to use instead of dV ?

Edit:

Oops, I forgot to put in the actual final position [Xf,Yf] after the time slice has ended.


> I'm not quite sure I'm seeing a way to code that... yet.

I just add up the changes in position over the run.

distanceTravelled = distanceTravelled + sqr((ox-prevOx)^2 + (oy-prevOy)^2)

I keep the last position prevOx,prevOy to reset the position if any move hits a wall.

Hi @inq et al,

Just to say I continue as a casual observer.

As you comment, I previously also realised the Sigmoid function forces all of the 'action' towards the middle of the range say -0.5 .. +0.5, since outside of this range, it is near 0 or +1. I didn't try to figure out if this was good or bad for this application. By contrast, linear is ... linear!

Constraining each of the three parameters to 0..1 range seemed to have a few effects, all of which you have probably realised, and some you mention, but are maybe worth emphasising in a summary:

- No negative numbers .. I wasn't sure how 'terminal' to an individual trial, a negative fitness value was ... but in some games I think it means time to restart the game...as you are now dead.

- With square root, positive numbers below one, increase with square root, positive numbers above one decrease. (And negative numbers play a different game altogether!) So if you want to emphasise (or even de-emphasise with a slightly different fitness function) that parameter, it probably needs to stay the same side of the '1' dividing wall on all occasions

- The ACAA paper's emphasis on a self-contained robot, with an abstract that says 'but most -if not all- studies have been carried out with computer simulations', suggests that this was a crucial factor in getting the paper accepted for publication, and maybe why the reader is reminded more than once, to avoid the risk of it being thrust in the 'nothing new to see here' scrap pile.

- This may have meant the maths for creating the matrix in real time had to be small microcontroller capable. By limiting the range, it might have been possible to do most of the calculations using integer maths, with a scaling factor .. e.g. 0 is 0.0, and 250 or 256 is equivalent to 1, or maybe 10000 or 65536 is equivalent to 1. The choices are many, and might even support a lookup table or two for awkward things like a square root. Hence, no need for a floating point capability. Whether this is still worth considering is another question.
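The integer-maths idea in the last bullet can be sketched as follows, in Python for readability. This is only an illustration of the scaling and lookup-table approach, with 256 standing for 1.0. Nothing here is taken from the ACAA paper, and the names are hypothetical.

```python
import math

SCALE = 256  # the integer 256 represents 1.0

# Square-root lookup: SQRT_TABLE[x] approximates sqrt(x / SCALE) * SCALE.
# The table is built once here; on a small MCU it could be a constant array.
SQRT_TABLE = [math.isqrt(x * SCALE) for x in range(SCALE + 1)]


def fixed_fitness(v, dv, i):
    """Integer-only fitness: v in -256..256, dv and i in 0..256, result in the same scale."""
    turn = SCALE - SQRT_TABLE[dv]          # fixed-point version of (1 - sqrt(dv))
    # Python's // rounds toward negative infinity; C integer division truncates
    # toward zero, so negative results may differ by one unit between the two.
    return (v * turn * i) // (SCALE * SCALE)
```

Here `fixed_fitness(256, 64, 256)` stands for V = 1, dv = 0.25, i = 1 and returns 128, which is 0.5 in this scale.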

A quantitative question that is intriguing me is how much 'information' is being stored in the machine after its 'adjustable' matrices are 'adjusted' by the trials/learning process. If the trials were doing something trivial, like learning a simple linear relationship that might have been determined in other ways as a 'y = mx + c' type of function, then clearly only a couple of numbers, representing m and c, might be sufficient. But this is rather more than that, potentially a great deal more.

A corollary question/pondering, is for this robot, in what is essentially a trivial maze, there seems to be at least two learning processes mixed into one:

- How to successfully move around in a constrained space

- The limitations of this maze

The first of these should be useful to it for any maze it might find itself in

The second is largely confined to this maze, and others very similar in size and shape.

I am wondering what can be done to make use of this, or even how to test it.

e.g. Assume it is trained on this maze, then, using the best of the first trained matrices etc. as a starting point, train on a second rather different maze. Finally, put it back on the first maze ... has it 'forgotten' that it was there before?

----------

Please treat all of the above as just random daydreams ... take with as much salt as you desire.

I'll try to continue my casual observations of whatever you publish.

Best wishes and thanks for the ride, Dave

I notice in the last examples the simulated robot has a different layout of distance senses than the TOF sensor?

https://scholar.uwindsor.ca/cgi/viewcontent.cgi?article=1101&context=etd

"if there are any changes to the sensors, the architecture of that network requires to be altered and the entire training process (collecting samples and training the network) has to be carried out all over again"

It seems like I lived a million life-times. 😋

But, I'm not getting any real convergence. Had some close ones, but eventually they run into a wall and don't have the gumption to back up. Reading your post, and preparing to respond, I did find new things to try, but still no convergence.

> As you comment, I previously also realised the Sigmoid function forces all of the 'action' towards the middle of the range say -0.5 .. +0.5, since outside of this range, it is near 0 or +1. I didn't try to figure out if this was good or bad for this application. By contrast, linear is ... linear!
>
> Constraining each of the three parameters to 0..1 range seemed to have a few effects

I'm seeing the Sigmoid is significant in the fact that it introduces a lot of non-linearity that must exist since the problem is certainly non-linear and/or at least piecewise non-linear. Basically, it's a differential equation with 10 variables.

What kicked off the anti-Sigmoid was that the inputs are speeds of the two wheels that range from -204 to 204 mm/s. The distances from the perimeter are 0 to 305. Normalizing these with Sigmoid seemed to waste way too much information. However, the weights and bias matrices start out with values of -1 to 1. And the output is also run through the Sigmoid and then scaled to the desired speed range as illustrated in the pseudo code above.
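The "wasted information" point is easy to see numerically. This Python sketch assumes the raw speeds would be fed straight into a standard logistic Sigmoid, which is my reading of the post rather than something it spells out. `linear_scale` is a hypothetical helper for comparison.

```python
import math


def sigmoid(x):
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def linear_scale(x, lo, hi):
    """Map x from the range lo..hi onto 0..1 without squashing."""
    return (x - lo) / (hi - lo)


# Compare a moderate forward speed with full speed under both mappings.
for speed in (50.0, 204.0):
    print(speed, sigmoid(speed), linear_scale(speed, -204.0, 204.0))
```

In double precision the Sigmoid returns exactly the same value for 50 mm/s and 204 mm/s, so the network could not tell a crawl from full speed, while the linear mapping keeps them apart.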

> No negative numbers .. I wasn't sure how 'terminal' to an individual trial, a negative fitness value was ... but in some games I think it means time to restart the game...as you are now dead.

If I let negative input speeds enter in and scale into 0 to 1, via Sigmoid or by just linear shifting, it allows positive fitness for traveling backwards. In fact, anytime I allow this, the bot prefers driving backwards. I attribute this to the majority of sensors always seeing increasing distance, with only the two on the rear showing closing values.

With that, I feel confident that leaving them negative, causing negative fitness, decrements the sum of the fitness values that make up the entire Genome Fitness value. Bots still show the ability to back up, but they only do it long enough to back off a wall.

> With square root, positive numbers below one, increase with square root, positive numbers above one decrease. (And negative numbers play a different game altogether!)

Yes, I think I discussed that somewhere in that War and Peace above: the square-root actually emphasizes the negative effect of the bot turning... thus encouraging the V term to dominate and thus tend to stay straight. I've tried even lower exponents, Math.Pow(dv, 0.1 to 0.5), and I haven't quite found the right number. Too low causes it to go straight (into the wall) as expected, and toward the square-root value, they tend to love driving in circles.

> but most -if not all- studies have been carried out with computer simulations', suggests that this was a crucial factor in getting the paper accepted for publication, and maybe why the reader is reminded more than once, to avoid the risk of it being thrust in the 'nothing new to see here' scrap pile.

This makes a lot of sense, even though it was 1994 and pretty early in the AI robot craze.

> This may have meant the maths for creating the matrix in real time had to be small microcontroller capable. By limiting the range, it might have been possible to do most of the calculations using integer maths...

They were using a Sun SPARCstation. That was a pretty significant machine back then. I used them in my former career about the same time.

> Given the small network size, each synaptic connection and each threshold was coded as a floating point number on the chromosome...

Like I mentioned above... after the learning is done, the part that uses the ANN is trivial...

> However, it should be noted that the software that implements the genetic development of neural networks [6] could be slimmed down and downloaded into the robot processor.

> A quantitative question that is intriguing me, is how much 'information' is being stored in the machine

In the pseudo code above, the entire matrix is the Genome and is all that needs to be stored on the bot for running purposes. However, as @robotbuilder mentioned, it would be beneficial to be able to continue the learning process as more of the real world is explored. For this situation the learning algorithm also needs to be on the bot MPU, and the base-line Generation needs to be stored as well. Since a generation has 80 Genomes, it would need to store 80 matrices like the one shown in the pseudo code example. This data and the GA code should be no trouble on even an ESP8266, but would be too much for an Arduino UNO level microcontroller.

> A corollary question/pondering, is for this robot, in what is essentially a trivial maze, there seems to be at least two learning processes mixed into one:

I'm not seeing that the learned Genome is arena specific. Of the fairly good Genomes I've seen, I can re-orient the bot and make it run backwards, start out facing a wall or in a corner and it still works. So for an equally confining maze, I don't expect it to need a re-learning. My thoughts are that in a wide open area like the Auditorium arena, I'm assuming that the fitness equation won't see anything in range of the sensors, so it should just go balls-out straight forward until it starts seeing something.

> I am wondering what can be done to make use of this, or even how to test it.

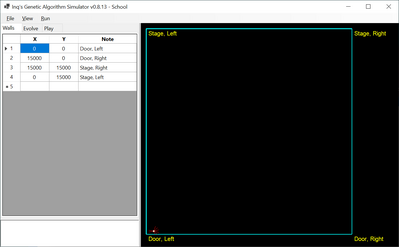

The virtual program uses a spread-sheet interface for creating the arena so any size / shape room with obstructions can be made fairly easily. Here is the one for the School Auditorium. Tiny bot down in lower, left corner. 😉

> Assume it is trained on this maze, then, using the best of the first trained matrices etc. as a starting point, train on a second rather different maze. Finally, put it back on the first maze ... has it 'forgotten' that it was there before?

Maybe you're remembering me saying something about mapping a house/building. That is a long-term goal. At this phase, there is no memory of a configuration by the GA. It is simply reading the sensors and reacting. And since the sensors are only distance to a wall and the wheel speeds, it has no way of retaining any concept of a floor-plan. If the Genome (matrix) is well learned, it should have parameters such that, when seeing no obstructions, it simply goes straight, accelerating to its top speed.

> I'll try to continue my casual observations of whatever you publish.

Please, keep interjecting... You've caused me to re-think several times already. ... and thanks. Actually, I'm getting tunnel vision. The mere fact of answering your questions made me get in the code (that I haven't seen in a while) and say... "What was I thinking?" 🤣

VBR,

Inq


> I notice in the last examples the simulated robot has a different layout of distance senses than the TOF sensor?
>
> https://scholar.uwindsor.ca/cgi/viewcontent.cgi?article=1101&context=etd
>
> "if there are any changes to the sensors, the architecture of that network requires to be altered and the entire training process (collecting samples and training the network) has to be carried out all over again"

I've downloaded your reference, but I haven't seen it before. A cursory glance is encouraging a follow-on reading. I'm using the ACAA paper that I've referenced about a million times so far. The orientation of the "sensors" is as close as I can model off the drawings in their paper.

But as you pointed out they must be adjusted... and I have modified the learning fitness equation based on what I'm using. The ACAA paper used infrared sensors that return a stronger value when things get closer, and they use the equation:

Fitness = V * (1 - sqrt(dv)) * (1 - i);

where i = the intensity of the closest obstruction.

I'm using ToF sensor where it gives me actual distances... basically the opposite concept. The equation I actually use is adjusted accordingly...

Fitness = V * (1 - sqrt(dv)) * s;

where s = the distance to the closest obstruction.

Keep throwing those darts to my assumed failings. I still try to use them constructively.

VBR,

Inq


I've fiddled with the Fitness equation some, but still have some things I'd like to improve on.

Original fitness equation: Fitness = V * (1 - Math.Sqrt(dv)) * s;

New fitness equation: Fitness = V * (1 - Math.Pow(dv, 0.2)) * s;

I've also added logic to determine if it has run into the wall and was unable to back off. I'm running each Genome for 300 steps. If the speed is ZERO for the last 50 steps (or more) then I set the Fitness to 0.0. Basically, during the mating process... it won't get to contribute to the next Generation.
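The stuck-at-the-wall rule could look something like this Python sketch. The function names, the `eps` tolerance for "ZERO", and the idea of passing in a list of per-step speeds are all assumptions for illustration, not the simulator's actual code.

```python
def is_stuck(speeds, window=50, eps=1.0e-6):
    """True when the last `window` recorded speeds are all (near) zero."""
    if len(speeds) < window:
        return False
    return all(abs(s) < eps for s in speeds[-window:])


def final_fitness(slice_fitnesses, speeds):
    """Sum the per-step fitness, but zero it out for a Genome that ended up stuck."""
    return 0.0 if is_stuck(speeds) else sum(slice_fitnesses)
```

For a 300 step run, a Genome whose last 50 speeds are zero gets 0.0 regardless of how well it did before hitting the wall.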

Way too many of the Genomes are just running around in tight circles. Unfortunately, they do it fast enough to drown out the desirable Genomes. I need to work on some way of incorporating that into the Fitness calculation.

I'm considering the algorithm @Will suggested. I think it should be pretty clear by what I've posted, the geometry is not an issue for me. The problem I can't see an algorithm for is the added bookkeeping necessary to retain that through multiple steps. It is not generalizable whereas the Virtual Engine so far is. Also... there is the problem of how many steps to retain in history. It is perfectly acceptable for it to return to and is expected to return to the start location at some time. The need to back up and make tight turns is necessary and that can't be filtered out as well. Therefore some finite history would be needed and it would be variable on the size of the arena the bot's in. That is the issue, I don't see an algorithm for... yet.
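For what it's worth, one possible form of that bookkeeping is a fixed-length window of recent positions, comparing the net displacement across the window with the path length inside it. The Python sketch below is only a thought experiment and not part of the Virtual Engine. The class name is hypothetical and the window size is exactly the arena-dependent tuning knob described above.

```python
import math
from collections import deque


class StraightnessWindow:
    """Keep the last `size` moves and report net displacement / path length (0 to 1)."""

    def __init__(self, size):
        # size moves need size + 1 stored positions
        self.points = deque(maxlen=size + 1)

    def add(self, x, y):
        self.points.append((x, y))

    def ratio(self):
        pts = list(self.points)
        path = sum(math.dist(p, q) for p, q in zip(pts, pts[1:]))
        if path == 0.0:
            return 0.0                     # no movement recorded yet
        return math.dist(pts[0], pts[-1]) / path
```

A bot running straight keeps the ratio at 1, while one that closes a tight loop inside the window drops toward 0. A short back-up-and-turn only dents the ratio for as long as it stays in the window, so old history never has to be kept.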

Anyway with the changes I have now, I do have a good candidate Genome. It doesn't run into walls and it does work in other arenas. It showed up in only the 4th of 100 generations. The problem is, it was not the best Genome and in fact the circular idiots simply drowned it out and by the 10th generation, it no longer existed. Only by my viewing it, was the selection made. Obviously, that says the algorithm can not be left to evolve on the robot as it might just get senile and start spinning around. The first video shows the bot running several laps around the learning arena (ACAA's arena). The second video shows the same Genome placed in the Auditorium Arena. This is a boring video, but it does show the Genomes are not location specific. Another problem with this immature Genome (4th generation) is that it does not take advantage of the top speed. I would expect a good Genome to really open up in the Auditorium to full speed as it travels around in near open space.
