Hi @inq & @robotbuilder,

RE: I've re-evaluated my crude Inertia calculations and have improved them some.

It would be neat if a genetic algorithm could evolve those types of calculations itself.

Inq, perhaps you could please clarify if I am following your 'drift' or not:

From your description: You were previously trying to model inertia in the fitness equation(s) ... which implies that you probably were 'fudging' the AI machine a bit. (I am sure there is a more technical phrase, but it's late here!)

BUT, in the latest incarnation, you have moved the inertia calculations to modify the data being fed back to the AI machine as to the velocity and/or acceleration that the 'bot' was actually achieving. In other words, the AI machine is instructing the motors to do one thing, but the data being fed back is saying that something different has actually happened. In this case, inertia got in the way.

If so, that sounds like what a simulator should do .. it is then the job of the AI to learn to allow for the 'real world' with inertia, and make the best of it.... e.g. if it needs to slow down for a corner, then it needs to start slowing before it actually reaches the corner. The inertia equations are not 'in' the AI machine, but in the external simulator.

-----

In my previous comments, I did muse whether it was a good idea to expect the AI to deal with some of these effects, but that is a different philosophical question for another day. This test is hard enough for now.

---------------

Best wishes, Dave

From your description: You were previously trying to model inertia in the fitness equation(s) ... which implies that you probably were 'fudging' the AI machine a bit. (I am sure there is a more technical phrase, but it's late here!)

It was kind-of three stages...

- My first attempt just stopped the wheels from changing speed too quickly. This was only in the virtual model of the bot... not the genetic algorithm. The resulting Genomes, when transferred to the real bot, were trying to drive the stepper motors from 0 to 1200 mm/s in one step. Guess what happened? 🤣

- Because of this, I went back to the virtual model to try to incorporate an acceleration term in the fitness equation. Something like: Fitness = V * (1 - sqrt(dv)) * s * (1 - a). IOW, as the acceleration exceeded some constant, the last term would go to zero... giving a poor Fitness. It didn't really work out well.

- I went back to incorporating a slightly more sophisticated inertia model that converted the wheel speeds into bot speeds and capped that to what the steppers can handle. This gave good results in the virtual evolutions. But... it still gives high accelerations as outputs. So... I have to add the same acceleration-limiting functions to the real bot. I've tried them out here at the house... and at least the bot doesn't chatter. Unfortunately, the school has my test arena all filled with another project:

Some canoes are getting refurbished and I don't think I want to try dodging them... yet. 😉

In other words, the AI machine is instructing the motors to do one thing, but the data being fed back is saying that something different has actually happened. In this case, inertia got in the way.

Yes, I think you've got it. In the ACAA bot, using DC motors, the Genome can tell it to go full speed. The PWM is dumping full speed at the motors, but they can't go instantly to full speed. In their case, the encoders feed back the real, actual speed. In my case, the stepper motors are being told to go full speed, but they can't comply; they miss steps and end up at zero speed in practice. Having no encoders, and since the design assumes what I tell it is actually happening, there's a disconnect. So... my solution is to simply intercept the output of the AI and only allow accelerations that the steppers can honor. I also return the artificially attenuated speed back to the next step of the AI. IOW... it will see the same results as if DC motors were attached.
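For anyone following along, the interception described above can be tried off the bot as a pure function. This is only a minimal sketch under assumptions, not the code running on InqEgg: `WheelSpeeds`, `limitAccel` and the numbers in the note below are made-up names and values for illustration, and it assumes `dt` is greater than zero.

```cpp
#include <cmath>

// Hypothetical container for the two wheel speeds in mm/s.
struct WheelSpeeds {
    float left;
    float right;
};

// Limit the change from `current` to `wanted` so that the mean (longitudinal)
// acceleration stays within maxAccel (mm/s^2) over a time step of dt seconds.
// Both wheel deltas are scaled by the same factor, so their ratio is kept.
WheelSpeeds limitAccel(WheelSpeeds current, WheelSpeeds wanted,
                       float maxAccel, float dt)
{
    float dL = wanted.left - current.left;
    float dR = wanted.right - current.right;
    float a = std::fabs((dL + dR) / 2.0f / dt);
    if (a <= maxAccel)
        return wanted;              // The steppers can honor this request.
    float scale = maxAccel / a;     // 0 < scale < 1
    return { current.left + dL * scale, current.right + dR * scale };
}
```

The value returned is what would go both to the motors and back into the next AI step. With an assumed limit of 2000 mm/s² and a 0.1 s step, a request to jump from 0 to 1200 mm/s on both wheels is granted as roughly 200 mm/s per step.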

if it needs to slow down for a corner, then it needs to start slowing before it actually reaches the corner. The inertia equations are not 'in' the AI machine, but in the external simulator.

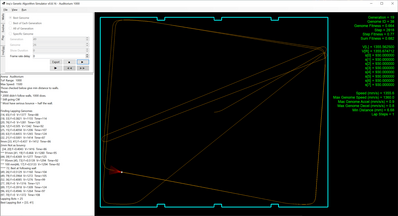

You got it! Except, it's interesting to watch the virtual simulation... it does slow down in the corners and with the new logic in the virtual bot (not the AI) it gets back up to max speed in the straightaways. I've also thrown them into other, tighter simulated rooms. I've seen where they'll slow to a stop, back up, turn and then continue on at full speed into an open area.

I don't model friction, so I don't know if it's slowing down enough. 😆 We've gotten into me sounding like some speed fiend. In this version, I merely want walking speed... say 3 to 4 mph (1500 mm/s). In future bots, I imagine the gathering of mapping data will be the limiting factor. I'm just wanting the limitation being the data acquisition...not the bot's maximum speed.

In my previous comments, I did muse whether it was a good idea to expect the AI to deal with some of these effects, but that is a different philosophical question for another day. This test is hard enough for now.

Did you get a chance to read that paper @thrandell posted? It has some very impressive results considering... the fitness equation was made even simpler: Fitness = V * (1 - i). IOW, it was the hardware on the bot and the environment that taught the GA. The fitness played a far lesser influence.

VBR,

Inq

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

Hi Inq,

Thanks for your comprehensive reply. Whilst you were writing it, I was just replying to a new thread robotbuilder started, on much the same topic. I think it has a reference to your 'forum name', so I presume you will get a notice from it. I hope I haven't got too much wrong. It's too late for me to take in all of what you have written now, so I'll probably return to it later.

Thanks once again, best wishes, Dave

It's too late for me to take in all of what you have written now, so I'll probably return to it later.

No kidding. It was late for you with your previous post. You're on Greenwich time... right? 😳 You must be a night-owl.


In the hope of clarifying my comments, @Inq has built a simulator and an AI engine, apparently in the same computer, possibly even in the same program, I don't know.

...

Actually, you're pretty much spot-on! Considering the perception (by most people) that AI is some complex, black box... not to be fiddled with, you have a clear picture. Although some aspects of it are extremely complex, the basics are really kind of simple. I hope my InqEgg thread shows (or will show others) that AI can be used in our projects with great success, or at least to add to our desires for knowledge. AI is here to stay. I for one do not wish to put my head in the sand and be one of those old granddads that can't use a calculator, much less... play a video game with their grandchildren. Knowing fundamentals about AI might just be useful in the real world for finances or just dealing with an AI-enabled IVR system or... some day... a robot cleaning the drool off my chin when I get really old.

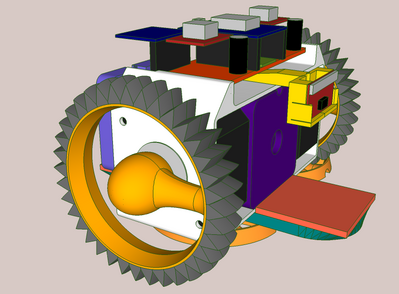

The virtual program is not something I'm proud of. Considering my background, I would be embarrassed to let it out of my possession. It formed organically... IOW with a Band-Aid on top of a whim, connecting pieces with baling wire and duct tape. I have about 40 hours total invested in the visual project shown in the videos. It has five conceptual parts. Fortunately, these are all in four C# classes, so there are some well-defined boundaries and interfaces. How these came about and grew...

(1) The Genetic Algorithm - This was the beginning of the journey and long before any thought about making a virtual simulator. I originally wanted to do the project like the ACAA paper, in that the entire learning would be completed on the bot. This part is 99% based on the Goldberg book. @thrandell got me started down this very interesting path with his use of the ACAA paper and the Goldberg book (two months ago). I was going to try translating it from the Pascal in the book, but I finally just did a clean-sheet design based on what the book said in English. I learned about it far better this way than translating code. It is generalized and can be used for any Genetic Algorithm project. It has no knowledge of the robot, the simulator or even the real world. If I took out all the comments, it'd be less than 400 lines. After a few bugs were eradicated, it has largely been left untouched. I also have a C++ version that can go on the MPU. I believe the evolution can be performed on at least an ESP8266. Once an evolution is kicked off on this GA Engine, it simply supplies a block of data that can be interpreted in any way... as a Genome. Something outside of the GA Engine must evaluate the block of data and return a relative score (The Fitness Value).
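To make the "block of data in, score back" idea concrete, here is a rough sketch of the classic simple-GA operators (roulette-wheel selection, single-point crossover, bit-flip mutation). It is not the engine described above, which isn't shown in this thread; the `Genome` type, the function names and the one-bit-per-byte encoding are all assumptions, and fitness values are assumed to be non-negative.

```cpp
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

using Genome = std::vector<std::uint8_t>;   // One bit per element, 0 or 1.

// Roulette-wheel selection: pick an index with probability proportional to
// its fitness. `spin` is a uniform random number in [0, 1).
std::size_t rouletteSelect(const std::vector<double>& fitness, double spin)
{
    double total = 0.0;
    for (double f : fitness) total += f;
    double target = spin * total;
    double running = 0.0;
    for (std::size_t i = 0; i < fitness.size(); ++i) {
        running += fitness[i];
        if (target < running) return i;
    }
    return fitness.size() - 1;   // Guard against rounding at the top end.
}

// Single-point crossover: the child takes bits [0, point) from `a`
// and bits [point, end) from `b`. Parents must be the same length.
Genome crossover(const Genome& a, const Genome& b, std::size_t point)
{
    Genome child(a.begin(), a.begin() + point);
    child.insert(child.end(), b.begin() + point, b.end());
    return child;
}

// Flip each bit independently with probability `rate`.
void mutate(Genome& g, double rate, std::mt19937& rng)
{
    std::bernoulli_distribution flip(rate);
    for (auto& bit : g)
        if (flip(rng)) bit ^= 1;
}

// Breed one new generation. The engine never looks inside a Genome;
// fitness[i] is the score an external evaluator gave to pop[i].
std::vector<Genome> nextGeneration(const std::vector<Genome>& pop,
                                   const std::vector<double>& fitness,
                                   double mutationRate, std::mt19937& rng)
{
    std::uniform_real_distribution<double> unit(0.0, 1.0);
    std::vector<Genome> next;
    while (next.size() < pop.size()) {
        const Genome& mum = pop[rouletteSelect(fitness, unit(rng))];
        const Genome& dad = pop[rouletteSelect(fitness, unit(rng))];
        std::uniform_int_distribution<std::size_t> cut(0, mum.size());
        Genome child = crossover(mum, dad, cut(rng));
        mutate(child, mutationRate, rng);
        next.push_back(child);
    }
    return next;
}
```

The point of the sketch is the boundary: `nextGeneration` only ever sees genomes and scores, so the robot, simulator and fitness equation can change without touching it.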

(2) Artificial Neural Network - This part is fairly small also (less than a hundred lines). This receives the raw data block and converts it into the ANN. In the simplest version of the ACAA bot, this is merely a single matrix that does a matrix multiplication on the input data to arrive at some output data. That's it! This portion of code will go on a real bot. In fact... after the learning process that part (1) does, the learned matrix could be the only code on the bot. Part (1) code is no longer needed to perform the trained task. This part only changes if different types of ANN's are used. For instance, the next more complex version uses an additional vector of data that is added once the first matrix multiplication is performed. It is called a bias in most of the literature. I'm now learning about doing a Recurrent Neural Network where there is an intermediate hidden layer that feeds on itself to adjust the output based on changes over time. In this ANN there are at least 2 matrices and 3 vectors in addition to the input/outputs. Again, the GA Engine simply spits out more data to be used, but its code does not have to change to facilitate this added complexity in the ANN.
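As a rough illustration of "the raw data block becomes the ANN", the sketch below unpacks a flat genome into a weight matrix and a bias vector and runs the single-layer calculation. The layout (row-major weights followed by the biases), the constant names and the use of plain floats are assumptions made for the example, not the actual encoding used in the project.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

constexpr std::size_t kInputs = 10;   // 2 previous wheel speeds + 8 distances
constexpr std::size_t kOutputs = 2;   // left and right wheel speed
// Assumed genome layout: kOutputs x kInputs weights (row-major), then biases.
constexpr std::size_t kGenomeSize = kOutputs * kInputs + kOutputs;

float sigmoid(float v) { return 1.0f / (1.0f + std::exp(-v)); }

// Single-layer forward pass: out = sigmoid(W * in + b), rescaled to [-1, 1].
// `in` is expected to be normalized; multiply the result by the bot's
// maximum speed to get mm/s.
std::array<float, kOutputs> forward(const std::array<float, kGenomeSize>& genome,
                                    const std::array<float, kInputs>& in)
{
    std::array<float, kOutputs> out{};
    for (std::size_t r = 0; r < kOutputs; ++r) {
        float sum = genome[kOutputs * kInputs + r];     // bias for this output
        for (std::size_t c = 0; c < kInputs; ++c)
            sum += genome[r * kInputs + c] * in[c];     // weight * input
        out[r] = sigmoid(sum) * 2.0f - 1.0f;
    }
    return out;
}
```

An all-zero genome gives sigmoid(0) = 0.5 on both outputs, which rescales to zero speed, so a "blank" brain simply stands still.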

In a real bot with all the needed hardware, the input is supplied by some kind of vision sensors. This could be ultrasonic, infrared, lidar or in my case, I'm using a time of flight sensor. The ANN would then do its calculation and tell the wheels what speed to run. This would repeat forever driving the bot around avoiding obstacles. It is not tied to a specific room geometry. As the ACAA paper did, parts (1) and (2) and the real robot are all that is needed to perform the learning evolution and the eventual taught bot. The ACAA paper kept the GA Engine on an external computer and relayed the Genomes to the robot. I believe these could both just as easily be put on the robot and have the bot learn by itself.

(3) The Virtual Robot - Reality stepped in at this point. The training process is hard on a physical bot. It also takes a couple of days. I felt that I (and the bot) might learn faster if some or all of the learning happens in a virtual world. It sounded simple at first. I just have to mimic the interaction of the vision sensor hitting virtual obstacles, do the ANN math and spit out the velocities. This part of the coding grew in scope and ended up taking the most time, and none of it goes on the real bot. It is simply trying to simulate what the bot does naturally. There is a great deal of geometry, finding intersections for line segments and distances to line segments, which ends up defining how far the vision "rays" go out before hitting some obstacle. It tells (2) the distances and (2) tells the Virtual Bot how to move. This then gets into most of the problems of how to model what to do. This is where the real bot just goes (as best it can). The virtual bot has to factor in all the issues like inertia, wheel friction, variation in wheels, and variations in how motors respond to the ANN commands. This can get VERY complicated.
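The ray geometry mentioned above usually boils down to a ray-versus-segment test repeated for every wall. The following is a generic sketch of that test, not the simulator's code; `Vec2`, `Wall`, `rayToSegment` and `castRay` are hypothetical names, and the direction vector is assumed to be unit length so that the result is a distance.

```cpp
#include <algorithm>
#include <cmath>
#include <limits>
#include <vector>

struct Vec2 { double x, y; };
struct Wall { Vec2 a, b; };   // One straight obstacle edge.

// Distance along a ray (origin o, unit direction d) to the wall segment.
// Returns +infinity when the ray misses the segment.
double rayToSegment(Vec2 o, Vec2 d, const Wall& w)
{
    const double inf = std::numeric_limits<double>::infinity();
    Vec2 s{ w.b.x - w.a.x, w.b.y - w.a.y };        // segment direction
    double denom = d.x * s.y - d.y * s.x;          // 2D cross product d x s
    if (std::fabs(denom) < 1e-12) return inf;      // parallel: treat as a miss
    Vec2 ao{ w.a.x - o.x, w.a.y - o.y };
    double t = (ao.x * s.y - ao.y * s.x) / denom;  // distance along the ray
    double u = (ao.x * d.y - ao.y * d.x) / denom;  // position along the segment
    if (t < 0.0 || u < 0.0 || u > 1.0) return inf;
    return t;
}

// Simulated sensor reading for one ray: the nearest hit over all walls,
// clipped to the sensor's maximum range.
double castRay(Vec2 o, Vec2 d, const std::vector<Wall>& walls, double maxRange)
{
    double best = maxRange;
    for (const Wall& w : walls)
        best = std::min(best, rayToSegment(o, d, w));
    return best;
}
```

Calling `castRay` once per sensor zone, each with its own direction, would give the eight distances the ANN expects as input.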

(4) Fitness Calculation - In its simplest form, this is merely a one-liner. It is what is needed to tell the GA Engine (1) how to proceed with the evolution. It is a score of how the ANN did toward the task at hand. In the bot, this is calculated to give a higher score to a bot that goes faster in a straight line and stays away from obstacles. This is only needed during the learning phase. It is not required on the bot unless the bot is expected to do the learning.
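The one-liner can be sketched by combining the terms quoted elsewhere in this thread: V, (1 - sqrt(dv)) and (1 - i). How each term is measured and normalized here is an assumption, as is averaging the per-step values over a run; the function names are made up for the example.

```cpp
#include <cmath>
#include <vector>

// Fitness for one time step. All three arguments are assumed to be
// normalized to [0, 1]:
//   v  - mean absolute wheel speed (1 = both wheels at full speed)
//   dv - absolute difference between the wheel speeds (0 = straight line)
//   i  - proximity of the nearest obstacle (1 = touching, 0 = nothing in range)
float stepFitness(float v, float dv, float i)
{
    return v * (1.0f - std::sqrt(dv)) * (1.0f - i);
}

// Score for a whole run: the mean of the per-step values (an assumption).
float runFitness(const std::vector<float>& steps)
{
    if (steps.empty()) return 0.0f;
    float sum = 0.0f;
    for (float s : steps) sum += s;
    return sum / static_cast<float>(steps.size());
}
```

Because the terms are multiplied, any one of them going to zero (standing still, spinning on the spot, or touching an obstacle) zeroes the score for that step.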

(5) GUI - This took a lot of time for me to re-familiarize myself with doing Visual Studio C# coding with graphics. It merely wraps all the other parts into a user interface that allows for some configuration of the rooms the bots will learn in, and some factors of the bots. Some things I don't have exposed and thus I have to change somewhere in the code and recompile. The most notable of these are changing the fitness equation and changing the ANN structure.

So... probably far more than you wanted to read, but yes... it sounds like you have an excellent understanding of the moving parts I've been working on.

that putting inertia into the fitness equation is muddling the boundaries between the simulator engine and the AI engine.

True... and the final revelation of this came from @thrandell's second offering of another paper using the same ACAA bot where the bot learned a far more specialized task without any extra factors in the fitness equation. So... I've gone away from adding acceleration terms... as well as global aspects like how far away from the start point or area covered or lapping influences in the fitness equation.

VBR,

Inq


Hi @inq,

A few more musings from the casual observer ... treat with as much salt as appropriate ... 🤨

Thanks for taking the time to explain the virtual system you have built. I don't think there were any major surprises for me, but it helps to see a little more detail. I naively envisaged an initial two-way split as the 'top level', with your Virtual Robot and GUI in one part forming the simulator, and the GA, ANN and Fitness Calculation in the other part. Obviously, these would then correspond to the software running on the bot, and the real world of mechanics and physics of the bot, assuming the bot was able to learn, or maybe continue learning and refining, if you transferred the consequences of an initial learning phase from simulated experience.

I don't know if my experience of simulated electronics is a reliable guide, but if it is, then I would expect the simulator to provide a useful starting point, and insight into what is happening, but there are often still 'surprises' that the real-world exposes, so I would imagine a 'real' bot, would need to retain the self-learning capability, in spite of inheriting the learning from many hours of simulated experience beforehand.

Of course, if the 'real' bot is cloned, then the cloning could include that of the enhanced learning, so that providing the clones were mechanically 'identical', then the clones might not need, and may be safer, as well as cheaper, without learning capability.

-----

Until recently, I had mainly considered the AI system as handling the navigation aspects, assuming the bot was 'relatively sedate', and whilst it would accelerate and decelerate, this could have been a fairly simple hard-coded algorithm, possibly borrowing from the 3D printer world, in which the AI system would have provided a stream of 'go to' commands to each motor, roughly analogous to a list of Gcode commands supplied by the slicer, to the printer, and the bot's motor controller/driver would translate this into a set of timed steps, in the same manner that the printer controller + motor driver, ramps the step speed up and down to allow for inertia. Of course, in the case of 'budget' 3D printers, the parameters and algorithms used in the controller/driver are human derived, probably mainly on a trial and error basis, to find the 'quickest' that can be found that did not produce failed prints. In the case of the bot, a (virtual or real) bot can obviously detect that it has crashed into a wall, and given a fitness calculation that praises straight line velocity, will tend to try to find a path and speed profile that emphasises those aims, whilst avoiding crashing. By the look of your videos, your virtual bot can achieve these aims, which is impressive!

-----------

As an off-beat ponder ... Perhaps new 3D printers should/will/are fitted with sensors that detect what the motors are actually achieving and put an AI system in to 'optimise' the stepping. One could imagine doing a 'no filament' dry run, to minimise the number of wasted prints. Whether this could be refined to include optimising the extruder drive, minimising artifacts like blobs and strings, is a harder proposition. I don't see this as an essential, everyday Ender 3 upgrade, but maybe it could play a part on more 'experimental' printers, such as you have been contemplating for your catamaran? 😎 😎

---------

Also, I confess to not yet being familiar enough with the mechanics and terminology of the GA+ANN, so I am presently limited to regarding this as a single element. This is a stage I have only glanced at so far, which on one hand, looks 'straightforward' when initially flicking the pages describing equations, etc. of a particular example, but soon becomes mired in the numbers of variations and jargon, that have appeared. Perhaps, starting with an 'early-ish' protagonist like Goldberg helps to reduce the noise that surrounds a more contemporary explanation?

I can certainly appreciate a 'real world-like' part of the simulator could grow into a complex and tricky to calibrate beast, especially if you wish it to be an accurate model which can be pushed to the limits. In the latter case, I guess some adjustments would be needed for changes like floor surface, in the same way the handling of a car is affected by the road surface material, smooth or 'bumpy', wet or dry, and so on. I'll be interested to see how this works out, when you have real and virtual in a side-by-side comparison. I totally agree with using a simulator as part of a sensible strategy, but also appreciate it could be a tough challenge.

Thanks for your support. Obviously, this is just a muse. Any response will be welcomed, but is not expected, as I fear I am distracting you too much already.

Best wishes, Dave

I don't know if my experience of simulated electronics is a reliable guide, but if it is, then I would expect the simulator to provide a useful starting point, and insight into what is happening, but there are often still 'surprises' that the real-world exposes, so I would imagine a 'real' bot, would need to retain the self-learning capability, in spite of inheriting the learning from many hours of simulated experience beforehand.

I agree completely... I'm expecting there to be some gotchas, if not outright head banging. The virtual version has afforded me a fantastic overview. Seeing it actually run and evolve in super speed-up time has given me some insights.

As you effectively have an oval race track with the ACAA, perhaps it should be treated a bit like one, in which an athlete gets credit for crossing the finish line, and if it is a multi-lap race, then each time they cross the line, in the forward direction, they get a 'credit' for completing the lap.

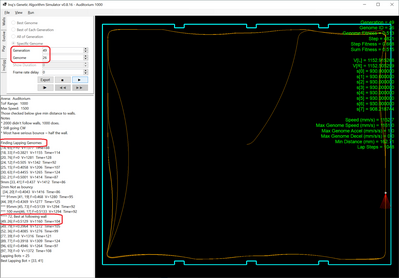

What has given me great consternation is implementing your lapping concept. I simply place "pylons" at places the bot is required to go around. I don't require them to go around in any certain order, and some bots actually do act like an early Robovac that goes all over creation, but does eventually get around all the pylons. Any that get around all pylons get pulled for me to visually inspect. I even give the number of steps (time) that it took to finally succeed. Here is such an evolution of 100 generations based on certain aspects. In this evolution I was comparing limiting the range of the ToF sensor. Its max range is 4m. In this evolution, I limit it to 1m. The lap search feature looks at all Genomes... not just the best of each generation. Here you can see the output of the search. I can annotate the results as shown and store them in the project file that contains all the Genomes and their results.
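The thread doesn't say how "getting around" a pylon is detected, so the following is only one possible approach, offered as a sketch: accumulate the signed change in bearing from the pylon to the bot and call it a lap once a full turn has been swept. `Point`, `PylonTracker` and the small tolerance are all made up for the example.

```cpp
#include <cmath>

struct Point { double x, y; };

// Tracks how far a bot has swept around one pylon by accumulating the
// signed change in bearing from the pylon to the bot.
class PylonTracker {
public:
    explicit PylonTracker(Point pylon) : pylon_(pylon) {}

    // Call once per simulation step with the bot's current position.
    void update(Point bot)
    {
        double bearing = std::atan2(bot.y - pylon_.y, bot.x - pylon_.x);
        if (hasLast_) {
            double d = bearing - last_;
            // Unwrap so a step across the +/-pi seam counts as a small change.
            if (d > kPi) d -= 2.0 * kPi;
            if (d < -kPi) d += 2.0 * kPi;
            swept_ += d;
        }
        last_ = bearing;
        hasLast_ = true;
    }

    // True once the bot has swept a full turn in either direction
    // (with a tiny tolerance for floating-point rounding).
    bool circled() const { return std::fabs(swept_) >= 2.0 * kPi - 1e-9; }

private:
    static constexpr double kPi = 3.14159265358979323846;
    Point pylon_;
    double last_ = 0.0;
    double swept_ = 0.0;
    bool hasLast_ = false;
};
```

With one tracker per pylon, a Genome would count as having lapped once every tracker reports `circled()`, in whatever order that happens. A bot that wanders back and forth on one side of a pylon nets out to zero and is not counted.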

What I have found is that the one with the highest fitness overall is never very good. Some even run into walls and stall. The best Genome of each generation is rarely that good either. The point of my ramblings in relation to your observation is... I'm having trouble seeing how to implement learning on the bot. The fitness value is not a good indicator of better. I do believe there is value in getting the bot to learn on the job, but I don't see how to tell it that it learned something new and/or better. Some of the findings on continual education with ChatGPT, where it is actually getting worse at certain tasks over time, bear out what I'm seeing. Maybe you have some ideas???

I guess some adjustments would be needed for changes like floor surface, in the same way the handling of a car is affected by the road

It's no distraction. The room I've chosen for the first trial runs of the Genome is still full of canoes. I expect I have a few days. I like the venue for its openness and the old/warped/rough flooring. It adds a layer of the real world. I suspect the AI will have no trouble with the flooring, as every choice it makes for speed and turning is based on what it sees at that instant. Getting jostled by a crack or ridge in a board shouldn't affect it all that much. I do wonder about the few chair legs that will still be in the room and the doorways that I have not modeled. It will be interesting.

I am not sitting idle. I have incorporated global position/orientation values. I will eventually need dead-reckoning (DR) and I was already in that part of the code. It was pretty trivial to implement. It will work regardless of whether I'm manually driving it or the AI is in control. It works at the single micro-step level so it should be accurate to sub-millimeter accuracy... albeit the wheels' irregularities and the flooring will easily defeat it. I'm working now on how to influence trajectory, but retain the obstacle avoidance. Right now, it follows the walls effectively. I would like to use the DR to return to the start point, but on the way, to still avoid obstacles. Likewise, even if I am manually driving it, I would want it to do obstacle avoidance, but return to the heading I was pointing it.
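For readers who haven't met dead-reckoning before, the usual differential-drive update looks something like the sketch below. It is a textbook form rather than the bot's actual code; `Pose`, `deadReckon` and the `wheelBase` parameter are hypothetical, and the wheel distances would come from counting micro-steps and multiplying by the travel per step.

```cpp
#include <cmath>

struct Pose {
    double x = 0.0;      // mm
    double y = 0.0;      // mm
    double theta = 0.0;  // heading in radians, counter-clockwise from +x
};

// Advance the pose given the distance each wheel rolled since the last
// update (mm; negative = backwards). wheelBase is the distance between
// the two wheels in mm.
void deadReckon(Pose& p, double dLeft, double dRight, double wheelBase)
{
    double dCenter = (dLeft + dRight) / 2.0;
    double dTheta = (dRight - dLeft) / wheelBase;
    // Use the heading at the middle of the step; accurate for small steps.
    p.x += dCenter * std::cos(p.theta + dTheta / 2.0);
    p.y += dCenter * std::sin(p.theta + dTheta / 2.0);
    p.theta += dTheta;
}
```

Equal wheel distances move the pose straight along its heading, and equal-but-opposite distances turn it on the spot. The smaller the step between updates, the less the mid-step heading approximation matters.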

As part of that, today, I am now working with TCP communications between the virtual desktop app and the robot so I can control it from the virtual app, down/upload Genomes and/or drive at the same time.

Much to come...

VBR,

Inq


Also, I confess to not yet being familiar enough with the mechanics and terminology of the GA+ANN, so I am presently limited to regarding this as a single element.

The piece I wrote on the Genetic Algorithm is great sleeping material and easily forgettable. 🤣

Whereas the Genetic Algorithm has all kinds of random number generation and wishy-washy logic, the ANN in the simple version as used by the ACAA and InqEgg bot is trivially simple and straightforward. I usually try to take the verbal (written) route, but with this learned group, maybe the source code will be easier to read than my dry montage. This is the entire brain that does obstacle avoidance and wall following as shown in the videos above. It still amazes me how simple this is compared to the equivalent hard-coded version, and how a couple of matrix math operations and some sigmoid non-linearity can reproduce fairly complex behavior.

The _weights and _bias matrix/vector objects described are created by the GA evolution. But once the learning evolution is complete, the values in these are fixed for use below.

```cpp
void AI()
{
    // This is called at ~10 Hz - Best rate of ToF data.
    // _weights is a matrix object of 2 x 10 floating point variables.
    // _input is a vector object of 10 elements holding the previous
    //      two wheel velocities (mm/s) and eight ToF distances (mm)
    // _output is a vector object of 2 elements holding the velocities
    //      of the two wheels (mm/s) to be sent to the drive mechanism.

    // Transfer velocities from previous time step.
    // Normalize them using the maximum value.
    _input(0) = _output(0) / _config.maxSpeed;
    _input(1) = _output(1) / _config.maxSpeed;

    // Transfer ToF data from row we're using of the 8x8 sensor.
    // Limit and normalize them based on our desired clip range.
    for (byte i=0; i<8; i++)
        _input(i + 2) = min(ToFRange,
            _tofData.distance_mm[START_OF_ROW + i]) / (float)ToFRange;

    // Do the actual ANN matrix math.
    _output.product(&_weights, &_input);
    // Add the bias vector.
    _output.add(&_bias);

    for (byte i=0; i<2; i++)
    {
        // Normalize using Sigmoid
        _output(i) = sigmoid(_output(i));
        // Scale it back up to the speed range in mm/s.
        _output(i) = (_output(i) * 2 - 1) * _config.maxSpeed;
    }

    // STEPPERS ONLY BELOW -----------------------------------------------------
    // The outputs are now in mm/s. However, they can be such a drastic
    // acceleration that InqEgg stepper motors can't handle it. We have
    // to limit the acceleration. If using DC motors w/ encoders, we
    // could simply give the motors these outputs and skip all this.

    // _input is normalized. restore to mm/s.
    _input(0) *= _config.maxSpeed;
    _input(1) *= _config.maxSpeed;

    // Calculate the change in speed the AI wants.
    float dSL = _output(0) - _input(0);
    float dSR = _output(1) - _input(1);

    // Get longitudinal bot acceleration.
    static u32 Last = millis() - 100;
    u32 now = millis();
    float a = (dSL + dSR) / 2.0F / ((now - Last) / 1000.0F);
    Last = now;

    // Determine if we are accelerating away from zero or toward zero.
    // Steppers seem to be able to stop better than they can accelerate.
    //float vi = (_input(0) + _input(1)) / 2.0F;
    //if (((vi > 0) && (a < 0)) || ((vi < 0) && (a > 0)))
    //    a /= 2.0F;    // Decelerates twice as fast.

    a = abs(a);

    // If we're above the max acceleration, scale it down.
    if (a > _config.maxAccel)
    {
        // Get scale required to get both below abs(1.0).
        a = _config.maxAccel / a;
        _output(0) = _input(0) + dSL * a;
        _output(1) = _input(1) + dSR * a;
    }
    // STEPPERS ONLY ABOVE -----------------------------------------------------

    // Tell the motors.
    // lS, rS = +/- _config.maxSpeed
    _drive.setSpeed(LEFT, _output(0));
    _drive.setSpeed(RIGHT, _output(1));
}

// =============================================================================

float sigmoid(float val)
{
    return 1.0F / (1.0F + (float)exp(-val));
}
```

I hope this helps. By all means... if you want clarity on some part, let me know.

VBR,

Inq

Edit: If you cared to count... if you use DC motors with encoders, this is ONLY ~10 lines of code! - For complete regulated, obstacle avoidance and wall following. No PWM feedback monitoring code required. No dealing with non-linear start voltage/currents code required. Nada! Just add your sensors (could be ultrasonic, infrared, LIDAR or ToF). It doesn't really matter. Add your motor drivers. Done... self-driving robot.


Hi @inq,

---

PS .. sorry our messages have crossed in the ether .. I need more time to read it, but apologies if it looks like I have ignored it.

---

Thanks for your excellent reply.

The only immediate (and sorry, probably blindingly obvious to you) comment, is that my 'lapping' suggestion was an attempt to make the fitness calculation result more responsive to 'doing the right thing'.

-----------

Overall, in the bot trials, I am reminded of some other computational approaches, like simulated annealing, as a method of hopefully finding the lowest minimum, when there may be many minima, and a calculus approach isn't viable. The problem here though, is that the basis of the fitness calculation appears to be that it is a measure of success, which should yield a high value when a 'good solution' is demonstrated, but in reality, 'good solutions' only yield low to mediocre fitness values.

This feels like only giving a child a favourite chocolate treat when their behaviour is unacceptably bad, and then being surprised when the child is worst behaved in the street.

--------

I confess to having a certain blindness/confusion about how you convince the bot to do what you want, when the aim is somewhat abstract. For cases like picking the right digit from 10 choices, it is trivial. But navigating somewhere seems more subtle.

I can see that an equation that favours high speed in a straight line will prefer to go around the perimeter of a rectangular room, but you are showing, that when it 'hits' on this 'solution', it is only recording a mediocre rating, implying that this solution is near worthless.

Hence, I was suggesting that the fitness calculation should be modified to give its best score, when it is doing what you want.

My suggestion of giving a bonus for each lap was only a trivial example of how the calculation might be changed, hopefully requiring only a few lines of code, so if the suggestion failed abysmally, I wouldn't feel too guilty about sending you on a massive wild goose chase.

........

Admittedly, I do have a confusion in my mind, due to ignorance of the details, as to whether the fitness calculation actively influences each 'run' as it proceeds, like offering someone chocolate if they do something for you, or the more Darwinian influence of being a survivor that is likely to pass on its characteristics to the next generation, or maybe both.

---------------------------------------

But either way, am I misguided in the general presumption that a high fitness value should correlate with success of the mission, to enable the 'smartest' models to be inherited by future generations? (Or is there another mechanism/scoring system for this?)

If you, as the designer of the system, are not 'supposed' to 'optimise' the fitness calculation, then how will the machine 'know' it is finding a good solution?

-------------

Sorry if this drivel is 10x longer than it needs to be, and you were well in front before I started, but that is a hazard of not knowing what you are thinking.

Best wishes and thanks for your patience, Dave

This feels like only giving a child a favourite chocolate treat when their behaviour is unacceptably bad, and then being surprised when the child is worst behaved in the street.

I won't ask your opinion (nor state mine) about corporal punishment. 🤣 🤣

I confess to having a certain blindness/confusion about how you convince the bot to do what you want, when the aim is somewhat abstract. For cases like picking the right digit from 10 choices, it is trivial. But navigating somewhere seems more subtle.

I am constantly amazed that a simple linear-algebra multiplication with a non-linear fudge factor can achieve such remarkable results. What borders on black magic... the fitness doesn't even say which side of the bot the closest obstacle distance is on... yet, I've seen it turn both directions and even back up from a wall. Of the Genomes that survive, I'd say only about 1% actually hit a wall.

I think the AI stuff (at least in this context) is pretty cool! 😎

I can see that an equation that favours high speed in a straight line will prefer to go around the perimeter of a rectangular room, but you are showing that when it 'hits' on this 'solution', it is only recording a mediocre rating, implying that this solution is near worthless.

I've not shown the best Genome. It does not follow the walls; the columns slow it down. The best Genome does diagonals... farther at top speed. So it is doing what the Fitness equation is asking.

Note its fitness of 0.664 versus the ones I find more desirable at around 0.5. So yes, you are correct. The fitness equation needs to be modified to influence the Genomes and produce a better Genome. I just don't see a clear "follow the wall" factor.

Optimally, I'd like it to stay about 3 meters away from the wall. That way I can pause it every few meters, turn toward the wall and scan it for mapping. I then combine that with the dead-reckoning logic to be able to map the entire room/building. Right now it already follows the walls on one side or the other until it returns to the starting point. I'm far further along toward this goal than I would have thought at this stage.
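One possible shape for a "follow the wall" factor, offered only as an untested sketch: score each time step by how close the side distance is to the target standoff, and multiply by normalized speed so that standing still earns nothing. The function names, the 0.5 m tolerance, and the use of 1200 mm/s as top speed are assumptions made for illustration. The 3 m target is the figure from the paragraph above.

```python
import math

def wall_follow_score(side_distance_m, speed_mm_s,
                      target_m=3.0, tolerance_m=0.5, max_speed_mm_s=1200.0):
    """Per-step score in [0, 1]: highest when moving fast at the target standoff."""
    # Bell-shaped reward: 1.0 at the target distance, falling off with the error.
    error = side_distance_m - target_m
    closeness = math.exp(-(error / tolerance_m) ** 2)
    # Normalized forward speed, clamped to [0, 1]; stopping or reversing earns nothing.
    speed = max(0.0, min(1.0, speed_mm_s / max_speed_mm_s))
    return closeness * speed

def trial_fitness(samples):
    """Average the per-step score over a trial of (side_distance_m, speed_mm_s) pairs."""
    if not samples:
        return 0.0
    return sum(wall_follow_score(d, v) for d, v in samples) / len(samples)
```

Whether a term like this actually evolves better wall followers than the current equation would have to be found out by running it through the GA.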

My suggestion of giving a bonus for each lap was only a trivial example of how the calculation might be changed, hopefully requiring only a few lines of code, so if the suggestion failed abysmally, I wouldn't feel too guilty about sending you on a massive wild goose chase.

I hope you didn't take that as a complaint. It did supply very valuable information: there are far better Genomes in the evolution than my initial simplistic Best and Best of Generation features were showing. I would have been fumbling in the dark while they sat just below the surface, unseen.

When used as part of the fitness equation, it did not lead to better results. Let me see if I can brush one of those off to show. At this stage (before getting a real bot running), using a virtual Genome, I think just missing the walls would be a milestone. I really have to convince myself that it is transferable... and how well. After that, I'll start refining the fitness equation.

This will sound contrary to my speed-is-everything reputation, but really, precision in following walls will be primary. I don't see an equation for that yet. I want it to see a doorway on (say) the right, enter the room and follow its boundaries (on the right) until it exits the door and continues down the hall. Speed will come with refinement, but will likely be bottlenecked by the scanning / mapping process.

as to whether the fitness calculation actively influences each 'run' as it proceeds

No... the GA generates the Genome. When handed to a virtual or real bot, as in the code above, it simply plugs in the inputs and calculates the outputs forever. It does not dynamically adjust based on fitness. It only adjusts based on inputs. In the plain running of a Genome, as will be the case in the first bot, the fitness won't even be calculated. In the evolution process, it is a running calculation during a trial run. The final summation is returned to the GA for it to rank the Genome. So... it's not like being offered chocolate. I'd say it's more like a gladiator in the Roman arena. See lion... live or die. 😆
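The separation described above can be sketched roughly as follows. This is not the InqEgg code: the function names are hypothetical, `tanh` stands in for whatever non-linear factor is really used, and a fixed list of sensor readings replaces the simulated room (in a real trial the next inputs depend on where the outputs drove the bot).

```python
import math

def run_genome(genome, inputs):
    """Fixed mapping from sensor inputs to motor outputs: weights times inputs, then tanh."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs))) for row in genome]

def evaluate(genome, sensor_log, step_score):
    """Trial run: the genome never sees the score; it is only summed on the side."""
    total = 0.0
    for inputs in sensor_log:
        outputs = run_genome(genome, inputs)
        total += step_score(inputs, outputs)  # running calculation during the trial
    return total  # final summation handed back to the GA

def rank(population, sensor_log, step_score):
    """The GA's only use of fitness: ordering genomes from best to worst."""
    return sorted(population,
                  key=lambda g: evaluate(g, sensor_log, step_score),
                  reverse=True)
```

Note that `run_genome` has no fitness argument at all, which is the point being made: the score only ever reaches the ranking step.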

If you, as the designer of the system, are not 'supposed' to 'optimise' the fitness calculation, then how will the machine 'know' it is finding a good solution?

Did you get a chance to skim the last @thrandell reference: https://www.researchgate.net/publication/5589226_Evolution_of_Homing_Navigation_in_a_Real_Mobile_Robot

To not waste your time... the online version doesn't have page numbers. In the PDF, on page 7, you'll see an even simpler fitness equation: Fitness = V (1-i). Read the results section B. below the equation. Basically, even with this Fitness equation, it was able to learn to find a simulated charging station and periodically return to it to recharge, without ANY influence from the fitness equation. It simply did it because it had an input saying it was nearing empty. IOW, only environment and self-preservation taught it.
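To show how little that equation contains, here is a minimal sketch. It assumes V is the wheel speed normalized to 0..1 and i is the strongest proximity-sensor reading normalized to 0..1; check the paper for the exact definitions, since only the bare formula is quoted here.

```python
def simple_fitness(v, i):
    """Fitness = V * (1 - i), with both terms assumed to be normalized to [0, 1]."""
    if not (0.0 <= v <= 1.0 and 0.0 <= i <= 1.0):
        raise ValueError("v and i are expected in the range 0..1")
    # Fast and far from obstacles scores high; nothing here mentions a battery or a charger.
    return v * (1.0 - i)
```

Nothing in the expression refers to charge level or to the charging station, so any homing behaviour has to come from the inputs and the environment rather than from the score.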

Sorry if this drivel is 10x longer than it needs to be

Not at all. I welcome the diversion.

VBR,

Inq

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

My test arena is still filled with canoes and I've been down with a cold, but... it gives me time to do software. You might say, I'm a captive developer.

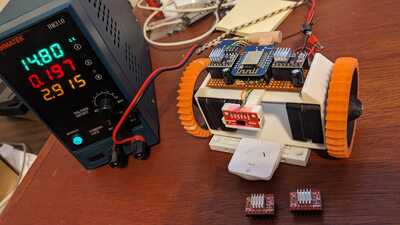

In this installment, I've made some sweeping changes to core infrastructure code. IOW, to code that has nothing to do with robots or AI. I'm finding, I'm really maxing out the ESP8266. My InqPortal library has a lot of overhead. Between HTTP, dealing with multiple connections and using a far more robust communications protocol, I'm starting to get random reboot exceptions. Feeding them into Exception Decoder, they invariably end up in the Arduino Core code or, worse, the Espressif codebase. These only start cropping up when I'm trying to use interrupts or hardware timers. As you all know, some libraries like Servo and Stepper drivers use these. It doesn't seem to matter what I try... invariably a low-level WiFi interrupt stomps on some driver interrupt or vice versa. I can never backtrack to where it is actually causing the exception. Its random nature tends to point to interrupts running at inopportune times. Throw in handling the 8x8 ToF sensor running at 10Hz, the gyroscope running at 190Hz, the accelerometer running at 100Hz and the magnetometer running at 100Hz, and I feel fortunate I haven't run into more problems than I have.

New Communication Methodology - So... what I've done is taken out that library and written a straight TCP connection. It means I have to write all the protocol and handle TCP idiosyncrasies, but now that that is behind me, it does seem to put less stress on the ESP8266. Moving forward, the next bot will be using an ESP32-S3, so that TCP/Comms/Protocol should be portable over to it and allow me to add even more AI and robot capability.
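One of the better-known TCP idiosyncrasies is that it delivers a byte stream, not messages: a single send can arrive split across several reads, or glued to the next one. A common remedy is length-prefixed framing. The sketch below shows that general technique only. It is not the protocol written for InqEgg, and the 2-byte little-endian header and the class name are assumptions.

```python
import struct

HEADER = struct.Struct("<H")  # assumed header: 2-byte little-endian payload length

def frame(payload: bytes) -> bytes:
    """Prefix a payload with its length so the receiver can find message boundaries."""
    return HEADER.pack(len(payload)) + payload

class Deframer:
    """Reassembles complete payloads from arbitrarily split chunks of a TCP stream."""

    def __init__(self):
        self._buf = bytearray()

    def feed(self, chunk: bytes):
        """Add received bytes; return the list of complete payloads now available."""
        self._buf.extend(chunk)
        messages = []
        while len(self._buf) >= HEADER.size:
            (length,) = HEADER.unpack_from(self._buf)
            end = HEADER.size + length
            if len(self._buf) < end:
                break  # payload not fully received yet
            messages.append(bytes(self._buf[HEADER.size:end]))
            del self._buf[:end]
        return messages
```

The same buffering logic carries over to C++ on the ESP8266 or ESP32-S3; only the container and the byte-packing calls change.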

In the following video, I've added a new tab to the Virtual InqEgg that has the same joystick interface that the previous InqPortal / web page had. Whereas all previous versions of the Virtual InqEgg were totally self contained and all the PC's compute power was solving the problems... this new tab is only a passive window to calculations being performed on the real InqEgg and returned to the desktop's Virtual InqEgg.

Dead Reckoning - Also, because I was already neck deep in the code, I've added the ability to do dead-reckoning on the real InqEgg. This is based on lots of geometry and the actual micro-steps of the stepper motor drivers. In theory, the resolution is +/- 0.03 mm and +/- 0.0005°. IOW, the theoretical accuracy is far beyond my ability to validate. At the very least any discrepancies should now be due to environment... wheel slippage, wheel and track dimensional variability, irregular driving surface bumps and cracks... but it should get me in the ballpark.
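For readers who have not met dead reckoning on a two-wheeled bot, the core of it can be sketched as below. This is a generic differential-drive update, not the InqEgg code. The 70 mm wheel diameter is the nominal figure quoted in this thread; the 6400 microsteps per revolution (200 full steps at 1/32 microstepping) and the 130 mm track width are assumptions chosen for illustration.

```python
import math

WHEEL_DIAMETER_MM = 70.0    # nominal wheel diameter
MICROSTEPS_PER_REV = 6400   # assumption: 200 full steps x 32 microsteps
TRACK_WIDTH_MM = 130.0      # hypothetical distance between wheel contact points

# Distance rolled per microstep, roughly 0.034 mm with the numbers above.
MM_PER_STEP = math.pi * WHEEL_DIAMETER_MM / MICROSTEPS_PER_REV

def update_pose(x, y, heading_rad, left_steps, right_steps):
    """Advance the pose by one batch of signed microstep counts per wheel."""
    d_left = left_steps * MM_PER_STEP
    d_right = right_steps * MM_PER_STEP
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / TRACK_WIDTH_MM
    # Midpoint approximation: move along the average heading over the batch.
    mid = heading_rad + d_theta / 2.0
    x += d_center * math.cos(mid)
    y += d_center * math.sin(mid)
    return x, y, heading_rad + d_theta
```

Any error in the wheel diameter or track width feeds straight into every update, which is why measuring those two numbers carefully matters so much.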

Futures??? - The main one is to validate the Genomes on the real bot and hopefully see it move around the auditorium without hitting the walls. After that gets resolved the next steps will be to merge the AI abilities with the Dead Reckoning (DR). The next hurdle is to assign some goal (location and orientation) yet have the AI monitor the travel and make adjustments for obstacles, turns in the building, etc and return control to DR to re-calculate the proper trajectory to the eventual goal.


I took a forced break because of the boats in MY robot test facility. At least I'd like to think of it as my personal Nürburgring. They're gone now and now I only have to dodge the Jazzercise classes. 😎 😘 Not keeping idle, I have been learning to throw clay and mix my own pottery glazes. I will also be taking up bee-keeping, building my own hive boxes. I have ordered the bees and I'm told they'll show up sometime in the spring. I have joined a local bee-keepers organization and the guys are great and helpful! Of course, I want to instrument the hives with (you guessed it) IoT! I've been researching LoRa, of course starting out with Bill's excellent videos, and have my first modules coming in Monday. The way I figure it... as long as I keep busy, learning, I can't die!

InqEgg Update

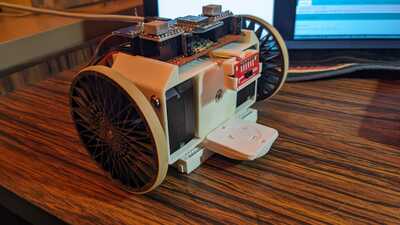

Anyway, although a little stagnant on InqEgg, I have made some progress. Thinking longer term, since I want to map the building in 3D with the ToF sensor, I need to get the Dead Reckoning working with some degree of accuracy. Even if the math is completely accurate, there are still other issues. The wheels are one... but even if they're perfect, there is dealing with an 80 year old public building with warped, shrunk, twisted hardwood floors and multi-generations of add-on construction with different materials. Did I say, this is the perfect acid test for mapping... Oh, yes it is!

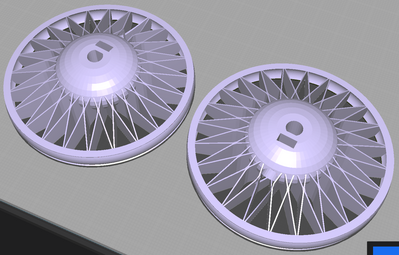

The wheels were made for traction on things like carpet and even taking small steps like going from carpet to tile to wood floors... those in the half-inch height difference category. In older robots, the heat from the stepper motor shafts softened the ABS plastic and would sometimes send a wheel flying.

In later versions, I added set screws to the designs, but over time the heat and the eccentric force being exerted caused the wheels to deform out of round. Not good either. In InqEgg, I used Carbon Fiber filled Polycarbonate. I also went radical (an experiment) using a single spoke design.

The CF/PC seems to hold up well under the heat. The heat generation has been greatly reduced. I've experimented with reducing the maximum current of the DRV8825 stepper motor drivers down to below 0.5 Amps. My accelerations have taken a hit, but I couldn't get enough traction anyway. A side benefit - I've been running on the same set of 4X charged 18650 cells for nearly 4 hours and still have more than 70% charge left! I'll be doing some studies of current setting vs battery life.

Even with the lower heat, and maybe because I overtightened the set screw and because of the One Spoke design... these wheels suck! Hopalong Cassidy is more appropriate. (I didn't say it was a good experiment). I've also decided to start airing more of my fails. (I don't want to work @zander so hard finding my errors. 😉)

Grant me the Serenity, to accept the things I can not change, Courage to change the things I can, and Wisdom to know the difference.

The impetus for this post is the last thing I can change - The Wheels.

In this version, I've reverted to the BBS Mahale style wheels that were on Inqling Sr.

... well... not quite that nice!

These many spokes should enforce roundness, at least to the limit of the 3D printer's roundness ability. It has the same nominal 70mm diameter. Although I did add the ability to put in a set screw, I've got the proper shrinkage figured out on this CF/PC so I have a nice tight fit and will avoid using it, if I can. That way it doesn't force an eccentric deformation. The design also expands axially toward the shaft to utilize the full length of the motor shaft (17 mm). This should help reduce out-of-plane wobble.

And finally, I punted the 3D printed TPU tires. Although the TPU flexes, it's more like those plastic squeeze toys for babies. The traction doesn't seem that much better than the bare wheels on smooth, interior floors. I've replaced them with rubber bands. A lot more traction, if I don't throw a tire. Another experiment in the making.

I've also leveraged the current dead-reckoning code on the bot to help do the calibration. I can tell it to go precisely x number of steps on each wheel (independently). I can measure the distance out and have it return to the start position. I'll have a nearly 100 foot hallway, with Linoleum flooring, to do out and back tests. I have also thought of a spin test. I can put a laser pointer on the bot and point it at a wall (about 50 feet away) and spin it 360°. I can do it on one wheel at a time to determine diameter of the wheel and the bot's track. With the resolution of the stepper's micro-stepping, and nominal dimensions, the theoretical angular resolution is 0.015°. With the laser pointer at 50' that equates to 4mm. It will take several iterations through the tests but, I should be able to compensate for wheel diameter variations and be able to back-calculate the diameters and bot's track width to at least 3 decimal places in millimeters.
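The arithmetic behind those calibration tests can be sketched as follows. The helper names are hypothetical, and the one-wheel spin formula assumes the stationary wheel's contact point is a perfect pivot, which real tires will only approximate.

```python
import math

FEET_TO_MM = 304.8

def spot_shift_mm(angle_deg, range_ft):
    """Sideways movement of a laser spot on a wall for a small rotation of the bot."""
    return math.tan(math.radians(angle_deg)) * range_ft * FEET_TO_MM

def calibrated_diameter_mm(nominal_diameter_mm, commanded_mm, measured_mm):
    """Scale the nominal wheel diameter by how far the bot really travelled."""
    return nominal_diameter_mm * measured_mm / commanded_mm

def calibrated_track_mm(wheel_travel_mm, turns):
    """One-wheel pivot: the driven wheel traces a circle whose radius is the track width."""
    return wheel_travel_mm / (2.0 * math.pi * turns)
```

For example, `spot_shift_mm(0.015, 50)` comes out just under 4 mm, in line with the figure quoted above, and a bot commanded to go 1000 mm that measures 990 mm on a nominal 70 mm wheel gives a corrected diameter of about 69.3 mm.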

More experiments, more fun!

VBR,

Inq
