Combining Two Genomes

One Point Crossover

In Goldberg's book, the process of combining two parent Genomes to make two Child Genomes is called One Point Crossover. Remember, a Genome is simply a finite-length array of variables. In this method, a random number is generated between 0 and the length of the Genome minus one. That is the One Point. The first child gets all the data from the first parent up to this point and the data from the second parent after this point. The second child gets the reverse combination. Say my Genome has 20 double variables, indexed 0 through 19. I generate a random number between 0 and 19 (inclusive)... say... 7. Of the 20 double variables in the first child, the first 7 (indices 0 through 6) are identical to the first 7 in the first parent. Those at indices 7 through 19 are the same as indices 7 through 19 of the second parent. The second child reverses that pattern using the same random One Point.
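A minimal Python sketch of the One Point Crossover described above, assuming a Genome is a plain Python list. The function name and the optional `point` argument (used here only to reproduce the cut-at-7 example) are illustrative, not taken from Goldberg's book or from InqEgg's code.

```python
import random


def one_point_crossover(parent_a, parent_b, point=None, rng=random):
    """One Point Crossover of two equal-length genomes.

    child_1 takes genes [0, point) from parent_a and [point, end) from parent_b;
    child_2 gets the reverse combination. If point is None it is drawn at
    random from 0..len-1 (inclusive).
    """
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same length")
    if point is None:
        point = rng.randrange(len(parent_a))
    child_1 = parent_a[:point] + parent_b[point:]
    child_2 = parent_b[:point] + parent_a[point:]
    return child_1, child_2


# Usage: the 20-gene, cut-at-7 example from the text.
mom = [0.0] * 20
dad = [1.0] * 20
kid_1, kid_2 = one_point_crossover(mom, dad, point=7)
```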

Natural Biology

One Point Crossover sounded way too contrived and certainly nothing like what I learned in Biology (I did listen sometimes). I decided to experiment with a second design concept to combine two parents; I'm calling it Natural Biology. In it, I simply go through the 20 variables of each child one at a time (say gene[6]) and get a random number that is either 0 or 1. If it is 0, the child gets gene[6] from the first parent; if 1, it gets gene[6] from the second parent. Each child is handled separately.

This is what I learned in Biology.

I haven't started testing things yet. I found it works, but I haven't really analyzed the differences between the two methods. Need to find a more quantitative method of handling the study.
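For comparison, a sketch of the Natural Biology combination, assuming the same list-based Genome. In the GA literature this per-gene coin flip is usually called uniform crossover. The function name is hypothetical, and each call produces a single child, matching "each child is handled separately".

```python
import random


def natural_biology_crossover(parent_a, parent_b, rng=random):
    """Make ONE child: each gene comes from parent_a (coin = 0) or parent_b (coin = 1)."""
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same length")
    child = []
    for gene_a, gene_b in zip(parent_a, parent_b):
        coin = rng.randint(0, 1)  # 0 or 1, inclusive on both ends
        child.append(gene_a if coin == 0 else gene_b)
    return child


# Each child is handled separately, so two children need two independent calls.
rng = random.Random(42)
mom, dad = [0.0] * 20, [1.0] * 20
kid_1 = natural_biology_crossover(mom, dad, rng)
kid_2 = natural_biology_crossover(mom, dad, rng)
```

Unlike One Point Crossover, the two children are not mirror images of each other, because each one gets its own set of coin flips.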

VBR,

Inq

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

Think of it as a puzzle game, a bit like the Mastermind board game, where you have to work out the hidden colors and arrangement of a row of colored pegs.

https://en.wikipedia.org/wiki/Mastermind_(board_game)

You have to work out a secret code from a set of clues (fitness values).

You start with a list of number combinations.

32875625967351363371

72274612873651842719

21827442361447382572

... and so on

For each set of numbers in the list you are told how many of the numbers are correct (fitness value).

You are not told which of those numbers are correct, only how many.

For example, I might say there are three correct numbers in the correct positions in the first combination. The fitness value of this combination would then be three.

You might generate 100 such combinations and be given the fitness value of each one.

Using those fitness clues you have to generate another 100 trial combinations.

Repeat this until you converge on the correct combination.
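The puzzle can be sketched as a small GA in Python. The settings are assumptions for illustration only (population of 100, the better-scoring half kept as breeders, the single best guess carried forward, coin-flip crossover, 2% per-digit mutation); none of them come from the posts. The secret below is one of the example rows, used purely as a stand-in, and `clue()` plays the role of whoever reveals how many digits are right.

```python
import random

DIGITS = "0123456789"


def clue(guess, secret):
    """How many digits are right AND in the right place.

    Only this count is handed back; the GA never learns which digits matched.
    """
    return sum(g == s for g, s in zip(guess, secret))


def solve(secret, pop_size=100, mutation_rate=0.02, max_generations=1000, seed=0):
    rng = random.Random(seed)
    n = len(secret)

    def score(guess):
        return clue(guess, secret)

    # Generation 0: pop_size completely random guesses.
    population = ["".join(rng.choice(DIGITS) for _ in range(n)) for _ in range(pop_size)]
    for generation in range(max_generations):
        population.sort(key=score, reverse=True)
        best = population[0]
        if score(best) == n:
            return best, generation
        breeders = population[: pop_size // 2]  # the better-scoring half
        children = [best]  # keep the best guess unchanged
        while len(children) < pop_size:
            mom, dad = rng.sample(breeders, 2)
            # Coin-flip crossover, then an occasional random mutation.
            genes = [m if rng.random() < 0.5 else d for m, d in zip(mom, dad)]
            genes = [rng.choice(DIGITS) if rng.random() < mutation_rate else g for g in genes]
            children.append("".join(genes))
        population = children
    population.sort(key=score, reverse=True)
    return population[0], max_generations


secret = "72274612873651842719"
best, generations = solve(secret, max_generations=2000, seed=1)
```

The number of generations it takes depends on the seed and on the settings above.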

In it, I simply go through the 20 variables of each child, one at a time say gene[6] and get a random number between 0 and 1. If it is zero, the child gets gene[6] from the first parent, if one, it gets gene[6] from the second parent. Each child is handled separately.

I did exactly the same thing 🙂

Plus a random mutation now and then to try and find a better set of values.


I was leaving Mutation for a separate post. I wanted to give a straight-through conceptual run first. 😉 There are a couple of other aspects I do differently than straight Goldberg, but they may or may not be significant or desirable. They just sounded like a good idea at the time.


- God does not throw dice. Albert Einstein

- Not only does God play dice, but... he sometimes throws them where they cannot be seen. Stephen Hawking

Another long topic, but a continuation of how to build your own AI-controlled bot. Well... at least according to Inq. I think I still have to write about:

- Time Step

- Test Interval

- Genome Size

- Fitness Value

Except for Fitness Value, all of these relate to the problem at hand and aren't part of the Genetic Algorithm proper. Let’s back up a little and talk about what the GA is. It is simply a search algorithm that is trying to find a solution. It is not like Simpson’s Rule, which is based on finite intervals or incremental steps. It’s more like searching an ordered list of strings or numbers… where you compare against the mid-point, decide whether the target is to the left or right… then halve again. GA is basically similar.

In a 2D example, Goldberg’s book uses the GA to solve a simple equation (Y = X^10). The GA is asked to find the location of the maximum value of the equation on the interval between 0 and 10. In this simplistic example he walks the reader through the GA steps I described in the previous post. The GA hands us a Genome. To make it a little more abstract… the Genome is based on bits (not bytes or floats). These bits represent the X value, but the GA doesn’t even know these bits represent a number or that there are limits. We interpret the bits it sends us as our X value and then tell the GA the Fitness value: zero if X is outside the bounds, otherwise the calculated Y value. By evolving, the GA eventually approaches the answer. From the computer/GA standpoint, you really have to place yourself behind a curtain, not knowing that there is an equation or even that you’re solving math. Besides, in this contrived situation the “equation” goes to zero for anything past X = 10.0.
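A sketch of the "behind the curtain" idea, assuming an 8-bit Genome decoded as X = (binary value) / 16. That gives X from 0.0 to 15.9375, so some bit strings land past 10. These encoding choices are assumptions for illustration, not necessarily the encoding used in Goldberg's book. With only 256 possible Genomes, the last line simply checks them all instead of running a GA, to confirm where the maximum is.

```python
GENOME_BITS = 8  # assumed genome length
SCALE = 16.0     # assumed: X = (unsigned value of the bits) / 16 -> 0.0 .. 15.9375


def decode(bits):
    """OUR interpretation of the GA's bits as X. The GA itself knows nothing about this."""
    value = 0
    for bit in bits:
        value = value * 2 + bit
    return value / SCALE


def fitness(bits):
    """Fitness handed back to the GA: Y = X**10 inside [0, 10], otherwise 0."""
    x = decode(bits)
    if x > 10.0:
        return 0.0
    return x ** 10


def to_bits(n):
    """Helper for the check below: integer -> list of GENOME_BITS bits, MSB first."""
    return [(n >> i) & 1 for i in reversed(range(GENOME_BITS))]


# With only 256 possible genomes we can cheat and score every one of them.
best = max((to_bits(n) for n in range(2 ** GENOME_BITS)), key=fitness)
```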

Let’s up the problem complexity by an example in 3D. Let’s say we have a map of the moon. One slight difference… the craters don’t have rims. Now, if you throw a ball out over this map and it finally settles in the bottom of a crater... Is that the lowest point? No, that is a local minimum. Now, if you throw a hundred balls out, your odds go up that one will be in THE lowest point. Using GA, we’re not only throwing out the original Genomes of the original generation, but as they reproduce, they find local minimums… plus, generate new locations to check as well. It’s both statistically based and randomly based. And that is only in two variables (X and Y) that return Z as the Fitness value.
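The ball-throwing picture can be sketched on a 1-D profile, where a hypothetical list of heights stands in for the moon map. Note this is plain random-restart local search, not a GA: each "ball" rolls to the nearest local minimum, and throwing more balls raises the odds that one lands in THE lowest crater. The GA adds breeding between the good spots on top of this.

```python
import random

# Hypothetical 1-D "moon profile": heights along a line, with several rimless craters.
TERRAIN = [9, 7, 5, 6, 8, 6, 3, 4, 7, 9, 8, 5, 1, 4, 8, 7, 4, 2, 5, 9]


def roll_downhill(terrain, x):
    """Move to the lower neighbour until neither neighbour is lower (a local minimum)."""
    while True:
        neighbours = [n for n in (x - 1, x + 1) if 0 <= n < len(terrain)]
        lowest = min(neighbours, key=lambda n: terrain[n])
        if terrain[lowest] >= terrain[x]:
            return x
        x = lowest


def throw_balls(terrain, count, seed=0):
    """Drop `count` balls at random spots and report the lowest resting place found."""
    rng = random.Random(seed)
    resting = [roll_downhill(terrain, rng.randrange(len(terrain))) for _ in range(count)]
    return min(resting, key=lambda x: terrain[x])
```

A single ball thrown from position 0 settles in the local minimum at index 2; a hundred balls all but guarantee that one finds the global minimum at index 12.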

Now… even our little bot problem is considerably more complex. What happens when the problem has 10D… 100D… or 1 million dimensions? At some point the problem becomes impossible to solve by a brute-force, kinematic/systematic method. The likelihood of boundary conditions, edge conditions, or simply human logic breakdowns and software bugs starts to outweigh the drawback of not fundamentally understanding the statistics and non-linear behavior the Genetic Algorithm represents. The one aspect that is constant… the same Genetic Algorithm that works at 1D should work just as well at 1 million D… it just takes more time to learn. But still far less than the development time for such a complex problem.

Genome Size and Definition

As we saw earlier, the Genome replaces the equation between the inputs (X) and the outputs (Y). No more, no less. The fundamental difference… it figures out what the equations are. We don’t have to.

Getting back to the case at hand, we know what the outputs are. That is the simplest part… our creation moves by the differential speed of its two wheels. So our output consists of two double variables with a range between –top speed and +top speed. My biggest hurdle in understanding the Genetic Algorithm was… how in heaven was I going to tell the GA how well it was doing at choosing the correct speeds???

Fortunately, I had a higher power. Before I came to my own epiphany, the ACAA paper gave it to me. Their robot is fundamentally the same. It is tiny, which makes it easy to control and to tether for power and communications in the laboratory. It also permitted a tiny testing room.

On the downside, it uses short-range sensors. Although the paper does not state the model, hints in it indicate that the range is likely on the order of 12 centimeters. They further stated that because of the limited range of the sensors, the bot could not run at its top speed of 80 mm/s or it would be unable to stop in time to avoid a wall. On the plus side, though… the robot figured out on its own that it could maintain a speed of 48 mm/s and still stop or turn safely!!!

InqEgg is a rocket ship by comparison. It is fully capable of human running speed, but it is currently software-capped at 1200 mm/s (about walking speed). It is also equipped with range sensors claimed to be good to 4 meters. In previous studies here on the forum, we found that claim to be marketing hype to some degree.

Back to the Genome definition. As has been illustrated, the important aspects for this problem are the range sensing devices and the speed of the bot. In our case, we have 8 range sensors and 2 velocity values as input. And there you have it. The problem becomes a matrix multiplication problem…

OutputVelocities[2] = Genome [2, 10] * InputRangesAndSpeeds[10]
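The matrix equation above, sketched in plain Python without NumPy. The assumptions: the GA hands over a flat list of 20 numbers, the first 10 form the left-wheel row and the last 10 the right-wheel row, and the input vector is the 8 range readings followed by the 2 wheel speeds. The function names are hypothetical.

```python
def genome_to_matrix(genes, rows=2):
    """Reshape the GA's flat list of 20 genes into a 2 x 10 matrix (row-major)."""
    if len(genes) % rows:
        raise ValueError("gene count must divide evenly into rows")
    cols = len(genes) // rows
    return [genes[r * cols:(r + 1) * cols] for r in range(rows)]


def output_velocities(genome, inputs):
    """OutputVelocities[2] = Genome[2, 10] * InputRangesAndSpeeds[10]."""
    if any(len(row) != len(inputs) for row in genome):
        raise ValueError("each genome row needs one weight per input")
    # Each output is the dot product of one genome row with the input vector.
    return [sum(w * x for w, x in zip(row, inputs)) for row in genome]


genes = list(range(20))  # stand-in for the 20 doubles supplied by the GA
inputs = [1] * 10        # 8 range readings + 2 wheel speeds
left, right = output_velocities(genome_to_matrix(genes), inputs)
```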

Thus our Genome has 20 double/floating-point variables. We tell the GA about these and it starts supplying candidate Genomes. We know how to determine the speed of the bot and we can read its sensors, so the inputs are defined. We just don’t know what the speeds should be under those conditions. Turning back to ACAA, we find they came up with a Fitness equation that doesn’t take any physics into account, but simply the desired goals of the bot. In their case they identified it in words as:

- We want to maximize speed (well… 80 mm/s worth)

- We want to minimize turning and especially spinning around like a top.

- We want to be as far away from walls as possible.

They came up with a simple equation for this and I’ve changed it slightly based on the sensors we’re using.

$$\Phi = V \left(1 - \sqrt{\Delta v}\right) i$$

Where:

Φ = Fitness value

V = the average velocity of the two wheels = (Vl + Vr) / 2. This is normalized by dividing by the top speed (1200 mm/s for InqEgg). Thus, V ranges from -1.0 to 1.0.

Δv = the difference in speed of the two wheels = |Vl – Vr|. This is also normalized by dividing by 2x the top speed. Because it is an absolute value, Δv ranges from 0.0 to 1.0.

i = the minimum distance as reported by any of the sensors. Note… we don’t even care which side of the bot the minimum distance comes from. The GA just doesn’t know or care. This still makes the hair stand up on the back of my neck. This number is also normalized by the maximum range of the ToF sensor (4 meters for InqEgg). Anything farther than 4 meters is considered to be 4 meters. Thus, i ranges from 0.0 to 1.0.

As we can clearly see, the equation returns a perfect score of 1.0 only if the bot is at the top speed, going in a straight line and with no obstructions closer than 4 meters. Anything less than this condition results in a lower score.
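A sketch of the per-time-slice Fitness equation using InqEgg's numbers (1200 mm/s top speed, 4 m maximum range). The argument order, and passing all 8 range readings so the minimum is taken inside the function, are assumptions for illustration.

```python
import math

TOP_SPEED_MM_S = 1200.0  # InqEgg software cap
MAX_RANGE_MM = 4000.0    # rated ToF range; anything farther counts as 4 m


def step_fitness(v_left, v_right, ranges_mm):
    """Phi = V * (1 - sqrt(dv)) * i for one time slice."""
    v = (v_left + v_right) / 2.0 / TOP_SPEED_MM_S             # -1.0 .. 1.0
    dv = abs(v_left - v_right) / (2.0 * TOP_SPEED_MM_S)       #  0.0 .. 1.0
    i = min(min(ranges_mm), MAX_RANGE_MM) / MAX_RANGE_MM      #  0.0 .. 1.0
    return v * (1.0 - math.sqrt(dv)) * i
```

Because V runs from -1.0 to 1.0, driving backwards scores negatively: `step_fitness(-600, -600, [4000] * 8)` gives -0.5, while full speed in a straight line with nothing within 4 m gives the perfect 1.0.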

So what about Time Slices and Test Durations?

Let’s think about this. We give the GA the Genome size and it starts spitting out potential Genomes (of 20 double variables). We plug this totally random data into our [2, 10] matrix. Remember, the first generation is totally random data in ALL 80 Genomes. We then read our sensors and the speed of our wheels and plug them into the Fitness equation above. On the first step the bot is still at rest, so V = 0, and it doesn’t matter what anything else is… the answer is 0. It will always be zero for the first step of an incoming Genome.

To have the system learn anything, we have to take it out for a test drive with this engine controller data. In the case of a robot (as opposed to other problems given to a GA) we have to drive around for a while. This is the Test Duration, or, as shown on the GUI of the virtual InqEgg, the Av Genome Time. The Time Slice number is also an artifact of running on a microcontroller with commodity sensors and motors: things can only be done so often. In the case of the ACAA robot, they could read new data from all their sensors once every 300 ms. InqEgg can do it every 100 ms. They chose to run each Genome for 80 steps at 300 ms = 24 seconds. Currently, I’ve got InqEgg using 100 steps (100 × 100 ms = 10 seconds), but that is really just to get the virtual program working correctly.

By driving it around, we give it a chance to accelerate and bounce off walls or spin around like a top. So for 100 steps we can sum up the Fitness equation readings at each time slice to get a Fitness value for the entire run. Better yet, I need to take the average so that I can compare it to runs with more or fewer time slices. Note to self: add that feature. This is what is returned to the GA to be compared with all the other Genomes' Fitness values for the mating game to come.
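Why the average is the better number to hand back to the GA: a sketch with two test drives of different lengths. The step scores are invented for illustration, not measured.

```python
def total_fitness(step_scores):
    """Sum of the per-time-slice fitness readings for one Genome's test drive."""
    return sum(step_scores)


def average_fitness(step_scores):
    """Mean per-time-slice fitness: comparable between runs of different lengths."""
    return sum(step_scores) / len(step_scores) if step_scores else 0.0


# Hypothetical test drives. Step 1 always scores 0.0 because the bot starts at rest (V = 0).
run_a = [0.0] + [0.30] * 99  # 100 time slices, mediocre driving
run_b = [0.0] + [0.35] * 79  # 80 time slices, better driving
```

Summing ranks the longer run higher (29.7 vs 27.65) even though the shorter run drove better on average (0.297 vs about 0.346).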

This is also where I decided it was time to make the virtual simulator. The ACAA study requires more than 64 hours to complete the learning using their number of Generations, number of Genomes and Test Duration. To do it on InqEgg, it would be on the same order. With the Virtual InqEgg, the same study can be done in 84 minutes. Besides... not banging off real walls sounds like a good idea. Especially at 1200 mm/s.

If I really wanted to go hog-wild, I could make it multi-threaded and use the GPU and get it under 10 minutes… or if I got real stupid, I might try to justify an AMD 7950X3D with an NVIDIA 4090 and get it under 3 seconds, but I haven’t been hit with the stupid stick enough times… yet. But my old, trusted i7 box Blue Screened on me twice today, so the stupid stick is out!

VBR,

Inq

P.S. – Next, I’ll discuss some of the other things in the GA, and in InqEgg's use of the GA, that I have experimented with or will be experimenting with. We’ll also need to discuss some of the pitfalls of using the Virtual InqEgg to train the “brain” of the Real InqEgg, and attempts to mitigate them.


BTW - If you are one of the casual observers, it would be helpful for me to understand if this topic is of interest to you (even if you read this on the crapper 😆). I tend to write it for myself... like a diary or my notes. I just hope the forum stays around as long as I need to refer back to it to see "my notes". BUT... if my learning about AI helps you (whether you are just casually Inquisitive, want to fully understand the concept, or even want to copy what I've done), it would be nice to hear any questions. Nothing is too Noob. I mentor students and they are afraid to ask questions. I may be talking over their heads and not know it. Even the slightest questions from you help me teach them. Besides, I might discover something just by explaining it out loud like I did above. Hey... if you are more knowledgeable and see something that can help me... let me know. I only have one real book, two virtual books (that I haven't cracked), the ACAA paper and a couple of other links. If you don't have a question, give me a thumbs up just so I know you're out there successfully wasting your idle time. 🤣 Thanks - Inq


I am following most of it with interest, albeit with no plans nor intentions of trying it out.

Anything seems possible when you don't know what you're talking about.


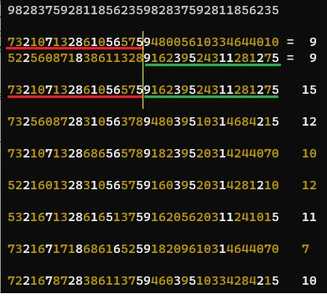

In the image above we have two parents with 9 correct values. A child is produced by crossover, which in this case produces a value of 15. The other five children were produced by random selection of a single number from each parent. I think you can see in cases like this why crossover will converge faster to a solution?

@inq I am a very casual observer. I don't understand anything about it. I don't know what it is for. I assume all the famous robots don't do this since it takes a LOT of computing power. If it is anything to do with AI, I worry for you because I have been having a heck of a time with some sort of AI foisted on me in Windows. Every time I go to type in a search argument the AI starts to take over and I neither want nor need the help.

Don't spend much, or in fact any, time trying to explain it to me; this topic is now 7 pages long, so if it isn't revealing itself to me by now, it never will.

If I ever am foolish enough to try to build an autonomous robot, it will just use the good old Polaroid land sensors and when it 'sees' a barrier, turn right until it isn't there.

First computer 1959. Retired from my own computer company 2004.

Hardware - Expert in 1401 and 360, fairly knowledgeable in PC plus numerous MPU's and MCU's

Major Languages - Machine language, 360 Macro Assembler, Intel Assembler, PL/I and PL1, Pascal, Basic, C plus numerous job control and scripting languages.

My personal scorecard is now 1 PC hardware fix (circa 1982), 1 open source fix (at age 82), and 2 zero day bugs in a major OS.

I assume all the famous robots don't do this since it takes a LOT of computing power. If it is anything to do with AI, I worry for you because I have been having a heck of a time with some sort of AI foisted on me in Windows. Every time I go to type in a search argument the AI starts to take over and I neither want nor need the help.

Don't spend much, or in fact any, time trying to explain it to me; this topic is now 7 pages long, so if it isn't revealing itself to me by now, it never will.

I understand what you mean. There are some things I really don't want help with.

I know most people don't want to rush out and make a bot, but I do have to at least try to explain it so you grasp the potential. 😆 Even if you think you can't get it... I'm pretty sure you can.

The compute time is pretty minimal... at least at this level. Now at the Boston Dynamics or Tesla robot level, I'm sure it's quite extensive, and I'd bet money they both use this. The compute time is all in the learning. Once all of the above has come up with a solution, the bot can run easily on that answer. I'm sure you're familiar with matrix math, so for an obstacle-avoidance bot it becomes just the matrix equation above. The steps would simply be...

- Read 8 ToF sensors

- Read 2 velocities from the DC motor encoders (or get them from the stepper motors)

- Put the 10 values in a vector

- Multiply by the Genome Matrix

- Get 2 outputs that you use to supply the new speed settings to the motors

THAT'S ALL. Rinse and repeat forever. The bot moves around and avoids hitting things. No if/then logic like... if sensor 3 has a reading so and so on the left, adjust motor speed by X, but only if sensor 5 isn't maxed out... blah, blah... IOW none of that coding is required. Just the one Matrix math statement.
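The run-time steps listed above, as a sketch. `read_ranges`, `read_speeds` and `set_speeds` are hypothetical hooks standing in for whatever the real sensor and motor code is, and the clamp to ±1.0 (normalized top speed) is an added safety assumption rather than decision logic.

```python
def clamp(value, limit=1.0):
    """Keep a motor command within -limit .. +limit (normalized top speed)."""
    return max(-limit, min(limit, value))


def control_loop(genome, steps, read_ranges, read_speeds, set_speeds):
    """Run a learned 2 x 10 Genome: sense, multiply, act. Rinse and repeat."""
    for _ in range(steps):
        inputs = list(read_ranges()) + list(read_speeds())  # 8 ToF + 2 wheel speeds
        left, right = (sum(w * x for w, x in zip(row, inputs)) for row in genome)
        set_speeds(clamp(left), clamp(right))


# Usage with stand-in hooks that just record the motor commands.
commands = []
control_loop(
    genome=[[0.1] * 10, [0.2] * 10],
    steps=3,
    read_ranges=lambda: [1.0] * 8,
    read_speeds=lambda: [0.0, 0.0],
    set_speeds=lambda left, right: commands.append((left, right)),
)
```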

Let's ask @robotbuilder... How many lines of code do you have in your first principles robot? Are there videos here on the forum showing it moving around? The search engine here isn't working, so couldn't find any. Sorry!


🙂

If you want to search a forum use Google and include the name of the forum. "dronebot forum".

You have to sign up with Google to post a video, which I have avoided doing.

I have posted some still images to the forum.

There aren't any other members here interested in bouncing ideas around for building and coding a "real" robot so I have mainly taken an interest in their projects.

Machines that can evolve and learn have interested me for decades, but I don't see them as a practical solution, only as a research project.

"Behind all the hype and excitement of machine learning and creating a complete AI, there is almost always some GOFAI in the background. In one form or other, GOFAI is a necessary part of an AI solution to get the full job done."

https://www.teradata.com/Blogs/AI-without-machine-learning

I have been working on another medium-sized robot base, but sourcing affordable medium-sized geared motors has been an obstacle, so I have been limited to playing with code on those tiny robot-vacuum motors that come with geared wheels and encoders. Other, more important family financial responsibilities take precedence.

Hi @robotbuilder and @Inq,

Thanks to both of you for your replies. I am still re-reading & cogitating some of Inq's, so for now, can I just say thanks, for the time and effort you have taken; it is much appreciated.

I am going to refer to a specific small but vital part of Inq's explanation, which seems to me to overlap with Robotbuilder's input.

It will take a lot longer to spot and appreciate some of the many sub-plots to the whole of Inq's story.

-----------

Regarding Robotbuilder's "Think of it as puzzle game. .." contribution, I think it encapsulated the reason I asked one of the main questions, namely how is 'Fitness' defined? The snippets of examples on training various AI machines I have glanced at, all tend to use the same type of examples, and all too often, the same data sets.

The first one that comes to mind, is the recognition of the set of handwritten digits 0 to 9, which is trotted out like Hello World or Blinky, as an example of training a machine. Now, like Blinky, in terms of explaining the maths/code of the machine, and ensuring the machine has been correctly coded and installed, this may be fine, but because the relationship between each image to be recognised and the 'correct answer' is trivially easy to convey to the machine, it sidesteps how to approach a more complex task, even if the main underlying computational machinery is capable of doing either task without change.

To illustrate, an even simpler case from the point of view of defining 'success' or 'fitness' occurs when the data set is a series of images, each of which has a photo of a cat or a dog, and the machine must decide which one it is for a hitherto unseen image. In this case, success is a simple yes or no, with the machine only having two choices. On average, the 'randomised and untrained' machine might expect to be right about 50% of the time, so even with a modest number of training images, it will have a fair number of successes and failures to 'tune' its parameters and improve its success rate.

The handwritten digits presents the machine with 10 options to choose from ... a wider choice than just cat or dog, but still a small number.

The Mastermind game similarly has only a small number of options for each peg. Having several pegs in each attempt also helps the machine, since several pegs are checked in parallel in each attempt and success is graduated, albeit more obscurely than in the handwriting case.

--------

Now contrast that with Inq's 'dream' of a robot that can be asked to go to the fridge, to fetch a beer .. which might have a simpler forebear that only attempts to navigate its way to fridge.

So how would a function indicating success be defined, without defining a specific route?

The simplest would be a "Is bot in front of fridge?" Yes or No. But assuming the path to the fridge is not a trivial straight line, the number of alternatives to choose from at each move is typically large, and hence the number of possible routes can easily become vast. The problem has 'shades' of the travelling salesman problem. ( https://en.wikipedia.org/wiki/Travelling_salesman_problem)

Thus it typically takes a long time to find the fridge, because there are so many ways to fail. Also, even if the bot succeeds in finding the fridge from one position, this 'learning' would probably be of almost no value for finding the fridge if restarted from a different position. Plus, unless some 'pruning' mechanism is included, many routes would probably include a large number of redundant moves.

Obviously, with enough attempts, it may succeed in being able to work out a route from any starting position, but it now has the 'feel' of the infinite monkey theorem ( https://en.wikipedia.org/wiki/Infinite_monkey_theorem), which is probably even more compute/time expensive than the travelling salesman, which is confined to the limits of a road (or rail) system.

------

Thus, to 'educate' the machine in a realistic time, it seems likely that 'success' will need to be indicated in a more graduated way, so that incremental moves in the right direction are in some way shown as a 'reward', but without defining an actual route. How that is achieved is not obvious to me.

------

My first impression of reading Inq's replies, is that the goal/success criteria has been 'simplified' to one of maximising speed, whilst maximising the distance from the walls. Intuitively, for a square room, this suggests it might find a smooth route, like a circle whose diameter is the 'best compromise' between keeping away from the walls and going as fast as possible around a curve, but more complex paths like a square with filleted (rounded) corners, or even a figure '8' might also emerge.

In a 'maze' world, this might be successful in finding a way out, but I wonder how it will get on in a more arbitrary world.

However, it is an interesting challenge to develop the underlying machinery.

-----------

As a 'casual observer', the initial thing is to attempt to interpret what the simulation (or real bot) is showing, and to do that, it seems vital to know what the 'fitness' criteria is.

Thanks and best wishes to all, Dave

@inq AH, Matrix Math is something I understand and have done when I started a game company. We were only a few months into it and I had got as far as being able to fly a 'thing' around in 3D space. Then DOOM hit the market and when I read how they did it and what they used to do it decided it was too big a mountain for 3 guys working after work on weekends to take on. Now I will have to re-read all your posts for InqEgg to see what I can understand.


Well... that would put you to sleep for sure! I think the key point is that the Genome is a matrix that transforms the sensor inputs into command outputs... once the learning process is over. All those Boston Dynamics and Tesla Bot videos are after all the learning is done. The MPU processing required at run time is negligible... FAR LESS than detailed physics with a bunch of if/then logic. The lowest Arduino could run the bot once it was given the "learned" Genome Matrix.


The first one that comes to mind, is the recognition of the set of handwritten digits 0 to 9, which is trotted out like Hello World

This is very true and sent me on a tangent. I think I discussed that in @thrandell's Genetic Evolution thread. The digits problem led me to believe there were two potential methods to solve an AI problem: Genetic Algorithm (GA) and Back Propagation (BP). BP is completely different in that it is based on Calculus... no random generation of anything. HOWEVER, you must know what the answer is. In the case of digits we do: the image is a 1 or a 7.

GA allows problems to be defined more qualitatively, by inference. In the bot's case, the outputs are two speeds, and we can't tell the learning process what those speeds must be. But we can tell it what effect we want to achieve (go fast in the straightest line that doesn't bang into something) instead of something like (left wheel 23.58 mm/s and right wheel 72.32 mm/s).

My first impression of reading Inq's replies, is that the goal/success criteria has been 'simplified' to one of maximising speed, whilst maximising the distance from the walls. Intuitively, for a square room, this suggests it might find a smooth route, like a circle whose diameter is the 'best compromise' between keeping away from the walls and going as fast as possible around a curve, but more complex paths like a square with filleted (rounded) corners, or even a figure '8' might also emerge.

My expectations are based on physics and logic as well. That is why I'm starting out using two room sizes. With sensors good out to 4 meters in a 15m X 15m room, I'd expect it to opt for a square with fillets. But, at the moment, it doesn't have the concept of skidding due to centrifugal forces, so I don't know what will come out. I also run it in a 1.5m x 1.5m room where it always sees the walls. In this, I'm guessing a circle path. I could do the calculations and may still do them for S&G to optimize between bigger circle going faster or tighter circle being further away from the walls.

I don't know what will come out of it or if that will be transferrable to the real bot. We'll see. It's like a real science experiment where you don't know the outcome... unlike when we were taught and know what happens when we mix two chemicals. I really like this unknown aspect... and theorizing what to do to improve in the next experiment toward the end goal.

Thus it typically takes a long time to find the fridge, because there are so many ways to fail.

Well... no one can be blamed for not knowing my big picture. I have stated it or parts of it many times in many threads, but unless you kept notes, there is no way anyone would put all those pieces together.

In my way of thinking, I always fall back to how we learn, from birth through our day-to-day ways now. Does this video remind you of a baby in a crib, waving around, then later on the floor shaking its limbs, finally pushing up, crawling, falling, and trying again?

I see this InqEgg, obstacle avoidance as being the equivalent (with the best bot building skills I can muster).

Now contrast that with Inq's 'dream' of a robot that can be asked to go to the fridge, to fetch a beer .. which might have a simpler forebear that only attempts to navigate its way to fridge.

So how would a function indicating success be defined, without defining a specific route?

The simplest would be a "Is bot in front of fridge?" Yes or No.

Again, I fall back to how does a human do it. My game plan follows that.

- I wake up... say from falling asleep at half-time because the talking-heads bore me to tears.

- Where am I? I've forgotten, since I was asleep.

- I look around and realize, I'm in a recliner watching... oh yeah... Football is back on, I'm in the living room.

- Oh, my beer is warm and since only Englishmen drink warm beer 😋 (I understand this has something to do with Lucas refrigerators) I get up and go to the fridge to get a cold one. My fridge is designed by Koreans and built by Chinese so it keeps things cold.

Now, what things did I do to accomplish my goal???

- Well... I've already achieved the getting up, walking and obstacle avoidance aspects a long time ago and InqEgg will be doing those sometime soon... hopefully faster than I did.

- I remember my house. I learned my house by walking around in it and memorizing the floorplan when I considered buying it. You might recall I said I want to do mapping of a house: the robot will move around, scan the walls, and use its other sensors for orientation and location to build a 2D map. This will likely be with the addition of a RaspPi w/ a big SD card in a next bot, say... InqLarva or better yet InqWorm. I see this phase being a separate GA learning process to follow along a wall instead of avoiding walls. It will have the added aspect of a higher-order process over it that stops every so often to scan around and do the mapping. I don't see this as being part of the GA. I see it as keeping track of how far the GA portion has moved and interrupting it to scan and map. It would then return control to the GA wall-following routine, and so on until it has scanned the entire house.

- I recognized where I was in my house. The bot will boot up, scan around, and compare what it sees to the map from step 2. It will statistically decide where it is.

- It builds a plan to head to the fridge.

- It kicks-off the plan avoiding any new obstacles.

- It continues to scan to confirm that it guessed correctly where it started and adjusts the plans accordingly if it was wrong.

- It arrives at the fridge.

For now, I'll forgo teaching it the aspects I used for acquiring a taste for beer. 🍻 Otherwise, I might not get back in time for the game.

VBR,

Inq
