Still no AI!

This is totally just RC control. I wanted to try some new things out with InqEgg that were problematic with Inqling Jr.

- Inqling Jr.'s stepper motor driver was optimized for move distance. You told it exactly how far to move and it was good to fractions of millimeters. InqEgg will be AI controlled and should take variability into account. Therefore, I optimized the driver for speed settings. (Aside: the AI is able to compensate for mismatched DC motors and still optimize the robot for traveling a straight line.)

- Stepper motors do have an idiosyncrasy that DC motors don't have. You must set the maximum current the driver will deliver to the stepper motor. This has good points and bad points. The bad point is that once it hits that maximum current, it will deliver no more, and current equates to torque. So if I ask it to accelerate too much, one side or the other will start skipping steps and the bot will start turning around in circles. On the good side, I can reduce the current requirements and thus extend battery life.

- An interesting science project will be to see if the AI can be trained to accelerate at the maximum rate the bot can handle without asking too much and spinning out. This should be just as possible as having it balance two DC motors.

- I also added code to limit the top speed and have set it to 1200 mm/s, or about walking speed. Inqling Jr. was capable of double-digit mph speeds, but it wasn't controllable. I want to give the AI a fighting chance.

- Inqling Jr. was very touchy when turning. I added code to restrict turning rates. It's a first cut, and I already have ideas on how to improve it.
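A minimal sketch of how a top-speed cap and a turn-rate cap might be applied to a pair of wheel-speed commands. The actual InqEgg code isn't shown here, so the function name `limit_command` and the 300 mm/s cap on the wheel-speed difference are hypothetical; only the 1200 mm/s top speed comes from the post. For a differential-drive bot the turn rate is proportional to the difference between the two wheel speeds, so capping that difference caps the turn rate.

```python
MAX_SPEED_MM_S = 1200.0     # top-speed limit from the post
MAX_DIFF_MM_S = 300.0       # hypothetical cap on (right - left) wheel speed

def clamp(x, lo, hi):
    return max(lo, min(hi, x))

def limit_command(left, right, max_speed=MAX_SPEED_MM_S, max_diff=MAX_DIFF_MM_S):
    """Return (left, right) wheel speeds in mm/s after limiting turn rate and top speed."""
    forward = (left + right) / 2.0     # straight-line component
    half_diff = (right - left) / 2.0   # turning component (turn rate ~ right - left)
    half_diff = clamp(half_diff, -max_diff / 2.0, max_diff / 2.0)
    # Reserve headroom for the turn so neither wheel exceeds the top speed.
    headroom = max_speed - abs(half_diff)
    forward = clamp(forward, -headroom, headroom)
    return forward - half_diff, forward + half_diff

print(limit_command(-1200.0, 1200.0))  # spin-in-place request -> (-150.0, 150.0)
```

Reserving headroom for the turn is one design choice among several: it keeps the requested turn intact at top speed instead of letting one wheel saturate and silently change the turn.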

Anyway, here's a video of InqEgg driving around via RC. I'm currently working on the GA software.

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

Now... if you're using "map" the way I mean it, as in mapping a house/building... I see that as coming entirely after it has done all the learning of how to get around. The learned ANN wouldn't know or need to know anything about the building. It's merely going to be about how to move around, avoid obstacles and follow walls to gather data.

If your ultimate aim is to gather data (to make a map?) then I will be interested to see if you can evolve a network capable of executing that in a methodical or complete way.

I assume here it is more about the fun of seeing if you can evolve a network capable of doing something without being told exactly how to do it by giving it feedback in the form of a fitness value.

Yes, this latter one. This bot is not expected to do the building mapping. This is just a test bench to study Back Propagation and Genetic Algorithms. With Back Propagation, I want to study various numbers and sizes of hidden layers, since there doesn't seem to be any literature on sizing those. The Genetic Algorithms still seem rather inefficient with all this translating between the mathematics required to actually run the bot and the string manipulation used for the actual evolution. I have an idea to merge the two so it won't require the back-and-forth translation. I'm also curious about the one-point crossover that Goldberg and ACAA both use. For all the trouble they go to to mimic biological evolution, this one aspect is a slap to Darwin. I want to compare the one-point crossover method to something I've been working on that is more consistent with biology... mixing of the parents' genomes.
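For readers unfamiliar with the two crossover styles being compared, here is a sketch in Python. `one_point_crossover` is the textbook single-cut version Goldberg describes; `uniform_crossover` is only one possible reading of "mixing of the parents' genomes" (each gene independently taken from either parent) and is an assumption, not the author's method, which isn't described here.

```python
import random

def one_point_crossover(mom, dad, rng=random):
    """Goldberg-style: cut both parents at one random point and swap the tails."""
    assert len(mom) == len(dad) >= 2
    cut = rng.randint(1, len(mom) - 1)      # cut strictly inside the genome
    return mom[:cut] + dad[cut:], dad[:cut] + mom[cut:]

def uniform_crossover(mom, dad, rng=random):
    """Assumed 'mixing': each gene comes from either parent with probability 0.5."""
    assert len(mom) == len(dad)
    child1, child2 = [], []
    for m, d in zip(mom, dad):
        if rng.random() < 0.5:
            child1.append(m)
            child2.append(d)
        else:
            child1.append(d)
            child2.append(m)
    return child1, child2

rng = random.Random(7)
mom, dad = [0.0] * 6, [1.0] * 6
print(one_point_crossover(mom, dad, rng))  # one contiguous run from each parent
print(uniform_crossover(mom, dad, rng))    # genes interleaved from both parents
```

With one-point crossover, genes that sit next to each other in the genome tend to be inherited together; uniform crossover removes that positional bias.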

So... again, the end-goal of InqEgg is solely studying many different things with AI with the leaning toward robot motion. I would like it to get to the point like the quad-ped robot that learned to walk on its own and then on to a bi-ped with the same goal having to refine balance to a very high degree.

I completely agree with you that a direct, sensor input to kinematic/dynamic engine to motor control can easily be done for a simple 2-wheel robot. However, I do not see that logic flow being possible for a bot with limbs having many DoF. In theory the same exact AI engine that runs the simple 2-wheel bot should handle a bi-ped. It just requires more inputs, more outputs, larger matrices, more CPU performance and more time to learn. (Although the quad-ped learned in one hour I believe). I'd rather fully understand AI methods and lock the bot in a room and tell it to come out when it can open the door itself. 😆 😉

All a bit heavy duty and time consuming for me 🙂

I guess you have been looking at how others have applied GA to their robots.

This uses reinforcement learning.

https://towardsdatascience.com/evolving-a-robot-to-walk-using-python-83417ca3df2a

https://github.com/shepai/Biped?

We're certainly not talking about the same thing here. These bots are not much better than wind-up toys of the '60s. They depend on static stability. I'm talking about at least a child height, bi-ped bot equivalent to at least... Boston Dynamics Atlas requiring active control just to balance and able to get up on its own if it falls over... that can adjust to any terrain from hills, gravel to nice smooth floors.

If you think you can hard-code a sense-and-react design, I encourage you to try. I feel quite certain that no single person, no matter how much education and experience, could succeed in that venture in their lifetime, no matter how much they can scarf off the Internet. Not even a Boston Dynamics or Tesla robot engineer. That's why they're using AI and still have huge teams of engineers. Compared to that, the 4 hours I might spend getting a C version from the Internet working on the MPU, or even the 40 hours I might need to write one from first principles, seems trivial. I don't expect to succeed even with such an engine working. I firmly believe my failing will be on the hardware side... getting actuators strong enough and power density high enough to run such a beast.

As mentioned above, I see InqEgg as simply the first stage in that path. But, I also believe the software engine will largely remain unchanged from InqEgg to a quad-ped or bi-ped. It will just require more inputs, outputs and time for it to learn.

We're certainly not talking about the same thing here.

I think we are. The link was about coding hill-climbing algorithms; how complex the task is doesn't matter, except of course that the more complex it is, the more components it needs to work and the more time it takes to climb that hill. Dynamic stability requires a fast enough response before the system tips over into an unrecoverable state (falling with no way to stop it). Getting up again requires a learned sequence of changes in the servo positions while making dynamic balancing adjustments. Another requirement is goal seeking, added to the ability to ambulate in order to achieve that goal. A popular task is a game of soccer.

With these robots their state is defined by a list of servo positions and tilt values and maybe even pressure sensor values in the feet. For any given state there is an action to move it to another desired state. Learning what action to apply to any of the possible states is the trick. But it all comes down to a list of input states and an associated list of actions to change one state into another state.

A trained neural network "simply" maps a particular input state to a particular output state, just as, in a simpler example, a full adder maps an input state into an output state. Methods have been developed to move the initial random connections toward connections that are closer to the desired input/output behavior. One thing a neural net can give you is not an exact answer, "this IS a cat", but rather "given past examples this is 90% similar to a CAT". This is the same as in a hard-coded example where you count how many features this new input has in common with the features in some training set. The problem with the NN is that we don't know what features it has randomly hit upon.
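To make the full-adder comparison concrete, here is a tiny threshold network, sketched in Python, that maps the adder's three input bits to its sum and carry bits. The weights and thresholds are picked by hand here, not learned; training is the process of searching for weights like these automatically, and in a trained network nobody decides in advance what each hidden unit means.

```python
from itertools import product

def step(x):
    """Threshold activation: 1 if the weighted input reaches 0, else 0."""
    return 1 if x >= 0 else 0

def full_adder_net(a, b, c):
    """Two-layer threshold network reproducing a full adder's truth table."""
    # Hidden layer: weight 1 on every input, biases -1, -2, -3.
    h1 = step(a + b + c - 1)   # at least one input is on
    h2 = step(a + b + c - 2)   # at least two inputs are on
    h3 = step(a + b + c - 3)   # all three inputs are on
    # Output layer.
    s = step(h1 - h2 + h3 - 1)   # on when an odd number of inputs are on
    carry = step(h2 - 1)         # on when two or more inputs are on
    return s, carry

for a, b, c in product((0, 1), repeat=3):
    print(a, b, c, "->", full_adder_net(a, b, c))
```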

If the robot is lying down the servo motor values might have the same values as when standing up. However the sensors that show the vertical orientation are different thus the states are different. So you have to put the servo positions through a series of changes to get the vertical orientation sensors to return the desired values to the standing state.

The way I got to think about these things came from a book I bought decades ago, "An Introduction to Cybernetics" by Ross Ashby.

I have found these little soccer bots enchanting to watch, with their awkward movements like little toddlers who are still learning to walk properly. I see they use vision to find the ball.

Yes, I've seen those and yes, I think they're really cool and quite sophisticated!

But this is a perfect example... of why I am confused by your well-established position that you could ever get near that level of bot using direct, hard-coded kinematics and dynamics and direct sensor-to-motor software. They had a team of physicists and developers, and they still chose AI methods and still took grundles of time.

Even though I have most of the requisite background in physics, engineering and software development, I wouldn't even start up that Everest of a direct method. There aren't that many decades left in my life. And why would anyone put themselves through that when the ant-hill-sized AI solution can accomplish the same end result?

With regards to wall following, obstacle avoiding, house mapping and navigating we already know how to hard code those things. The latest vacuuming robots do it every day. However as a demonstration of what a neural net can do then evolving a wall avoiding "brain" is a fun and educational project and it will be interesting to see how far you can go with it.

With the soccer robots in the link it was a little bit more involved than feeding data into a single neural net and magic happened.

I think I could have hard coded a practical wheeled robot to "fetch a beer" but one that could run about and play soccer using neural nets is something beyond my ability or understanding.

So don't misunderstand me I am cheering you on 🙂

Discussing in another thread... I see I have neglected a price break-out. Here is a spreadsheet of the pieces I purchased on Amazon in the US. Obviously, prices will differ all over the world, but I see a lot of you buy directly from AliExpress/Banggood/etc and get it far cheaper than these prices. I also buy in quantity so the links provided on the spreadsheet might have multiple extras to let the smoke out of or to make a future bot... Always my philosophy. However, the prices are based on the itemized part price.

- Total comes to $84 buying everything.

- If you're willing to scrounge Li-ion 18650 batteries from, say, a laptop, the total drops to $60.

- The next really expensive part is the 8x8 ToF sensor at $25, which I'm not fully utilizing in the obstacle-avoidance portion of the project. There might be cheaper options for that sensor that could bring the total under $40.

Reading through all this about AI and the Genetic Algorithm (GA), and actually writing the code to run on the ESP8266 and the PC, I came to realize some of the pitfalls. It takes many hours or days for a real robot to run through all the Genomes of all those generations. If I want to experiment with various software settings, learning algorithms, Genome sizes, Population sizes and Generation counts, and if I have to restart a learning process over and over, I'm looking at months of watching a bot turn in circles and run into walls until it gets out of the stupid child phases. My TODO list is long. Also... there is the sheer damage that might be caused. Whereas the ACAA paper's bot ran at a top speed of 48 mm/second, that is just entirely too slow for me. I've set a tentative top speed of 1200 mm/second, and that is merely a software limit. That's another TODO list item. 😆
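A back-of-the-envelope way to see why physical training gets expensive: total wall-clock time is population × generations × time per trial. The numbers below are hypothetical; the 80-genome population matches the one used later in the thread, while the trial length, reset time and generation count are made up for illustration.

```python
def training_hours(population, generations, seconds_per_trial, reset_seconds=0.0):
    """Hours for a physical bot to evaluate every genome of every generation once."""
    seconds = population * generations * (seconds_per_trial + reset_seconds)
    return seconds / 3600.0

# Hypothetical run: 80 genomes, 100 generations, 10 s trials, 5 s to reposition the bot.
print(round(training_hours(80, 100, 10.0, 5.0), 1))  # prints 33.3
```

Every restart to try a different Genome size, Population size or setting pays that cost again, which is the argument for a simulator.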

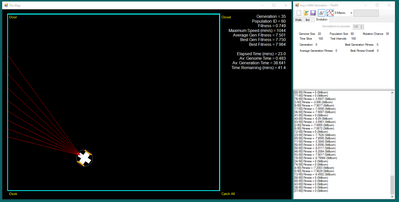

Anyway... I decided it might be prudent to make a virtual version of InqEgg. The following video shows my first cut at a Genetic Algorithm for a robot learning in the virtual world. My theory (my hope) is that I can at least get it through the stupid spinning-like-a-top and running-into-walls phases, and that I might then pre-load a pretty good generation into the bot to start from. The only limiting factor for that theory is how well I can model the real world... in the virtual world. Since this bot is pretty simple, with 8 ToF vision rays, all I really need to model on that end is when those rays hit a wall. Pretty easy with geometry and graphics. The second part of the equation is how closely I can simulate the motors' responses. They can't instantly go from 1200mm/s to -1200mm/s, so I've added at least the concept of inertia, and we'll just see if the GA can learn how to drive it around.
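Both modelling pieces described above can be sketched in a few lines of Python: casting one ToF ray against straight wall segments, and an acceleration limit standing in for inertia. This is not the author's C# code; the function names, the 4000 mm maximum range and the acceleration value in the usage example are assumptions.

```python
import math

def cross(ax, ay, bx, by):
    """2D cross product (z component)."""
    return ax * by - ay * bx

def ray_distance(ox, oy, angle, walls, max_range=4000.0):
    """Distance (mm) from (ox, oy) along `angle` to the nearest wall segment."""
    dx, dy = math.cos(angle), math.sin(angle)   # unit direction, so t is a distance
    best = max_range
    for (x1, y1), (x2, y2) in walls:
        ex, ey = x2 - x1, y2 - y1
        denom = cross(dx, dy, ex, ey)
        if abs(denom) < 1e-12:
            continue                            # ray parallel to this wall
        wx, wy = x1 - ox, y1 - oy
        t = cross(wx, wy, ex, ey) / denom       # distance along the ray
        u = cross(wx, wy, dx, dy) / denom       # position along the wall, 0..1
        if t >= 0.0 and 0.0 <= u <= 1.0:
            best = min(best, t)
    return best

def step_wheel_speed(current, command, max_accel, dt):
    """Move a wheel speed (mm/s) toward its command, limited by max_accel (mm/s^2)."""
    max_change = max_accel * dt
    return current + max(-max_change, min(max_change, command - current))

walls = [((0, 0), (2000, 0)), ((2000, 0), (2000, 2000)),
         ((2000, 2000), (0, 2000)), ((0, 2000), (0, 0))]   # 2 m square room, in mm
print(ray_distance(500.0, 1000.0, 0.0, walls))   # ray pointing +x: prints 1500.0
print(step_wheel_speed(1200.0, -1200.0, max_accel=4000.0, dt=0.1))
```

Calling `ray_distance` once per ray angle gives the 8 readings; calling `step_wheel_speed` once per wheel per time step means a full reversal from 1200 to -1200 mm/s takes several steps instead of one.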

If I want to experiment with various software settings, learning algorithms Genome sizes, Population sizes, Generation counts and if I have to restart a learning process over and over, I'm looking a months of watching a bot turn in circles and running into walls until it gets out of the stupid child phases.

And you may have to restart the learning (evolving) process every time you change the physical make up of a robot such as the types and distribution of sensors, neural nets and motors used.

[snip insights provided by biological evolution, which are probably boring 🙂 ]

Anyway... I decided it might be prudent to make a virtual version of InqEgg.

This gives you the opportunity to get something working with the simulated InqEgg and then test how useful the simulation has been when you transfer the code to the physical InqEgg. A simulation is often a simplification of real world physics and the differences may be important.

There are simulations that can be used to learn how to use ROS, including 3D simulations. 3D simulations may be required for a wider test of your TOF sensor?

My other thought was having two more TOF sensors on either side of the robot? With only a forward view there may be things that would evolve faster if side views were part of the input. Otherwise it might have to evolve a look right and left and use the resulting data to determine the next motor action.

Hi @inq,

At present I am just a 'casual observer' of your simulation, and I am drowning in some of the terms which will be obvious to anyone involved more deeply, so I hope you won't mind if I ask a few top-level 'stupid' questions to try to get to an overall picture .. with apologies in advance for when you have already stated the answer or it is in one of the references that I haven't read.

Could you please clarify or correct my guesses, and fill in the unknowns:

- Time step ... In principle, InqV(irtual) (apologies if that is the wrong name) is continually "moving" (sometimes with a velocity of 0), and hence its coordinates, etc. are continuously changing. As this is a digitised simulation, it is impossible to perform a continuous simulation; rather, it simulates snapshots, like frames of movie film. So "Time Step = 100" implies the time between any two frames is 100 millisecs.

- Each frame, or Time Step, means calculating a new set of coordinates, velocities, distances, etc. at that instantaneous moment.

- Genome size ... this is the number of input data values to the "AI machine".

- Genome Size = 20, implies, once per Time Step, 20 (floating point of some flavour) values are sampled.

- e.g. 8 of the values would be distance measurements from the Lidar sensor, looking horizontally at each of the 8 different forward facing angles.

- Fitness - I have never played Doom or the like, but it seems common for the lead character to have a parameter like 'Health', which reduces with events like being shot, and the game ends when Health goes to zero (or negative), signifying death.

- Fitness seems to be such a value, but, assuming InqV is not being shot at, how is it determined?

- Also, is the Fitness input to the AI as one of the 20 genome values or does it have a special input route?

- Generation and Population ID are not clear.

- From my oversimplistic Darwinian viewpoint, I guess the Population is the number of individuals alive at any one moment in time, and Generation is a time snap for the average lifetime/lifecycle of an individual, with the implication that each generation inherits the 'characteristics' needed to be successful from preceding generations, as well as having the opportunity to introduce and/or change characteristics, at least by a modest amount, to see if it fares better. How this relates to InqV I am not clear.

- Do subsequent InqV Generations inherit any characteristics from previous ones?

- The simulation only shows one InqV at a time, but you could have (say) 100 generations, with (say) 50 InqVs 'alive' at any one time, and each of the 50 would spawn a new offspring one at the end of its 'life' with characteristics inherited from its parent (who presumably dies or retires into oblivion).

- Obviously, something must be done about those whose fitness goes negative before their full lifespan, if InqV isn't to become prematurely extinct.

- Test Intervals - I assume this is the number of Time steps that an individual InqV is observed, unless the individual hits a negative Fitness level first, although the exact definition is somewhat unclear.

- Thus for Test Intervals = 100, the 'success' of a particular InqV is assessed by its Fitness level after 100 Time Steps have elapsed.

So sorry the questions are so naive, but hopefully I am not the only one being so stupid.

Best wishes and thanks in advance, Dave

No, not at all... with the lack of other people posting to the thread besides the ones that know more about this than me, I forget that others might be watching from the sidelines. I should also expect this to get hits from Google searches. So... let's see if I can give an overview. I still get tied up in the terminology, because I don't actually get to use it in day-to-day conversation. 😆 I'll keep it in layman's terms.

Generation and Population ID are not clear.

- From my oversimplistic Darwinian viewpoint, I guess the Population is the number of individuals alive at any one moment in time, and Generation

... Very good. I'll assume the reader (you and others) is somewhat familiar with Darwin Evolution (DA), at least as much as I remember from High School Biology (and I didn't much care for Biology in the classroom). Some of the terms are related to the Genetic Algorithm (GA) and some are related to the nature of the project, InqEgg (IE). I'll try to break it all out for you... maybe jumping back and forth.

- DA - Darwin Evolution

- GA - Genetic Algorithm (as described by Goldberg)

- IE - InqEgg implementation of GA.

I'll start out with GA. This will be the basic version as explained in the Goldberg book. Basically, everything we hear these days about ChatGPT, DALL-E, etc. owes its heritage to this basic version. GA is an attempt to model DA in a computer... not at the level of gene splicing or creating drugs by gene analysis, but at the level of the basic mechanics of Darwin's theory.

Genome - (DA) This is the list of genes in a plant, animal or human body. All cells have this string of DNA in them. It is what constitutes the owner's ability to live in its environment. As history has told us, some Genomes, like those of the Dinosaurs, the Neanderthals and those in the Darwin Awards, led to their death or worse... death of the species. (GA) This is some data that represents an object and how it affects and/or will be affected by its environment. For the object that it represents, it is a fixed length, but it can be made up of bits, bytes, or higher variable types. The GA doesn't really care what the data represents (no more than the Earth cares about us). (IE) This is an array of 20 C# double variables in the virtual version of InqEgg. Once I transplant it into the ESP8266, it will be 20 C++ float variables. I'll explain in another post what this array is and how it is used.

Generation - (DA) Obviously multiple generations of plants, animals and humans live at any one time. (GA and IE) Only one generation is alive at any one time. The mating process that forms the next generation all happens at once: the whole children's generation is birthed and the parents' generation dies at that time. I guess a Mayfly is the model.

Population ID - (DA) There is no equivalent, except maybe our names for individuals. (GA and IE) For computing, it is assumed that there are a certain number of individuals per generation. Goldberg mainly describes a fixed number in the population, but hints that it is not required to be fixed. The Population ID is simply the index of each Genome (array of data) in the array of Genomes that make up a Generation.

Fitness - I've read different names for this, so YMMV depending on your background. (DA) I guess the best analogy is the environment or other creatures and the Genome's (entity's) ability to deal with both the climate and predators. (GA) This is some value that represents how well a Genome is able to achieve the task being modeled. (IE) This is simply a double variable representing how well the Bot did in the environment (Room). The fitness value calculation is not part of GA. It is simply used by GA. It, and the other values you see on the screen and asked about, are totally within the scope of the task at hand. Think of it like this... DA evolution theory doesn't control the environment and predators... but it is solved with their input. I'll describe its calculation in a separate post.
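The terms above map onto very plain data structures. A minimal Python sketch, assuming only the sizes and gene range given later in this post (80 Genomes of 20 genes, each gene drawn from -1.0 to 1.0); `placeholder_fitness` is a made-up stand-in, since InqEgg's real fitness calculation is left for another post.

```python
import random
from typing import Callable, List

Genome = List[float]          # one individual: a fixed-length list of genes
Generation = List[Genome]     # everyone alive at once; list index = Population ID

def first_generation(rng: random.Random, size: int = 80, genes: int = 20) -> Generation:
    """Primordial soup: every gene drawn uniformly from [-1.0, 1.0]."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(genes)] for _ in range(size)]

def evaluate(generation: Generation, fitness: Callable[[Genome], float]) -> List[float]:
    """Fitness per Population ID. The GA only uses these numbers; it never computes them."""
    return [fitness(genome) for genome in generation]

def placeholder_fitness(genome: Genome) -> float:
    """Hypothetical stand-in for the task-specific fitness (not InqEgg's)."""
    return sum(g * g for g in genome)

gen = first_generation(random.Random(42))
scores = evaluate(gen, placeholder_fitness)
best_id = max(range(len(gen)), key=lambda pop_id: scores[pop_id])
print("best Population ID:", best_id, "fitness:", round(scores[best_id], 3))
```

Keeping the fitness function outside the GA code mirrors the point above: the same GA can be reused for any task by passing in a different fitness function.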

Putting It All Together - EVOLUTION

(DA) - Plants and animals are affected by predators and the environment. Some die; some live to reproduce and beget the next generation. Survival-of-the-fittest type story.

(GA and IE) - I'll try to keep this brief so people don't glaze over. The process steps are quite simple. My C# class that does it is about 350 lines, and that includes a bunch of comments, a lot of blank lines for my readability and some code options that Goldberg didn't mention but I want to try out. Also remember, the GA process is generic. This class will solve any GA task set for it. It is not in any way geared to robotics or InqEgg. You'll see why in a moment.

The GA algorithm does not have any concept of what the Genome (array of data) represents or how it is used. It is simply a block of data. With that said, here are the steps for the Genetic Algorithm.

- (GA) Create a Generation array. This is a fixed size. It will hold a fixed, even number of Genomes. (IE) I used 80 Genomes in the Generation array.

- (GA) For each member of the Generation's population, create the Genome data array. In the generalized concept, these can be made up of any variable type. Remember, in (DA) these are made up of a variable with only 4 values (A, C, G, T). Fill this Genome array with random data. Think of it as the primordial soup. It's totally random. (IE) I've decided to model my InqEgg Genome as an array of 20 doubles. I'll use floats on the ESP8266. All 80 Genomes have 20 Genes that are filled using a random number between -1.0 and 1.0.

- Each Genome, in turn, is passed up to the Evolution gods and they return a Fitness value. As this calculation is not part of GA, I'll describe it separately... in another post. But the main point in GA evolution is that the Genomes are ranked by this Fitness value.

- At mating time (all have been ranked) the mating process is described as follows. I'll use a Population count for a Generation of 80 Genomes for talking purposes.

- The sum of all the fitness values is calculated.

- Each Genome calculates its relative strength by dividing its own fitness value by this sum.

- Two Genomes are picked by weighted random number. IOW, for each pick a number is generated between 0 and the sum, and the Genome whose slice of that range (sized by its own fitness) contains the number is picked. IOW... a better Genome is picked more often, but it is still random.

- Two Children Genomes are generated based on merging of the data of the two Genomes. I'll describe this in another post.

- Forty pairs are mated. Each begets two children to get 80 children in the next generation.

- Notes:

- Not every individual necessarily gets picked to mate

- Some individuals may get to mate multiple times

- Even the strongest may not get to mate

- Even the weakest may get to mate.

- It's all statistically random.

- The new Generation returns to step 3 (Fitness evaluation) and Evolution continues until we (the Evolution gods) determine that a Genome is good enough to meet our needs.

That is all there is to the Genetic Algorithm.
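The mating steps above can be sketched in Python as follows. Roulette-wheel selection follows the description (probability = own fitness / sum of fitness). Because the merging of two parents is left for another post, the sketch assumes Goldberg's one-point crossover as a stand-in. It also assumes fitness values are non-negative with at least one positive, which roulette selection requires. As in the step list, there is no mutation step. Function names are hypothetical.

```python
import random
from typing import List, Tuple

Genome = List[float]

def roulette_pick(fitness: List[float], rng: random.Random) -> int:
    """Return a Population ID with probability fitness[i] / sum(fitness).
    Assumes all fitness values are >= 0 and at least one is > 0."""
    spin = rng.random() * sum(fitness)      # a number in [0, sum)
    running = 0.0
    for pop_id, f in enumerate(fitness):
        running += f                        # end of this Genome's slice
        if spin < running:
            return pop_id
    return len(fitness) - 1                 # guard against floating-point round-off

def one_point_crossover(mom: Genome, dad: Genome, rng: random.Random) -> Tuple[Genome, Genome]:
    """Stand-in for the merge step: cut both parents at one point, swap tails."""
    cut = rng.randint(1, len(mom) - 1)
    return mom[:cut] + dad[cut:], dad[:cut] + mom[cut:]

def next_generation(generation: List[Genome], fitness: List[float],
                    rng: random.Random) -> List[Genome]:
    """Mate len(generation) // 2 pairs; each pair begets two children.
    The same Genome can be drawn as both parents or in several pairs."""
    children: List[Genome] = []
    for _ in range(len(generation) // 2):
        mom = generation[roulette_pick(fitness, rng)]
        dad = generation[roulette_pick(fitness, rng)]
        children.extend(one_point_crossover(mom, dad, rng))
    return children

rng = random.Random(0)
parents = [[rng.uniform(-1.0, 1.0) for _ in range(20)] for _ in range(80)]
fitness = [rng.random() for _ in parents]      # placeholder scores
children = next_generation(parents, fitness, rng)
print(len(children), len(children[0]))         # prints 80 20
```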

More to come...

VBR,

Inq
