Good luck! FYI, I'll put my DB1 on hold; I'm interested to see how you progress. I've been following the posts and watching the Henry IX video (and his other videos). At this point I don't know where I should be going: a lot of stuff is coming at me and I'll try to digest it. If you're interested, I'll send you two video clips. One is of an ultrasound robot I built (nothing great, I just followed a tutorial from Parallax); the other is a servo motor hooked up to the sensor like a radar. I was going to add the servo motor to the ultrasound robot but never got around to it. Keep me in the loop.

Adding the ultrasound sensor to the servo is actually the very next video I have to do. My students are going to need that for the in-class lab that starts after this week, so watch for that, if it helps.

/Brian

Can you delete the post that this reply is attached to? It has my email address, and it has served its purpose.

Thanks

I assume you could use the Jetson the way I use the robot laptop to program and test the Mega via a remote PC. The computers on our unmanned explorer space ships and Mars rovers can be reprogrammed remotely. If you put a robot arm on the robot you could manipulate things outside from the comfort of your home.

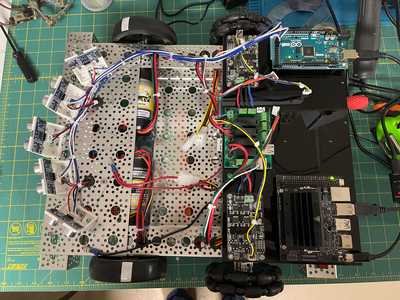

I successfully added a Jetson Nano 2GB to my DB1 chassis, and using TigerVNC I was able to connect to the Nano from my desktop and open the IDEs on the Nano. The next step is to wire the motor drivers to the Mega (I removed most of DB1's boards) so I can test creating a sketch and running the motors.

Very good. So once you have wired up the motors you can get on with writing code so it can move to a designated destination avoiding obstacles along the way? I am still struggling with getting useful results navigating using the wheel encoders.

Yes. I am going to follow the approach for Henry IX in Brian's videos. I've installed the sonic sensors, but I also have a LiDAR and an Intel RealSense camera. I hope to ultimately have several modes of navigation, but that is a long way off. I first need to get the boards wired and test code written.

@robotbuilder @huckohio This is good stuff, Huck; I appreciate the pictures. Like you, I have a fair amount invested in the DB1 and it would be great to walk away from this project with some sense of satisfaction. I'll keep monitoring for now. Huck, are the sensors HC-SR04s with four pins? I do have five of those, but with my little robot I used the Parallax sensors with three pins (see attached). I see you still have the four wheels; any thoughts about a single swivel caster on the rear?

Yes, they are the SR04 sensors.

I hear what Brian is saying about 3 versus 4 wheels, but if the robot is getting constant input from the sensors and making constant adjustments, then the error introduced by the wheel 'drag' would (in my simple mind) be accounted for by the navigation inputs in the 'flow' model. Maybe I'll understand as I get smarter... maybe.

BTW, I did purchase a castor wheel to make the change if/when necessary 🙂.

4 wheels can work, especially if you have the 90 degree mecanum wheels as you do. I have not tried that setup, mostly because the 3-wheel setup works so well and I have not had to "upgrade" it yet, so I have been sticking with what has worked in the past. If there is drag (not saying there will be), it does have an effect. If a wheel slips due to the drag caused by the other wheels, it leads to drift error: the encoder sees the correct number of clicks, but since traction was reduced, the robot did not actually turn the amount the math shows.
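
To make the slip point concrete, here is a minimal dead-reckoning sketch in Python. It assumes a two-drive-wheel layout plus a caster (which is how I read the three-wheel setup); a four-wheel or mecanum chassis like the DB1 has different kinematics. The ticks-per-revolution, wheel diameter and track width are made-up placeholder values. Notice that nothing in the maths can see slip: if a wheel spins without gripping, its ticks still count and the estimated pose still turns.

```python
import math


def update_pose(x, y, theta, left_ticks, right_ticks,
                ticks_per_rev=360, wheel_diameter_m=0.065, track_width_m=0.20):
    """Dead-reckoning update for a two-wheel differential drive.

    All geometry defaults are placeholders. The model assumes the wheels
    never slip, so a slipping wheel still counts as distance travelled.
    """
    dist_per_tick = math.pi * wheel_diameter_m / ticks_per_rev
    d_left = left_ticks * dist_per_tick
    d_right = right_ticks * dist_per_tick
    d_center = (d_left + d_right) / 2.0           # distance moved by the robot centre
    d_theta = (d_right - d_left) / track_width_m  # heading change in radians (CCW positive)
    # Integrate along the mid-point heading of this small step.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    # Keep the heading in [-pi, pi).
    theta = (theta + d_theta + math.pi) % (2 * math.pi) - math.pi
    return x, y, theta


# Equal ticks drive straight; opposite ticks spin in place.
print(update_pose(0.0, 0.0, 0.0, 360, 360))
print(update_pose(0.0, 0.0, 0.0, -100, 100))
```

Call it every time you read the encoders; the smaller the step between calls, the better the mid-point approximation.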

As an aside, if you use a compass or an accelerometer / gyroscope you can try to detect the error this way, or if you have a map or are doing SLAM you can correct against the map as well.
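
One way to try the gyroscope idea: integrate the gyro's yaw rate over a manoeuvre and compare it with the heading change the encoders claim. This is a minimal Python sketch, assuming the gyro reports yaw rate in rad/s at a fixed sample interval; the function names and the 0.05 rad tolerance are hypothetical placeholders you would tune.

```python
import math


def angle_diff(a, b):
    """Signed difference a - b in radians, wrapped to [-pi, pi)."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def gyro_heading_change(yaw_rates_rad_s, dt_s):
    """Integrate gyro yaw-rate samples taken every dt_s seconds (rectangle rule)."""
    return sum(rate * dt_s for rate in yaw_rates_rad_s)


def slip_suspected(encoder_dtheta, yaw_rates_rad_s, dt_s, tolerance_rad=0.05):
    """True when the encoders and the gyro disagree about how far the robot turned."""
    gyro_dtheta = gyro_heading_change(yaw_rates_rad_s, dt_s)
    return abs(angle_diff(encoder_dtheta, gyro_dtheta)) > tolerance_rad


# Encoders claim a 90 degree turn, but the gyro only saw about 69 degrees (1.2 rad).
print(slip_suspected(math.pi / 2, [1.2] * 10, 0.1))
```

The integrated gyro drifts too, so this comparison is only trustworthy over short manoeuvres such as a single turn.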

/Brian

Now I'm kinda curious: has anyone used an optical sensor (preferably IR) or a laser sensor like the one in, or taken from, a computer mouse? I'm thinking of mounting it near the drive wheels, under the chassis. I'm not sure what range they might have, or whether I could use it for error correction or if it will be accurate enough to rely on. I plan on using my robot on slippery surfaces, so my drift concern is more about staying straight. That last post made me realize I won't be able to count on ticks alone.

I would if I thought I had something worthy of a thread. I started building DB1 in hopes of following Bill's tutorials, but that source dried up. I was following Paul McWhorter's threads, but he has stopped posting lessons and is doing 'chats' instead (and moving to Africa). James Burton has an interesting channel, but it's more "look what I've done" than teaching people how to build robots. I liked the approach Bill offered of combining mechanical, electrical, hardware (microcontrollers, etc.) and software into a project (I really like designing PCBs).

I am not an engineer or software programmer, and I do not possess the experience to blaze my own trail. At this point I am a follower and my only vector is what I learn from these videos. When Brian started his thread I was again hopeful that someone would lead us along the road to a successful autonomous robot.

Well, if your project is going to follow the Henry IX build step by step then that makes sense, but I am not sure how much detail Brian is actually going to share with anyone but his students. He did cover some introductory encoder code in the Pololu robot video, but it is rather painful copying the code from the video into the Arduino IDE. As it uses a Pololu header file, I don't know how much it would need to be tweaked to work with your robot.

Brian did write:

I am a huge advocate of open source, creative commons, etc. And if I am spending the time and effort to make the material why not try to bring it to as many people as possible.

So there is some hope on that front but he also wrote,

unfortunately I do not have the time to expand beyond that (videos) for now

So I guess you will be alone for a while.

It seems to me that using rotary encoders to calculate the position of a robot along the path it is currently navigating will only work for short distances. They are great for correcting motor bias over a short run and for calculating distance travelled, but only if the surface is smooth and consistent. As I have posted in the past, I find it's no good for outdoor grass-field robots, and it's also not very accurate indoors when traversing the usual mixture of tile, vinyl, carpet, rugs, etc. Just across the kitchen floor it may be OK.

To get the robot to move along a desired route, the way I seek to go is an accurate positioning system, and by accurate I'm thinking of centimetre accuracy. For outdoor bots we have GPS RTK, which uses both a GPS receiver on the bot and a GPS base station to achieve this level of accuracy. For indoor positioning there are radio beacon solutions such as the one at this link:

https://marvelmind.com/product/starter-set-hw-v4-9-nia/

Whilst I can contemplate the cost of an outdoor GPS RTK setup such as that offered by SparkFun for my outdoor bot (when I get round to building it), the cost of these indoor positioning systems is far more than I want to spend, especially as I'm only looking at an indoor bot to practice with.

So I have been looking to see if there are any reasonably priced solutions for an indoor positioning system. One that may be promising is to use a camera to determine the bot's position, much as a camera can be used for face recognition. I have been looking at OpenCV, where it seems relatively easy to pick out particular shapes or colour regions from the camera input. My idea is to put a coloured disk on the bot: orange, as I have a nice bright orange filament for my 3D printer and not much else of that colour in the room I have designated for my experiments. It just so happens that my conservatory is being cleared for renovation and decoration, and when it's nice and clear I will mount a camera on the ceiling facing down and use its field of view as my experimental positioning system. I hope to be able to use OpenCV to pick out the orange disk on the bot and calculate its screen coordinates. I will then input a target set of screen coordinates as the destination point and navigate the bot there via constant feedback of its current position. OpenCV is free and I already have some spare RPis and RPi cameras, so this is a nice cheapo solution for me.
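
A rough sketch of the two pieces involved, assuming the camera frame has already been thresholded into a binary mask where the orange disk's pixels are non-zero (in OpenCV that would typically come from `cv2.inRange` on an HSV image, with the centroid from `cv2.moments`). To keep it runnable without OpenCV, the centroid is computed by hand here, and the bot's heading is estimated from two successive centroids, which assumes the bot is moving forward between frames. The function names, the 10 px arrival radius and the turn tolerance are hypothetical.

```python
import math


def blob_centroid(mask):
    """Centroid (x, y) of the non-zero pixels in a 2D mask, or None if empty.

    Same result as m10/m00 and m01/m00 from image moments.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count


def steer_to_target(prev_pos, cur_pos, target, arrive_px=10.0, straight_tol_rad=0.15):
    """Decide a turn direction in image coordinates (x right, y down).

    Returns (command, distance_px). 'cw'/'ccw' are as seen in the camera image.
    """
    dx, dy = target[0] - cur_pos[0], target[1] - cur_pos[1]
    distance = math.hypot(dx, dy)
    if distance < arrive_px:
        return "arrived", distance
    # Heading from the last two sightings of the disk (assumes the bot moved forward).
    heading = math.atan2(cur_pos[1] - prev_pos[1], cur_pos[0] - prev_pos[0])
    bearing = math.atan2(dy, dx)
    error = (bearing - heading + math.pi) % (2 * math.pi) - math.pi
    if abs(error) < straight_tol_rad:
        return "straight", distance
    # With y pointing down, a positive angle is clockwise on screen.
    return ("cw" if error > 0 else "ccw"), distance
```

Whether "clockwise on screen" means left or right for the bot depends on how the ceiling camera is mounted, so that mapping is worth checking once with the real setup.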

Whilst some initial playing with OpenCV, waving some 'blobs' about in front of a webcam on my Mac, was encouraging, there is a long way to go to see if this approach is going to work, and my experiment room won't be ready for some time, as I'm waiting for tradesmen to do some renovation work once the pandemic shutdown is over and normality resumes.

There are other ways of getting the position of the bot and finding its direction of travel, like these LiDARs, or sticking barcodes (or something) about the place for a robot-based camera to read, and I may well have a go at some of these ideas too.

In the meanwhile if there are any other ideas around indoor positioning systems for hobby outlay then I would be interested to hear. It would also be interesting to hear how others have tackled or plan to tackle their indoor bot navigation conundrum.

Hello everyone, just a quick update: I created a new video on servo control. This video discusses how to mount an ultrasonic sensor to a small 9g servo and control the servo's movements so that the sensor can get accurate readings at different angles. In the video coming after this one I will show how to use this data to avoid obstacles. I am thinking it will be 1-2 weeks for the 2nd half; my students are finally getting caught up, and once they are ahead I will be able to have the videos follow where they are. (Guess I will need new material for next year 😉 )
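
The servo-plus-ultrasonic idea boils down to two calculations: converting the HC-SR04 echo pulse width into a distance, and picking the servo angle with the most clearance. The real code will be an Arduino sketch; this is only a Python sketch of the logic, assuming the speed of sound is about 343 m/s (roughly room temperature), servo angles in degrees with 90 pointing straight ahead, and a hypothetical 30 cm clearance threshold.

```python
SPEED_OF_SOUND_CM_PER_US = 0.0343  # about 343 m/s near 20 degrees C (assumption)


def echo_to_cm(pulse_us):
    """HC-SR04: the echo pulse is the round-trip time, so halve the distance."""
    return pulse_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def best_angle(sweep, min_clear_cm=30.0):
    """Pick the servo angle with the most free space.

    sweep maps servo angle in degrees (90 = straight ahead, assumed) to distance in cm.
    Returns None when no direction is clear.
    """
    clear = {angle: dist for angle, dist in sweep.items() if dist >= min_clear_cm}
    if not clear:
        return None
    return max(clear, key=clear.get)


# Hypothetical readings from a five-position sweep.
readings = {0: 20.0, 45: 80.0, 90: 35.0, 135: 120.0, 180: 10.0}
print(best_angle(readings))
```

A missed echo usually shows up as a zero or timed-out reading, so it is worth filtering those out before the sweep goes into `best_angle`.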

Anyway, enjoy and let me know what you think!

/Brian

Great post, sorry I have been heads down for the better part of this week and am getting some air now...

I have not used the positioning sensor in the link you provided.

You are right that error becomes an issue with wheel odometry the longer you use it. Drift error in particular is problematic. Sensor fusion is usually the way to overcome this for long distances (or only use short ones).
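
A common first step in sensor fusion is a complementary filter for heading: trust the gyro for fast changes and let the compass slowly pull out the gyro's drift. A minimal Python sketch, assuming both headings are in radians with the same zero and direction; `alpha` = 0.98 is a typical starting value, not a tuned one.

```python
import math


def wrap(angle):
    """Wrap an angle in radians to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def complementary_heading(prev_heading, gyro_rate, compass_heading, dt, alpha=0.98):
    """One step of a complementary filter for heading (radians).

    Equivalent to alpha * gyro_estimate + (1 - alpha) * compass_heading, but
    the blend is done on the wrapped error so 179 deg vs -179 deg behaves.
    """
    gyro_estimate = prev_heading + gyro_rate * dt
    error = wrap(compass_heading - gyro_estimate)
    return wrap(gyro_estimate + (1 - alpha) * error)


# Robot sitting still facing 0 rad; the gyro has a 0.01 rad/s bias.
heading = 0.0
for _ in range(2000):  # 20 s at 100 Hz
    heading = complementary_heading(heading, 0.01, 0.0, 0.01)
print(heading)  # stays small, while pure gyro integration would drift to 0.2 rad
```

The same blending pattern works for fusing encoder odometry with other absolute references, such as the camera or beacon positions discussed above.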

A compass can give a good idea if your angles are correct and help avoid drift.
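
Using the compass to hold a straight line can be as simple as a proportional correction on the motor speeds. A hedged Python sketch, assuming headings in radians that increase counter-clockwise (many compass modules report clockwise degrees from north, so convert or flip the sign), PWM-style speeds in the range -255..255, and an untuned gain `kp`; all names are hypothetical.

```python
import math


def clamp(value, limit):
    """Limit value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def heading_hold(target_heading, compass_heading, base_speed=150, kp=120.0, max_speed=255):
    """Proportional steering correction to keep the robot on target_heading.

    Headings in radians, counter-clockwise positive (assumed). Returns integer
    (left, right) motor speeds.
    """
    error = (target_heading - compass_heading + math.pi) % (2 * math.pi) - math.pi
    correction = kp * error
    # Target is counter-clockwise of us (error > 0): speed up the right wheel.
    left = clamp(round(base_speed - correction), max_speed)
    right = clamp(round(base_speed + correction), max_speed)
    return left, right


print(heading_hold(0.0, 0.0))   # on course: both wheels at base speed
print(heading_hold(0.1, 0.0))   # drifted clockwise: right wheel speeds up
```

On slippery surfaces a small `kp` is usually the safer starting point, since large corrections can themselves break traction.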

An IMU (accelerometer / gyroscope) can help determine if the robot's actual movement matches the expected movement.

SLAM techniques are ideal if you have a higher-level controller such as the Jetson I am building with. You can use optical sensors or lidar to create a map; once you have the map, you can localize within it given your current sensor state.
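
Full SLAM is well beyond a forum post, but the "localize within a known map" part can be illustrated with a 1D histogram (Markov) localization sketch in Python. Note this is plain localization, not SLAM, which builds the map at the same time. Everything here is a toy assumption: the map is a ring of cells labelled 'door' or 'wall', the sensor reports one of those labels, motion is an exact shift with no noise, and `p_hit`/`p_miss` are made-up likelihoods.

```python
def sense(belief, world, measurement, p_hit=0.6, p_miss=0.2):
    """Bayes update: weight each cell by how well it explains the measurement."""
    posterior = [
        b * (p_hit if cell == measurement else p_miss)
        for b, cell in zip(belief, world)
    ]
    total = sum(posterior)
    return [p / total for p in posterior]


def move(belief, steps):
    """Shift the belief by `steps` cells around a cyclic map (no motion noise)."""
    n = len(belief)
    return [belief[(i - steps) % n] for i in range(n)]


world = ["door", "door", "wall", "wall", "wall"]
belief = [1.0 / len(world)] * len(world)  # start with no idea where we are

# The robot sees a door, moves one cell, sees a door, moves one cell, sees a wall.
for measurement, motion in [("door", 1), ("door", 1), ("wall", 0)]:
    belief = sense(belief, world, measurement)
    belief = move(belief, motion)

best = max(range(len(world)), key=lambda i: belief[i])
print(best, [round(b, 3) for b in belief])
```

Real systems do the same sense/move cycle over a 2D grid or with a particle filter, using lidar or camera scans instead of a single label.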

/Brian