@thrandell

Maybe the training set could have the motor values adjust as the obstacle gets closer. You know, like: the obstacle on the left is 5" away, so increase the sharpness of the pivot turn to the right...

I wondered what mathematics (weight and bias values) would be required for a neuron to output an increasingly large value (plus or minus) as the input value became smaller.

As you approach a light source, the value from a light sensor increases as you get closer to the light. As you approach a wall, the value returned by a sonar or TOF sensor decreases as you get closer to the wall.

If the rotation speed were proportional to the measured distance, the robot would slow its rotation as it approached the wall and spin like crazy as it moved away from the wall, which is what happens in my experiments. We want the opposite to happen.
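One possible answer for a single linear neuron, as a minimal C++ sketch: give it a negative weight and a positive bias. Assuming distances in cm and a 20 cm maximum range (the range used in the examples later in this thread), a weight of -1/maxRange and a bias of 1 give an output of 1 - d/maxRange, which rises from 0 at maximum range to 1 at contact. The constant and function names are hypothetical.

```cpp
#include <algorithm>

// Hypothetical constant: maximum sensor range in cm.
constexpr float kMaxRangeCm = 20.0f;

// Hypothetical single linear neuron whose output rises as distance falls.
float proximityNeuron(float distanceCm) {
    // Clamp so readings beyond max range do not produce negative outputs.
    const float d = std::clamp(distanceCm, 0.0f, kMaxRangeCm);
    const float weight = -1.0f / kMaxRangeCm;  // negative weight: output falls as distance grows
    const float bias = 1.0f;                   // positive bias: output is 1 at zero distance
    return bias + weight * d;                  // equals 1 - d / maxRange
}
```

A single weighted sum can only produce this linear ramp; a steeper rise close to the wall, such as a 1/distance mapping, needs a non-linear step before or after the neuron.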

I'm not sure where you're going with this question. You already know that for a hard-coded solution you have to write the code explicitly. I'm still a little fuzzy on the mechanics and how it manifests itself in a GA version like the one @thrandell is doing. In the ANN version of my first attempt, the network learns by trial and error and adjusts the elements of the weight matrix appropriately. I suspect it will show up as very small fractions (<< 1) for inputs whose response needs to be attenuated, and as negative values for inputs that need to be negated, i.e. do the opposite.

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

I wondered what mathematics (weight and bias values) would be required for a neuron to output an increasingly large value (plus or minus) as the input value became smaller.

What a great question! It might be pretty straightforward to implement an obstacle-avoiding Braitenberg vehicle as a neural network in your simulator. Of course, without a training set or a genetic algorithm there will probably be a little fine-tuning of the weights, maybe not… I agree with you that the tough part here is mapping the distance to motor speed. A real head-scratcher.

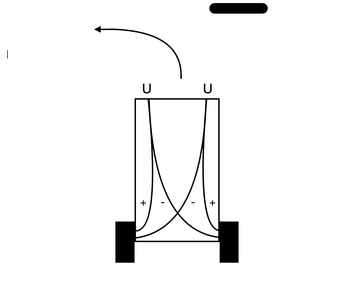

So here’s what I came up with: a Braitenberg vehicle with both excitatory and inhibitory connections. The excitatory connections would have a weight in the range [0,1] and the inhibitory connections a weight in the range [-1,0].

Let’s assume that the sensor inputs are in centimeters, in the range [0,20], and that they get normalized.

The output neurons would produce values in the range [-0.5,0.5], which would be mapped to the appropriate range for your motors. Mine are [-100,100]. Arduino has a map() function to do this.
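One caveat, shown as a hedged sketch: Arduino's built-in map() works on long integers, so a float output in [-0.5,0.5] would be truncated to 0 before it is rescaled. A float version of the same linear formula avoids that. The names mapFloat and neuronToMotor are hypothetical, and this is plain C++ rather than Arduino-specific code.

```cpp
#include <cmath>

// Float counterpart of Arduino's integer map(): rescales x linearly from
// [inMin, inMax] to [outMin, outMax]. Hypothetical helper name.
float mapFloat(float x, float inMin, float inMax, float outMin, float outMax) {
    return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Convert a neuron output in [-0.5, 0.5] to a motor command in [-100, 100].
int neuronToMotor(float neuronOut) {
    float cmd = mapFloat(neuronOut, -0.5f, 0.5f, -100.0f, 100.0f);
    // Guard against inputs slightly outside [-0.5, 0.5].
    if (cmd > 100.0f) cmd = 100.0f;
    if (cmd < -100.0f) cmd = -100.0f;
    return static_cast<int>(std::lround(cmd));  // round to the nearest whole command
}
```

For example, an output of 0.1547 becomes a motor command of 31.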

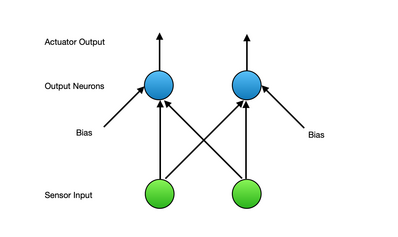

Here’s the network architecture: a one-layer feed-forward perceptron (as @Robo-Pi would say).

- Weight for left sensor to left output neuron = 1.0

- Weight for left sensor to right output neuron = -0.7

- Weight for right sensor to left output neuron = -0.7

- Weight for right sensor to right output neuron = 1.0

- Bias settings for both output neurons = 0.1

So to normalize the sensor input I came up with this formula:

- max sensor range - sensor1 input = sensor1 reading

- max sensor range - sensor2 input = sensor2 reading

- sensor1 = sensor1 / square root of ((sensor1 reading * sensor1 reading) + (sensor2 reading * sensor2 reading))

- sensor2 = sensor2 / square root of ((sensor1 reading * sensor1 reading) + (sensor2 reading * sensor2 reading))

Use the normal feed forward neuron activation computation, i.e.

- neuron1 = bias + (sensor1 * weight from above) + (sensor2 * weight from above)

- neuron2 = bias + (sensor1 * weight from above) + (sensor2 * weight from above)

Finally, run neuron1 and neuron2 through a sigmoid function shifted by -0.5. That will give you the inputs to the left and right motors, which will have to be mapped to the range they are expecting… Clear as mud?
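The steps above (weighted sum plus bias, then a sigmoid shifted by -0.5) can be sketched in C++ as follows. It uses the weights and the 0.1 bias listed earlier, assumes the sensor values are already normalized, and the names are hypothetical.

```cpp
#include <cmath>

// Weights from the list above; index 0 = left, 1 = right.
// kWeights[i][j] is the weight from sensor j to output neuron i.
const float kWeights[2][2] = {
    { 1.0f, -0.7f },  // left neuron:  left sensor, right sensor
    { -0.7f, 1.0f },  // right neuron: left sensor, right sensor
};
const float kBias = 0.1f;

// Logistic sigmoid shifted down by 0.5, so outputs lie in (-0.5, 0.5).
float shiftedSigmoid(float x) {
    return 1.0f / (1.0f + std::exp(-x)) - 0.5f;
}

// One feed-forward pass: bias plus weighted sum, then the shifted sigmoid.
void feedForward(const float sensors[2], float motorsOut[2]) {
    for (int i = 0; i < 2; ++i) {
        float sum = kBias;
        for (int j = 0; j < 2; ++j) {
            sum += kWeights[i][j] * sensors[j];
        }
        motorsOut[i] = shiftedSigmoid(sum);
    }
}
```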

Tom

To err is human.

To really foul up, use a computer.

Thanks for the suggestions. I will implement them sometime today (5:30 AM here).

Forward/reverse motion has the opposite needs to rotation. As the robot approaches a wall it needs to slow down and eventually stop, while its rotational speed needs to increase.
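That split, with forward speed falling toward zero near a wall while turning speed rises, can be written as two complementary ramps on the same distance reading. A minimal sketch; the stop distance, slow-down distance, struct and function names are hypothetical values chosen only for illustration.

```cpp
#include <algorithm>

struct MotorMix {
    float forward;  // 0 = stopped, 1 = full forward speed
    float turn;     // 0 = no rotation, 1 = maximum rotation rate
};

// Hypothetical tuning values, in cm.
constexpr float kStopDistance = 5.0f;   // at or below this, stop moving forward
constexpr float kSlowDistance = 40.0f;  // at or beyond this, drive at full speed

MotorMix mixForDistance(float distanceCm) {
    // 0 at the stop distance, 1 at the slow-down distance, clamped in between.
    float t = (distanceCm - kStopDistance) / (kSlowDistance - kStopDistance);
    t = std::clamp(t, 0.0f, 1.0f);
    // Forward speed falls and turning rises as the wall gets closer.
    return MotorMix{ t, 1.0f - t };
}
```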

A thought about biological inputs: they need to handle everything from very high values to very low values.

The rustle of leaves in the wind to the roar of a jet engine.

Very bright light conditions vs very low light conditions.

The ear also has mechanical means to attenuate the volume and the eye uses pupil size to control brightness.

I'm not sure where you're going with this question. You already know that for a hard-coded solution you have to write the code explicitly.

Consistency.

I used 1/distance * 1000 for all but the front distance sensor. The distance never gets closer than the radius of the robot base.

This two-neuron network is very simple. I can imagine a more advanced network would have no problem using the same distance data to turn when near a wall while at the same time slowing down, or even stopping. As far as I can see, these toy neurons can only output a plus or minus value proportional to the input values.

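| Distance | Spin rate |
|---|---|
| 10 | 100.00 |
| 15 | 66.67 |
| 20 | 50.00 |
| 25 | 40.00 |
| 30 | 33.33 |
| 35 | 28.57 |
| 40 | 25.00 |
| 45 | 22.22 |
| 50 | 20.00 |
| 55 | 18.18 |
| 60 | 16.67 |
| 65 | 15.38 |
| 70 | 14.29 |
| 75 | 13.33 |
| 80 | 12.50 |
| 85 | 11.76 |
| 90 | 11.11 |
| 95 | 10.53 |
| 100 | 10.00 |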
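The spin-rate column follows spin rate = 1000 / distance, i.e. the 1/distance * 1000 mapping written as a single division. A small sketch that reproduces the table; the minimum-distance clamp stands in for "never closer than the radius of the robot base", and its value of 10 is a hypothetical placeholder, not the real base radius.

```cpp
#include <algorithm>
#include <cstdio>

// Hypothetical placeholder for the robot base radius: the robot can never get
// closer than this, so clamping to it also avoids dividing by zero.
constexpr float kMinDistance = 10.0f;

// Spin rate inversely proportional to distance: 1000 / distance.
float spinRate(float distance) {
    return 1000.0f / std::max(distance, kMinDistance);
}

// Print the distance / spin-rate table for distances 10..100 in steps of 5.
void printSpinTable() {
    for (int d = 10; d <= 100; d += 5) {
        std::printf("%d\t%.2f\n", d, spinRate(static_cast<float>(d)));
    }
}
```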

Consistency.

I used 1/distance * 1000 for all but the front distance sensor. The distance never gets closer than the radius of the robot base.

You could get consistency and extra speed using 1000/distance 🙂

Anything seems possible when you don't know what you're talking about.

I found a typo in the sensor normalization formula. It should read:

- max sensor range - sensor1 input = sensor1 reading

- max sensor range - sensor2 input = sensor2 reading

- sensor1 = sensor1 reading / square root of ((sensor1 reading * sensor1 reading) + (sensor2 reading * sensor2 reading))

- sensor2 = sensor2 reading / square root of ((sensor1 reading * sensor1 reading) + (sensor2 reading * sensor2 reading))
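A sketch of the corrected normalization in C++, assuming readings in cm and the 20 cm max range from the examples. The zero-length guard mirrors the "set the square root to 1" fix used for the both-out-of-range case; the function name is hypothetical.

```cpp
#include <cmath>

// Hypothetical sketch of the corrected normalization formula.
void normalizeSensors(float sensor1Cm, float sensor2Cm, float maxRange,
                      float& sensor1, float& sensor2) {
    // Invert the readings so that a closer obstacle gives a larger value.
    const float r1 = maxRange - sensor1Cm;
    const float r2 = maxRange - sensor2Cm;
    // Divide by the vector length so (sensor1, sensor2) has unit length.
    float length = std::sqrt(r1 * r1 + r2 * r2);
    if (length <= 0.0f) {
        length = 1.0f;  // both readings at max range: avoid dividing by zero
    }
    sensor1 = r1 / length;
    sensor2 = r2 / length;
}
```

Readings larger than maxRange would come out negative here, so they are best replaced with a default before this step.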

An example: sensor 1 reports 10 cm and sensor 2 reports 2 cm.

If the max sensor range is 20, then

20 - 10 = 10, and

20 - 2 = 18

sensor1 = 10 / sqrt(424) = 0.48

sensor2 = 18 / sqrt(424) = 0.87

Now let’s plug in the weighted-sum activation computation.

neuron1 = 0.1 + (0.48 * 1.0 + 0.87 * -0.7) = 0.1 + 0.48 - 0.60 = -0.02

neuron2 = 0.1 + (0.87 * 1.0 + 0.48 * -0.7) = 0.1 + 0.87 - 0.33 = 0.64

sigmoid(-0.02) = -0.0049

sigmoid(0.64) = 0.1547

Looks like a sharp turn away from the obstacle at 2cm.

We are going to have to supply a default when the sensor is out of range. The max range could work.
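A minimal sketch of that default, assuming (as in the example that follows) that an out-of-range sensor reports 0 cm; the constant and function names are hypothetical.

```cpp
// Hypothetical maximum sensor range in cm, used as the default reading.
constexpr float kMaxRangeCm = 20.0f;

// Replace an out-of-range reading (assumed to be reported as 0, or anything
// beyond the max range) with the max range, i.e. "nothing nearby".
float withDefault(float rawCm) {
    if (rawCm <= 0.0f || rawCm > kMaxRangeCm) {
        return kMaxRangeCm;
    }
    return rawCm;
}
```

The trade-off: a genuine contact reading of 0 cm becomes indistinguishable from "nothing detected", so an explicit out-of-range status from the sensor is preferable where one is available.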

Another example: sensors 1 and 2 both report 0 cm (out of range), so they could be assigned the default, 20.

20 - 20 = 0, and

20 - 20 = 0

if (square root of the sum of the squares of the sensors <= 0) then sqrt = 1. (Funky, I know)

sensor1 = 0 / sqrt(1) = 0

sensor2 = 0 / sqrt(1) = 0

Now let’s plug in the weighted-sum activation computation.

neuron1 = 0.1 + (0 * 1.0 + 0 * -0.7) = 0.1 + 0 + 0 = 0.1

neuron2 = 0.1 + (0 * 1.0 + 0 * -0.7) = 0.1 + 0 + 0 = 0.1

Sigmoid(0.1) = 0.0249

Sigmoid(0.1) = 0.0249

Looks like a slow forward movement.

Tom

Did I read that you have some kind of wiring gizmo that lets the bot spin without winding up the umbilical, or do you just let it wind up? If you have one, do you have a link for such a device?

Thanks.

Hi @inq,

Hopefully you will get an informed answer. In case not:

I just googled AliExpress for 'rotary connector' and various slip rings appeared... I've never used or seen one, so I can't advise further.

https://www.aliexpress.com/w/wholesale-rotary-connector.html

If they are on AliExpress, they may well be in the more local bazaars, albeit probably at inflated prices.

Best wishes, Dave

Tom, are you going to fully document your experiments in one place, perhaps with all the code and hardware?

I don't know, I've got a ways to go yet. The IR sensors on my swarm bot were designed for a much larger arena and to send messages, not this short distance stuff.

Do you think that anyone is interested in this besides you and inq? I kinda doubt it.

Tom

At least that was two who showed some interest. I even spent the last two weeks working on genetic algorithms and networks myself, just to have something to talk about, as I have also done with other people's projects. No one has ever shown any active interest in my projects. I notice I have received a dislike, so after four years I think it is time to move on.

Hi @robotbuilder,

RE: I notice I have received a dislike

I haven't got a clue when, where or from whom you got the 'dislike' (I am hoping it wasn't me 🤔, as I have never knowingly sent a dislike), but I would suggest there is a strong possibility that it was in error. That particular bit of the user interface is susceptible to a momentary 'twitch' at the wrong moment, turning a like into a dislike. And it isn't even always apparent to the person adding the like/dislike, because it just tells you that you reacted.

So please, don't take an occasional 'dislike' as having any real 'meaning'. It might well have been intended to be a 'like'!

---------

Of course, this particular topic may not be everyone's "cup of tea", but I, for one, have been glancing at it to try to get an overview of the technology and its failures and successes. AI has been around for decades, starting, I am led to believe, in an abstract programming form similar to the one it has now returned to, but because the processing power initially available was orders of magnitude below what was required, the 'direct programming' lobby had an open goal to score in. The processing power constraint no longer has the iron grip it used to enjoy, and previously unrealistic approaches are progressively becoming realistic. Where it will take us, I don't know, but we ignore it at our peril.

Best wishes and take care, Dave

I was wondering if you are up for experimenting. IOW, are you doing this thread/study only as a means to an end (Swarm Robots), or are you also studying how the mechanism works? As I'm reading through and planning my strategies, I'm very interested in trying things so I can get a broader picture of the inner workings of back-propagation methods and Genetic Algorithms. I'm assuming you have methods of saving off the trained Genome so you can always go back to it at any time. What I'm curious about with regard to your work: you mentioned early on that you were following Goldberg pretty strictly and using the Sigmoid function for normalizing. I was wondering if you had also tried ReLU instead, since it only changes that one line of code doing the normalizing. If you did, did you find it converged on a solution any quicker or any better? Did you compare the Genomes derived from the two approaches and see any significant differences?

Just curious. Thanks.
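Swapping the activation really can be a one-line change if it is passed in as a function. A hedged C++ sketch (the names are hypothetical). Note one difference that matters for motors: plain ReLU never outputs a negative value, so on its own it cannot produce the reverse commands that the shifted sigmoid allows.

```cpp
#include <algorithm>
#include <cmath>

using Activation = float (*)(float);

// Logistic sigmoid shifted by -0.5: output in (-0.5, 0.5).
float shiftedSigmoid(float x) { return 1.0f / (1.0f + std::exp(-x)) - 0.5f; }

// Rectified linear unit: max(0, x). Output is never negative.
float relu(float x) { return std::max(0.0f, x); }

// A single neuron with a pluggable activation function.
float neuron(const float* inputs, const float* weights, int n, float bias,
             Activation activate) {
    float sum = bias;
    for (int i = 0; i < n; ++i) {
        sum += inputs[i] * weights[i];
    }
    return activate(sum);
}
```

With the left-neuron weights and 0.1 bias from earlier and inputs (0.48, 0.87), the weighted sum is slightly negative: the shifted sigmoid gives a small reverse command, while ReLU gives exactly 0.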

I'm assuming you have methods of saving off the trained Genome so you can always go back to it at any time.

What I’ve done so far was more of a “proof of concept” for me. My immediate plans are to put together a purpose-built robot and not cannibalize one of my swarm bots. It will be about the same size but without a battery, battery charger, bumper, LEDs, buzzer and voltage meter. It will have about 8 IR Lidar distance sensors, 2 phototransistors on top, a reflectance sensor on the bottom, an SD card breakout to log data and one free GPIO pin. Same motors, driver, wheel encoders and MCU from my pile of swarm bot parts…

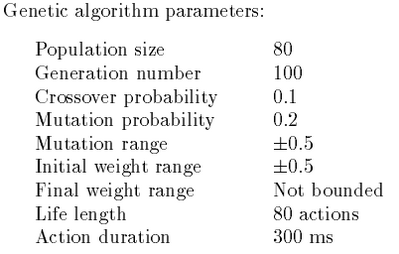

As far as investigating another activation function like ReLU that’s pretty low on my list. I believe things like how you encode the ANN parameters as a chromosome, what probability you select for the cross-over and mutation operations, and the range of initial default parameter values will all have a bigger impact on the ‘evolution’ of the robot.
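To make those knobs concrete (chromosome encoding, crossover and mutation probabilities, initial value range), here is a generic real-valued GA sketch for the six parameters of the two-neuron network (four weights, two biases). It is not Goldberg's encoding or the robot's actual code; the probabilities, ranges and names are all hypothetical, and selection and fitness evaluation are left out.

```cpp
#include <array>
#include <random>
#include <utility>

// Chromosome: the 4 weights and 2 biases of the two-neuron network, as floats.
using Chromosome = std::array<float, 6>;

// Hypothetical GA settings.
constexpr float kInitMin = -1.0f, kInitMax = 1.0f;  // initial parameter range
constexpr float kCrossoverProb = 0.7f;              // chance a pair is crossed over
constexpr float kMutationProb = 0.05f;              // per-gene mutation chance
constexpr float kMutationStep = 0.2f;               // max size of one mutation

Chromosome randomChromosome(std::mt19937& rng) {
    std::uniform_real_distribution<float> init(kInitMin, kInitMax);
    Chromosome c{};
    for (float& gene : c) gene = init(rng);
    return c;
}

// Single-point crossover: with probability kCrossoverProb, swap the tails
// of the two parents after a random cut point.
void crossover(Chromosome& a, Chromosome& b, std::mt19937& rng) {
    std::uniform_real_distribution<float> coin(0.0f, 1.0f);
    if (coin(rng) >= kCrossoverProb) return;
    std::uniform_int_distribution<int> cut(1, static_cast<int>(a.size()) - 1);
    for (int i = cut(rng); i < static_cast<int>(a.size()); ++i) {
        std::swap(a[i], b[i]);
    }
}

// Mutation: each gene is nudged by a small random amount with probability kMutationProb.
void mutate(Chromosome& c, std::mt19937& rng) {
    std::uniform_real_distribution<float> coin(0.0f, 1.0f);
    std::uniform_real_distribution<float> step(-kMutationStep, kMutationStep);
    for (float& gene : c) {
        if (coin(rng) < kMutationProb) gene += step(rng);
    }
}
```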

So it sounds like you want to start with the back propagation of error approach. Would that be with a physical robot or on your laptop? My first exposure to programming an artificial neural network was using that technique on the XOR examples that are floating around the Internet. Is that your plan?

Tom

So it sounds like you want to start with the back propagation of error approach. Would that be with a physical robot or on your laptop? My first exposure to programming an artificial neural network was using that technique on the XOR examples that are floating around the Internet. Is that your plan?

It was! 🤣 ... but best laid plans...

My 10,000-foot view was that back-propagation (BA) and Genetic Algorithms (GA) were two distinct methods of solving the same problem of learning the weights and biases. I still think that is broadly correct, but they now appear to me to be geared to two different types of problems.

I re-created a couple of BA implementations for the MNIST database (the ANN equivalent of Hello World). First I found a solution written in C and ran it under VC++ to get a feel. I then converted the engine part to C++ using one of my old Matrix libraries and generalized it for the number of inputs, outputs, hidden layers, etc.

I'm still in the dark on how to select the hidden layers (count and size). So far every reference glosses over that subject. One reference used 2 hidden layers with 16 elements each. Another used 1 hidden layer of 300 elements. Both got better than 96% accuracy on MNIST. So far it seems rather arbitrary. I plan to do my own studies if I can't find some logic on the subject.
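One concrete way to compare those two layouts is to count the weights and biases each needs, which also matters when the network has to fit in a microcontroller's memory. A small sketch, assuming fully connected layers and MNIST's 784 inputs (28 × 28 pixels) and 10 outputs; the function name is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Number of weights plus biases in a fully connected feed-forward network.
// layers = {inputs, hidden..., outputs}.
std::size_t parameterCount(const std::vector<std::size_t>& layers) {
    std::size_t total = 0;
    for (std::size_t i = 1; i < layers.size(); ++i) {
        total += layers[i - 1] * layers[i];  // weights into layer i
        total += layers[i];                  // one bias per neuron in layer i
    }
    return total;
}
```

parameterCount({784, 16, 16, 10}) returns 13002 and parameterCount({784, 300, 10}) returns 238510; stored as 4-byte floats, that is roughly 52 KB versus 954 KB.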

It wasn't until I got it onto the ESP8266 that it slapped me in the face: BA requires that you know what the output vector should be so it can do the hard calculus to back-propagate the error. For a bot with n sensors boiling down to only two +/- motor commands, the target output is too nebulous for BA.

I'm now seeing that GA is better suited to this problem because of its Fitness Criterion. I hadn't really started diving into the GA coding; I veered away from reading Goldberg to focus on BA, but I'm back to it to bone up on GA.

However... I'm still playing around with the BA solution. I'm thinking that I can set up BA on the bot and use it in real time. I can manually drive the bot around by RC, and the bot will fetch both the inputs from its sensors and the motor settings I am manually applying in real time, feed them through BA, and potentially pre-learn the weights/biases. This might get it past the initial awkward spinning-in-circles and head-banging phases. I'll obviously compare it to the randomly initialized GA version.
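Recording while driving turns the problem back into one that back-propagation can handle: each logged sample pairs the sensor inputs with the motor commands the human chose, which supplies the target output vector. For a single-layer network like the two-neuron one earlier in the thread, back-propagation reduces to the delta rule. A minimal sketch, assuming the logged motor commands have been scaled into the shifted sigmoid's (-0.5, 0.5) range; the learning rate, types and names are hypothetical.

```cpp
#include <cmath>

struct Sample {
    float sensors[2];  // normalized sensor inputs
    float targets[2];  // logged motor commands, scaled into (-0.5, 0.5)
};

struct TwoNeuronNet {
    float w[2][2] = {{0.0f, 0.0f}, {0.0f, 0.0f}};  // w[i][j]: sensor j -> motor i
    float b[2] = {0.0f, 0.0f};

    // Forward pass for neuron i; also returns the plain sigmoid value in s.
    float output(int i, const float x[2], float& s) const {
        const float z = b[i] + w[i][0] * x[0] + w[i][1] * x[1];
        s = 1.0f / (1.0f + std::exp(-z));
        return s - 0.5f;  // shifted sigmoid
    }

    // One delta-rule step on the squared error 0.5 * (y - t)^2 of each neuron.
    void train(const Sample& sample, float learningRate) {
        for (int i = 0; i < 2; ++i) {
            float s = 0.0f;
            const float y = output(i, sample.sensors, s);
            const float delta = (y - sample.targets[i]) * s * (1.0f - s);  // dLoss/dz
            for (int j = 0; j < 2; ++j) {
                w[i][j] -= learningRate * delta * sample.sensors[j];
            }
            b[i] -= learningRate * delta;
        }
    }
};
```

In practice, many logged samples would be cycled through repeatedly rather than one sample at a time.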

I've also checked, and there is enough memory on the ESP8266 to hold the GA parameters as done in your ACAA reference. That way, I only need to send passive telemetry back via WiFi.

It will have about 8 IR Lidar distance sensors

What kind of range on your sensors? Mind sharing which sensor? I'm using a ToF sensor with a 45° FoV; it's rated to 4 m, but 2-3 m is more realistic.

What kind of range on your sensors? Mind sharing which sensor?

I picked up a pair of these to try out and I think that they will be fine for this robot. https://www.pololu.com/product/4064

Tom
