Here are the network weights, for what it's worth.

Because it is a simple network, I think it is possible to analyze the solution it has found, which is not always the case with larger networks. I hope my analysis will not offend you as being "negative", as it apparently did inq. I offer it with trepidation...

The robot is essentially moving along a winding passage. If it is closer to the left wall it needs to veer right, and if it is closer to the right wall it needs to veer left. For this to work, the weights for the sensors on the left must have the opposite sign to the weights for the sensors on the right.

Adding up the weights you have given, on the left we have 2.344826 and -1.328887, and on the right we have the opposite signs, -0.161165 and 0.802781. If you simply choose a weight value and assign opposite signs to each side, it will follow the passage.

I have done a lot of experimenting on this setup using a sim bot. The actual weights can be identical; all that is required is opposite signs for the sensors on the left and the sensors on the right.
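To make the opposite-sign idea concrete, here is a minimal sketch. It is not the evolved network: it assumes two sensors per side, readings normalized to [0, 1] with larger meaning closer to the wall, and a single weight magnitude `w`. The names `turn_signal` and `wheel_speeds` are made up for illustration.

```c
#include <stddef.h>

#define NUM_SIDE_SENSORS 2   /* assumption: two sensors on each side */

/* Hypothetical helper: turn signal from wall proximity.
 * Positive result = veer right, negative = veer left.
 * Readings are assumed normalized to [0, 1], larger meaning closer. */
float turn_signal(const float left[NUM_SIDE_SENSORS],
                  const float right[NUM_SIDE_SENSORS], float w)
{
    float sum = 0.0f;
    for (size_t i = 0; i < NUM_SIDE_SENSORS; i++) {
        sum += w * left[i];    /* left sensors carry +w  */
        sum -= w * right[i];   /* right sensors carry -w */
    }
    return sum;
}

/* Differential drive: a positive turn speeds up the left wheel and
 * slows the right wheel, so the robot veers right. */
void wheel_speeds(float base, float turn, float *left_wheel, float *right_wheel)
{
    *left_wheel  = base + turn;
    *right_wheel = base - turn;
}
```

When both sides read the same distance the signal is zero and the robot drives straight, which is also why it will happily drive into a symmetric converging corner.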

The robot is travelling as a train would but instead of the wheels being locked onto the tracks they are locked onto the distance values of the walls which wobble about as the robot moves and turns. It is like holding out your left arm and right arm in a fixed direction to feel the distance of the walls and turning to keep the left distance the same as the right distance. The act of turning changes the values, and thus motor speeds, even though the position may be the same.

The reason it will travel straight into a corner is the same reason you would if you were keeping the same distance on both sides of two walls that converge together. The context neurons are supposed to play a role in preventing this as the action is not simply controlled by the current input but also by the previous input as is the case in a recurrent neural network.

@robotbuilder @inq @thrandell I saw this video and thought of you three. What caught my eye was the fact he had to 'learn' the circuit (so NOT autonomous) and the total failure. What is it the likes of Musk and others are doing differently that you three and the college kids have not figured out yet? Sorry, I will not be subscribing or answering, I just thought you might gain some insight from this, I have ZERO interest in this topic.

Here is the video

First computer 1959. Retired from my own computer company 2004.

Hardware - Expert in 1401, and 360, fairly knowledgeable in PC plus numerous MPU's and MCU's

Major Languages - Machine language, 360 Macro Assembler, Intel Assembler, PL/I and PL1, Pascal, Basic, C plus numerous job control and scripting languages.

Sure you can learn to be a programmer, it will take the same amount of time for me to learn to be a Doctor.

As a software guy as well as a hardware guy I thought genetic algorithms would be part of your tool kit for solving both constrained and unconstrained optimization problems?

Self organizing systems has always been of interest to me since taking an interest in how it was possible for complexity to evolve out of simpler systems.

@robotbuilder Nope, I never heard of any of that. I don't think it is relevant to business systems; it sounds more like college theoretical stuff.

I don't know if it makes a difference, but we didn't write a single line of code without a business case study that showed a cost-benefit.

Of course, there is room for both, but often I find that the non-professional coder is unaware of business realities.

@robotbuilder Maybe I didn't make myself clear. In the video, it shows them 'learning' the track even though these are supposed to be autonomous robots. The second point is that even after all that training, the robot had a 100% failure rate.

The reason Tesla and others have been largely able to succeed is that they have invested millions of dollars in inventing proprietary algorithms.

How my approach and results differed from the experimenters.

On the robot and environment …

Instead of searching for a Khepera robot made in the previous century I made my own version using off the shelf components, and while the experimenters stated that “some sensors were slightly active most of the time” the sensors I used were all active all of the time. The Khepera was made by a Swiss watchmaker and mine was made by a hobbyist with a soldering iron. In an effort to mimic the Khepera I used the same layout for the sensors and reduced the robot’s top speed to 88mm/second.

I made use of what I had on hand to build the arena, particle board and brown wrapping paper for the floor, white cardboard for the walls and painter’s tape to hold it all together. Relative to the diameter of the robot I think that my arena is smaller than what Floreano and Mondada used. I did not burn a 60 watt light bulb but ran my tests in a room with natural lighting.

On the genetic algorithm implementation …

This process dragged on a bit because I tried a lot of different things in the genetic algorithm. I tried both binary and real valued genotypes, Roulette wheel, tournament and stochastic remainder selection techniques, a few different ways to do crossover and half a dozen approaches to mutation. With a turnaround of at least 20 hours for each run, it took a long time to go through all those operators.
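For anyone following along, Roulette wheel (fitness-proportionate) selection can be sketched as below. This is a generic textbook version, not necessarily the code used on the robot. The function name `roulette_select` is hypothetical, and the random number is passed in by the caller so the routine stays deterministic and easy to test.

```c
#include <stddef.h>

/* Fitness-proportionate (roulette wheel) selection.
 * fitness[] must be non-negative and n must be at least 1.
 * r is a uniform random number in [0, 1) supplied by the caller.
 * Returns the index of the selected individual. */
size_t roulette_select(const float *fitness, size_t n, float r)
{
    float total = 0.0f;
    for (size_t i = 0; i < n; i++)
        total += fitness[i];

    /* Degenerate wheel (all fitness zero): pick uniformly instead. */
    if (total <= 0.0f)
        return (size_t)(r * (float)n) % n;

    float target = r * total;   /* where the ball lands on the wheel */
    float running = 0.0f;
    for (size_t i = 0; i < n; i++) {
        running += fitness[i];
        if (target < running)
            return i;
    }
    return n - 1;               /* guard against rounding at the top of the wheel */
}
```

Each individual owns a slice of the wheel proportional to its fitness, so one very fit individual can dominate the next generation, which may be part of why the other selection schemes behaved differently.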

My longest run was 50 generations and I was able to generate good controllers using most of these techniques, although some of my mutation operators just destroyed the chromosomes and a high probability for crossover was also destructive. I had some success with a low probability for crossover (0.1) and using a mutation technique that added a value in the range [-0.5,0.5] to an existing parameter’s value (bias mutation).
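The bias mutation described above might look roughly like this. It is a sketch under assumptions: the mutation probability is applied per gene (it could equally be per individual), `rand()` stands in for whatever random source the robot uses, and the names `rand_unit` and `bias_mutate` are hypothetical.

```c
#include <stdlib.h>
#include <stddef.h>

/* Uniform random float in [0, 1]. rand() is used only for brevity. */
static float rand_unit(void)
{
    return (float)rand() / (float)RAND_MAX;
}

/* Bias mutation: with probability p_mut per gene (an assumption),
 * add a uniform offset in [-0.5, 0.5] to the existing value.
 * Returns the number of genes that were changed. */
size_t bias_mutate(float *genes, size_t n, float p_mut)
{
    size_t mutated = 0;
    for (size_t i = 0; i < n; i++) {
        if (rand_unit() < p_mut) {
            genes[i] += rand_unit() - 0.5f;   /* nudge, do not replace */
            mutated++;
        }
    }
    return mutated;
}
```

Because the offset is added to the current value rather than replacing it, a good chromosome is only perturbed, which fits the observation that the more aggressive operators destroyed the chromosomes.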

On the fitness function …

But after all these runs I was never able to reproduce those great maximum and average fitness graphs that Floreano and Mondada have in their paper. When I did find a set up that showed promise it was not for robots that could navigate the corridors. For me when the graphs looked good the behaviors of the high fitness individuals were typically the ones that moved straight forward into a wall at high speed or did an elaborate circular motion avoiding the walls. Toward the end, switching from Roulette wheel selection to what Goldberg called stochastic remainder method helped, but the graphs suffered. Not every run produced an acceptable controller and the fittest individual of the last generation didn’t necessarily guarantee one either, but I was finding evolved individuals that could navigate the arena.

I thought it was interesting what Floreano and Mondada wrote about their fitness function: “fitness function is precisely engineered to perform straight motion and avoid obstacles”; “consists in a detailed specification of the fitness function that is tailored for a precise task”; “In this sense there is not much difference between the tuning of the object function in a supervised (learning) neural algorithm and the engineering of the fitness function in the genetic algorithm.”

Now at the end of it all I’m wondering if their fitness function may have influenced the design of the Khepera and not the other way around? Was the Khepera engineered to stop when it ran into a wall? Like if there was a certain load on the motors would they stop rotating? The Khepera sensors reported the intensity of reflected IR light with a very short range. Are my sensors too accurate? I noticed that when you normalize sensors, if two sensors both read a 1mm distance their normalized values go down when compared to the case where just one sensor is reporting a 1mm distance. This has me wondering how the Khepera sensors would respond in a similar situation.

On next steps …

Anyway, I haven’t had a chance to learn much about neural networks so I’m moving on to a multi-layer artificial neural network that seems to take the same amount of time to run as the smaller single-layer network. I want to measure the activation of the hidden nodes at specific points in the path of the robot.

Tom

To err is human.

To really foul up, use a computer.

I just saw an announcement of a RaspberryPi team winning an autonomous car race. That sounded exciting, but then I read that the following steps were required.

1. Blue and Yellow cones are placed around the track.

2. Humans then choose the optimal path

3. Humans created a map.

Car drives 'autonomously'

I don't get it, I am quite certain Tesla cars do not need coloured cones or humans to pre-map their route.

What the heck is different between what Tesla et al do, these contests (I have seen a few recently) and what y'all are doing with this genetics approach?

Is your goal to create an autonomous car/robot? If so, don't you need to find out what Tesla is doing?

Confused am I.

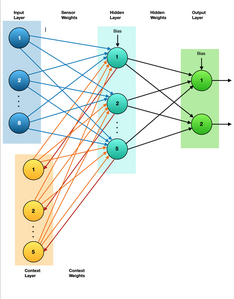

Multi-Layer Neural Network Robot Controller

I’m continuing to explore the evolution of a robot controller but this time with a multi-layer neural network. By tracking the activation of 5 hidden neurons while an evolved controller guides the robot I’m hoping to better understand the robot’s behavior and navigation strategies. Hmmm. Well it sounds like fun anyway.

I borrowed the neural network design from another paper by Floreano and Mondada. They use it on a robot power source homing task, but it should work for navigation and obstacle avoidance too.

To paraphrase their description: “The input layer consists of 8 receptors, each clamped to one sensor (IR distance sensor) and are fully connected to five hidden units. A set of recurrent connections [25] are added to the hidden units. The hidden units are fully connected to two motor neurons, each controlling the speed of rotation of the corresponding wheel.”

Here’s the colorful diagram I came up with to flesh out the data structures for the neural network.

Tom

After trying a few runs of 100 generations and not seeing anything that resembled the recurrent behavior I saw before, I tried dropping the number of hidden/recurrent nodes from 5 to 3. Although this gave me a chromosome with 44 parameters instead of 82, the smaller network was also not successful. So I tried adding coefficients to the velocity and straightness components of the fitness function, varying them in the range [0.5, 1.0] in the hopes of giving the avoidance component more juice. Alas, still no robot controllers capable of navigating the arena.
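The drop from 82 to 44 parameters is consistent with counting one weight per connection plus one bias per hidden and output node, assuming the network has as many context (recurrent) nodes as hidden nodes. A small sketch of that arithmetic, with a hypothetical function name:

```c
/* Number of evolvable parameters for a recurrent network with
 * `inputs` sensors, `hidden` hidden nodes (and, by assumption, the
 * same number of context nodes) and `outputs` motor neurons. */
int ann_param_count(int inputs, int hidden, int outputs)
{
    int sensor_w  = inputs * hidden;    /* input -> hidden weights    */
    int context_w = hidden * hidden;    /* context -> hidden weights  */
    int hidden_w  = hidden * outputs;   /* hidden -> output weights   */
    int biases    = hidden + outputs;   /* one bias per non-input node */
    return sensor_w + context_w + hidden_w + biases;
}
```

With 8 inputs and 2 outputs, 5 hidden nodes give 40 + 25 + 10 + 7 = 82 and 3 hidden nodes give 24 + 9 + 6 + 5 = 44.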

So I tried a new approach that borrowed ideas from an experiment that used a “human-guided incremental evolution” approach. I control the selection of the initial population and when the incremental stages of evolution end. At each stage the environment and/or the fitness function changes.

Cliff D., Harvey I. and Husbands P. (1993). Explorations in Evolutionary Robotics. Adaptive Behavior.

Stage 1) Environment: the original arena with no central wall, but it still had the acute and obtuse angled corners. Fitness function: used just the straightness and the avoidance components.

population=80, generations=65, probability of crossover=0.1, probability of mutation=0.2, Roulette wheel selection.

After 25 generations I found an individual that showed some interesting behavior so I cloned it and used it to initialize the starting population of stage 2.

Stage 2) Environment: the arena with the central wall. Fitness function: again just the straightness and the avoidance components.

population=30, generations=30, probability of crossover=0.0, probability of mutation=0.2, elitism selection method where the fittest are twice as likely to be selected as the median.
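One way to read "the fittest are twice as likely to be selected as the median" is linear ranking, where selection weight grows in a straight line from the worst individual to the best. The sketch below is that reading only, not necessarily what was run on the robot, and `rank_weight` is a hypothetical name.

```c
#include <stddef.h>

/* Linear ranking weight for the individual at `rank`, where rank 0 is
 * the worst and rank n-1 is the best. The best gets weight 2, the
 * median gets 1, and (as a side effect of this choice) the worst gets 0.
 * Divide by the sum of all weights to get selection probabilities. */
float rank_weight(size_t rank, size_t n)
{
    if (n < 2)
        return 1.0f;
    return 2.0f * (float)rank / (float)(n - 1);
}
```

Since the weights depend only on rank, a single very fit individual cannot swamp the population the way it can with Roulette wheel selection.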

After 25 generations the fittest individual could successfully navigate the arena in the clockwise and counter clockwise directions. Yahoo, done after just two runs!

So now I want to carry on with the second part of this little project: documenting the state of the hidden nodes while the robot moves through the arena. Hopefully something interesting comes from it.

Tom

To grab the state of the hidden nodes while the robot navigates around the course I thought that I’d record the value of the nodes after the sensors are read and applied to the neural network. Here are the data structures that I’m using.

    // Neural Network
    #define NUMINPUTS 8
    #define NUMHIDDENNODES 3
    #define NUMCONTEXTNODES 3
    #define NUMOUTPUTS 2
    #define MAXPARMS 44

    // Neural Network
    float inputLayer[NUMINPUTS];          // holds the normalized IR sensor readings
    float hiddenLayer[NUMHIDDENNODES];    // holds weighted input from inputLayer, contextLayer and hiddenLayerBias
    float contextLayer[NUMCONTEXTNODES];  // holds the t-1 hiddenLayer output
    float outputLayer[NUMOUTPUTS];        // holds weighted input from hiddenLayer and outputLayerBias
    float hiddenLayerBias[NUMHIDDENNODES];
    float outputLayerBias[NUMOUTPUTS];

    // The Weights
    float sensorWeights[NUMINPUTS][NUMHIDDENNODES];
    float contextWeights[NUMCONTEXTNODES][NUMHIDDENNODES];
    float hiddenWeights[NUMOUTPUTS][NUMHIDDENNODES];

And in this function the sensor inputs are run through the neural network to populate the nodes. The ones I’m interested in are the hiddenLayer values after they are updated by the sigmoid.

    #include <math.h>    // expf
    #include <stdint.h>  // int8_t

    // logistic sigmoid, output in (0, 1)
    float sigmoid(float x) { return 1.0f / (1.0f + expf(-x)); }

    // sigmoid shifted down by 0.5, output in (-0.5, 0.5), so the motors can reverse
    float sigmoidShifted(float x) { return 1.0f / (1.0f + expf(-x)) - 0.5f; }

    void run_ann() {
        // move the input and weights through the neural network
        float activation;

        // hidden layer: bias + weighted sensor inputs + weighted context (t-1 hidden) values
        for (int8_t h=0; h<NUMHIDDENNODES; h++) {
            activation=hiddenLayerBias[h];
            for (int8_t i=0; i<NUMINPUTS; i++)
                activation += inputLayer[i]*sensorWeights[i][h];
            for (int8_t c=0; c<NUMCONTEXTNODES; c++)
                activation += contextLayer[c]*contextWeights[c][h];
            hiddenLayer[h] = sigmoid(activation);
        }

        // output layer: bias + weighted hidden values
        for (int8_t o=0; o<NUMOUTPUTS; o++) {
            activation=outputLayerBias[o];
            for (int8_t h=0; h<NUMHIDDENNODES; h++)
                activation += hiddenLayer[h]*hiddenWeights[o][h];
            outputLayer[o] = sigmoidShifted(activation);
        }

        for (int8_t c=0; c<NUMCONTEXTNODES; c++)
            contextLayer[c] = hiddenLayer[c]; // move the hidden layer to the context Layer, (recurrent)

        return;
    }

So I want to try lighting up the Pico’s built-in LED every 2 seconds or so and at the same time record what’s in the hiddenLayer nodes on the SD. Then if I can film this while it’s looping around the track I’m hoping to graph the thing by hand. Ha. We’ll see…
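A sketch of the logging side, for what it's worth. It only formats one CSV row of hidden node values with a timestamp; the actual SD write and the LED toggle depend on which libraries are in use, so they are left out. The function name `format_hidden_row` and the row layout are assumptions made for illustration.

```c
#include <stdio.h>
#include <stdint.h>

#define NUMHIDDENNODES 3

/* Format one log row as "<ms>,<h0>,<h1>,<h2>\n" into buf.
 * Returns the number of characters written (excluding the NUL),
 * or -1 if buf is too small. Writing the row to the SD card and
 * flashing the LED are left to the caller. */
int format_hidden_row(char *buf, size_t len, uint32_t ms,
                      const float hidden[NUMHIDDENNODES])
{
    int n = snprintf(buf, len, "%lu", (unsigned long)ms);
    if (n < 0 || (size_t)n >= len)
        return -1;

    for (int h = 0; h < NUMHIDDENNODES; h++) {
        size_t room = len - (size_t)n;
        int m = snprintf(buf + n, room, ",%.4f", (double)hidden[h]);
        if (m < 0 || (size_t)m >= room)
            return -1;
        n += m;
    }

    if ((size_t)n + 1 >= len)   /* need room for '\n' and the NUL */
        return -1;
    buf[n++] = '\n';
    buf[n] = '\0';
    return n;
}
```

Calling this right after `run_ann()` at each 2 second tick, with the LED lit on the same tick, would give one row per visible flash in the video, so the rows can be matched to positions on the track by counting flashes.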

Tom

...So now I want to carry on with the second part of this little project: documenting the state of the hidden nodes while the robot moves through the arena. Hopefully something interesting comes from it.

...So I want to try lighting up the Pico’s built-in LED every 2 seconds or so and at the same time record what’s in the hiddenLayer nodes on the SD. Then if I can film this while it’s looping around the track I’m hoping to graph the thing by hand. Ha. We’ll see…

I would imagine the method @inq used to record the I/O of his robot on a computer would suit your project and give you the processing power to analyze the state of the hidden nodes, although it is unclear what kind of analysis that would be. The input states depend on the position and orientation of the robot, so I would suggest two LEDs? You could use a webcam to capture the frames and then plot out the position and direction of the robot while recording any ANN values.

I re-read all the posts that inq made on this topic and I couldn’t find anything about I/O. Do you remember where you saw his post? I did find his suggestion about capturing data if I used a laptop to run the GA but I don’t do that. I’ve already grabbed the data I need. It’s not perfect, but it’s close enough.

Tom

Yes I got that you can record the data on an SD card. I am unclear what you mean by "Hopefully something interesting comes from it". I assumed you meant some kind of analysis of the data and the associated robot's pose. I assumed you would transfer the SD card data to a computer for analysis. Anyway enough of the assumptions I will back off.

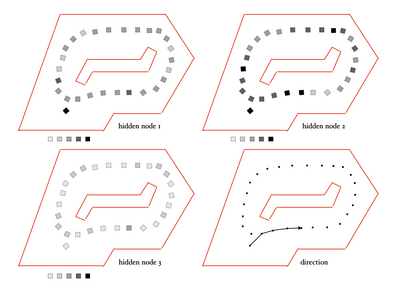

I’m not sure what I expected to find from the activation of the hidden nodes in this network. Perhaps that the nodes had some specialized purpose, with one node active on left turns and another node active on right turns. After staring at these graphics for a while it seems easier to think of activation in terms of the two behaviors that the fitness function encouraged, namely straightness and obstacle avoidance.

I wasn’t sure about the best way to represent the data I collected so I hemmed and hawed a bit and tried out a few things.

I even dug out some books I have by Edward Tufte before deciding on using a square shape that I rotated to show the robot’s orientation. The darker the shape the more active the node.

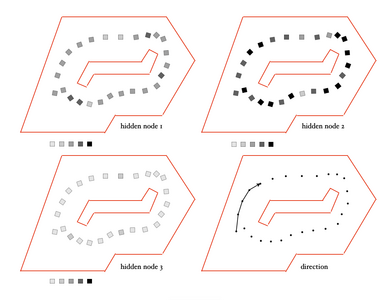

Since the controller that evolved can navigate in both a clockwise and a counter clockwise direction I decided to graph both. The counter clockwise robot moved in a smooth arcing path and took about 1 minute 2 seconds to complete one loop. The clockwise robot ran very close to the inside wall and because of that it made tighter turns and used small left/right adjustments to get into position for a turn. It looped about 5 seconds faster than the counter clockwise direction.

Looking at the counter clockwise example I noticed that with node 2 the activity is highest when the front sensors have the longest open space in front of them so it likely contributes to the straightness behavior. Nodes 1 and 3 on the other hand are most active prior to and while making turns to avoid an obstacle.

The clockwise turner was a little more interesting to me. For example, node 1 was highly active just as the robot was starting a path adjustment to get clear for a right-hand turn, suggesting that it partially controls the obstacle avoidance behavior. Node 2 may also contribute to that behavior as it seems to be the most active during the turns.

This analysis of hidden node activity would probably be more interesting with a more complex behavior, but for me I think it’s time to move on to something else.

Tom
