@davee They are used by the astronomy crowd and are readily available, but it will depend on the frequency of the laser.

I was just looking for some specs on the TOF and I see it says it has built in IR filters so you should not be getting very much if any ambient pollution.

I have no personal knowledge of the device, but if I were designing one I would certainly build it with narrow band filters. In fact they also sell one (out of 7) that allows ambient light detection, so that leads me to believe @Inq should be getting very little if any ambient light coming through.

First computer 1959. Retired from my own computer company 2004.

Hardware - Expert in 1401, and 360, fairly knowledge in PC plus numerous MPU's and MCU's

Major Languages - Machine language, 360 Macro Assembler, Intel Assembler, PL/I and PL1, Pascal, Basic, C plus numerous job control and scripting languages.

Sure you can learn to be a programmer, it will take the same amount of time for me to learn to be a Doctor.

@davee Lots on Amazon. Photography and astronomy and especially astrophotography. Prices can go fairly high for best quality, but for guys who spend $20,000 just for a tripod that's not a problem.

Hi Ron @zander,

I agree that it makes sense to consider using a filter that matches the VCSEL wavelength, but this is a low cost part, so only if it could be achieved at virtually zero cost. Narrow band filters have a more complex structure, which I guess would be expensive and it might mean additional optical elements.

@inq clearly observed a change of behaviour when he switched the high pressure sodium lights on ... which strongly suggests that a substantial proportion of the light from them is finding its way into the SPAD array.

My (unsubstantiated) guess is that any built-in filter is a simple wideband one that passes near infra red but attenuates the visible spectrum ... this level of protection is commonly used on commodity products, like household remote control receive detectors. However, according to the paper I found earlier today, the high pressure sodium lamp is an 'unusual' requirement because it has a strong line in the near infra-red, which I suspect may pass through simple filters.

Of course, I am not in a position to directly verify any of these suggestions.

Best wishes, Dave

@davee Did not know Sodium had any IR, that may explain the result. Yes, you are likely correct, at that cost the IR filter will not match a $500 astro filter. Don't compare prices directly, remember the huge size differences and the cost is at least partly controlled by the area of the filter.

In any case the demo I saw was next to useless, I don't know how/if Dennis can make heads or tails out of the image data.

Hi Ron @zander,

re: Prices can go fairly high for best quality, but for guys who spend $20,000 just for a tripod that's not a problem.

I don't know about Inq's financial position, but since I am paying with my own money, I think twice before spending the equivalent of $20.

$20 is roughly the retail price of this sensor, soldered to a card, from Pimoroni and others.

So I am guessing the original factory transfer price of the sensor in quantity is probably nearer $5 each.

Of course, it sometimes makes sense to mix a $5 component with a $10,000 component, especially for one-off research projects, but even if I had that amount to spend, I think I would start by looking for a more expensive sensor.

Alternatively, particularly for those with modest budgets, I think @robotbuilder has made some good suggestions about using this sensor as a testbed to develop technology and expertise .... from Inq's experiments, I think it can be made to work in a restricted environment ... e.g. mainly short range ... possibly down to 1m, with some less precise measurements up to 2-3m, shielded from 'bright' ambient light sources (at 940nm), and so on. I think there is a lot to learn in that 'nursery' environment.

It might mean @Inq has to move his beer to a small nearby fridge for his bot to be able to find it, but that seems a 'small' price to pay, at least until the next generation of Inq-lings, with the next generation of sensors, become available. 😀 (Only a suggestion, Inq!!!)

Best wishes, Dave

@davee I am following the discussion with some interest although robotics isn't a big interest for me. Artificial sight however may be useful to me in a future enhanced game camera.

Hi Ron @zander,

So far, I haven't got involved with robots either ... though some, including @Inq's recent creations look really fun.... how does he get the time and skill?

I am however, always keen to find how things work ... and Lidar is one of those things I have never properly understood, beyond the most basic level of fire a laser and look for a reflection. However, it 'pops up' in a number of guises .. and is 'competitive' with other Time of Flight technologies.
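As a rough illustration of the "fire a laser and look for a reflection" idea, the basic time-of-flight relation is distance = speed of light × round-trip time / 2. The sketch below only shows that arithmetic; the function names are made up for illustration and say nothing about how this particular sensor measures time internally.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target for a measured round-trip time of the light pulse."""
    # The pulse travels out and back, so only half the path is the distance.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Round-trip time the light needs for a target at the given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    for d in (0.5, 1.0, 4.0):
        print(f"{d} m -> {round_trip_for_distance(d) * 1e9:.2f} ns round trip")
```

The point of the numbers is the scale: a target a few metres away corresponds to a round trip of only tens of nanoseconds.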

I hope that by looking at and describing things to people, it encourages at least a few to look beyond the obvious and create something original.

And I am hoping Inq will persevere with either this or a comparable device.

Always good to discuss ideas. Best wishes, Dave

from Inq's experiments, I think it can be made to work in a restricted environment ... e.g. mainly short range ... possibly down to 1m, with some less precise measurements up to 2-3m, shielded from 'bright' ambient light sources (at 940nm), and so on. I think there is a lot to learn in that 'nursery' environment.

It might mean @Inq has to move his beer to a small nearby fridge for his bot to be able to find it, but that seems a 'small' price to pay

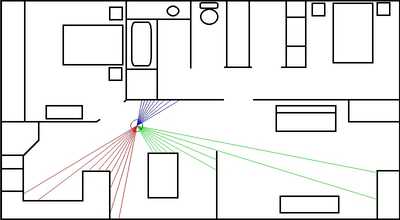

It need not be limited to a small area just because the sensors are short range. With an ordinary camera you stitch together features (image patterns) as the robot moves about, so with this sensor you may be able to stitch together shape patterns, as I indicated in the other post on travelling down a passage, to map, navigate and recognize a location. The sensor could be used as a lidar that scans 8 distances at once. The pattern of 8 distances can be converted into a line shape, or 8 line shapes if all horizontal lines are used. Although I may never use this particular sensor, the problems are the same for any sensor, like a video camera, being used to map and navigate the environment. I have been playing around with my sim bot using 3 of the sensors placed at the front and on both sides. Although the sim data is ideal, the principles for using the data to map and navigate are applicable to a real robot. I think ROS has a simulated robot option.

To enlarge image, right click image and choose Open link in new window.
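One way to picture "converting a pattern of 8 distances into a line shape" is to turn each zone of one row into an (x, y) point in the sensor's own frame. This is only a sketch under assumptions: the 45 degree field of view is a placeholder to check against the datasheet, each reading is treated as a distance along the zone's centre ray, and `row_to_points` is a hypothetical helper, not part of any sensor library.

```python
import math


def row_to_points(distances_m, fov_deg=45.0, max_range_m=4.0):
    """Convert one row of zone distances into (x, y) points in the sensor frame.

    x points straight ahead, y to the side. Readings that are missing or
    beyond max_range_m become None, so gaps stay visible as "unknown".
    """
    n = len(distances_m)
    zone_width_deg = fov_deg / n
    points = []
    for i, d in enumerate(distances_m):
        if d is None or d <= 0.0 or d > max_range_m:
            points.append(None)
            continue
        # Centre angle of zone i, measured from the optical axis.
        angle = math.radians(-fov_deg / 2.0 + (i + 0.5) * zone_width_deg)
        # Assumption: d is measured along the ray, not perpendicular to the sensor.
        points.append((d * math.cos(angle), d * math.sin(angle)))
    return points


if __name__ == "__main__":
    # A made-up row: something close on one side, open space on the other.
    row = [0.8, 0.8, 0.9, 1.5, 2.5, 3.5, None, None]
    for p in row_to_points(row):
        print(p)
```

Joining consecutive non-None points gives the "line shape" for that row; a flat wall facing the sensor comes out as points with nearly equal x.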

Hi Ron @zander,

So far, I haven't got involved with robots either ... though some, including @Inq's recent creations look really fun.... how does he get the time and skill?

I am however, always keen to find how things work ...

I always had to know how things work even though much of modern physics is beyond me. That was the impetus to learn about electronics to know how radio and tv worked. Then I had to know how computers could play chess and do other "thinking" tasks.

Programming by the way is "wiring up" a general purpose machine which is a collection of parts just as you might wire up components directly. A program can in principle be implemented as hardware thus the existence of math, video and sound chips.

The early computers were literally programmed by wiring them up.

http://www.righto.com/2017/04/1950s-tax-preparation-plugboard.html

@robotbuilder OMG, I haven't seen a 403 board in a very long time. That was the state of the art even into the mid 60's, computers were amongst them but severely outnumbered still for a few more years at least. I left IBM to join a company headquartered in Mountain View where the computer museum is now. I was trained and repaired every machine in that article. Shortly after I joined IBM I got 1401 trained then system 360 in depth, from the hardware to the OS. The 360/65 was an engineering marvel. Between then and now a lot has changed.

Hi @robotbuilder,

Re: It need not be limited to a small area just because the sensors are short range.

You are of course correct, in that by 'stitching' small adjacent/overlapping areas together, then the overall area can be extended indefinitely. However, unless you can do it with high accuracy, errors may build up, and cause problems. All I was inferring, perhaps inelegantly and gently facetiously (needing new beer fridge), was to start with more modest expectations, given the sensor also had limitations. Once such modest expectations have been achieved, then there is a very wide scope for extension. It is probable that better sensors will become readily available, and further gains may come from combining data from this type of sensor with data from other sources.
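To put a rough number on how stitching errors can build up, here is a toy dead-reckoning sketch. It assumes the bot intends to drive in a straight line and that every stitching step adds the same small heading error; the step size and error values are invented for illustration, not measured from any robot.

```python
import math


def dead_reckon(steps, step_m, heading_error_deg_per_step):
    """Estimated (x, y) after 'steps' moves when each move adds a small heading error."""
    x = y = 0.0
    heading = 0.0
    for _ in range(steps):
        heading += math.radians(heading_error_deg_per_step)
        x += step_m * math.cos(heading)
        y += step_m * math.sin(heading)
    return x, y


def drift_m(steps, step_m, heading_error_deg_per_step):
    """Distance between the estimated position and the intended straight-line position."""
    x, y = dead_reckon(steps, step_m, heading_error_deg_per_step)
    return math.hypot(x - steps * step_m, y)


if __name__ == "__main__":
    for n in (10, 20, 40):
        print(f"{n} steps of 0.5 m, 1 deg error per step: drift {drift_m(n, 0.5, 1.0):.2f} m")
```

In this toy model the drift grows faster than the distance travelled, which is why a short, well-controlled 'nursery' area is a forgiving place to start.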

-------------

I am only using my (limited) imagination, but using video camera data appears to have different rules from this sensor.

The camera can reliably see objects at any distance (within say a building), providing certain conditions are met, such as there is nothing obscuring the 'line of sight', the objects are in an 'illuminated area' and their appearance includes contrasts such as colours or causes shadows. These characteristics probably assist 'stitching' different views together to cover a wider area. Also, the camera's characteristics are close to those of our eyes, so there is a familiarity with the data.

The sensor in discussion directly provides a detail that can only be inferred from camera views, namely the actual distance from the viewpoint to an object. In principle, by applying a 'scanning' approach, which could be just scanning the data from adjacent pixels across a fixed field of view, or an extension by moving the sensor or using multiple sensors, it is possible to directly build up a 'picture' of the absolute positions of the nearest surfaces. The limitations of the sensor mean this picture may have patches of 'unknown', because the surface is too far away, and the limited resolution of the scanner may cause it to miss small objects that would be noticeable to a visual camera of 'modest' resolution capabilities. These characteristics are quite different from the experience most of us with fully functional eyesight enjoy. I can only imagine this sensor is a little like the experience of a person who is unable to see with their eyes, feeling their way around in an unfamiliar place, with a stick.
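A minimal sketch of building such a 'picture' with patches of 'unknown' is a coarse occupancy grid. Everything here is an assumption for illustration: the grid is a plain dictionary, the 0.25 m cell size and 4 m range limit are arbitrary parameters, the robot pose is taken as known exactly, and stepping along the ray in cell-sized increments can skip cells on diagonals.

```python
import math

UNKNOWN, FREE, OCCUPIED = "?", ".", "#"


def cell_of(x_m, y_m, cell_m):
    """Grid cell index containing the world point (x_m, y_m)."""
    return (math.floor(x_m / cell_m), math.floor(y_m / cell_m))


def update_grid(grid, pose, bearings_deg, distances_m, cell_m=0.25, max_range_m=4.0):
    """Mark cells along each valid ray FREE and the cell at its end OCCUPIED.

    grid maps (ix, iy) -> state; cells never touched are implicitly UNKNOWN.
    pose is (x_m, y_m, heading_deg). Readings that are None or out of range
    are skipped, so the cells behind them stay unknown.
    """
    px, py, heading_deg = pose
    for bearing_deg, d in zip(bearings_deg, distances_m):
        if d is None or not (0.0 < d <= max_range_m):
            continue
        angle = math.radians(heading_deg + bearing_deg)
        dx, dy = math.cos(angle), math.sin(angle)
        for k in range(int(d / cell_m)):
            cell = cell_of(px + k * cell_m * dx, py + k * cell_m * dy, cell_m)
            if grid.get(cell, UNKNOWN) != OCCUPIED:
                grid[cell] = FREE
        grid[cell_of(px + d * dx, py + d * dy, cell_m)] = OCCUPIED
    return grid


if __name__ == "__main__":
    grid = update_grid({}, (0.0, 0.0, 0.0), [-10.0, 0.0, 10.0], [1.0, 1.2, None])
    for cell in sorted(grid):
        print(cell, grid[cell])
```

Calling `update_grid` again from a new pose adds to the same dictionary, which is the scanning/moving extension described above.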

Clearly, this contrast of capabilities and limitations between the two technologies suggests that they should form a good complementary pair of data sources for a data fusion approach ... but that is extending the complexity to yet another level.

Clearly there is a huge scope to research! Best wishes all, Dave

In fact, both of these had some basis in fact ... although both the leaves and snow started life as perfectly normal leaves and snow ... however, leaves can be compacted onto the rail by a train passing over them to the extent it takes a hammer and cold chisel, or equivalent, to shift them .. similarly, snow compacts to ice ... and both defeated the track cleaning equipment available at the time. I seem to remember development of a plasma arc torch to shift the leaves, though I don't know if it's in use

🤣 I initially thought you were joking... leaves / snow! I can't imagine either affecting a railway. I know the tourist one here (sometimes a real coal fired steam engine) is never slowed down by things like that... too much revenue potential to miss if leaves were a problem. Here in the colonies... we even had cowcatchers. Can't imagine it endeared them to the cows though. Maybe, cows got smarter.

Of course, when that happens, it takes a while for those electrons (or snow) to be replaced, so if a second electron is excited by a second photon just after the first one, there are no 'trigger happy' electrons waiting to avalanche and the second electron is just 'forgotten'.
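The 'forgotten' second photon can be sketched as a detector with a dead time: after each detection it ignores anything that arrives before it has recovered. This is a simplified toy model with made-up times, not figures from the sensor's documentation.

```python
def count_detections(arrival_times_ns, dead_time_ns):
    """Count photons registered by a detector that is blind for dead_time_ns after each hit."""
    detected = 0
    ready_at = float("-inf")  # the detector starts out ready
    for t in sorted(arrival_times_ns):
        if t >= ready_at:
            detected += 1
            ready_at = t + dead_time_ns  # recovering until this time
        # otherwise the photon arrives during recovery and is lost
    return detected


if __name__ == "__main__":
    arrivals = [0, 5, 12, 30]  # hypothetical photon arrival times in ns
    print(count_detections(arrivals, dead_time_ns=10))
    print(count_detections(arrivals, dead_time_ns=0))
```

With the example arrivals and a 10 ns dead time, the photon at 5 ns is the one that goes missing; in this model, the more ambient photons arrive, the more often the detector is still recovering when a laser return comes in.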

Very interesting stuff... At home had just barely enough Internet to see that I had 37 notifications pending. Decided, it'd be easier to go to the library and go through them. I've printed this out so I can take it back home and study it more thoroughly. The above alone was instantly understandable and far better than any documentation on this sensor I've seen.

I WILL GET BACK TO YOU ON YOUR DISSERTATION... I AM CERTAIN.

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

What happens if you simulate that ? Suppose you hack together a case with a lid on a hinge, put the sensor in the box and run it until the readings stabilize and then open the lid to see how long it takes to stabilize again. That might stop from "remembering" a previous state.

A more serious answer, this time... I don't think the box idea will work. It's opposite... anything close (being in the box) sets at the very next 15 Hz cycle. Opening the box from that state to a 4+ meter scan would have this hesitation. And when in bad light (sun, incandescents, halogens) it would also be slow to register. I never really noticed the startup time... it's long anyway, but, it might even have this big pause on boot up IF looking at 4+ meters, but not if in the box. Maybe, I can quantify it with some testing on boot up.
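One possible way to quantify that hesitation from logged data is to find how long the readings take to stay close to their final value. This is only a sketch: the log format (time in seconds, distance in mm), the 50 mm tolerance and the sample values are all invented, and it assumes the last logged reading is already the stable one.

```python
def settling_time_s(samples, tolerance_mm=50.0):
    """Time from the first sample until readings stay within tolerance of the final value.

    samples is a list of (time_s, distance_mm) pairs in time order.
    """
    if not samples:
        raise ValueError("need at least one sample")
    final_mm = samples[-1][1]
    settled_index = len(samples) - 1
    # Walk backwards until a reading falls outside the tolerance band.
    for i in range(len(samples) - 1, -1, -1):
        if abs(samples[i][1] - final_mm) > tolerance_mm:
            break
        settled_index = i
    return samples[settled_index][0] - samples[0][0]


if __name__ == "__main__":
    # Hypothetical 15 Hz log starting at the moment the scene changes.
    readings_mm = [200, 200, 200, 1500, 3900, 4000, 4010, 4005]
    log = [(i / 15.0, r) for i, r in enumerate(readings_mm)]
    print(f"settled after {settling_time_s(log):.3f} s")
```

Running the same measurement in good and bad light, and at short and long range, would show whether the pause really depends on those conditions.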

All I was inferring, perhaps inelegantly and gently facetiously (needing new beer fridge), was to start with more modest expectations, given the sensor also had limitations.

Agreed, like obstacle avoidance or detection of simple shapes that fill the whole 8x8 array.

It is probable that better sensors will become readily available,

Well better TOF sensors (more expensive TOF cameras) already exist.

An analogy with vision and the VL53L5CX sensor would be using an 8x8 pixel camera!! You could move such a camera about to build up a more detailed image.

This thread is really about what we can do with an array of 8x8 TOF sensors. They claim it can be used to map a building, but I can find no examples of it actually being used to do that.

@davee fyi @Inq @will Cameras not intended for astrophotography, which is for instance ALL models of the Canon DSLR lineup but one, have a filter internally applied to the sensor (the astro camera has that filter removed). The filter reduces the red spectrum to 'normal', but astro shooters want to highlight that Hydrogen glow in nebulas.

My point is, could a very narrow band-pass filter be applied to this sensor to almost eliminate all the ambient light, since the laser emitter is very wavelength specific? Would that not increase the signal to noise ratio, and does that imply better sensitivity?
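A back-of-envelope way to look at the signal-to-noise question is the usual simplified photon-counting model, where noise is the square root of the total counts, so SNR = S / sqrt(S + B) for S signal counts and B ambient counts. The counts and filter transmissions below are invented purely for illustration; they are not measurements of this sensor or of any real filter.

```python
import math


def snr_with_filter(signal_counts, ambient_counts,
                    signal_transmission=1.0, ambient_transmission=1.0):
    """Shot-noise-limited SNR after a filter passes the given fractions of each source."""
    s = signal_counts * signal_transmission
    b = ambient_counts * ambient_transmission
    return s / math.sqrt(s + b)


if __name__ == "__main__":
    # Hypothetical: 100 laser return counts swamped by 10,000 ambient counts.
    print(f"no extra filter:    {snr_with_filter(100, 10_000):.2f}")
    # Hypothetical narrow filter: keeps 90% of the laser, 1% of the ambient.
    print(f"narrow-band filter: {snr_with_filter(100, 10_000, 0.9, 0.01):.2f}")
```

In this model a filter helps even though it costs some laser signal, as long as it removes a much larger share of the ambient light; whether that translates into longer usable range depends on details the model ignores.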

@Inq I was watching a video about this sensor and they said you could use multiples of them. Does that help or hurt the mapping you want to do?

I'm not sure what would hurt more... the price per sensor, $25 versus an ultrasonic for less than $1, is one thing. I don't see (at the moment) any advantage to getting the readings simultaneously (microsecond separation) versus using a servo to turn it and get a second reading several 15Hz cycles later. As it is, the 4 meter limitation requires moving the bot around anyway, so I might as well assume moving / head turning anyway.
