While working on my swarm robot sensor subsystem I was struck by how much hand crafting went into programing a robot controller. The motors never rotate at the same speed, no two sensors respond to stimuli in the exact same way,..

One of my roboticist heroes, Dario Floreano, has written about using genetic algorithms to evolve neural networks in his robotics research. One of his early experiments was in solving a familiar robot task, i.e. getting a robot to move and avoid obstacles. A task that has come up many times in my work with robots. His idea was to use a “simple” feedforward neural network to map IR proximity sensors to the two motors of a differential drive robot and to use genetic algorithms to derive the fittest individual to perform the task. Wow! All new territory for me, but I’m hooked.

First on my list was to understand what a feedforward neural network with recurrent connections is and to get more details on how it was implemented in his experiment. Lots of jargon to learn.

Automatic Creation of an Autonomous Agent: Genetic Evolution of a Neural-Network Driven Robot (1994), Floreano, D., Mondada, F.

To err is human.

To really foul up, use a computer.

To err is human.

To really foul up, use a computer.

All that stuff has been of interest to me over the years. Tuning and designing ANNs of various types can be a bit of a black art. I could talk about neural networks (played with coding some) but have no practical application for an Arduino robot. I first read about genetic evolution in Richard Dawkins book "The Blind Watchmaker" where he gave a simple genetic program to evolve biomorphs.

https://www.richarddawkins.net/wp-content/uploads/sites/41/2014/06/Evolution-of-Evolvability.pdf

These produced visual variations rather then variations in sensory/motor behaviors.

I first read about genetic evolution in Richard Dawkins book "The Blind Watchmaker" where he gave a simple genetic program to evolve biomorphs

That's interesting. Did you ever implement the program and create bimorphs?

To err is human.

To really foul up, use a computer.

No I never did my own version of that program. It is really artificial selection as it is the human that selects the biomorphs to breed for the next generation much as we do with our domestic animals and plants.

I do remember playing with some genetic programs.

One thing that fascinates me is self assembly. Biological systems self assemble. They are trying to make little robots that can self assemble.

I’ve been reading about how genetic algorithms use fitness functions and it seems that Dawkins work with biomorphs is the classic example of subjective fitness. Something that an artist might use to create art or music.

Tom

To err is human.

To really foul up, use a computer.

One thing you might evolve is behaviors. A robot has input states that determine the output states. Part of the input state can be internal states (memory) not just the sensory input states. The mental tools I used to understand all this I first learned after reading Ross Ashby's book An Introduction to Cybernetics. It was written for people with limited math skills like biologists who had to study complex systems. Today the principles would come under the umbrella of Systems Thinking.

http://dspace.utalca.cl/bitstream/1950/6344/2/IntroCyb.pdf

In systems thinking a machine is a way of behaving (changing states) which may be mechanical or biological.

So for example you might mutate a set of robot vacuum cleaner's i/o connections and see what happens. The one with the i/o connections that cover more of the floor in the least amount of time is duplicated in the other robots and then they are all mutated (some connections changed) again. In theory they might become better and better at covering the floor area in the least amount of time.

Swarm behavior is an evolved behavior.

Genetic Evolution

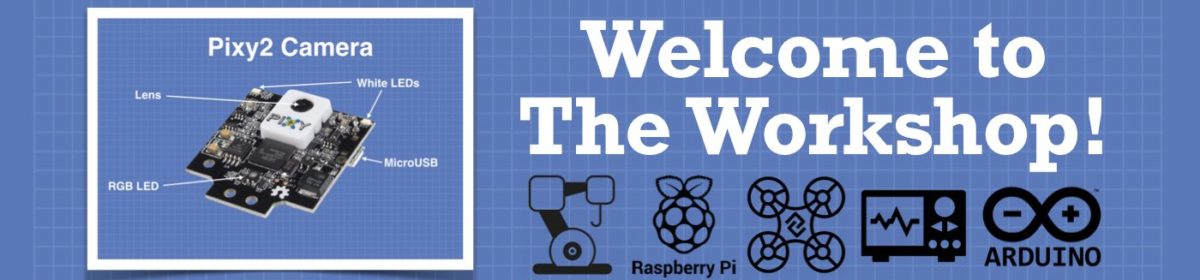

Being new to genetic algorithms I picked up a used copy of Goldberg’s book for about 10USD.

D. E. Goldberg. (1989). Genetic algorithms in search optimization and machine learning. Addison-Wesley, Reading, MA.

“Genetic algorithms operate on populations of strings, with the string coded to represent some underlying parameter set. Reproduction, crossover and mutation are applied to successive string populations to create new string populations.”

The book contains Pascal code that covers the high points that Floreano and Mondada write about. Namely,

- Roulette wheel selection

- Linear fitness scaling

- Biased mutations with probability of 0.2

- Single point crossover with probability of 0.1

- Encoding a chromosome with either floating point or binary values

The purpose of a fitness function is to give a numerical value to the utility, profit, or goodness of an individual chromosome. In this case the fitness measures navigation and the avoidance of obstacles as performed by a robot. Floreano and Mondada came up with an interesting fitness function that I describe later.

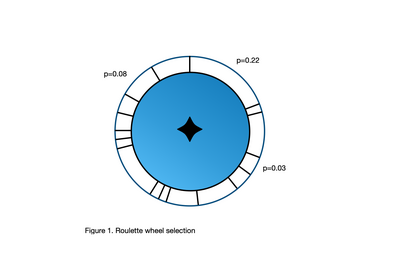

Roulette wheel selection is a way to visualize the reproductive probability of the individuals in a population. The slots in the wheel represent each individual and the width of the slot is proportional to their reproductive probability (fitness). Spin the wheel and the most fit genomes have a higher probability of being selected.

Goldberg explains that there are two problems with this technique. The first is that if one individual stands above all others in an early generation then that individual will be selected more often and will dominate our small population (premature convergence).

The second problem is when the fittest individual is only slightly better than the average. This turns the search for the fittest into a random walk among the mediocre. The solution for both cases is to scale the fitness values of a population before selection. This will reel in the outstanding individual and spread out the mediocre for a better search of the ‘genetic space’.

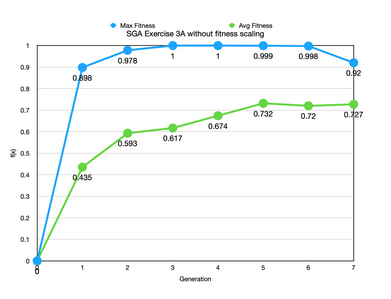

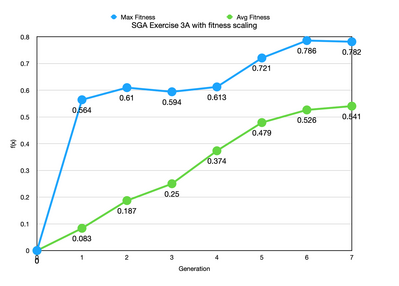

It’s pretty impressive how well this works. These two charts are the result of running a fitness scaling exercise in the book. In the run without scaling one individual was selected 9 times from a population of 30. In the fitness scaling run it was selected only twice.

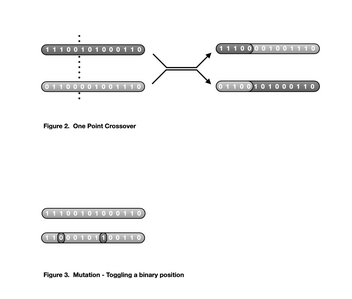

After the fittest are selected they are randomly paired up and their chromosome strings copied over to two children. If crossover is being performed then a random uniform number is used as a crossing point to divide the chromosome. The substrings of the two children are then swapped, see figure 2.

Mutation in their experiment was accomplished by toggling the bits in the chromosome string, figure 3.

All of the 22 neural network parameters were encoded as 4-bit precision binary values concatenated into one long chromosome string. Each individual in a generation had their chromosome decoded and applied to the neural network, with the two outputs used as wheel motor speeds. The robot, after setting the speed of each motor, was allowed to run for 300ms. After which their performance was recorded and stored as a fitness value.

The experimenters used this fitness function.

Phi = V (1 - sqrt(v)) (1 - i)

where 0 <= V <= 1

0 <= v <= 1

0 <= i <= 1

V is the sum of rotation speeds of the two wheels, v is the absolute value of the algebraic difference between the signed speed values of the wheels, and i is the normalized activation value of the infrared sensor with the highest activity. The higher the value the better the performance of the individual.

“These three components encourage - respectively - motion, straight displacement and obstacle avoidance, but do not say in what direction the robot should move.”

Nolfi, S. and Floreano, D. (2000). Evolutionary Robotics: Biology, Intelligence, and Technology of Self-Organizing Machines. MIT Press, Cambridge, MA.

V sounds like meters per second to me and wheel encoders can supply that by counting clicks per time period. This is the formula that I use to determine distance on my swarm robots:

pi * wheel diameter / gear ratio * encoder clicks per revolution; so

pi * 32mm = 100.53 and 75.81 * 12 = 909.7s; for a distance per click of 0.11050653 millimeters.

v uses the absolute value of the arithmetic difference between the two signed wheel speeds. Eg. if the left wheel speed is 0.1 m/s and the right wheel speed is -0.2 m/s then the algebraic difference is 0.3 m/s.

i uses the normalized activation value which is determined by dividing each sensor’s reading by the square root of the sum of the squares of all sensor output.

It’s been an interesting study so far. I’m still baffled as to how a genetic algorithm can find a set of neural network parameters that allow a robot to navigate and avoid obstacles. I get how the history of actions provided by the recurrent connects help with things like getting stuck in a canyon, but the search space just seems so huge…

Tom

To err is human.

To really foul up, use a computer.

That is really all about evolution (learning in the species not the individual). Learning and adaption (relearning) is also a useful function. Being born already knowing what to do is good in terms of brain resources and is found in grazing animals. The extra cost of a brain capable of learning pays off in predators and thus they have a childhood during which they learn things over and above their natural instincts. They are dependent on parenting to keep them alive and teach them things.

Actions need to be goal driven. The robot acts and then checks if the desired result has occurred. If it has not it needs to change its actions. A sensor may fail or a motor may malfunction so other means might be applied to achieve the goal. A mouse may use its legs to navigate a maze but tie its legs up and it will roll around the maze to find the cheese (the goal).

While working on my swarm robot sensor subsystem I was struck by how much hand crafting went into programing a robot controller. The motors never rotate at the same speed, no two sensors respond to stimuli in the exact same way,..

One of my roboticist heroes, Dario Floreano, has written about using genetic algorithms to evolve neural networks in his robotics research. One of his early experiments was in solving a familiar robot task, i.e. getting a robot to move and avoid obstacles. A task that has come up many times in my work with robots. His idea was to use a “simple” feedforward neural network to map IR proximity sensors to the two motors of a differential drive robot and to use genetic algorithms to derive the fittest individual to perform the task. Wow! All new territory for me, but I’m hooked.

First on my list was to understand what a feedforward neural network with recurrent connections is and to get more details on how it was implemented in his experiment. Lots of jargon to learn.

Automatic Creation of an Autonomous Agent: Genetic Evolution of a Neural-Network Driven Robot (1994), Floreano, D., Mondada, F.

I'm finding your thread here very interesting and I would like to learn more. When I hear AI or neural-network, I see the typical branching logic trees. I would like to get to the level of obstacle avoidance, face or even person recognition, or voice command recognition. I have some ESP32-S3's coming today (I hope) and they seem to make a big deal about the vector processor being able to do this last topic on the board.

With the 20-20 hindsight that you now have, do you have say... a list of links to topics or YT that you'd now recommend in an order that makes sense to a complete novice? OR... do you still recommend the order that you have in this thread?

Thanks!

VBR,

Inq

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

Well @inq, I don’t know what to tell you. I don’t have a methodology more like a curiosity about a few Roboticists.

Tom

To err is human.

To really foul up, use a computer.

So are you going to try to implement evolving obstacle avoidance in one of your swarm robots?

https://www.sciencedirect.com/science/article/pii/S1877050916308808

"This paper proposes an evolutionary algorithm for autonomous robot navigation. The results obtained in simulation enabled the development of new strategies. The simulator also made possible rapid evaluation of different parameters such as different mutation rates, reproduction, and selection strategies. The simulator produced a satisfactory collision-free behaviour on average after 15 generations. The solution developed by our system was embedded in a real robot and experiments demonstrated that this embedded system was able to successfully complete the autonomous navigation task in a real arena."

https://www.sciencedirect.com/science/article/pii/S1877050916308808

"This paper proposes an evolutionary algorithm for autonomous robot navigation. T

Did you happen to notice the first reference that they cite for this article?

Tom

To err is human.

To really foul up, use a computer.

@thrandell wrote:

"Did you happen to notice the first reference that they cite for this article?"

No. But I now see it is the author of the book you referenced.

It might be of interest to others if you can code such an algorithm in one of your swarm robots.

"I took out the initialization and print statements for brevity."

I like complete code I can run with print outs of what it happening.

Execution

So I had a bit of an epiphany after re-reading Floreano’s publications on their experiment and what they meant by ‘actions’.

My initial understanding of how the genetic algorithm and neural network worked together was wrong. The fitness of a chromosome is a measurement of how much the robot improves its situation after starting from the parameters encoded in the chromosome and completing 80 ‘actions'. An action entails reading the IR sensors, applying them and the recurrent output values to the neural network, running the motors for 300 ms, and collecting V, v, & i. After 80 ‘actions’ the V, v, & i values are averaged and plugged into the fitness function. This makes sense, right? When it’s all said and done the robot starts with a static set of neural network parameters and the only things to guide it are the changes to the IR sensor readings and the recurrent connection values.

Anyway, this is the way I believe the experiment was conducted:

- Start with an individual’s chromosome which is encoded with 16 sensor synapses, 4 recurrent connections and 2 output bias weights.

- The robot pulls in sensor input, normalizes it and using it along with the synaptic weights specified in the chromosome the neural network does its magic to determine the motor’s direction and speed.

- The robot is allowed to run with these settings for 300ms after which the wheel velocity, straight displacement, and highest sensor activation are recorded. After this initial action the IR sensors are read again and the recurrent connection values are feed back into the network. This action is repeated for another 79 times after which the data is summed, averaged, and applied to the fitness function.

- In the early populations a pair of random speeds was applied for 5 seconds to reposition a robot that was probably stuck or facing a wall when the individual was changed (repositioning strategy).

- After all individuals in the population have run, the genetic algorithm takes over to select the fittest individuals. They are paired up and crossover and mutation functions are run to produce two children that will replace their parents in the population.

- This is repeated for 100 generations or until fitness convergence is reached or the fitness stops improving.

The experiments parameters:

|

Population size |

80 |

|

Generations number |

100 |

|

Crossover probability |

0.1 |

|

Mutation probability |

0.2 |

|

Mutation range |

+/-0.5 |

|

Initial weight range |

+/-0.5 |

|

Final weight range |

not bounded |

|

Life length |

80 actions |

|

Action duration |

300ms |

Their results:

Tom

To err is human.

To really foul up, use a computer.