I'm studying a simple Perceptron and I have questions about which input conditions are trainable and which are not.

Here's a schematic diagram of a 3-input Perceptron that I'm currently studying.

If anyone is familiar with this type of neural network, I have questions for you.

Thanks

DroneBot Workshop Robotics Engineer

James

A long time ago I was interested in neural nets and how to program them so what is your question?

The single layer Perceptron is not capable of learning an XOR relationship.

https://chih-ling-hsu.github.io/2017/08/30/NN-XOR
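For anyone wondering why XOR in particular is out of reach, here is a short sketch of the usual argument. It assumes a 2-input unit that outputs 1 when $w_1 x_1 + w_2 x_2 + b > 0$ and 0 otherwise; the symbols $w_1$, $w_2$ and $b$ are just notation picked for this sketch, not taken from the linked page. The four rows of the XOR table would require:

```latex
\begin{aligned}
(0,0) \mapsto 0 &: \quad b \le 0 \\
(0,1) \mapsto 1 &: \quad w_2 + b > 0 \\
(1,0) \mapsto 1 &: \quad w_1 + b > 0 \\
(1,1) \mapsto 0 &: \quad w_1 + w_2 + b \le 0
\end{aligned}
```

Adding the two middle lines gives $w_1 + w_2 + 2b > 0$, so $w_1 + w_2 + b > -b \ge 0$, which contradicts the last line. No choice of weights and bias satisfies all four rows at once.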

A long time ago I was interested in neural nets and how to program them so what is your question?

I understand that a Perceptron can only distinguish between input data that is linearly separable. What I am trying to understand is how to know when a specific data set is indeed linearly inseparable. I understand how to do this via graphing to see if the data can be separated via a line or plane. But it's unclear to me exactly how to plot the input data in order to be able to see this.

What I've done is write a program in Python to emulate the Perceptron, train it for different training outputs and then analyze it afterward to see if the training worked.

Here is the Python program. This program requires NumPy.

import numpy as np

np.set_printoptions(formatter={'float': '{: 0.1f}'.format})

# Perceptron math functions.
def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_derivative(x):
    # x is expected to already be a sigmoid output, so this is s * (1 - s).
    return x * (1 - x)
# END Perceptron math functions.

# The first column of 1's is used as the bias.
# The other 3 cols are the actual inputs, x3, x2, and x1 respectively
training_inputs = np.array([[1, 0, 0, 0],
                            [1, 0, 0, 1],
                            [1, 0, 1, 0],
                            [1, 0, 1, 1],
                            [1, 1, 0, 0],
                            [1, 1, 0, 1],
                            [1, 1, 1, 0],
                            [1, 1, 1, 1]])

# Setting up the training outputs data set array
num_array = np.zeros((1, 8), dtype=int)

for num in range(25):
    # Write the test number as 8 binary digits, one target output per input row.
    bnum = bin(num).replace('0b', "").rjust(8, "0")
    for i in range(8):
        num_array[0, i] = int(bnum[i])
    training_outputs = num_array.T
    # training_outputs is an 8x1 column; its transpose has the form [[n,n,n,n,n,n,n,n]]
    # END of setting up training outputs data set array

    # ------- BEGIN Perceptron functions ----------
    np.random.seed(1)
    synaptic_weights = 2 * np.random.random((4, 1)) - 1
    for iteration in range(20000):
        input_layer = training_inputs
        outputs = sigmoid(np.dot(input_layer, synaptic_weights))
        error = training_outputs - outputs
        adjustments = error * sigmoid_derivative(outputs)
        synaptic_weights += np.dot(input_layer.T, adjustments)
    # ------- END Perceptron functions ----------

    # Convert to clean output 0, 0.5, or 1 instead of the messy calculated values.
    # This is to make the printout easier to read.
    # This also helps with testing analysis below.
    for i in range(8):
        if outputs[i] <= 0.25:
            outputs[i] = 0
        elif outputs[i] < 0.75:
            outputs[i] = 0.5
        else:
            outputs[i] = 1
    # End convert to clean output values.

    # Begin Testing Analysis
    # This is to check to see if we got the correct outputs after training.
    evaluate = "Good"
    test_array = training_outputs
    for i in range(8):
        # Evaluate for a 0.5 error.
        if outputs[i] == 0.5:
            evaluate = "The 0.5 Error"
            break
        # Evaluate for incorrect output
        if outputs[i] != test_array[i]:
            evaluate = "Wrong Answer"
    # End Testing Analysis

    # Printout routine starts here:
    print_array = test_array.T
    print("Test#: {0}, Training Data is: {1}".format(num, print_array[0]))
    print("{0}, {1}".format(outputs.T, evaluate))
    print("")

Here is the output of the first 25 tests:

Test#: 0, Training Data is: [0 0 0 0 0 0 0 0]

[[ 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0]], GoodTest#: 1, Training Data is: [0 0 0 0 0 0 0 1]

[[ 0.0 0.0 0.0 0.0 0.0 0.0 0.0 1.0]], GoodTest#: 2, Training Data is: [0 0 0 0 0 0 1 0]

[[ 0.0 0.0 0.0 0.0 0.0 0.0 1.0 0.0]], GoodTest#: 3, Training Data is: [0 0 0 0 0 0 1 1]

[[ 0.0 0.0 0.0 0.0 0.0 0.0 1.0 1.0]], GoodTest#: 4, Training Data is: [0 0 0 0 0 1 0 0]

[[ 0.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0]], GoodTest#: 5, Training Data is: [0 0 0 0 0 1 0 1]

[[ 0.0 0.0 0.0 0.0 0.0 1.0 0.0 1.0]], GoodTest#: 6, Training Data is: [0 0 0 0 0 1 1 0]

[[ 0.0 0.0 0.0 0.0 0.5 0.5 0.5 0.5]], The 0.5 ErrorTest#: 7, Training Data is: [0 0 0 0 0 1 1 1]

[[ 0.0 0.0 0.0 0.0 0.0 1.0 1.0 1.0]], GoodTest#: 8, Training Data is: [0 0 0 0 1 0 0 0]

[[ 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0]], GoodTest#: 9, Training Data is: [0 0 0 0 1 0 0 1]

[[ 0.0 0.0 0.0 0.0 0.5 0.5 0.5 0.5]], The 0.5 ErrorTest#: 10, Training Data is: [0 0 0 0 1 0 1 0]

[[ 0.0 0.0 0.0 0.0 1.0 0.0 1.0 0.0]], GoodTest#: 11, Training Data is: [0 0 0 0 1 0 1 1]

[[ 0.0 0.0 0.0 0.0 1.0 0.0 1.0 1.0]], GoodTest#: 12, Training Data is: [0 0 0 0 1 1 0 0]

[[ 0.0 0.0 0.0 0.0 1.0 1.0 0.0 0.0]], GoodTest#: 13, Training Data is: [0 0 0 0 1 1 0 1]

[[ 0.0 0.0 0.0 0.0 1.0 1.0 0.0 1.0]], GoodTest#: 14, Training Data is: [0 0 0 0 1 1 1 0]

[[ 0.0 0.0 0.0 0.0 1.0 1.0 1.0 0.0]], GoodTest#: 15, Training Data is: [0 0 0 0 1 1 1 1]

[[ 0.0 0.0 0.0 0.0 1.0 1.0 1.0 1.0]], GoodTest#: 16, Training Data is: [0 0 0 1 0 0 0 0]

[[ 0.0 0.0 0.0 1.0 0.0 0.0 0.0 0.0]], GoodTest#: 17, Training Data is: [0 0 0 1 0 0 0 1]

[[ 0.0 0.0 0.0 1.0 0.0 0.0 0.0 1.0]], GoodTest#: 18, Training Data is: [0 0 0 1 0 0 1 0]

[[ 0.0 0.0 0.5 0.5 0.0 0.0 0.5 0.5]], The 0.5 ErrorTest#: 19, Training Data is: [0 0 0 1 0 0 1 1]

[[ 0.0 0.0 0.0 1.0 0.0 0.0 1.0 1.0]], GoodTest#: 20, Training Data is: [0 0 0 1 0 1 0 0]

[[ 0.0 0.5 0.0 0.5 0.0 0.5 0.0 0.5]], The 0.5 ErrorTest#: 21, Training Data is: [0 0 0 1 0 1 0 1]

[[ 0.0 0.0 0.0 1.0 0.0 1.0 0.0 1.0]], GoodTest#: 22, Training Data is: [0 0 0 1 0 1 1 0]

[[ 0.0 0.0 0.0 1.0 0.0 1.0 1.0 1.0]], Wrong AnswerTest#: 23, Training Data is: [0 0 0 1 0 1 1 1]

[[ 0.0 0.0 0.0 1.0 0.0 1.0 1.0 1.0]], GoodTest#: 24, Training Data is: [0 0 0 1 1 0 0 0]

[[ 0.0 0.0 0.0 0.0 1.0 0.0 0.0 0.0]], Wrong Answer

As you can see it works in some situations but not in others.

What I'm trying to learn is how I can determine beforehand which situations will fail.

In other words, using my program I can see by results which situations fail. But that is information after the fact. I want to understand why these specific cases are failing and be able to determine that without having to actually put them through this training process.

I'm seeing two types of errors.

One is "The 0.5 Error" which occurs in some cases. The other error "Wrong Answer" is when the output is still in binary but does not produce the correct result.

I'm wondering if both of these errors are caused by the same limitation?

I'm suspecting that these specific cases violate the linear separability of the input data. But it's not clear how to determine this for these specific situations.

So I'm not looking for a way to make the Perceptron behave any differently. I totally accept that these cases are simply beyond the capability of a Perceptron.

What I'm looking for is a full understanding of why these specific cases fail. If it is due to the linear inseparability of the input data then I'd like to know how I can determine this beforehand.

I'm thinking that this will be associated with 3D graphing since there are three inputs. But it's unclear to me how to determine by graphing the input data when to expect it to fail.
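One check that needs no training and no graphing can be sketched as follows. It rests on a simple observation: if two inputs that should give 1 add up (coordinate by coordinate) to the same vector as two inputs that should give 0, then no set of weights and bias can work, because the two weighted sums would have to be both positive and non-positive at once. The helper names `outputs_for` and `find_conflict` are made up for this sketch, and the row order is assumed to match `training_inputs` in the program above. Note the check is only claimed in one direction here: finding a conflict proves the case is not linearly separable, while finding none is not offered as a proof that it is.

```python
from itertools import product, combinations_with_replacement

# All 8 binary inputs, in the same row order as training_inputs (x3, x2, x1).
CORNERS = list(product((0, 1), repeat=3))

def outputs_for(num):
    """Turn a test number into its 8 target outputs, like the program above does."""
    return [int(bit) for bit in bin(num)[2:].rjust(8, "0")]

def add(p, q):
    """Coordinate-wise sum of two input vectors."""
    return tuple(i + j for i, j in zip(p, q))

def find_conflict(targets):
    """Return ((a, b), (c, d)) where a + b == c + d, a and b map to 1,
    c and d map to 0. Return None if no such pairs exist."""
    ones = [x for x, t in zip(CORNERS, targets) if t == 1]
    zeros = [x for x, t in zip(CORNERS, targets) if t == 0]
    zero_sums = {}
    for c, d in combinations_with_replacement(zeros, 2):
        zero_sums.setdefault(add(c, d), (c, d))
    for a, b in combinations_with_replacement(ones, 2):
        if add(a, b) in zero_sums:
            return (a, b), zero_sums[add(a, b)]
    return None

flagged = [n for n in range(25) if find_conflict(outputs_for(n)) is not None]
print(flagged)                        # [6, 9, 18, 20, 22, 24]
print(find_conflict(outputs_for(6)))  # one conflicting pair of pairs for Test# 6
```

For the first 25 tests this flags exactly the six cases that the run above marks as "The 0.5 Error" or "Wrong Answer", which suggests both kinds of failure come from the same limitation. For Test# 6, for example, the inputs 101 and 110 should give 1 while 100 and 111 should give 0, and both pairs add up to (2, 1, 1): that is an XOR on x2 and x1 hiding in the x3 = 1 face of the cube.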

DroneBot Workshop Robotics Engineer

James

I found this site that tells how to calculate the decision boundaries of a perceptron

Calculate the decision boundaries of a single perceptron graphically

The problem is that this method requires that we have already run it through the perceptron and are using the weights that the perceptron had generated. That doesn't help much if we want to know whether a data set will work with a perceptron before we build it.

Based on what I've learned so far it appears that there is no way to guarantee the output of a perceptron without actually using the perceptron first to see if it works.

That seems a bit strange, but from everything I've been reading thus far this appears to be the situation. So the program I've already written that tells me when the perceptron works and when it doesn't work appears to be the only valid solution to this problem.

I was hoping there was some deeper underlying math that could be used. But apparently not, since all the mathematics appears to need the values of all the weights first, and there's no way to get those until we've run it through a perceptron.

So this is a little bit disappointing. A perceptron turns out to be something that can only be designed by trial and error? That's weird. I was hoping for something better than this.

DroneBot Workshop Robotics Engineer

James

Finally! I've made major progress on this on my own. No thanks to the Internet as people who are into A.I. have been telling me incorrect information. Why they do that I just don't know. I guess it can only be because they don't truly understand what's going on themselves.

Just to complete my rant on this, let me post a reply I got to one of my questions on an A.I. forum:

"Instead of explaining neural networks by it's inner structure, the better approach in understanding perceptrons is to research why they are useful."

Why not just confess that he has no clue how to answer my question?

In any case, various others have told me that the only way to calculate the decision boundary is to first know what the synaptic weights are. However, I've discovered that this isn't entirely true. At least it's certainly not true in some cases. I just reduced the problem to a 2-input perceptron. This allows the decision boundary to be plotted in 2D, which was quite easy. So I plotted all possible I/O data solutions and predicted the behavior of a 2-input perceptron. I predicted that it should fail in only two cases. Then I modeled the 2-input perceptron and, by golly, it failed in precisely the two situations that I had predicted.
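The 2-input prediction can also be cross-checked without any plotting. The sketch below tries every combination of weights and bias on a small grid and records which of the 16 truth tables ever come out. The grid values (weights -1, 0, 1 and biases at half-integers) are an assumption chosen for this sketch, as are the names `INPUTS`, `WEIGHTS`, `BIASES` and `realizable`. A table that appears is certainly separable, since the grid hands you working weights. A table that never appears on a grid is only suspect in general, although for the two left over here the inseparability is well established.

```python
from itertools import product

INPUTS = list(product((0, 1), repeat=2))   # (0,0), (0,1), (1,0), (1,1)
WEIGHTS = (-1, 0, 1)                        # assumed search grid for w1 and w2
BIASES = (-1.5, -0.5, 0.5, 1.5)             # assumed search grid for the bias

def realizable():
    """Collect every truth table that some (w1, w2, b) on the grid produces."""
    tables = set()
    for w1, w2, b in product(WEIGHTS, WEIGHTS, BIASES):
        # The unit outputs 1 when the weighted sum plus bias is positive.
        tables.add(tuple(int(w1 * x1 + w2 * x2 + b > 0) for x1, x2 in INPUTS))
    return tables

separable = realizable()
failures = [t for t in product((0, 1), repeat=4) if t not in separable]

print(len(separable))   # 14
print(failures)         # [(0, 1, 1, 0), (1, 0, 0, 1)]
```

The two tables left over are XOR and its complement (XNOR), which fits the two failures described above.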

That's much better!

Now I can go back to the 3-input perceptron and do the same thing with 3D graphing.

In the meantime my brain is frying. I've been learning NumPy, Pandas, Linear Algebra, and refreshing my calculus just to get this far. This is like being in college all over again. I love it!

This is well worth the study. I'll understand these perceptrons inside and out. Unlike the guy who just told me to forget about trying to understand their structure and just research what they can be useful for. What the heck kind of advice is that? I already know what they are useful for. Why does he think I'm studying them in the first place?

Sometimes I really can't help but wonder what goes through some people's minds.

DroneBot Workshop Robotics Engineer

James

I stayed up all night long working on the 3D graphics problem. Most of the problem I was having was trying to understand how to use matplotlib in Python. I'm working in 3D with a 4th dimension expressed in color.

In any case it was well worth staying up all night for. I solved the problem completely. And those A.I. "experts" were wrong. You can predict the decision boundaries of a 3-input perceptron without knowing what the synaptic weights will be. In fact, by doing this prediction you can actually find suitable weights to program the perceptron ahead of time with. This is exactly how I thought this would work.

I'm going to have to make a video on this because it's far too complex to try to explain in a written article. Not that it couldn't be done, but that would take a lot more work on my part to prepare all the graphics it would take to explain it.

In any case, here's a quick preview of the 3D plot of a 3-input perceptron. It actually plots out to 8 points because there are 2^3 = 8 three-digit binary numbers.

Here's the plot of all possible inputs: (binary inputs only here). Obviously if we allow for real number inputs then there are infinitely many combinations of inputs even on a 3-input perceptron.

Anyway, here's the very simple 3D plot:

It sure looks simple enough. I kind of knew that this is what it would look like, but learning how to graph this was not easy when I didn't know how the graphing software worked.

The 8 possible inputs are simply the 8 corners of a cube. That's simple enough. But then there are 2^8 = 256 ways to assign 0/1 outputs to those corners, and each one is a different way of trying to slice this thing up. And that's what I wanted to see. Now that I have this in a graphics program that I can manipulate I can easily go through all 256 states.

I just now went through a bunch of them manually to be sure this was working and so far it's predicted everything perfectly.

There is no need to know any synaptic weights. All you need to know are the input states and expected output states. From there you can actually come up with weights that will work.
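As a non-graphical illustration of getting weights straight from the input states and expected outputs, here is a minimal brute-force sketch. It is not the graphical method described in this post. It simply tries every integer weight from -2 to 2 and every half-integer bias from -4.5 to 4.5, and remembers the first combination that produces each truth table. The grid limits and the names `table_for`, `SOLUTIONS` and `find_weights` are assumptions made for this sketch. The unit is taken to output 1 when the weighted sum plus bias is positive, which is where a sigmoid output crosses 0.5.

```python
from itertools import product

CORNERS = list(product((0, 1), repeat=3))       # rows ordered x3, x2, x1
WEIGHTS = range(-2, 3)                           # assumed grid: -2 .. 2
BIASES = [k + 0.5 for k in range(-5, 5)]         # assumed grid: -4.5 .. 4.5

def table_for(w3, w2, w1, b):
    """Outputs of a threshold unit on all 8 corners of the cube."""
    return tuple(int(w3 * x3 + w2 * x2 + w1 * x1 + b > 0)
                 for x3, x2, x1 in CORNERS)

# Map each reachable truth table to the first weights found for it.
SOLUTIONS = {}
for w3, w2, w1, b in product(WEIGHTS, WEIGHTS, WEIGHTS, BIASES):
    SOLUTIONS.setdefault(table_for(w3, w2, w1, b), (w3, w2, w1, b))

def find_weights(targets):
    """Return (w3, w2, w1, bias) for the 8 target outputs, or None."""
    return SOLUTIONS.get(tuple(targets))

print(len(SOLUTIONS))                             # 104
print(find_weights([0, 0, 0, 0, 0, 1, 1, 1]))     # some working weights for Test# 7
print(find_weights([0, 0, 0, 0, 0, 1, 1, 0]))     # None (Test# 6)
```

Only 104 of the 256 possible output assignments turn up, which matches the known count of linearly separable functions of three binary inputs. Any weights this returns could be loaded into the perceptron directly. A sigmoid unit would want them scaled up so the outputs sit close to 0 and 1 rather than near 0.5.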

This is great. I did it! I solved it myself even as the A.I. "experts" were telling me that it can't be done.

Now I'm off to bed at about 5:30 AM!

Shame on me. A 70-year-old who thinks he's still a 20-year-old college student. Me bad.

DroneBot Workshop Robotics Engineer

James

Wow! Double Wow!!

Have you gone off the deep end?

You have totally lost me. Back to the 3D Printer!

SteveG

Wow! Double Wow!!

Have you gone off the deep end?

You have totally lost me. Back to the 3D Printer!

It's actually extremely simple. I'm truly anxious to make a video on it, but unfortunately that's going to have to wait until the onset of winter. Strangely, as simple as it is it will require quite a bit to actually explain it. But once you see what's actually going on you'll have the "Ah ha!" moment and see the simplicity of it.

Last night in bed I was thinking about the quote from the guy on the A.I. forum and I started laughing so hard I could hardly fall asleep.

"Instead of explaining neural networks by it's inner structure, the better approach in understanding perceptrons is to research why they are useful."

"The better approach in understanding perceptrons is to research why they are useful."

Just think of how silly this truly is.

Replace "neural networks" and "perceptrons" with "cars" in the above quote and imagine a mechanic asking on a mechanics forum questions about how cars actually work. Think of how utterly silly this answer would be.

"Instead of explaining cars by it's inner structure, the better approach in understanding cars is to research why they are useful."

A student mechanic would look at that sentence and say, "What? I already know what cars are useful for, I want to know how they work so I can design them and repair them."

Apparently this is the mindset of the person who made that post. They aren't even interested in how perceptrons work. All they are interested in is how to use them. I mean, I can certainly see a place for that. There are a lot of people who drive cars and have no clue how cars work. So there is that aspect to it.

But you don't tell a mechanic this sort of thing.

Obviously the person who made this reply has no idea where I'm headed with this. I want to build analog perceptrons and neural networks based on op-amps and FPGAs, so I absolutely need to understand how they work "under the hood". Knowing what neural networks are useful for is basically irrelevant. And besides, just like all auto mechanics who understand how cars actually work also understand what cars are useful for, the same thing applies here. I already know what neural networks are useful for. If I didn't, why on earth would I want to build one?

Sorry for breaking into this rant again. But it is amazing the different perspectives people have on things. That guy on the A.I. forum doesn't even know how neural networks work, apparently. All he's interested in is what he can use them for.

He has revealed himself as an "end-user".

Nothing wrong with that I guess. The world is made up primarily of "end-users" and that's what keeps the design engineers in business.

But gee whiz, you'd think on an A.I. forum the people there would know how neural networks work.

DroneBot Workshop Robotics Engineer

James

My only minuscule knowledge of neural networks, which is all of 3 hours old, comes from watching this video.

It seemed to be a very understandable explanation, so I copy it here in case anyone else (apart from Robo Pi, who seems to be well past needing an introduction and is something of an expert, I think) can benefit from an easily digestible introduction.

Yes, videos from 3blue1brown are probably the best explanation you'll find on the Internet for how neural networks work. However, there are several things that he mentions in this video, and some things he fails to mention that are important to understand.

To begin with, there isn't just one way of doing this. In fact, the field of designing a neural network is really in its infancy even though the concept has been around for several decades. As he mentions very briefly, the number and arrangement of the hidden layers is entirely open to the choice of the designer. And a large part of the research in neural network design has to do with how different individual designers choose to lay out their neural networks. The example he gave is only one possible approach. But obviously this is an approach that produces fairly reliable results. So it works. How efficient it might be is a whole other question.

The second thing he doesn't really say much about is that there are three different overall states that a neural network can take on.

- Before Training

- During Training

- After Training

It is important to recognize these three different situations if you're going to set out designing a neural network. It's also important to understand the difference between states 2 and 3.

To begin with, all the calculus he was referring to is only used in state 2. That is to say, the gradient math is used during the learning process, when all the synaptic weights are being determined. Once that learning process is finished, the final state 3 (after training) no longer does any of that training math. The final neural network simply takes in the inputs, runs them through the fixed weights (a weighted sum and an activation function at each neuron), and produces a single output in this case. It has 10 physical outputs, but if it's working correctly only one of them will be strongly active. After all, strongly activating more than one output in this case would mean that it thinks the input represents more than one numeral.

So there are no training calculations going on after training. Those calculations were only used to create the synaptic weights. Once the synaptic weights are known, the only arithmetic left is the forward pass: multiply the inputs by the weights, add them up, and apply the activation function. I think this is important to understand, as many people get the idea that a neural network needs to go through all of the training calculations every time it is asked to recognize something. But that's not true. The training calculations are only required during training.
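To make the difference between state 2 and state 3 concrete, here is a minimal sketch of what a single trained perceptron still has to do each time it is used: one weighted sum and one activation, with no derivatives and no weight updates. The weights in `trained` are hypothetical values picked by hand for this sketch so that the unit behaves like "x3 AND x1". They are not the output of any training run in this thread, and `predict` is just an illustrative name.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(weights, inputs):
    """One forward pass: bias plus weighted inputs, sigmoid, then a 0.5 cutoff."""
    total = sum(w * x for w, x in zip(weights, (1, *inputs)))  # leading 1 feeds the bias
    return 1 if sigmoid(total) > 0.5 else 0

# Hypothetical fixed weights in the order [bias, w3, w2, w1].
trained = [-15.0, 10.0, 0.0, 10.0]

print(predict(trained, (1, 0, 1)))   # 1
print(predict(trained, (1, 1, 0)))   # 0
```

This is why a trained unit responds so quickly: the per-input work is a handful of multiplications and additions, which is also what an op-amp or FPGA version would have to implement.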

The other thing that I have discovered is that just about everyone who works on neural networks seems to be under the belief that there is no way to determine what synaptic weights should be prior to training the neural network. It turns out that this is actually not true. There are ways to predetermine what the synaptic weights should be. And if you know what the synaptic weights are before training you can just set those in place and skip the training step entirely. This would allow you to design a neural network and skip over the whole calculus and linear algebra training step entirely and go straight to having a trained neural net.

Showing how to determine what the synaptic weights need to be before training is part of what I am working on. This is why I want to start with just a single perceptron instead of jumping straight into a complex neural network of perceptrons.

DroneBot Workshop Robotics Engineer

James

It was interesting to read your comments on the video I found that introduces Neural Networks. I sort of understood how this technique would be useful for a computer program to distinguish if a bunch of pixels is representing numbers or letters where the actual representation of those numbers and letters can vary. Extrapolating on from this I can see that other bunches of pixels can be distinguished as eyes, mouths, chins etc. and a collection of such distinguished objects can then be stored as a machine-recognisable individual's face.

But face recognition must get extremely complicated I would think. The computer vision blob detection video mentioned by @casy in the other AI thread is more what I understand as achievable.

I await to be convinced that delving further into Neural Networks would be productive for me. It's a bit like the consideration for using ROS as opposed to plain old C or Python. Presumably there is an advantage, but will the effort required to skill up be worth it? For Neural Networks I suppose the answer is yes if one wants to do facial recognition, but no if one is just recognising a blob of red. So at the moment I'm reading what you and other experts in other areas achieve in a wait-and-see mode. It is really great to read all the exploits that you and others achieve in this robotic area, and I look forward to any videos you may get to produce in the winter months.

It's a bit like the consideration for using ROS as opposed to plain old C or Python. Presumably there is an advantage, but will the effort required to skill up be worth it?

I agree about ROS. ROS is not for me. I'd rather just write my own code in C#, C++, or Python. In fact, I prefer to write my own code because this way I know exactly what's going on under the hood. Using ROS I have no idea how they are actually doing things behind the scenes.

I await to be convinced that delving further into Neural Networks would be productive for me.

The key is to stand on the shoulders of others.

I have no plans on trying to design neural networks to do what has already been done. I'm not going to waste my time reinventing the wheel from scratch.

However, there are still ways that I can use neural networks to improve on what's already available.

For example, there already exists facial recognition software that can instantly produce various important features of a face. So I'm not going to try to reinvent that. But what I can do is build a neural network that can use the output of a facial recognition software to map those features directly to the faces of my friends and family.

In other words, generic facial recognition software just recognizes features. But I can then take those features as the input to my neural network (instead of starting with a gazillion pixels). And then I only need to have my neural network reduce those facial features to identify the faces of specific people.

And remember, once trained a neural network can do this instantly with no need for all those training calculations. So potentially, with just a little bit of work, I could have my robot be able to recognize my friends and call them by name. Something a general facial feature recognition program could not do on its own.

So don't think of having to recognize blobs via looking at individual pixels. Those programs already exist. The only reason to learn how to do that would be as a learning experience.

Instead we want to stand on the shoulders of giants.

So we will be interested in analyzing data that other software provides to us.

We're not going to start from scratch trying to figure out what's in a picture pixel by pixel. Those wheels have already been invented. We'll be using specialized neural networks to analyze the output of those devices.

So we'll be working with a lot less input data required. In fact, focusing on how to work with the least amount of input data possible is the key to simplifying the whole shebang.

I wish I had time to focus on making up some videos on this. But old man winter is breathing down my neck. So I need to prepare for that first.

DroneBot Workshop Robotics Engineer

James

My understanding of neural networks is that they are a collection of computing units that can be wired up via a feedback process (learning) to produce some desired input/output function.

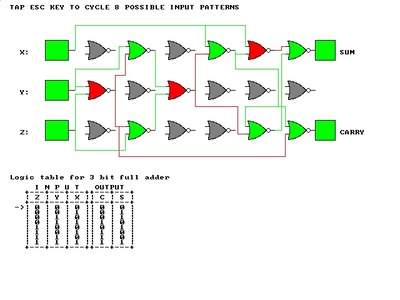

Now a computing unit can be physical or it can be emulated in software. There is no functional difference except in speed. In the example pictured below I used an array of NOR gates as computing units. The input of each column of NOR gates can be connected to the output of a NOR gate in the previous column. Thus the computed outputs of one column (layer) become the inputs of the next column.

So the question is how do you "wire up" the network of computing units to perform some function?

First decide on what that function is to be. In this case it was a 3 bit full adder.

Because a full adder is a simple function you can actually work out the wiring logically and fully understand how it does what it does. However in complex functions, like recognising the value of a backgammon board state, it is too complex to work out logically so instead you use some algorithm to rewire the network until hopefully it hits upon a working set of connections via a feedback process that strengthens or weakens the connections according to how well it does for each trial.
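As a small illustration of working out the wiring by logic alone, here is a sketch of a full adder built only from NOR units. It is not the wiring from the picture. It is one textbook-style arrangement, and it reads "3 bit full adder" as an adder with three input bits (a, b and carry in), which is an assumption. The NOR itself is written as a threshold unit with weights -1, -1 and bias 0.5 to show that each gate is also a tiny hand-wired perceptron. All function names are made up for this sketch.

```python
from itertools import product

def nor(a, b):
    """NOR as a threshold unit: fires only when -a - b + 0.5 is positive."""
    return 1 if (-1 * a) + (-1 * b) + 0.5 > 0 else 0

# Every other gate below is wired purely out of NOR units.
def not_(a):
    return nor(a, a)

def or_(a, b):
    return not_(nor(a, b))

def and_(a, b):
    return nor(not_(a), not_(b))

def xor(a, b):
    # True when "both on" and "both off" are each false.
    return nor(and_(a, b), nor(a, b))

def full_adder(a, b, carry_in):
    """Return (sum_bit, carry_out) for three input bits."""
    partial = xor(a, b)
    sum_bit = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return sum_bit, carry_out

for a, b, c in product((0, 1), repeat=3):
    print(a, b, c, "->", full_adder(a, b, c))
```

Note that the XOR needs more than one layer of units, which is the same limitation the single perceptron runs into earlier in the thread.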

The problem is you never know how it works only that for the examples so far it does work. It may fail badly with new data. The classic example was a neural network trained on a set of images and given feedback as to the presence or absence of a tank in the image. It appeared to learn. However when tried on a new set of images it failed. It turned out the images with tanks present were taken on a sunny day and those with tanks absent were taken on an overcast day. The neural network had simply learned to separate dark and light images!!

https://en.wikipedia.org/wiki/TD-Gammon

"TD-Gammon's strengths and weaknesses were the opposite of symbolic artificial intelligence programs and most computer software in general: it was good at matters that require an intuitive "feel" but bad at systematic analysis."

My feeling is that even if you use a neural network to compute some function you will still need and perhaps in many cases only need to use your head to work out how best to wire things up. This I think is the case for the simple robots a hobbyist may be working on which may not be any more complex than the current robot vacuum appliances.

Here's another great video on how neural networks work. In this case they are focusing on the higher dimensional spaces of the input data sets (exactly the same approach that I'm hoping to use).

I started with the simple 2D case. This is where the perceptron only has two inputs. But the 2D input space can be sliced up 16 different ways. And this is the dimensional analysis they are talking about in the above video.

When I moved to the 3-input perceptron that gave me a 3D input space that can be sliced up 256 different ways. I'm still working on how to graph those 256 slices. Those slices will be 2D planes. And the equations of those planes give us the correct synaptic weights that we need.

I'm currently getting my feet wet with a 4-input perceptron. This requires 4D space to visualize. And I'm currently working on learning 4D graphic techniques. This 4D space can then be sliced up 65536 ways. And those divisions will be done using 3D hyperplanes. It's going to be interesting to see how I'll be able to convert all this into a graphical output format. But from what I see it can apparently be done.
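For 4 inputs and beyond, where drawing gets hard, one non-graphical way to probe a particular output assignment is the classic perceptron learning rule (not the sigmoid training used earlier in the thread). It is known to settle on working weights in a finite number of updates whenever the data is linearly separable. The sketch below is only that, a sketch: the function name `perceptron_fits` and the cap of 1000 passes are assumptions, and running out of passes is suggestive rather than a proof that no separating hyperplane exists.

```python
from itertools import product

def perceptron_fits(targets, n_inputs, max_epochs=1000):
    """Run the perceptron learning rule; True if it reaches zero errors."""
    # Each row is a leading 1 (the bias input) followed by the binary inputs.
    rows = [(1, *bits) for bits in product((0, 1), repeat=n_inputs)]
    weights = [0] * (n_inputs + 1)
    for _ in range(max_epochs):
        mistakes = 0
        for row, target in zip(rows, targets):
            guess = 1 if sum(w * x for w, x in zip(weights, row)) > 0 else 0
            if guess != target:
                mistakes += 1
                step = target - guess                 # +1 or -1
                weights = [w + step * x for w, x in zip(weights, row)]
        if mistakes == 0:
            return True
    return False   # no fit within the cap: suggestive, not a proof

corners = list(product((0, 1), repeat=4))
and4 = [int(all(bits)) for bits in corners]       # 1 only when all four inputs are 1
parity4 = [sum(bits) % 2 for bits in corners]     # 4-input XOR

print(2 ** (2 ** 4))                  # 65536 possible output assignments
print(perceptron_fits(and4, 4))       # True
print(perceptron_fits(parity4, 4))    # False
```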

By the way, I'll apparently need to learn TensorFlow that they mentioned in the above video. Fortunately these people are more than happy to share and have made TensorFlow free and all their work open source.

So the tools are not only out there, but the people who are working with those tools are happy to share and are actually inviting us to use them.

So hyperspace here I come!

I'm only just now starting in on the 4D perceptron. Most of my time will most likely be spent learning how to graph in 4D and learning about TensorFlow. But once that's done I should be able to move on to the nth degree fairly easily.

The videos I hope to make are going to be focused almost entirely on perceptrons and their related input and solution spaces. 2D, 3D, 4D, and possibly more. I've already tackled the first two, and I'm currently working on 4D as we speak.

DroneBot Workshop Robotics Engineer

James

My only minuscule knowledge of neural networks, which is all of 3 hours old, comes from watching this video.

Yes I remember this video and found it easy to follow. If you want to hard code an optical character reader you would have to work out useful characterizers that the video suggests are carried out in the first layers of the network.

Let us take a very simple example of recognizing hand written numerals between 0 and 9 entered using the mouse. The simple method I used was to enclose the result in a box and reduce it to some standard size then note the spatial position within the box where the stroke began and ended. Much simpler than trying to train a neural network to do the same thing. Essentially it reduced the complex input into two vectors. Now if a neural network were to hit on the same method it would not be able to explain to you (or itself) what it did.
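The box-and-endpoints idea can be sketched roughly as below. This is only a guess at the shape of such a routine, not the original code: the function name `stroke_features`, the choice of a unit box as the "standard size", and the representation of a stroke as a list of (x, y) mouse positions are all assumptions.

```python
def stroke_features(points):
    """Scale a stroke into a unit box and return its (start, end) positions."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    min_x, min_y = min(xs), min(ys)
    width = (max(xs) - min_x) or 1     # avoid dividing by zero for a vertical line
    height = (max(ys) - min_y) or 1    # likewise for a horizontal line

    def normalise(point):
        return ((point[0] - min_x) / width, (point[1] - min_y) / height)

    return normalise(points[0]), normalise(points[-1])

# A made-up stroke: across to the right, then straight down (screen y grows downward).
stroke = [(10, 20), (30, 20), (30, 60)]
print(stroke_features(stroke))   # ((0.0, 0.0), (1.0, 1.0))
```

The two returned positions are the kind of small, hand-picked input that could then be matched against stored values for each numeral, or fed to a very small network instead of raw pixels.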

A neural network is a function. If it fails you may not ever know why. You just have to keep retraining it on the failed task until it gets it right. It is something you might turn to if you are unable to work out how to write the function yourself.

I suspect our intuitive conclusions work much the same way. Intuition is more of a fast parallel process using limited knowledge, whereas reaching a logical conclusion is a linear step-by-step process using complete knowledge.