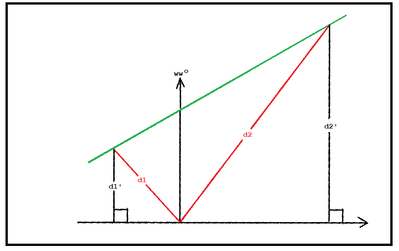

> In your drawing... where the lines come down and intersect the sensor's plane... those points don't stand out (to me at least) as having any significance and the distance to that plane doesn't seem to have as much value... don't people want to know how far things are away from the sensor... not some plane out in space???

It is just for display purposes.

It is polar vs. cartesian coordinates.

People want to see a straight wall.

If you plot the angle of each ray along the screen's horizontal axis and the length of the ray to the obstacle along the screen's vertical axis, you will get a curve.

If you use your polar coordinates to fill in a point cloud, then it is the display code that converts it to a screen display using the cartesian coordinates of the point cloud.

I may have it all wrong with regard to the sensor readings, and math is not my strong point either.

All I know is with ray casting I had to convert the polar coordinates to screen cartesian coordinates.
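A minimal Python sketch of the polar vs. cartesian point (my own illustration, not robotbuilder's ray-casting code). It assumes a flat wall 2 m straight ahead of the sensor and a handful of made-up ray angles. The raw ray lengths grow toward the edges, which is the curve described above; converting each (angle, range) pair to x, y puts every point at the same y, i.e. the same perpendicular distance from the sensor's plane, so the wall plots as a straight line.

```python
import math

def polar_to_cartesian(angle_deg: float, distance: float) -> tuple[float, float]:
    """Convert one ray (angle from the sensor's forward axis, length) to (x, y).

    x is the sideways offset; y is the perpendicular distance from the
    sensor's plane, which is what a 'straight wall' display plots.
    """
    a = math.radians(angle_deg)
    return distance * math.sin(a), distance * math.cos(a)

def ray_lengths_to_flat_wall(wall_distance: float, angles_deg: list[float]) -> list[float]:
    """Ray lengths a sensor would report for a wall parallel to its plane."""
    return [wall_distance / math.cos(math.radians(a)) for a in angles_deg]

angles = [-30, -15, 0, 15, 30]
ranges = ray_lengths_to_flat_wall(2.0, angles)

for a, r in zip(angles, ranges):
    x, y = polar_to_cartesian(a, r)
    # r varies with angle (the curve); y stays at 2.0 (the straight wall)
    print(f"angle {a:+3d} deg  ray {r:.3f} m  ->  x {x:+.3f} m, y {y:.3f} m")
```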

I see the TOF camera is getting cheaper and is also being used to replace the LIDAR in some of the latest robot vacuum cleaners. This one is for capturing 3D images in real time using the Raspberry Pi.

That's incredible... and kind of deflating after 8x8 resolution.

Looking over the site...

I'm not sure what the purpose of the KickStarter campaign is. They obviously have a working prototype, and it even looks production-packaged with APIs ready to go. It's also an established company. I thought KickStarter was for helping an inventor raise enough funds to get a concept into production.

Anyway... I see the "campaign" ends tomorrow morning (9/30) and it is fully funded. If you sign up in time, you supposedly get a discount (40% off retail) on the item and get it in November instead of waiting for it to fill supply lines. But... apparently they can keep your money if they choose not to go into production. I've never done this before, but there has to be a first time... I joined the campaign. Holding my breath till November. I see Inqling 3rd on the horizon.

Thanks for the heads-up!

VBR,

Inq

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

It's not clear to me from the video or the web pages... does it also do normal RGB imaging? I can't tell whether the images they show of the test conditions come from another camera or from the same imager. I would think the image resolution (240x180) would be a give-away, but I'm not sure.

> People want to see a straight wall.

I'm people! I want to see reality. 🤣

Hi @inq,

I think the images are 'real', except of course, the colour of each pixel is a function of distance.

The Arducam examples seem mostly short range ... say up to 400 mm ... perhaps that is the range over which it is more consistent?

Teledyne appear to have a 'similar-ish' product ... albeit probably in a different budget range. Have a look at the short videos near the bottom of page:

https://imaging.teledyne-e2v.com/products/applications/3d-imaging/time-of-flight/

Also, note they mention the problem when more than one ToF sensor is working in the same area: the light flash from one acts as a source of mis-timed photons for the other. It is not clear how they fix it ... I would guess you would sync the two (or more) sensors so they each have their own time slots.
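Dave's time-slot guess is essentially time-division sharing. A minimal Python sketch, assuming (hypothetically) that the sensors share a sync signal and a common frame period; the sensor names and the 33 ms period are invented, and this is not a claim about how Teledyne actually handles interference.

```python
def assign_time_slots(sensor_ids: list[str], frame_period_ms: float) -> dict[str, tuple[float, float]]:
    """Split one shared frame into equal, non-overlapping emit windows.

    The idea is that each sensor fires its flash (and counts returning
    photons) only inside its own window. Assumes a common clock/sync
    signal between sensors, which is hypothetical here.
    """
    slot_ms = frame_period_ms / len(sensor_ids)
    return {sid: (i * slot_ms, (i + 1) * slot_ms) for i, sid in enumerate(sensor_ids)}

slots = assign_time_slots(["front", "rear"], 33.0)
for sid, (start, end) in slots.items():
    print(f"{sid}: emit from {start:.1f} ms to {end:.1f} ms of each frame")
```

The trade-off is that with N sensors each one only gets 1/N of the frame, so either exposure time or frame rate has to give.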

The Teledyne looks to have a longer 'useful' range in their video ... and their evaluation 'pod' looks a bit fearsome ... I am speculating that their light source might be higher power. Teledyne specify two wattage ratings (72W and 64W), which sound high ... I don't recall seeing any such numbers for the ST, but I haven't looked. Depending upon the underlying technology, the peak light power could be quite high with a very low average power, if it consists of very brief flashes. It isn't clear whether the Teledyne light source powers are average or peak.
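The peak vs. average distinction is just a duty-cycle calculation. A minimal Python sketch; the 64 W figure is borrowed from the Teledyne rating purely as an example, and the pulse width and repetition period are made-up values, since it isn't known whether those ratings are peak or average.

```python
def average_power_w(peak_power_w: float, pulse_width_s: float, pulse_period_s: float) -> float:
    """Average power of a pulsed source = peak power x duty cycle."""
    duty_cycle = pulse_width_s / pulse_period_s
    return peak_power_w * duty_cycle

# Hypothetical figures, not from any datasheet: 64 W peak,
# 10 ns flashes repeated every 10 microseconds (a 0.1 % duty cycle).
print(f"{average_power_w(64.0, 10e-9, 10e-6):.3f} W average")
```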

Best wishes, Dave

I didn't realise it was a KickStarter campaign. I just hit on the video while looking for TOF examples. The idea that they can keep your money if they choose not to go into production doesn't seem right. Is it all fake? I thought it was a working component you could buy now.

It is my understanding that KickStarter is meant to help inventors bring something to market, or even to fund trying out an idea. The money is only refunded if the "goal" is not met; otherwise the inventor/company gets the money. Not all campaigns promise that you get a product for your "donation", but even when they do, as this one does, they still only have to deliver if it goes into production. If the product fails, or they decide it is not a sustainable product, they can pocket the donations and go their merry way. Even the KickStarter website says this... in more diplomatic ways.

I've never seen it used to confirm product acceptability like this from an established company, but we'll see...

@robotbuilder @Inq I have made a bunch of purchases on Kickstarter. Dennis, my most recent was a version 2 product; I already had version 1, so this was an established company, in fact the most successful in that category. Only 1 has been a dud. I can't say it was fake or a scam, but I doubt it will ever materialize now. They got caught in the chip shortages and faced a high degree of government regulation.

First computer 1959. Retired from my own computer company 2004.

Hardware - Expert in 1401, and 360, fairly knowledge in PC plus numerous MPU's and MCU's

Major Languages - Machine language, 360 Macro Assembler, Intel Assembler, PL/I and PL1, Pascal, Basic, C plus numerous job control and scripting languages.

Sure you can learn to be a programmer, it will take the same amount of time for me to learn to be a Doctor.

> I think the images are 'real', except of course, the colour of each pixel is a function of distance.
>
> The Arducam examples seem mostly short range .. say up to 400 mm ... perhaps that is the sort of range it is more consistent?

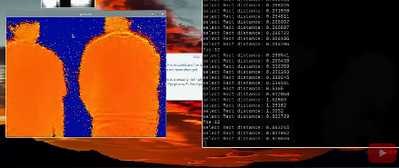

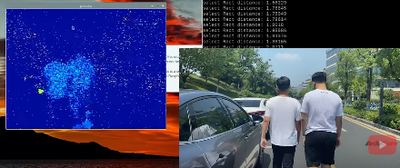

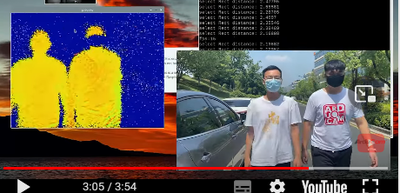

Available "Images" - What I was getting at... I was wondering/speculating whether the sensor is a full-spectrum sensor (even though the emitter is infrared, 940 nm). I was watching videos like this one... and wondering whether it could return the RGB of the visible light, like the lower-right window, simultaneously with the distance data in the upper-left, i.e. each pixel having R,G,B and X,Y,Z data. It would be way-cool to use it for both distance and visual identification. I mean... asking for a beer and getting a Coke can simply won't cut it! 😆

400 mm seems to be the sweet spot before you move to the large-lens gizmos that reach 12 meters, like this one:

https://www.amazon.com/SmartLam-TP-Solar-Proximity-Distance-Raspberry/dp/B09B6Y2GBJ

@inq Is it worth an email to Boston Dynamics to ask what they use?

Hi @inq,

I obviously have no connection to, or access to, the products, but unless the video link you posted //youtu.be/pu9bSo-b11A is 'faking' the results, the sensor is only looking for photons in the near-infrared part of the spectrum, matching the laser flash output.

This is the only way it can measure the distance, as it is similar in principle to radar ... send out a 'pulse/flash' of electromagnetic radiation and measure the time it takes for a reflection of that pulse to return.

Furthermore, it must reject ambient light which could easily 'dazzle' the receiver with untimed photons.
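The radar-like principle reduces to one formula: the light travels out and back, so the distance is half of the speed of light times the round-trip time. A minimal Python sketch (some ToF sensors time the pulse directly, others infer the delay from the phase of modulated light, but the distance relation is the same). The 400 mm example is just the short-range figure mentioned earlier in the thread.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # in vacuum; light in air is very slightly slower

def distance_from_round_trip_m(round_trip_s: float) -> float:
    """Light goes out and back, so halve the total path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

def round_trip_from_distance_s(distance_m: float) -> float:
    """Inverse: how long the reflection takes to come back from a target."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S

t = round_trip_from_distance_s(0.4)
print(f"400 mm target: reflection returns after {t * 1e9:.2f} ns")
print(f"1 mm of range = {round_trip_from_distance_s(0.001) * 1e12:.2f} ps of round-trip time")
```

The second line shows why the timing has to be so fine: millimetre-level resolution corresponds to picosecond-level differences in arrival time.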

Then the distance numbers for each pixel are being converted into colours by a look up table (or similar) ... probably in the R-Pi software.
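A minimal Python sketch of the kind of look-up table Dave describes. The 0 to 4000 mm range and the red-to-blue ramp are arbitrary choices for illustration; the actual Arducam/R-Pi software may use a different range and palette.

```python
MAX_MM = 4000.0  # hypothetical display range; the real software's range is unknown

def distance_to_rgb(distance_mm: float) -> tuple[int, int, int]:
    """Linear ramp: near = red, far = blue (an arbitrary choice for illustration)."""
    t = min(max(distance_mm / MAX_MM, 0.0), 1.0)  # clamp to 0..1
    return round(255 * (1 - t)), 0, round(255 * t)

# Precompute a 256-entry table so per-pixel colouring is just an index lookup.
LUT = [distance_to_rgb(i * MAX_MM / 255) for i in range(256)]

def colour_for(distance_mm: float) -> tuple[int, int, int]:
    """Colour a single depth reading via the table; out-of-range values are clamped."""
    t = min(max(distance_mm / MAX_MM, 0.0), 1.0)
    return LUT[int(t * 255)]

depth_row = [150.0, 900.0, 2500.0, 6000.0]  # made-up readings in mm
print([colour_for(d) for d in depth_row])
```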

Look at the part of the video with two guys walking away from, then back towards, the sensor, between about 2:50 and 3:06.

You will see they change colour according to the distance from the sensor, nothing to do with the colour of their shirt, although the black face mask is obviously not reflecting the Lidar flash.

----

The slightly earlier part of the video showing people walking from above, suggests the tallest person was the one with the reddest hair ... but it is more difficult to 'verify' the correlation between 'hair' colour and height.

---------------------------------------------------

Thus to tell the difference between a beer and a Coke, you need to have a second 'differentiation' methodology ... strategies to consider:

- Have a 'conventional' camera alongside the Lidar and match up the 'images'

- Add a sticker with a non-reflective pattern to the beer cans that can be recognised

- Buy small/short cans of Coke, tall/large cans of beer

- Beer on the top shelf, Coke on the bottom shelf

- Cancel the Coke deliveries

All of these options could be made to work ... choose the one with the most Pros and least Cons 😀 😀

---------

Interesting to note that the part of the video with the two guys suggested the image was reasonably sound up to (say?) 2 m ... then became 'flaky', suggesting many pixels did not register a photon from the flash ... and that was in a sunlit area, though not facing direct sunlight. Perhaps it is a little more 'robust' than the earlier model? (Not a 'fact', and may only be wishful thinking on my part.)

----------------

As for the LIDAR07 sensor on Amazon shown in your last post, I can only go by the table accompanying it.

It appears to be a relatively narrow beam, with a 'half angle' of 2 deg for the transmitter and 1 deg for the receiver. Perhaps the full data sheet, which I haven't found, would be more enlightening, but I am guessing 'half-angle' refers to the angle at which the light intensity falls to half of the intensity at the centre.

Anyway, it is obviously easier to produce a 'small' bright spot several metres away than to achieve a comparable illumination per unit area over a wide area, which the sensors with many pixels require.
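A rough Python sketch of this point, under simplifying assumptions of my own: each beam is treated as a cone whose edge is the quoted half-angle, lighting a uniform disc. The 2 deg half-angle and 12 m range come from the thread; the 35 deg half-angle standing in for a wide-field multi-pixel sensor is a hypothetical value.

```python
import math

def spot_radius_m(distance_m: float, half_angle_deg: float) -> float:
    """Radius of the disc lit by a cone of the given half-angle."""
    return distance_m * math.tan(math.radians(half_angle_deg))

def irradiance_advantage(narrow_half_deg: float, wide_half_deg: float) -> float:
    """For equal emitted power, how much more light per unit area the narrow
    beam delivers than the wide one (ratio of lit disc areas; the distance
    cancels out)."""
    narrow = math.tan(math.radians(narrow_half_deg)) ** 2
    wide = math.tan(math.radians(wide_half_deg)) ** 2
    return wide / narrow

print(f"2 deg beam at 12 m: spot radius {spot_radius_m(12.0, 2.0):.2f} m")
print(f"2 deg vs 35 deg: about {irradiance_advantage(2.0, 35.0):.0f}x the light per unit area")
```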

And of course, you will need a scanning system if you wish to build up some sort of image.
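A minimal Python sketch of such a scan, assuming a hypothetical pan/tilt mount that points the single-beam sensor in a grid of directions. Real hardware would supply the range for each direction; here a simulated flat wall 2 m ahead stands in for the sensor so the result can be checked.

```python
import math

def direction_to_xyz(pan_deg: float, tilt_deg: float, range_m: float) -> tuple[float, float, float]:
    """Spherical -> cartesian: x right, y forward, z up."""
    pan, tilt = math.radians(pan_deg), math.radians(tilt_deg)
    return (range_m * math.cos(tilt) * math.sin(pan),
            range_m * math.cos(tilt) * math.cos(pan),
            range_m * math.sin(tilt))

def simulated_wall_range(pan_deg: float, tilt_deg: float, wall_y_m: float = 2.0) -> float:
    """Stand-in for a real sensor reading: range to a flat wall at y = wall_y_m."""
    pan, tilt = math.radians(pan_deg), math.radians(tilt_deg)
    return wall_y_m / (math.cos(tilt) * math.cos(pan))

def scan(pan_angles, tilt_angles, read_range=simulated_wall_range):
    """Visit every pan/tilt combination, take one reading each, build a point cloud."""
    cloud = []
    for tilt in tilt_angles:
        for pan in pan_angles:
            cloud.append(direction_to_xyz(pan, tilt, read_range(pan, tilt)))
    return cloud

cloud = scan(range(-20, 21, 10), range(-10, 11, 10))
print(len(cloud), "points")  # 5 pan x 3 tilt = 15
```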

Best wishes, Dave

Hi @inq et al,

re:

> ... don't people want to know how far things are away from the sensor... not some plane out in space???
>
> It sounds like you have some experience where that is useful? To gaming... to camera? To the price of beans in China... anything?? Is it some optimization/simplification that allowed Doom to run on 8086 back in '85? Can you elaborate?
>
> It's really bothering me that I don't understand the usefulness of that.

I don't have an answer, but I do have a question... it might be a very stupid question, in which case I apologise ... or it might yield a 'positive' answer, which might be helpful for @Inq's question above!

I do not have a 'fancy' camera, so this question is aimed at anyone with knowledge/experience of taking 'precision' photos with fancy lenses, high resolution sensor/film, etc, at their disposal.

---------

Imagine you are outside, at approximately ground level, on a surveillance job, perpendicularly facing one side of a large cuboid building like a hotel or office, with many windows on the wall of the building you are looking at.

- Your 'challenge' is to get a photo of someone who might appear for just a moment at any of the windows.

- Your camera is extremely high resolution, so a single, well-focussed shot of the whole side of the building, will yield the required result, providing ALL of the building image is in sharp focus.

- You are using a "normal" angle lens. This implies the field of view angle is modest, say 20-40 degrees, and you are sufficiently distant from the building to take the shot.

- The lighting is poor, so your camera will be operating with a wide aperture. This implies your depth of focus is comparatively small ... accurate focus control is essential.

- The camera is fixed on a tripod, etc., and its position is preset to encompass the entire wall of the building

- There will not be time to adjust (automatically or manually) any aspect of the camera when the person appears ... it must be a simple 'one shot', with aim, focus, etc. all preset.

Is it plausible to choose a single focussing distance that will give a sharp focus?

If my requirement is a little too difficult, but a modest reduction (smaller building?) would make it realisable, feel free to describe.

What would the focus distance be?

-------------

The 'important' part of the question is to compare the effective 'depth of focus' perpendicular to the camera with the 'depth of focus' along the hypotenuse, i.e. along a line not perpendicular to the camera.

Are they different?

Best wishes all. Dave

@davee As a photographer I can say the simple answer is YES. As you seem to be hinting, there is a zone of in-focus distance that varies with the aperture and the distance from the subject. The good news is that the greater distance from the subject needed here reduces the impact of the aperture.

I just pulled up a hyperfocal calculator and set it to 100 ft from the subject, a 50 mm lens on an APS-C sensor, and an aperture of f/5.6: the in-focus zone runs from 43.27 ft to infinity. Doing it again for an aperture of f/2.8 yields 60.41 ft to 290.23 ft in focus.
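Figures like these can be roughly reproduced with the standard hyperfocal-distance formulas. A minimal Python sketch; the circle of confusion (0.019 mm here) is an assumption, a commonly quoted value for APS-C, and calculators differ on it, so this gives about 43.6 ft to infinity at f/5.6 and about 60.7 ft to 284 ft at f/2.8 rather than exactly Ron's numbers. In these formulas the subject distance is measured along the lens axis.

```python
import math

MM_PER_FT = 304.8

def depth_of_field_ft(subject_ft: float, focal_mm: float, f_number: float,
                      coc_mm: float = 0.019) -> tuple[float, float]:
    """Near and far limits of acceptable focus, in feet.

    Standard thin-lens approximations:
      H    = f^2 / (N * c) + f            (hyperfocal distance)
      near = s (H - f) / (H + s - 2f)
      far  = s (H - f) / (H - s), or infinity once s >= H
    coc_mm (circle of confusion) is an assumption; ~0.019 mm is common for APS-C.
    """
    s = subject_ft * MM_PER_FT
    f = focal_mm
    hyperfocal = f * f / (f_number * coc_mm) + f
    near = s * (hyperfocal - f) / (hyperfocal + s - 2 * f)
    far = math.inf if s >= hyperfocal else s * (hyperfocal - f) / (hyperfocal - s)
    return near / MM_PER_FT, far / MM_PER_FT

for n in (5.6, 2.8):
    near_ft, far_ft = depth_of_field_ft(100, 50, n)
    print(f"f/{n}: sharp from {near_ft:.1f} ft to {far_ft:.1f} ft")
```

Changing subject_ft to a shorter distance shows the in-focus zone shrinking quickly.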

Hi Ron @zander,

Thanks for your contribution.

I should like to explore a little further please.

The camera stays in the same place, angle, etc.

A number of people line up in front of the building, parallel to the building.

The person directly in front of the camera is (say) 15 feet away. The width of the line of people subtends about the same angle as the building.

Can a single (new) focus setting capture all of these people in sharp focus?

----------

And then repeat when the line moves to be just 5 feet away. (Obviously the line will need to be shorter.)

Thanks.

Best wishes, Dave