@byron wrote

It seems to me that using rotary encoders to calculate the position of a robot along the path it is currently navigating will only work for short distances. They are great for correcting motor bias over a short run and for calculating distance travelled, but only if the surface is smooth and consistent. As I have posted in the past, I find it's no good for outdoor grass field robots, and also not very accurate for indoor work when traversing the usual mixture of tile, vinyl, carpet, rugs etc. found inside. Just across the kitchen floor it may be OK.

To get the robot to move along a desired route, an accurate positioning system (and I'm thinking of centimetre accuracy) is the way I seek to go. For outdoor bots we have GPS RTK, which uses both a bot-based GPS receiver and a GPS base station to achieve this level of accuracy. For indoor positioning there are beacon solutions such as the one at this link:

https://marvelmind.com/product/starter-set-hw-v4-9-nia/

Whilst I can contemplate the cost of outdoor GPS RTK, such as that offered by SparkFun, for my outdoor bot (when I get round to building it), the cost of these indoor positioning systems is far more than I want to spend, especially as I'm only looking at an indoor bot to practice with.

So I have been looking to see if there are any reasonable cost solutions for an indoor positioning system. One that may be promising is a camera based solution to determine the bot's position, much as a camera can be used for face recognition. I have been looking at OpenCV, where it seems relatively easy to pick out particular shapes or colour regions from the camera input. My idea is to put a coloured disk on the bot, orange as I have a nice bright orange filament for my 3D printer and not much else of that colour in the room I have designated for my experiments. It just so happens that my conservatory is being cleared for renovation and decoration, and when it's nice and clear I will mount a camera on the ceiling facing down and use its field of view as my experimental positioning system. I hope to be able to use OpenCV to pick out the orange disk on the bot and calculate its screen coordinates. I will then input a target set of screen coordinates as the destination point and navigate the bot to this destination via constant feedback of the bot's current position. OpenCV is free and I already have some spare RPis and RPi cameras, so this is a nice cheapo solution for me.
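To make the "find the orange disk and get its screen coordinates" step concrete, here is a minimal sketch in plain Python. It is not OpenCV code; it only illustrates the idea of thresholding on colour and taking the centroid of the matching pixels, which is roughly what a colour mask plus a centroid calculation would give you. The function name, the image format (a list of rows of RGB tuples) and the hue range used for "orange" are all assumptions made for the illustration and would need tuning against a real camera.

```python
import colorsys

def find_blob_centroid(image, hue_range=(0.03, 0.12), min_sat=0.5, min_val=0.5):
    """Return the (x, y) centroid of pixels inside the colour range, or None.

    image is a list of rows, each row a list of (r, g, b) tuples in 0..255.
    hue_range is in fractions of a full turn; the default is a guess at
    'orange' (an assumption, not a calibrated value).
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            if hue_range[0] <= h <= hue_range[1] and s >= min_sat and v >= min_val:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # no pixel matched, so the disk is not in view
    return (sum_x / count, sum_y / count)

# Synthetic 10x10 grey frame with a 3x3 orange patch centred on (5, 3).
frame = [[(128, 128, 128) for _ in range(10)] for _ in range(10)]
for yy in range(2, 5):
    for xx in range(4, 7):
        frame[yy][xx] = (255, 128, 0)
centroid = find_blob_centroid(frame)
```

Working in hue rather than raw RGB is the usual reason colour tracking copes with modest changes in room lighting, though a real setup would still need the thresholds adjusted by experiment.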

Whilst some initial playing with OpenCV and some 'blobs' waved about in front of a webcam on my Mac was encouraging, there is a long way to go to see if this approach is going to work, and my experiment room won't be ready for some time as I await tradesmen to do some renovation work when the pandemic shutdown is all over and normality resumes.

There are other ways of getting the position of the bot and finding its direction of travel, like these LIDARs, or sticking barcodes (or something) about the place for a robot based camera to read, and I may well have a go at some of these ideas too.

In the meanwhile if there are any other ideas around indoor positioning systems for hobby outlay then I would be interested to hear. It would also be interesting to hear how others have tackled or plan to tackle their indoor bot navigation conundrum.

Using wheel encoders isn't the most robust method to navigate a robot base. You still need some method to reset the robot base to some known location because over time even the best dead reckoning from encoders will become less and less accurate. It only takes a small change in direction to become a large change in where the robot ends up. A method that was used by one robot was to reset itself by pressing against flat walls! The first robotic vacuums relied on wall contact to supplement encoder data. Now they can use LIDAR or vision to map out the house and locate themselves within it.
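As a rough illustration of how a small change in direction becomes a large change in where the robot ends up, here is a sketch assuming the robot drives in a straight line with a constant, uncorrected heading error. The function name and the 2 degree figure are made up for the example.

```python
import math

def lateral_drift(distance_m, heading_error_deg):
    """Sideways offset from the intended line after driving distance_m
    with a constant heading error (a simplifying assumption)."""
    return distance_m * math.sin(math.radians(heading_error_deg))

# Sideways error for a 2 degree heading error over increasing distances.
for d in (1, 5, 10):
    print(f"{d:>2} m travelled: {lateral_drift(d, 2.0) * 100:.1f} cm off line")
```

In practice the heading error itself also grows as encoder errors accumulate, so the real drift tends to be worse than this constant-error picture suggests.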

Farm robots use GPS to locate and vision to interact with the crop such as spraying individual weeds.

My laptop based robot will be using vision so hopefully I will be able to demonstrate some success in that area and show how it is done.

so hopefully I will be able to demonstrate some success in that area and show how it is done.

Well here's to your success 🍺. or maybe 🍷 😀. It will be great to read about your progress.

byron wrote: So I have been looking to see if there are any reasonable cost solutions for an indoor positioning system. One that may be promising is a camera based solution to determine the bot's position, much as a camera can be used for face recognition. I have been looking at OpenCV, where it seems relatively easy to pick out particular shapes or colour regions from the camera input. My idea is to put a coloured disk on the bot, orange as I have a nice bright orange filament for my 3D printer and not much else of that colour in the room I have designated for my experiments.

Or you can put the camera on the robot looking up at a colour spot on the ceiling? Otherwise you would need a camera for every room. You could replace the colour spot with an inconspicuous LED which will show up brightly. A flashing IR LED would work to avoid the software confusing it with any other bright spot in the image. Vacuum robots use IR LEDs to find their charging station or to act as virtual boundaries.

Looking up could have an issue with room lights being turned on or off, so perhaps beacons along the top of the wall would be better. Navigate as boats used to do with lighthouses before the advent of GPS.

@robotbuilder - That's a very interesting post.

Initially my main use for an indoor positioning system is to sort of mimic a GPS RTK system. The camera and OpenCV approach, where Lon,Lat coordinates are replaced with screen x,y coordinates to calculate a bearing with feedback corrections as the bot proceeds along its route, is to get the algorithms sorted, albeit with different tuning parameters required when using GPS on an outdoor bot. The fixed camera is standing in for the satellites. I'll have to see how successful this idea turns out to be.
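A minimal sketch of the bearing-with-feedback idea, assuming the top of the screen is treated as North and pixel coordinates have y increasing downwards (as camera images normally do). The function names are hypothetical, and the robot's current heading is assumed to come from somewhere else, such as a compass or markers on the bot.

```python
import math

def screen_bearing(current, target):
    """Bearing in degrees from current to target pixel.

    0 = towards the top of the screen ("North"), increasing clockwise.
    """
    dx = target[0] - current[0]
    dy = current[1] - target[1]  # flip: screen y grows downwards
    return math.degrees(math.atan2(dx, dy)) % 360

def heading_correction(heading_deg, bearing_deg):
    """Signed turn needed, in the range -180..180 degrees.

    Positive means turn clockwise, negative means turn anticlockwise.
    """
    return (bearing_deg - heading_deg + 180) % 360 - 180

# Bot at (100, 100) on screen, target directly to the right of it.
bearing = screen_bearing((100, 100), (150, 100))
turn = heading_correction(350, 10)
```

The feedback loop would then repeat this on every frame: find the bot, recompute the bearing to the target, and steer by the correction. The same two functions should carry over to GPS if Lon,Lat are first converted to local metres, since only the coordinate source changes.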

But I think the use of a robot based camera and LEDs is a great idea and may be a better approach. The LED 'beacons' could be put into a small box with a WiFi microprocessor such as an ESP32 for ease of controlling and modifying the LEDs, and powered with a battery. They could then be easily located about the place and controlled from a main computer. This could be expanded much more easily to a multi room navigation system if desired. As the bot moves from room to room it could send a signal that the appropriate LEDs should activate, to save them being on all the time. Maybe LEDs flashing at different rates could be used as triangulation beacons. Strategically positioned barcodes could also be used, and I have a Pixy2 camera sitting in a box somewhere that, if I remember correctly, has some demos of this barcode recognition.

I'm starting to champ at the bit to get going with playing with this, but I will have to hold the horses for a few more months. 🤨

Initially my main use for an indoors positioning system is to sort of mimic a GPS RTK system.

Sure, that should work, and I see it is used in some robot soccer games to locate and identify players.

Using more than one colour dot will also give the direction in which the robot is pointing.
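A small sketch of how two colour dots could give both position and pointing direction, assuming one dot marks the front of the robot and the other the back, and that their pixel centroids have already been found by the vision code. The function name and the "top of screen is 0 degrees" convention are assumptions for the example.

```python
import math

def robot_pose(front, back):
    """Return ((cx, cy), heading_deg) from front and back marker centroids.

    Heading is 0 when the robot points to the top of the image and
    increases clockwise; image y is assumed to grow downwards.
    """
    centre = ((front[0] + back[0]) / 2, (front[1] + back[1]) / 2)
    dx = front[0] - back[0]
    dy = back[1] - front[1]  # flip: image y grows downwards
    heading = math.degrees(math.atan2(dx, dy)) % 360
    return centre, heading

# Front dot 20 pixels to the right of the back dot: robot points "East".
centre, heading = robot_pose(front=(120, 100), back=(100, 100))
```

The further apart the two dots are, the less a one pixel error in either centroid affects the computed heading.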

Having a camera on board means there is no requirement for wireless communication between the camera and the robot. However a separate camera can mean the "brains" can be separate from the robot (on a pc for example) which sends control signals to the robot.

Outside farm robots all seem to use GPS to position themselves as they roam up and down the fields. Cameras are used for things like detecting isolated weeds to give them a quick spray before they get too big or reproduce more weeds and thus saving a lot of money in weedicide. You could also hit them with super hot water to cook them. Why hot steam is not used instead of weedicide I don't understand. Maybe the weedicide kills the roots and steam doesn't. I wipe out my weeds coming up between the pavers with hot water from my kettle. They are also using cameras in experimental fruit picking. Personally I think having the robot navigate up rows and so on based on vision alone the way we do would be a more interesting problem to solve.

I'm starting to champ at the bit to get going with playing with this, but I will have to hold the horses for a few more months

You could spend any spare time getting up to speed with OpenCV and playing with the pixy2 camera. I assume you will be using Python to write code?

I assume you will be using Python to write code?

I started playing with robots about the time Bill of DroneBot Workshop kicked off his robot series, and my robots have suffered a similar pause in progress, though not because of Bill's stalled project. My preliminary coding with GPS, encoders and distance sensors, as well as the robot motor control, was all done in C and I may continue to develop my bots in C. However, in the meanwhile I have been using Python for all my home control stuff like weather sensors, heating control, workshop and greenhouse monitoring etc., where I make a lot of use of MQTT comms, database recording and GUI programming in tkinter and latterly PyQt5.

I started playing with MicroPython when I found microprocessors like the ESP8266 could use it and also I got a couple of TinyPico ESP32 and Wemos boards where it came pre-loaded.

Whilst I find I prefer to code in Python, I was thinking that C or C++ would be required for the robot programming, mainly due to the speed of code execution. As I have played with MicroPython and have not come up against any lack of speed issues, I may well do a lot of Python programming for my bots, which will consist of both on-bot and central controller processors. But I do have some Teensy 4 boards that will only work with C++ (they do have a CircuitPython ability, but this is lacking compared to MicroPython). These very fast Teensy boards will probably be the main boards I use in my bots, though by the time I get round to getting stuck in again some new board may be my pick. 🤔

Posted by: @robotbuilder

You could spend any spare time getting up to speed with OpenCV and playing with the pixy2 camera.

Spare time?! I'm retired and still hoping to find this spare time thing.

But I've been playing around with OpenCV in Python to the stage where I can do some blob recognition and put a border round the blob on the screen. Also, from the blob position, I can calculate a pretend bearing to another screen coordinate by considering the top of the screen to be North. I've found OpenCV to be rather fun to use and surprisingly easy, but of course one is using a lot of inbuilt algorithms clever folk have put into the package. I was thinking of an arrow shaped blob to find the direction the bot is currently pointing, but your post now prompts me to think of using different colour blobs to indicate the front and back of the bot.

Outside farm robots all seem to use GPS to position themselves as they roam up and down the fields

That's how I intend my bots to work, and with centimetre accuracy as provided by GPS RTK (plain old GPS only being good to a metre or two). One potential project is for a friend who does a lot of metal detecting. He trudges the fields waving his detector to and fro with only the odd beep to cause him to pause and find some old useless bit of scrap metal. The idea is to set the bot off on a search pattern with a metal detector and record the GPS coordinates of any beep. Then he can just go to the beep coordinates to discover some more useless scrap metal without having to tramp over a whole field. To be fair, he did once find a valuable coin worth a few hundred pounds, so if my bot finds gold I'll be wanting my fair share. 😎
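One way the search pattern could be generated is as a simple back-and-forth "lawnmower" sweep. This is only a sketch under assumptions: a rectangular field, coordinates in local metres from one corner (they would still need converting to and from GPS coordinates), and a made-up function name and lane spacing.

```python
def lawnmower_waypoints(width_m, length_m, lane_spacing_m):
    """Waypoints for a back-and-forth sweep of a width x length rectangle.

    Lanes run along the length (y direction) and are stepped across the
    width (x direction) by lane_spacing_m.
    """
    lanes = int(width_m / lane_spacing_m + 1e-9) + 1
    waypoints = []
    for i in range(lanes):
        x = i * lane_spacing_m
        if i % 2 == 0:
            waypoints += [(x, 0.0), (x, length_m)]  # drive up the lane
        else:
            waypoints += [(x, length_m), (x, 0.0)]  # drive back down
    return waypoints

# A 2 m wide, 10 m long strip swept with lanes 1 m apart.
route = lawnmower_waypoints(2.0, 10.0, 1.0)
```

The lane spacing would be chosen to suit the width the detector coil actually covers, and each beep would be logged against the bot's position at that moment.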

I've found openCV to be rather fun to use and surprisingly easy, but of course one is using a lot of inbuilt algorithms clever folk have put into the package.

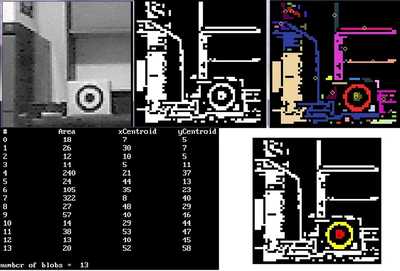

I began playing with vision before openCV existed so I had to write my own blob and color detection software.

An example I have posted before is of a target. The image is binarized with a local threshold algorithm. Each blob is found and listed along with various measurements. So in this case we are looking for a blob with a smaller blob sharing the same or similar centroids. As you can see the software has found the target and colour coded the blobs with red and yellow. It can find more than one target in the same image if they exist.

The size of the blob gives a measure of distance which increases its resolution as the robot gets closer.
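The relationship between blob size and distance can be sketched with the simple pinhole camera model, where apparent width in pixels is inversely proportional to distance. This is an illustration rather than the method used in the software described above; the function names and the calibration numbers are assumptions.

```python
def focal_length_px(known_distance_m, real_width_m, measured_width_px):
    """Calibrate once: view a target of known width at a known distance."""
    return measured_width_px * known_distance_m / real_width_m

def distance_from_width(focal_px, real_width_m, measured_width_px):
    """Estimate distance to the target from its apparent width in pixels."""
    return focal_px * real_width_m / measured_width_px

# Assumed calibration: a 0.10 m wide target appears 200 px wide at 0.5 m.
f_px = focal_length_px(0.5, 0.10, 200)
far = distance_from_width(f_px, 0.10, 100)   # target now appears 100 px wide
# Effect of a one pixel measurement change when the target is near vs far.
step_near = distance_from_width(f_px, 0.10, 200) - distance_from_width(f_px, 0.10, 201)
step_far = distance_from_width(f_px, 0.10, 50) - distance_from_width(f_px, 0.10, 51)
```

Because distance goes as one over the pixel width, a one pixel change corresponds to a much smaller distance step when the target is close, which is why the resolution improves as the robot approaches.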

For real time frame grabbing I used webcams and this software,

https://sol.gfxile.net/escapi/index.html

It is Windows only. If I was using Linux I would have to learn how to grab images (which are simply 2d arrays of numbers) using something like OpenCV. The actual image processing algorithms are independent of the OS and hardware. Python is probably too slow for image processing but as you mentioned it comes with image processing libraries, probably written in C, for your use.

That's a nice example of the use of vision detection. Did it make it into a navigation system for a real bot, or have you, like me, got lots of workable snippets that are yet to be gathered into a complete working robot navigation system?

A lot of Python libraries are written in C or C++ and MicroPython is entirely written in C. For sure OpenCV is written in C++. I'm thinking that one may just as well program everything in Python / MicroPython if the microprocessor has a suitable port, and call C routines if the code is not running fast enough in places. And then there's the thought of having multiple microprocessors like the RPi Pico, which cost only a few $, to do specific tasks, and there's an almighty amount of processing power we can put into our bots. And, as we have all probably found, a goodly amount of effort is needed to produce good code that gets an autonomous bot trundling about without doing any damage. Python is getting to be a persuasive platform even for robot control, albeit calling C code if required. Are you doing all your coding in C / C++ or do you also bring other languages into the equation?

That's a nice example of the use of vision detection. Did it make it into a navigation system for a real bot, or have you, like me, got lots of workable snippets that are yet to be gathered into a complete working robot navigation system?

Mostly workable snippets. I go for months (actually over a year now) without doing anything much on the project.

I did start on a complete working robot including some visually guided navigation experiments, the one you see as my avatar, unfortunately one of the motors failed and I wasn't able to find a suitable replacement.

https://forum.dronebotworkshop.com/user-robot-projects/k8055-robot/#post-4101

And then there's the thought of having multiple microprocessors like the RPi Pico, which cost only a few $, to do specific tasks, and there's an almighty amount of processing power we can put into our bots.

For me, I don't really want to deal with and program lots of different hardware pieces. Ideally you have a computer with I/O ports to sensors and motors, end of story, for reasons I have outlined elsewhere.

Are you doing all your coding in C / C++ or do you also bring other languages into the equation?

For the last 11 years I have been using FreeBASIC although I used C/C++ before that on the simpler machines.

Ultimately it comes down to the learning curve which, as I age, becomes much steeper and thus it is easier to stick to what I know rather than learn a new complicated way of doing what I already had done on simpler machines using simpler languages.

https://forum.dronebotworkshop.com/introductions/hi-2/

Still struggling along. This is my latest modification to my robot vacuum base. It looks like I will have started building lots of robot bases without ever completing a real robot.

I have been playing around using the ultrasonic sensors to avoid obstacles.

For many years my real hope has been to give a robot base "eyes" to navigate around, recognize where it is as well as maybe one day giving it an arm to pick up objects. Probably will never happen but now and then every so many months or years I get enthused enough to give it another try!!

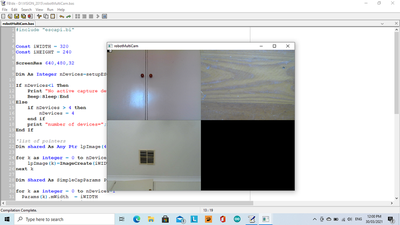

This is a screenshot of 3 cameras on the robot (one is the laptop camera) providing image data. I have experimented with writing software to use images to control a robot. The top/left is the laptop camera. The top/right is a camera pointing to the floor (one way of navigating) and the bottom/left is looking up, where it can see the ceiling, corner cornice and an air conditioning vent. Using natural features found in a house, the robot base could in theory orientate itself and determine where it was at any given time. The bottom/right is where an image from a fourth camera would be displayed.

Still struggling along

Building an autonomous robot is certainly a challenging task and your bot is taking shape nicely.

On a flat plane, given 3 bearings to 3 known coordinate positions then I could calculate the position (the coordinates) of my robot. (well I mean find and crib an algorithm to do the calculations 😎 )
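For what it's worth, here is a sketch of one way the 3 bearings calculation could be done. It assumes the bearings are absolute, compass style bearings from the robot to each known landmark (clockwise from "North", taken as the +y axis), which is an assumption on my part. If only the angles between landmarks are known, the problem is a different one (resection) and this code does not cover it. Each bearing defines a line through its landmark, and the position is the least squares intersection of those lines. The function name is made up.

```python
import math

def position_from_bearings(landmarks, bearings_deg):
    """Least-squares robot position from bearings to known landmarks.

    landmarks: list of (x, y) positions.
    bearings_deg: bearing from the robot to each landmark, in degrees
    clockwise from the +y axis (assumed absolute, e.g. from a compass).
    """
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (lx, ly), brg in zip(landmarks, bearings_deg):
        t = math.radians(brg)
        # Line of sight direction is (sin t, cos t); this is its normal.
        nx, ny = math.cos(t), -math.sin(t)
        c = nx * lx + ny * ly  # the robot must satisfy nx*x + ny*y = c
        a11 += nx * nx
        a12 += nx * ny
        a22 += ny * ny
        b1 += nx * c
        b2 += ny * c
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("bearings are parallel; position is not determined")
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)

# Simulate a robot at (2, 1) sighting three landmarks, then recover it.
true_pos = (2.0, 1.0)
marks = [(0.0, 5.0), (5.0, 5.0), (5.0, 0.0)]
brgs = [math.degrees(math.atan2(mx - true_pos[0], my - true_pos[1])) for mx, my in marks]
estimate = position_from_bearings(marks, brgs)
```

Two bearings are enough in principle; the third gives the least squares fit something to average over when the measurements are noisy.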

The calculations for finding the position of a robot based on the position of objects recognised in a camera's field of view, and then integrating this with other cameras all finding different objects and all pointing at different angles, are horribly mind blowing for me. I would struggle for sure to tackle this sort of thing, and Trigonometry for Dummies won't help me with this advanced stuff. 🤨

I daresay the calculations could be achieved. If I was attempting this I would consider dedicating a fast processor like a Teensy 4 to continually perform the complicated processing and have it just spit out the current calculated coordinates of the robot on request from a controlling program on another processor.

I like your ideas, but perhaps pointing the cameras all at the same angle and having them all recognise a similar feature, like coloured blobs you fix about the place, would make life considerably easier than recognising your home's current natural features. Maybe the coloured blobs could just be a few small unobtrusive LED lights that are only switched on for robot navigation purposes, though perhaps also automatically switched on with battery power if you get a power outage, so you can sell them to the wife as a desirable safety feature. 😎

The idea of a camera pointing up is that it can use the 2d view of the ceiling to navigate and orientate using visual features. On the ceiling there are no "angles to calculate". Let us say on both sides of each door entrance you have a target over the spot at which to rotate to move through a door. The motors run to move the target to the centre of the image and rotate the robot (and thus the image) to point in the right direction.

The same idea with a camera pointing down to the floor. Take an image, move the robot, take another image and use the two images to determine how far the robot has moved and rotated. This can be with pixel accuracy if two points can be found to match.
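The two image idea can be sketched as follows, assuming two feature points have already been matched between the "before" and "after" images (the matching itself is the hard part and is not shown). The function name is hypothetical. Note that the values returned describe how the floor features moved in the image; the robot's own motion is the reverse of that.

```python
import math

def motion_from_two_points(p1_before, p2_before, p1_after, p2_after):
    """Rotation (degrees) and shift (pixels) of two matched image points.

    Rotation is the change in angle of the line joining the two points;
    the shift is the movement of their midpoint.
    """
    ang_before = math.atan2(p2_before[1] - p1_before[1], p2_before[0] - p1_before[0])
    ang_after = math.atan2(p2_after[1] - p1_after[1], p2_after[0] - p1_after[0])
    # Wrap the difference so that it stays within -180..180 degrees.
    rot = (ang_after - ang_before + math.pi) % (2 * math.pi) - math.pi
    mid_before = ((p1_before[0] + p2_before[0]) / 2, (p1_before[1] + p2_before[1]) / 2)
    mid_after = ((p1_after[0] + p2_after[0]) / 2, (p1_after[1] + p2_after[1]) / 2)
    shift = (mid_after[0] - mid_before[0], mid_after[1] - mid_before[1])
    return math.degrees(rot), shift

# Two points that rotate by a quarter turn and move 10 pixels in y.
rotation, shift = motion_from_two_points((0, 0), (10, 0), (5, 5), (5, 15))
```

Converting the pixel shift into real distance needs the floor scale (pixels per centimetre at the camera's mounting height), which would be measured once for the fixed camera.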

As for complicated processing needing a dedicated fast processor this is not the case. A PC and maybe a RPi is fast enough if the code is written in C++. The target example above can be tracked in real time (limited by the rate at which frames are grabbed not limited by the software speed). The processing is not complex. You just binarize the image and turn it into blobs.

This guy covers the basic coding for image processing using the Processing language.

I was going to work my way through the examples in order to get up to speed with the Processing language and OpenCV but for the last year have been too busy with other things as well as lacking the motivation to get into it in my spare time.

I now see what you are up to: not trying to triangulate, more heading for the marker on the ceiling and calculating speed and distance travelled from the motion calculation of the floor camera. From the robot's starting position, working out the distance to travel to reach the target will be a nice challenge and will involve calculating angles I think, but maybe you are not going to calculate this anyway.

It would be good to follow your progress, but I'm not sure from your posts if your motivation is back. I have the motivation but, like you, no time for a while yet to get properly stuck in to playing with robots. I have made a start, as I've cleared a bit of my office room in order to put in a dedicated electronics desk and have now got some drawers to organise some of the many components I have sitting in various boxes, but it's going to be a mighty big task.

Thanks for the video link on Processing, it's the sort of thing I have been doing with OpenCV using Python with NumPy arrays. Of course OpenCV is all written in C++, so there's probably not too much overhead in using Python to call the routines, but I will find out when I finally get to that point. So I will continue with Python and OpenCV in preference to the Processing language, but hopefully you will take the high road while I take the low road and you'll be 'processing' before me. 😎 May your robot mojo be fully restored.

No need to calculate speed or distance travelled. Just a case of "are we there yet"? "Look there is a light, head for it." Not so much speed or distance just a case of using the data to determine if we are going in the right direction for the next goal point. If the robot is controlled by features in the environment it doesn't need exact position computations as used with odometry navigation.

Worked on the new robot base yesterday, had a few hardware issues with one of the optical encoders not working. Plan to work on it again today. Usually when I do something I do it very quickly and then nothing for months or years!! However having a forum to show off any progress is motivation 🙂

Hope you will share your Python and OpenCV code?

Plan to work on it again today

😀 I see you are getting back into all this bot malarkey again. Good news, and I hope you are re-enthused to keep at it. I'm sure we all look forward to your progress through all its trials and tribulations.

Hope you will share your Python and OpenCV code?

Yes, initially I can share some early testing code I played with. I will need to put better comments in the code plus a small explanation or it won't make much sense. I'm just finishing off a bit of woodwork that will be ready for painting in a couple of days. Once the first coat of paint is drying I will be at a suitable pause to dig out the code, do the necessary, and post it on the forum.