I just remember reading it somewhere. I did a quick Google search, and this was the first result to pop up.

https://www.mathworks.com/discovery/deep-learning.html

"Deep learning requires substantial computing power. High-performance GPUs have a parallel architecture that is efficient for deep learning. When combined with clusters or cloud computing, this enables development teams to reduce training time for a deep learning network from weeks to hours or less."

"Usually, a Deep Learning algorithm takes a long time to train due to large number of parameters. Popular ResNet algorithm takes about two weeks to train completely from scratch. Where as, traditional Machine Learning algorithms take few seconds to few hours to train."

And so on ...

@robotbuilder Maybe I am misunderstanding, but the intense number crunching is done by a third party. By using the appropriate tools and libraries, you get the benefit of their many hours of data processing. The bottom line is that I think not only a PC but even a medium-power MPU or MCU can do the job.

First computer 1959. Retired from my own computer company 2004.

Hardware - Expert in 1401 and 360, fairly knowledgeable in PC, plus numerous MPUs and MCUs

Major Languages - Machine language, 360 Macro Assembler, Intel Assembler, PL/I and PL1, Pascal, Basic, C plus numerous job control and scripting languages.

Sure, you can learn to be a programmer; it will take the same amount of time as it would for me to learn to be a doctor.

I assume the programs you have tried out involve using libraries to create a network.

Maybe you can help me compare where my thinking is versus your knowledge.

Remember, my first time even thinking about this ANN stuff was inspired by @thrandell's thread. This is the sum total of my research so far.

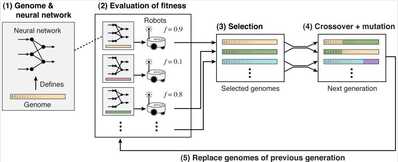

- I've read through his OP reference several times. This has the little two-wheel robot learning a maze (ACAA): https://www.research-collection.ethz.ch/bitstream/handle/20.500.11850/82611/1/eth-8405-01.pdf

- The book he referenced is on its way: D. E. Goldberg (1989). Genetic Algorithms in Search, Optimization, and Machine Learning. Addison-Wesley, Reading, MA.

- I've gone through this Neural Networks series at least once. It charts the path to deciphering handwritten digits. The gradient descent discussion was very enlightening, since it was very similar to a couple of projects I did with the Nelder-Mead algorithm.

- This was a tremendous find! I get so tired of sifting through blogs, papers and videos where we have to hear the entire history from the abacus forward. This kid builds the ANN library from scratch, with the forward and backward learning, in 9 minutes!!! Incredible!
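Since gradient descent came up, here is a minimal Python sketch of plain gradient descent on a made-up, bowl-shaped two-variable function (the function, starting point, learning rate and step count are all arbitrary assumptions for illustration). The contrast with Nelder-Mead is that Nelder-Mead only compares function values at the corners of a simplex, while gradient descent uses the derivative to step directly downhill.

```python
def f(x, y):
    """Hypothetical cost function with its minimum at (3, -1)."""
    return (x - 3.0) ** 2 + 2.0 * (y + 1.0) ** 2


def grad_f(x, y):
    """Analytic partial derivatives of f with respect to x and y."""
    return 2.0 * (x - 3.0), 4.0 * (y + 1.0)


def gradient_descent(x, y, learning_rate=0.1, steps=200):
    """Repeatedly step against the gradient and return the final point."""
    for _ in range(steps):
        gx, gy = grad_f(x, y)
        x -= learning_rate * gx
        y -= learning_rate * gy
    return x, y


if __name__ == "__main__":
    x_min, y_min = gradient_descent(0.0, 0.0)
    print(f"minimum near x={x_min:.4f}, y={y_min:.4f}, f={f(x_min, y_min):.6f}")
```

In a neural network the "function" is the training error and the variables are the weights, but the update rule has the same shape.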

I've written this exact matrix library in every language from Basic, Fortran, C, C++, C# and Delphi to JavaScript, so I'm feeling pretty good about the nuts and bolts of the problem.

You mention backward calculations, but as I understand it, genetic algorithms are an alternative to back propagation methods.

I'm not certain I've seen (or know) what the difference is between these two things. Let me see if I can describe what I know and you tell me where I need to re-think or research.

- In the simplest terms, an ANN takes a bunch of inputs and runs them through a series of matrix operations that end in some outputs. This, I think, is called forward propagation.

- For example, the ACAA robot takes infrared proximity sensors as input. It does the matrix operations, and the outputs are the speed and direction of the two motors.

- The matrix is just a population of numbers that are pseudo-randomly chosen at first and are conceptually the weighting factors and bias values of the equations. I don't think I've seen the term in the literature yet, but this sounds like the genome you mentioned.

- All the above happens on the robot. It sounds like the ACAA robot ran through these operations for a given amount of time using the same genome, while keeping track of its results. Because the data was random, the bot exhibited spinning around, running into walls and other random behavior.

- At this point, the bot evaluates its results against some goal. For the ACAA bot, it was to maximize speed, minimize spinning around and maximize distance from the walls. Based on the difference between the results and the goals, it adjusts the weighting factors and biases, rebuilds the matrices (genome) and runs another test. This, I think, is called back propagation.

- After a while, it converges on a solution and we have the best genome to run our bot for the given task. At this point we should be able to put the bot in a different maze and it'll drive around it pretty efficiently.
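The forward-propagation and evaluation steps in the list above can be sketched in a few lines of Python. This is a hypothetical controller, not the paper's exact network: the layer sizes (8 IR inputs, 4 hidden units, 2 motor outputs), the tanh activation and the weight range are assumptions. The per-step fitness term follows the form usually quoted for the ACAA experiment, average wheel speed times a turning penalty times a wall-proximity penalty, but the exact normalization used here is my own assumption and should be checked against the paper.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Random weight matrix (n_out rows x n_in columns) plus one bias per row."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1.0, 1.0) for _ in range(n_out)]
    return weights, biases


def layer_forward(inputs, weights, biases):
    """One matrix-vector product followed by a tanh squashing function."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(ir_readings, network):
    """Run the IR readings through every layer; the last layer gives motor commands."""
    activations = ir_readings
    for weights, biases in network:
        activations = layer_forward(activations, weights, biases)
    return activations  # [left_speed, right_speed], each in (-1, 1)


def step_fitness(left, right, ir_readings):
    """Per-step fitness V * (1 - sqrt(dv)) * (1 - i), all terms scaled to [0, 1]."""
    v = (abs(left) + abs(right)) / 2.0  # average wheel speed
    dv = abs(left - right) / 2.0        # how much the wheels disagree (turning)
    i = max(ir_readings)                # strongest proximity reading, 0..1
    return v * (1.0 - math.sqrt(dv)) * (1.0 - i)


if __name__ == "__main__":
    rng = random.Random(42)
    network = [make_layer(8, 4, rng), make_layer(4, 2, rng)]  # hypothetical sizes
    ir = [0.0, 0.1, 0.0, 0.0, 0.0, 0.0, 0.7, 0.2]            # made-up sensor frame
    left, right = forward(ir, network)
    print(left, right, step_fitness(left, right, ir))
```

Summing `step_fitness` over a test run gives one score per genome, which is what the "adjust and run another test" step compares.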

I've got a long way to go before I can even start writing this part of the project. But to put it in the context of Inqster, I'll set it up in a big maze similar to the ACAA experiment and use the same goal. There will be lots of sensors on it, but the ToF sensors will really be the closest equivalent to the proximity sensors. Say I use the 8x8, but only really need to read the one row shooting out almost horizontally. The outputs will be the same: speed and direction of the two motors. Basically, the only differences are that Inqster is a LOT bigger than theirs and I'll use WiFi instead of the umbilical cord.

My understanding of training a large neural network with back propagation is that they require thousands of iterations and high speed computations which would take a very long time on a home computer.

That is my understanding also. In a purely computer simulation, thousands or even millions of forward/backward genome generations might take mere seconds. On their bot, 8 sensors means the matrix is only 8 columns by four rows, so one life test would take only microseconds to calculate. Really, the time issue they discuss (taking days) has nothing to do with compute power. It is simply that running the robot in the real world takes time, and this is all done on one genome. I believe that running the simulation and recalculating the back propagation could be done entirely on the ESP32, without bothering to WiFi it to and from a base station.
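A rough way to check the "microseconds" claim is to count multiply-accumulate operations (MACs) per forward pass: a fully connected layer with n inputs and m outputs needs n × m of them. This is back-of-the-envelope arithmetic only, using the sizes quoted above (8 columns, four rows); actual timing on an ESP32 would also depend on the activation function, number format and memory access.

```python
def macs_per_forward_pass(layer_sizes):
    """Multiply-accumulates for a fully connected net given its layer widths."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


if __name__ == "__main__":
    print(macs_per_forward_pass([8, 4]))     # 8 columns x 4 rows
    print(macs_per_forward_pass([8, 4, 2]))  # plus a hypothetical 2-output layer
```

A few dozen MACs per step is trivial even for a small microcontroller, which supports the point that the real-world runs, not the arithmetic, dominate the time.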

For my learning curve and experimenting with ANN, I will run a second learning state where I throw all the sensor data at it:

- 43,200 = 240x180 Arducam ToF as the forward-facing sensor

- 64 = 8x8 VL53L5CX ToF as the aft-facing sensor

- 3 = 3-axis accelerometer

- 3 = 3-axis gyro

- 3 = 3-axis magnetometer

- 1 = barometer altitude

- 3 = 3 DoF GPS position

Total = 43,277 columns in the matrix. At first, I'd use the same goals again, but the ANN will have to learn that 99% of those inputs are of no value, and thus their weights will go to zero. I'd expect this to be WiFi'd and run on a PC, but even these numbers are nothing for a decent PC. I've done Finite Element Analyses, which involve basically the same kind of matrix mathematics on matrices with millions of columns and rows.
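Tallying the inputs in code makes the column count easy to re-check as sensors are added or dropped. This sketch derives each sensor's element count from its resolution (an 8x8 VL53L5CX frame has 64 zones); the dictionary keys are just descriptive labels, and the single-layer weight count assumes every input feeds the two motor outputs directly, which is a simplification.

```python
SENSOR_INPUTS = {
    "Arducam ToF, forward (240 x 180)": 240 * 180,
    "VL53L5CX ToF, aft (8 x 8)": 8 * 8,
    "accelerometer (3-axis)": 3,
    "gyro (3-axis)": 3,
    "magnetometer (3-axis)": 3,
    "barometer altitude": 1,
    "GPS position (3 DoF)": 3,
}


def total_inputs(sensors):
    """Number of matrix columns: one per sensor reading."""
    return sum(sensors.values())


def weights_for_single_layer(n_inputs, n_outputs=2):
    """Weights plus biases if every input feeds both motor outputs directly."""
    return n_inputs * n_outputs + n_outputs


if __name__ == "__main__":
    n = total_inputs(SENSOR_INPUTS)
    print(n, weights_for_single_layer(n))
```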

Then the second goal will be my original one for Inqling Jr: mapping a building in 3 dimensions. My previous plan for Inqling Jr was to do that by totally brute-force coding from first principles of vision, knowing what the sensors are telling me (where the pixels are pointing in relation to the bot) and doing all the trigonometry to describe it mathematically. Here in Inqster, I'm hoping to see that the ANN will just figure this stuff out without really knowing what the sensors are... just that it gave it a number.
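For comparison, the "brute-force" trigonometry mentioned above mostly comes down to turning each ToF pixel's distance into a 3D point. A minimal sketch, assuming the field of view is spread evenly across the pixels (a simplification of a real lens model), that the distance is measured along the pixel's ray, and a hypothetical sensor-frame convention of x forward, y left, z up. The field-of-view values in the usage line are placeholders, not the Arducam's specifications, and mounting offsets and the robot's pose would be further transforms on top.

```python
import math


def pixel_angles(col, row, width, height, hfov_deg, vfov_deg):
    """Azimuth and elevation (radians) of a pixel centre from the optical axis.

    Positive azimuth = left, positive elevation = up. Assumes the field of
    view is spread evenly across the pixels.
    """
    azimuth = (0.5 - (col + 0.5) / width) * math.radians(hfov_deg)
    elevation = (0.5 - (row + 0.5) / height) * math.radians(vfov_deg)
    return azimuth, elevation


def ray_to_point(distance, azimuth, elevation):
    """Convert a range along the pixel's ray to (x forward, y left, z up)."""
    horizontal = distance * math.cos(elevation)
    return (
        horizontal * math.cos(azimuth),
        horizontal * math.sin(azimuth),
        distance * math.sin(elevation),
    )


if __name__ == "__main__":
    # Placeholder: top-left pixel of a 240 x 180 frame, hypothetical 70 x 55 deg FOV
    az, el = pixel_angles(0, 0, 240, 180, 70.0, 55.0)
    print(ray_to_point(1.5, az, el))
```

Repeating this for every pixel and every robot pose is exactly the bookkeeping the ANN approach hopes to avoid.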

At least that is my current understanding and strategy. 😊

At the moment, my biggest missing piece is where they talk about the intermediate (hidden) layers. So far, nothing I've read indicates how to decide how many of these layers to use NOR how many elements to put in them. They seem to be picked totally at random at the moment. More study will hopefully enlighten me. 😎

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

I don't quite see how they formulate things like ChatGPT and DALL-E from the input side, but I know they are talking hundreds of millions of nodes (DoF). So the matrix mathematics they're talking about requires matrices with hundreds of millions of columns and rows. That is where the need comes in for GPUs to do the calculations. The Nvidia RTX 4090, for instance, has 16,384 cores, a 2.52 GHz clock and 24 GB of memory to throw these huge matrices around.

In the context of where we are, even with my idea of throwing every sensor at it, I'm only at 43K DoF. For a normal PC (i5 or i7) this is nothing. It might even be possible on the ESP32.
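Whether roughly 43K inputs fits on an ESP32 is mostly a memory question rather than a speed question. A rough sketch, assuming 32-bit float weights and a fully connected first layer; the hidden-layer widths are made-up examples. For reference, the original ESP32 has about 520 KB of internal SRAM, and part of that is taken by Wi-Fi and the RTOS, so the "fits" check below is optimistic. Larger layers would need external PSRAM, smaller number formats, or a PC.

```python
BYTES_PER_FLOAT32 = 4
ESP32_INTERNAL_SRAM = 520 * 1024  # total on-chip SRAM, not all of it free for data


def first_layer_bytes(n_inputs, n_units):
    """Memory for a fully connected layer's weights and biases stored as float32."""
    return (n_inputs * n_units + n_units) * BYTES_PER_FLOAT32


if __name__ == "__main__":
    n_inputs = 43_000  # roughly the 43K inputs discussed above
    for units in (2, 16, 64):
        size = first_layer_bytes(n_inputs, units)
        verdict = "fits" if size < ESP32_INTERNAL_SRAM else "does not fit"
        print(f"{units:3d} units: {size / 1024:8.1f} KiB ({verdict} in internal SRAM, on paper)")
```

So a direct inputs-to-motors layer is plausible on paper, but even a modest hidden layer pushes the weights well past internal SRAM.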

I ran across this product in an advertisement while researching ANN. Although it's way too pricey for me, it sounded like something you might be interested in.

As far as I can tell, the robot in the article does not learn a maze; it simply avoids obstacles. When it said the robot learns to navigate, I think it simply meant it could move about without hitting anything.

What I have read about neural network design is that it is a bit of a black art. The more features it has to process, the larger the ANN. There may be an exponential rise in size for larger and more numerous inputs to recognize (e.g. size of images, number of things to recognize).

The chromosome has a one-to-one correspondence with the set of neural weights. You shuffle the chromosome and translate that into weights. Back propagation doesn't use chromosomes to shuffle weights; it works backward through the net to adjust the weights so that next time they give a result closer to the desired output for that particular input. Our brains are first wired up by genes evolved over many generations of individuals that survived to reproduce; that is like evolving a functional neural network. We can also modify that "wiring" as individuals; that is more like the back propagation process.
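To make the back-propagation side of that contrast concrete, here is a minimal sketch for the smallest possible case: one sigmoid neuron, one training example, squared error. The input values, target and learning rate are invented for illustration. Instead of shuffling a chromosome, each step computes how the error changes with each weight and nudges every weight in the direction that brings the output closer to the desired value for that input. In a multi-layer net the same `delta` is passed backward through each layer, which is where the name comes from.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_step(weights, bias, inputs, target, learning_rate=0.5):
    """One forward pass and one backward pass; returns updated weights, bias, and error."""
    # Forward pass
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    out = sigmoid(z)
    error = 0.5 * (out - target) ** 2
    # Backward pass: d(error)/dz, using sigmoid'(z) = out * (1 - out)
    delta = (out - target) * out * (1.0 - out)
    new_weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - learning_rate * delta
    return new_weights, new_bias, error


if __name__ == "__main__":
    w, b = [0.1, -0.2, 0.05], 0.0          # hypothetical starting weights
    x, target = [1.0, 0.5, -1.0], 0.9      # hypothetical input and desired output
    for step in range(5):
        w, b, err = train_step(w, b, x, target)
        print(step, round(err, 5))
```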

1. Define primitives in the form of a chromosome

2. Build many of these chromosomes, each with a different arrangement of genes

3. Test the control system (neural network) generated from every chromosome

4. Select and reproduce those chromosomes which produced better fitness according to some criteria (e.g. fewer collisions?)

5. Go to step 3
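The five steps above map onto a short loop. This is a minimal sketch under stated assumptions, not the ACAA paper's exact genetic algorithm: each chromosome is simply a list of real-valued weights (one gene per weight), and the fitness function is a stand-in (closeness to a hypothetical target weight vector) where the real version would run the robot or a simulation and count collisions, speed and so on. Tournament selection, one-point crossover, Gaussian mutation and keeping the single best individual (elitism) were all chosen for brevity.

```python
import random

N_GENES = 10        # one gene per network weight (hypothetical size)
POP_SIZE = 30
GENERATIONS = 60
MUTATION_RATE = 0.1
MUTATION_SIZE = 0.2


def fitness(chromosome, target):
    """Stand-in fitness: higher is better, 0 is perfect. A robot test would go here."""
    return -sum((g - t) ** 2 for g, t in zip(chromosome, target))


def tournament(population, scores, rng, k=3):
    """Pick k random individuals and return the fittest (selection)."""
    picks = rng.sample(range(len(population)), k)
    return population[max(picks, key=lambda i: scores[i])]


def crossover(a, b, rng):
    """One-point crossover: head of one parent, tail of the other."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def mutate(chromosome, rng):
    """Add small Gaussian noise to a random subset of genes."""
    return [g + rng.gauss(0.0, MUTATION_SIZE) if rng.random() < MUTATION_RATE else g
            for g in chromosome]


def evolve(target, seed=1):
    rng = random.Random(seed)
    # Steps 1-2: many chromosomes, each with a random arrangement of genes
    population = [[rng.uniform(-1.0, 1.0) for _ in range(N_GENES)] for _ in range(POP_SIZE)]
    history = []
    for _ in range(GENERATIONS):
        # Step 3: test every chromosome
        scores = [fitness(c, target) for c in population]
        best = max(range(POP_SIZE), key=lambda i: scores[i])
        history.append(scores[best])
        # Step 4: keep the best unchanged, breed the rest from selected parents
        next_gen = [population[best]]
        while len(next_gen) < POP_SIZE:
            child = crossover(tournament(population, scores, rng),
                              tournament(population, scores, rng), rng)
            next_gen.append(mutate(child, rng))
        population = next_gen  # Step 5: back to step 3
    return population[0], history


if __name__ == "__main__":
    best, history = evolve([0.5] * N_GENES)
    print(f"best fitness: first generation {history[0]:.3f}, last {history[-1]:.3f}")
```

Because the best chromosome is carried over unchanged, the best score can never get worse from one generation to the next.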

Neural nets are interesting but beyond me when it comes to the mathematics and different designs. Thus I use the hard-coded approach to visual recognition. The Roomba robot doesn't use nets to find markers in the visual field to navigate. There is also an algorithm for facial recognition that doesn't use a net.

I don't have money to spend on expensive hardware. The lidar returns distances, from which I guess you could construct 3D shapes. That is not, however, how we see the world.

You mean like this?

https://dronebotworkshop.com/esp32-object-detect/

Not sure if they do it for genetic algorithms or any data that is not an image.

@thrandell FYI

@inq wrote

"I'm hoping to see that ANN will just figure this stuff out without really knowing what the sensors are... just that it gave it a number."

Hoping for a magic self learning network 🙂

Personally I like to understand how the "magic" works.

Mapping input to output for obstacle avoidance doesn't seem to be much of a problem to solve.

I think Figure 1 is a good visual representation of evolving a neural network.

https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.1000292

To enlarge an image, right-click it and choose Open link in new window.

Another link that uses the same image and may be helpful:

https://www.frontiersin.org/articles/10.3389/fnbot.2018.00042/full

Material Properties

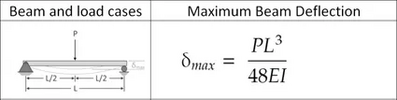

I want to put some simple suspension on the robot so that it can absorb some bumps instead of jostling all the guts around or even jumping around. I'll use a simple cantilever, leaf-spring-type suspension where everything bends, versus having pivots like a car. Anyway, to calculate the sizes of things and how much unloaded deflection to build into them, I need some material properties of the 3D-printed plastic.

Giving it some thought with the equipment I have, I came up with the perfect test sample to determine the Flexural Modulus (stiffness property): a simple flat 3D print, 240 mm x 15 mm x 2 mm. I just took a WAG at the size I think will be needed.

Also, not having an expensive, calibrated load cell, I came up with an equally simple test cell.

All that is necessary is to bend the sample over the pen on top of the scale and deflect the ends until they touch the table. This gives the known deflection of the beam (the thickness of the scale and pen) and the load that results from bending it.

This data can then be used with the beam equation to calculate the Flexural Modulus.
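If the pen acts as a centre support and the two ends are pushed down to the table, the setup behaves like a three-point bend test, and the standard small-deflection beam result is δ = F·L³ / (48·E·I) with I = b·h³/12, which rearranges to E = F·L³ / (4·b·h³·δ). A sketch, assuming the span L is the distance between the two table contact points and that the scale reading in grams is converted to newtons. The numbers in the usage line are placeholders, not the measurements behind the results below.

```python
G = 9.80665  # standard gravity, m/s^2


def flexural_modulus(load_grams, span_m, width_m, thickness_m, deflection_m):
    """Three-point bending: E = F L^3 / (4 b h^3 delta), returned in pascals."""
    force_n = load_grams / 1000.0 * G
    return force_n * span_m ** 3 / (4.0 * width_m * thickness_m ** 3 * deflection_m)


def expected_load_grams(modulus_pa, span_m, width_m, thickness_m, deflection_m):
    """Inverse of flexural_modulus: the scale reading expected for a given E."""
    force_n = modulus_pa * 4.0 * width_m * thickness_m ** 3 * deflection_m / span_m ** 3
    return force_n / G * 1000.0


if __name__ == "__main__":
    # Placeholder geometry: 240 mm span, 15 mm x 2 mm section, 10 mm deflection
    grams = expected_load_grams(2.06e9, 0.240, 0.015, 0.002, 0.010)
    print(f"{grams:.1f} g on the scale would correspond to 2.06 GPa")
```

The h³ term means a small error in the printed thickness has a large effect, so measuring the actual sample thickness with calipers is worthwhile.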

Results

The good news: using ABS plastic, I got an extremely good correlation with the expected engineering value for injection-molded parts. This comparison is possible because ABS is a well-established engineering plastic, while PLA is not. In fact, even though several references stated that 3D printing would be lucky to get even 75% of the injection-molded value, I got 90%.

Eflex = 2.06 GPa

The bad news: I also created a Polycarbonate / Carbon Fiber sample. The manufacturer claims near-aluminum properties. It came out only about 4% stiffer than ABS! And only about 3% as stiff as aluminum... I think someone is telling a story.

Eflex = 2.15 GPa (Polycarbonate / Carbon Fiber)

Eflex = 69 GPa (Aluminum)

For those interested in PLA:

Eflex = 3.36 GPa

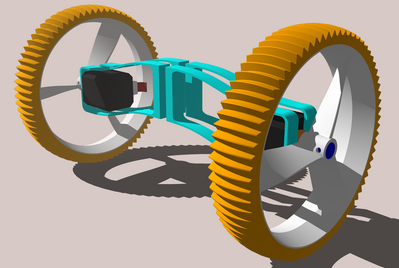

Control Arm Design

Using the Flexural Modulus, here is the design for the control arms. They are pre-deflected downward so that, when loaded with about one kilogram of chassis, batteries, electronics and other equipment, the control arms should be just about straight and level.
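A cantilever arm loaded at its tip deflects by δ = F·L³ / (3·E·I) with I = b·h³/12, so the pre-bend needed for the arm to end up straight under load is roughly that δ. A minimal sketch, assuming the arms are printed in the ABS measured above; the arm length and cross-section, and the assumption that the roughly 1 kg load is shared equally by three arms, are hypothetical. It ignores the arm's own weight and large-deflection effects.

```python
G = 9.80665     # standard gravity, m/s^2
E_ABS = 2.06e9  # Pa, flexural modulus measured above for printed ABS


def tip_deflection_m(load_kg, length_m, width_m, thickness_m, modulus_pa=E_ABS):
    """Small-deflection tip deflection of an end-loaded rectangular cantilever."""
    force_n = load_kg * G
    inertia = width_m * thickness_m ** 3 / 12.0  # second moment of area
    return force_n * length_m ** 3 / (3.0 * modulus_pa * inertia)


if __name__ == "__main__":
    per_arm_kg = 1.0 / 3.0  # hypothetical: 1 kg shared equally by three arms
    delta = tip_deflection_m(per_arm_kg, 0.100, 0.015, 0.006)  # hypothetical arm size
    print(f"pre-bend the arm about {delta * 1000:.1f} mm")
```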

A nice bit of engineering and those wheels look good. If you print another set and a few more chassis parts you will have a nice stable rover bot 😀. But I guess you are adamant on a three wheeler that can go topsy turvy when bundled over by an errant rock. That should help to confuse your mapping algorithms. 😎 What CAD package do you use? That picture shows some nice rendering and even has a shadow effect. Very pretty. Good progress, keep us posted 👍

But I guess you are adamant on a three wheeler that can go topsy turvy when bundled over by an errant rock.

I definitely want/need the zero-turn ability, and I've not seen a decent four-wheel design that keeps it without a lot of crabbing or using Mecanum wheels. Not sure how those would tolerate dirt, grass and gravel. Besides... 3D printing is CHEAP... one 8" wheel costs about $2 in plastic.
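The zero-turn ability falls straight out of differential-drive kinematics. Assuming two driven wheels with the third wheel acting as a passive caster (my assumption about the layout), the body's forward speed is the average of the two wheel speeds and its turn rate is their difference divided by the track width. Equal and opposite wheel speeds give zero forward speed, i.e. a turn in place. The track width in the usage line is a made-up value.

```python
def body_velocity(v_left, v_right, track_width_m):
    """Forward speed (m/s) and turn rate (rad/s, positive = counter-clockwise)."""
    forward = (v_left + v_right) / 2.0
    turn_rate = (v_right - v_left) / track_width_m
    return forward, turn_rate


if __name__ == "__main__":
    print(body_velocity(-0.2, 0.2, 0.30))  # equal and opposite: spin in place
    print(body_velocity(0.3, 0.3, 0.30))   # equal speeds: straight line
```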

I've given up on the sunny-side-up AND over-easy ability concept. I think the last straw was... eventually, I want to mess with GPS and it really needs to stay sunny-side-up. 😜

What CAD package do you use?

Nothing fancy... Just an old version of SketchUp from when Google still owned it. Version 7.0.10247.

It will be interesting to see how you go.

I have been rereading the @thrandell thread and getting his neural net working to test on the simulated robot.

Reading that book was a walk down memory lane for me. I graduated from the University of Oregon in the mid 80’s and Pascal was the programming language that the department had us use. Then I spotted this comment in his code and I remembered that I had a thing for that series of books by Donald Knuth, The Art of Computer Programming.

Being a starving student I could never afford to buy one, but they looked so cool.

Tom

To err is human.

To really foul up, use a computer.