Perhaps this might give you what you need to know?

https://mattmazur.com/2015/03/17/a-step-by-step-backpropagation-example/

Out of curiosity I put the code in a loop after adding this code at the end,

    // update weights
    w1 = nw1; w2 = nw2; w3 = nw3; w4 = nw4;
    w5 = nw5; w6 = nw6; w7 = nw7; w8 = nw8;

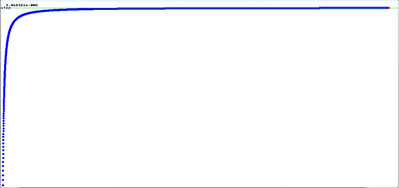

to see if the weights actually converged to values that would produce the desired outputs, that is, where out1 was close to target1 and out2 was close to target2. It worked as expected. It seemed to converge quickly and then slowed down, reaching a very close match by 60,000 cycles.

This shows Etotal from 0 to 10,000 cycles. I was surprised how quickly it converged to values where out1 was close to target1 and out2 was close to target2.
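For anyone wanting to reproduce this, here is a minimal C++ sketch of the same 2-2-2 network, using the inputs, initial weights, targets and learning rate from the Mazur article (biases held fixed, as in the article). It is not @robotbuilder's actual code; the `MazurNet` struct and its method names are made up for illustration. Each cycle is one forward pass followed by one backward pass, and all eight new weights are computed from the old ones before any are overwritten, which is what the `// update weights` lines do.

```cpp
#include <cmath>

// 2 inputs -> 2 hidden -> 2 outputs, values from the Mazur walkthrough.
// Biases b1 and b2 are not trained, matching the article.
struct MazurNet {
    double i1 = 0.05, i2 = 0.10;                 // inputs
    double t1 = 0.01, t2 = 0.99;                 // targets
    double w1 = 0.15, w2 = 0.20, w3 = 0.25, w4 = 0.30, b1 = 0.35;
    double w5 = 0.40, w6 = 0.45, w7 = 0.50, w8 = 0.55, b2 = 0.60;
    double eta = 0.5;                            // learning rate
    double outH1 = 0, outH2 = 0, outO1 = 0, outO2 = 0;

    static double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

    // FORWARD PASS: returns Etotal = sum of 1/2 (target - out)^2
    double forward() {
        outH1 = sigmoid(w1 * i1 + w2 * i2 + b1);
        outH2 = sigmoid(w3 * i1 + w4 * i2 + b1);
        outO1 = sigmoid(w5 * outH1 + w6 * outH2 + b2);
        outO2 = sigmoid(w7 * outH1 + w8 * outH2 + b2);
        return 0.5 * (t1 - outO1) * (t1 - outO1) + 0.5 * (t2 - outO2) * (t2 - outO2);
    }

    // BACKWARD PASS: uses the outputs stored by the last forward() call
    void backward() {
        double dO1 = (outO1 - t1) * outO1 * (1 - outO1);   // dE/dnet_o1
        double dO2 = (outO2 - t2) * outO2 * (1 - outO2);   // dE/dnet_o2
        double dH1 = (dO1 * w5 + dO2 * w7) * outH1 * (1 - outH1);
        double dH2 = (dO1 * w6 + dO2 * w8) * outH2 * (1 - outH2);

        double nw5 = w5 - eta * dO1 * outH1, nw6 = w6 - eta * dO1 * outH2;
        double nw7 = w7 - eta * dO2 * outH1, nw8 = w8 - eta * dO2 * outH2;
        double nw1 = w1 - eta * dH1 * i1,    nw2 = w2 - eta * dH1 * i2;
        double nw3 = w3 - eta * dH2 * i1,    nw4 = w4 - eta * dH2 * i2;

        // update weights
        w1 = nw1; w2 = nw2; w3 = nw3; w4 = nw4;
        w5 = nw5; w6 = nw6; w7 = nw7; w8 = nw8;
    }

    // One forward + one backward pass per cycle; returns Etotal afterwards
    double train(int cycles) {
        for (int c = 0; c < cycles; ++c) { forward(); backward(); }
        return forward();
    }
};
```

On a fresh `MazurNet`, `forward()` should give the article's starting Etotal of about 0.2984, and after one `backward()` w5 should be about 0.3589. Recording `train()`'s result at intervals gives an Etotal-versus-cycles curve.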

@robotbuilder, thank you for the snippet. It does a fine job of clearly showing each phase of the process. Is the FORWARD PASS the same as your discussion of feedforward? Or is that something I'll run into as I progress in studying NNs?

At this phase, I'm mostly interested in the time it takes to learn. The Floreano reference by @thrandell mentioned days. I know that is purely because the problem is far larger AND because it had to actually move the bot in the real world before gathering data and iterating...

With that in mind, I've taken your example (at 60,000 cycles), instrumented it and run it on several devices. Like you, I plan to run any complex NN learning on a PC, with data sent over WiFi to/from the bot.

- 16 ms - Intel i7 3.3GHz Windows 10, VS C++, using double variables - Baseline

- 31 ms - Intel i7 3.3GHz Windows 10, VS C++, using float variables - Interesting that using the lower precision variables is actually slower. I suspect the processor spends more time converting back and forth between floats and doubles than doing the calculation.

I'm not sure how pertinent this is... I gather that the long learning phase is not indicative of the time needed to use the trained network. So a robot may not need to learn, but just take advantage of the learning. The desktop/laptop computer is clearly faster. But... it might make things easier if everything is on the bot. Results below are float / double times:

- 71457 / 71457 ms - Arduino UNO (on the UNO's AVR chip, double is the same 32-bit type as float, so the two times are identical)

- 10619 / 18540 ms - ESP8266 at 80 MHz

- 5306 / 9267 ms - ESP8266 at 160 MHz

- 3864 / 6498 ms - Raspberry Pi Pico W, RP2040 at 133 MHz

- 443 / 4119 ms - ESP32-S3 at 240 MHz - Note: Not a misprint! The floating point unit built into the ESP32-S3 only works on floats... not doubles. And it is certainly kicking it! There is supposedly a built-in matrix processor which should significantly improve this 443 number. I haven't quite figured out how to use that yet.
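On the float vs double puzzle: in C++ a bare literal like `1.0` is a double, so an expression such as `1.0 / (1.0 + exp(-x))` with a float `x` is promoted and computed in double anyway, and C's `exp()` is the double version (`expf()`, or `std::exp` on a float, stays in float). That might be part of why float was no faster on the PC, and on the ESP32-S3, whose FPU only handles single precision, it could throw away the speedup. Below is a hypothetical timing harness, templated on the number type so one source covers both runs; the loop body is a stand-in resembling one weight update, not the actual benchmarked code. On the microcontrollers, Arduino's `millis()` would replace `std::chrono`.

```cpp
#include <chrono>
#include <cmath>

// T is the floating-point type under test (float or double).
template <typename T>
T sigmoid(T x) {
    // T(1) keeps the constant in T; a bare 1.0 would promote a float expression to double.
    return T(1) / (T(1) + std::exp(-x));   // std::exp has float and double overloads
}

// Stand-in workload: gradient descent on one weight of a single sigmoid neuron
template <typename T>
T busyLoop(int cycles) {
    T w = T(0.15);
    for (int c = 0; c < cycles; ++c) {
        T out = sigmoid<T>(w * T(0.05) + T(0.35));
        w -= T(0.5) * (out - T(0.01)) * out * (T(1) - out) * T(0.05);
    }
    return w;
}

// Runs the workload, stores its result, and returns elapsed milliseconds
template <typename T>
long long timeMs(int cycles, T* result) {
    auto start = std::chrono::steady_clock::now();
    *result = busyLoop<T>(cycles);
    auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::milliseconds>(stop - start).count();
}
```

Calling `timeMs<float>(60000, &f)` and `timeMs<double>(60000, &d)` on each board gives the pair of numbers for that board from the same source file.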

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

@Inq wrote: "Is the FORWARD PASS the same as your discussion of feedforward?"

Keep in mind I am not experienced with artificial neural networks and have no intention of actually using them myself.

In that video example you posted I hard code for features rather than hope they will appear in a neural network. A hard coded solution is faster, uses less memory and doesn't require training of thousands of examples.

I guess forward pass and feedforward mean the same thing here. I have seen the term feed forward used in describing the brain's ability to select what to look for based on current context. Thus the brain will identify an object faster if it is expected to be there.

The best set of weights is unknown, so the weights are chosen randomly to start with. Training a network means using some algorithm, like back propagation, to adjust the weights to best fit the patterns the network needs to recognize.

Back propagation is a known way to move the initial weights toward a better solution.

Genetic Evolution of a neural net is apparently another way to move the weights toward a better solution and is the subject of this thread by @thrandell. The neural network he is using actually feeds the output back to the input (recurrent network) so the actual input is the current input plus the previous output.

"There is supposedly built-in matrix processor which should significantly improve this 443 number."

I am sure the neural net libraries would make use of such hardware. I don't think you need to worry about the low-level programming to use a neural net.

These are libraries you can use with Python.

https://analyticsindiamag.com/top-7-python-neural-network-libraries-for-developers/

And I see there are libraries you can use with C++, such as

https://www.opennn.net/

"In that video example you posted I hard code for features rather than hope they will appear in a neural network. A hard coded solution is faster, uses less memory and doesn't require training of thousands of examples."

I understand, and if the problem is well defined, I totally agree. As I go through these YouTube videos, articles and books, it is becoming clearer and clearer how it works, why it works, and why in some cases it is the only way to approach a problem... and I find it very interesting. Let me give you an example of the path I want to try.

For the first problem, I'd like to tackle a 2-wheel self-balancing robot, similar to my Inqling Jr but without the training wheels. I will also give it the mechanical ability to right itself after it has fallen. This is, as you say, really pretty easy to do in a direct, hard-coded way; a simple Google search will turn up hundreds of examples, white papers and research papers. For me, the point would be to learn the capabilities of a self-taught neural network. I'd start with blank parameters and let the bot learn for itself how to get up, balance and move.

The second problem I would consider impossible to do in this direct way: building a bipedal robot. Yes, there are thousands out there, and 99% of them are toys that go at one (or maybe two) speeds and look like a drunken sailor. The only exception that I've seen is Boston Dynamics. As I understand it, BD's robots are completely choreographed, and it takes hundreds or thousands of human hours to make those elaborate dance videos.

I would again start from a blank brain and let it learn to get up, balance, walk and run after falling a million times. The key thing that I have not seen on any robot, including BD's, is the ability to change pace in fine increments. As with humans, the gaits of a stroll, walk, jog, long-distance run and sprint are completely different. Yes, you could explicitly program every one of those, but what happens when the pace needs to be 23% of the way between two of them? A hard-coded solution would fail. Then there is a variety of terrain: gravel, up/down/transverse hills, grass, bumps, holes. The NN could be used to achieve walking and then refined afterwards to tackle all the other conditions of speed and terrain. It falls, evaluates all the incoming data it gathered during the fall, and runs another backward pass to refine the weights and biases. It gets up and tries again.

I've seen a couple of animated, computer-generated learning sessions like this, but usually with a quadruped totally simulated in code. I've also seen one or two that appeared to be training a real quadruped robot this way. But all of these are collegiate research teams and professors, or large corporations with deep pockets.

I see it as a challenge that I might be able to do it. I give myself less than a 10% chance, but hey... at least my wife doesn't have to worry about me spending all my time outside the house. 🤣


For anyone at the Noob level like me, I've found this online book that so far seems very helpful with both theory (with only a little math) and a concrete problem.

http://neuralnetworksanddeeplearning.com/

It also confirmed that the feedforward computation of a neural network is THE FORWARD PASS described in @robotbuilder's code.

Also... @byron this book is going to carry me kicking and screaming using Python. 😋


When a grazing animal is born the gaits are already there. There are birds that can run and peck food the moment they come out of the egg. They depend on innate interconnected neurons in the spinal cord called central pattern generators which are modulated by force and touch feedback and can switch gaits when required. They are triggered into action by signals higher up in the nervous system and feedback from the muscles.
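To make the central pattern generator idea a bit more concrete, here is a toy sketch: two coupled phase oscillators, one per leg, whose coupling pulls them half a cycle apart so the legs alternate. It is an illustrative model chosen for this example, not how biological CPGs are wired, and the names and constants (`TwoLegCPG`, `K`, `freqHz`) are assumptions. In this toy the pace is a single continuous parameter, so an in-between speed is just a different `freqHz` value; real gait changes also alter which limbs move together, which the sketch does not model.

```cpp
#include <cmath>

// Two phase oscillators with anti-phase coupling. The phase difference
// obeys d(phi)/dt = 2K sin(phi), which settles at phi = pi (legs alternating).
struct TwoLegCPG {
    double theta1 = 0.0, theta2 = 0.3;   // phases in radians, starting nearly in phase
    double freqHz = 1.0;                 // stepping rate, can be changed at any time
    double K = 2.0;                      // coupling strength

    // Advance both oscillators by dt seconds (simple Euler integration)
    void step(double dt) {
        const double pi = std::acos(-1.0);
        const double omega = 2.0 * pi * freqHz;
        // Each oscillator is pulled toward being half a cycle (pi) away from the other
        double d1 = omega + K * std::sin(theta2 - theta1 - pi);
        double d2 = omega + K * std::sin(theta1 - theta2 - pi);
        theta1 += d1 * dt;
        theta2 += d2 * dt;
    }

    // Rhythmic commands in -1..+1, e.g. targets for the two hip joints
    double leftLeg() const { return std::sin(theta1); }
    double rightLeg() const { return std::sin(theta2); }

    // Phase difference wrapped into [0, 2*pi)
    double phaseDiff() const {
        const double twoPi = 2.0 * std::acos(-1.0);
        double d = std::fmod(theta2 - theta1, twoPi);
        return d < 0 ? d + twoPi : d;
    }
};
```

Stepping it with `step(0.01)` a few thousand times should leave `phaseDiff()` near π, and changing `freqHz` mid-run changes the rhythm without disturbing the alternation.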

https://en.wikipedia.org/wiki/Central_pattern_generator

Once you train a neural network the resulting weights, learned behaviors, can be transferred to blank neural networks so they can be "born" already able to do the things the first network had to learn (evolve).
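Transferring learned weights to a "blank" network mostly comes down to copying numbers between networks of the same shape. A minimal sketch, assuming the weights are kept in a flat `std::vector<float>` (the helper names `saveWeights` and `loadWeights` are hypothetical): write them as one comma-separated line that could be sent over WiFi or stored on an SD card, then parse it back on the receiving side.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Serialize weights as "w0,w1,w2,...". Nine significant digits are enough
// for a float to survive the text round trip exactly.
std::string saveWeights(const std::vector<float>& w) {
    std::ostringstream out;
    out.precision(9);
    for (std::size_t i = 0; i < w.size(); ++i) {
        if (i) out << ',';
        out << w[i];
    }
    return out.str();
}

// Parse a line produced by saveWeights() back into weights
// (std::stof throws if a field is not a number).
std::vector<float> loadWeights(const std::string& line) {
    std::vector<float> w;
    std::istringstream in(line);
    std::string field;
    while (std::getline(in, field, ','))
        w.push_back(std::stof(field));
    return w;
}
```

The receiving network only works if it has the same layout (same number of layers and nodes, same ordering of weights) as the one that was trained.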

There is a thread elsewhere here about using electrical stimulation of trigger neurons to start the walking in someone with spinal damage.

@inq What about Honda's ASIMO? The choreographed dances are for PR. Have you seen other videos where several BD robots maneuver through various obstacles? I am very sure that is not choreographed.

There is even a cow-sized 4-legged robot that the army is evaluating for a troop-support role. It can carry a lot of hardware. In the video, I saw it was maneuvering through typical wooded hilly terrain, and when it did fall, it got right back up. Isn't the dog-style robot being used all over the place now?

First computer 1959. Retired from my own computer company 2004.

Hardware - Expert in 1401 and 360, fairly knowledgeable in PCs plus numerous MPUs and MCUs

Major Languages - Machine language, 360 Macro Assembler, Intel Assembler, PL/I and PL1, Pascal, Basic, C plus numerous job control and scripting languages.

My personal scorecard is now 1 PC hardware fix (circa 1982), 1 open source fix (at age 82), and 2 zero day bugs in a major OS.

After reading that paper by Elman I’ve wondered about how the recurrent connections worked in this experiment. Their robot never got stuck in a corner because of the history of actions that the recurrent connections provided allowing it to work its way out.

Elman J.L. (1990) Finding structure in time. Cognitive Science, 14:179-211.

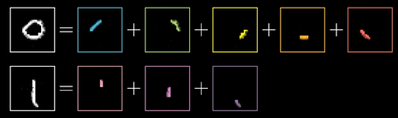

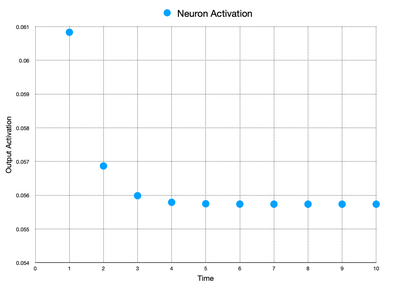

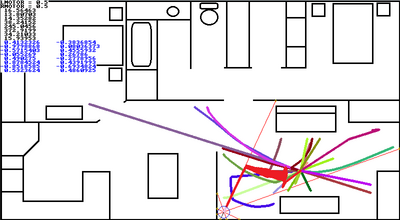

So I did a little exercise with my neural network code. I simplified things by reducing the number of input sensors to 2 and the number of output and hidden (context) nodes to 1 each. The weight of the hidden node was held at 0.9, while all the other inputs and weights were random values.

So the delay that the recurrent connection provides did show up, as a gradual reduction of the output. That output gets mapped to a motor speed, so it looks like it would have slowed the robot down to a stable speed around Time 8. Most of the runs of this neural network showed the speed increasing in a similar way.
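A minimal sketch of a setup like the one described (2 inputs, 1 hidden/context node, 1 output), in the style of Elman's simple recurrent network: the hidden node's previous value is fed back into it with weight 0.9, while the other weights are random. The activation functions (tanh for the hidden node, sigmoid for the output), the bias terms and the struct name are assumptions, not Tom's code.

```cpp
#include <cmath>
#include <random>

// 2 inputs -> 1 hidden node (which also sees its own previous value) -> 1 output
struct TinyElman {
    double wIn1, wIn2, bHidden, wOut, bOut;   // random weights
    double wContext = 0.9;                    // recurrent (context) weight, held fixed
    double context = 0.0;                     // hidden activation from the previous step

    explicit TinyElman(unsigned seed) {
        std::mt19937 rng(seed);
        std::uniform_real_distribution<double> u(-1.0, 1.0);
        wIn1 = u(rng); wIn2 = u(rng); bHidden = u(rng); wOut = u(rng); bOut = u(rng);
    }

    // One time step: returns the output in 0..1 (e.g. mapped to a motor speed)
    double step(double x1, double x2) {
        double hidden = std::tanh(wIn1 * x1 + wIn2 * x2 + bHidden + wContext * context);
        context = hidden;                     // copied back for the next step
        return 1.0 / (1.0 + std::exp(-(wOut * hidden + bOut)));
    }
};
```

Because the previous hidden value re-enters with weight 0.9, a change in the inputs only works its way into the output over several time steps, and with constant inputs (and a context weight below 1) the output settles to a steady value.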

Tom

To err is human.

To really foul up, use a computer.

The paper is 33 years old; I wonder if there have been improved genetic weight-changing algorithms since then. I am assuming here that the eight sensors return the distance to the nearest obstacle and that this is turned into a value between -0.5 and +0.5 as input?
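If the inputs are scaled that way, a minimal sketch might look like this. The direction (closer means larger) and the range limits are assumptions for illustration; a range of 3 to 9.5 inches, like Tom's IR sensors, is used in the example.

```cpp
#include <algorithm>

// Hypothetical input scaling: clamp a distance reading to the sensor's range,
// then map it linearly so that dMin -> +0.5 (obstacle close) and dMax -> -0.5.
double scaleDistance(double d, double dMin, double dMax) {
    d = std::max(dMin, std::min(d, dMax));     // clamp to the sensor's range
    return 0.5 - (d - dMin) / (dMax - dMin);
}
```

For example, `scaleDistance(6.25, 3.0, 9.5)` is halfway through the range and gives 0.0.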

So has it been working for you in a real robot? Wouldn't you need a lot of robots? Or would you run the same robot with different genes and then select the genes with the best performance to try again?

The way I imagine it, the robot would move according to the ANN's mapping from the input pattern to two output values, which determine the speed and direction of two motors. If it hits something, the genes (weights) are changed.

This is an experiment I just did using my simulated robot, which adjusts the direction (not the speed) of the robot by an amount depending on the eight distance readings. It is a hard-coded mapping, but I could try using an artificial neural network instead.


@inq FYI

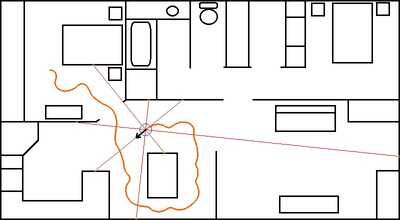

These are example paths taken by the simulated robot from a collection of neural nets with random weights. Each one painted a different color path and at each trial the robot was started in the same pose. The net has 8 inputs which are the distances along eight different directions from the robot to a wall. Each motor neuron has its own set of 8 weights.
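The net just described can be sketched in a few lines: each motor neuron takes a weighted sum of the 8 distance inputs and squashes it into -1..+1, where the sign gives the motor direction and the magnitude the speed. The bias term, the tanh squashing and the names are assumptions, not the code that produced the paths above.

```cpp
#include <array>
#include <cmath>

constexpr int kInputs = 8;   // distances along eight directions

// One motor neuron with its own set of 8 weights (plus an assumed bias)
struct MotorNeuron {
    std::array<double, kInputs> w{};   // zero-initialized
    double bias = 0.0;
    double fire(const std::array<double, kInputs>& in) const {
        double sum = bias;
        for (int i = 0; i < kInputs; ++i) sum += w[i] * in[i];
        return std::tanh(sum);         // -1..+1: sign = direction, size = speed
    }
};

struct MotorCommand { double left, right; };

// Both motor neurons see the same 8 inputs
MotorCommand drive(const MotorNeuron& leftN, const MotorNeuron& rightN,
                   const std::array<double, kInputs>& distances) {
    return { leftN.fire(distances), rightN.fire(distances) };
}
```

With all-zero weights both motors get 0 and the robot just sits still, and equal-but-opposite outputs make it spin on the spot, two of the behaviours that random weights can produce.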

The fitness factor is not hitting a wall!

When I implement the chromosomes I figure the fitness factor could be how far it goes before hitting a wall?
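One way the "how far it goes before hitting a wall" fitness could be computed, assuming the simulator logs the robot's position at each step along with a collision flag (the `Sample` struct and `fitness` function are hypothetical):

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// One logged simulation step
struct Sample { double x, y; bool hitWall; };

// Total path length travelled, stopping at the first sample that touched a wall
double fitness(const std::vector<Sample>& path) {
    double travelled = 0.0;
    for (std::size_t i = 1; i < path.size(); ++i) {
        travelled += std::hypot(path[i].x - path[i - 1].x, path[i].y - path[i - 1].y);
        if (path[i].hitWall) break;
    }
    return travelled;
}
```

Under this measure a robot that stops dead or spins on the spot scores 0, unlike a plain "did not hit a wall" test, though one that shuttles back and forth still earns credit for every leg of the shuttle.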

At this stage the network is just given random weights for each trial. I have not yet implemented using chromosomes to select the weights.

Often the robot never hit a wall because the motors got an input that stops them completely!

In one set of random weights the robot just kept rotating on the spot because both motors were getting the same speed but opposite directions.

In the trial where I captured the image below, it behaved in a strange way, going back and forth while drifting to the left until it shot off into the corner (the path is the red blob).

One neat thing about the Mazur back propagation example is that you can check that your own back propagation code gives the same values, and thus know you must have gotten it right. I guess if the system iterates to the correct mapping between input and output, that is what counts.
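Where no published worked example is available, a common way to check back propagation code (a standard technique, not something used in this thread) is a numerical gradient check: nudge each weight up and down by a small h, measure how the error changes, and compare that slope with the derivative the back propagation computed. A minimal sketch, with the error function passed in as a callback; `gradientCheck` is a hypothetical name.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Compares each analytic derivative with a central difference
// (E(w + h) - E(w - h)) / 2h and returns the largest absolute mismatch.
double gradientCheck(const std::function<double(const std::vector<double>&)>& error,
                     const std::vector<double>& weights,
                     const std::vector<double>& analyticGrad,
                     double h = 1e-5) {
    double worst = 0.0;
    std::vector<double> w = weights;
    for (std::size_t i = 0; i < w.size(); ++i) {
        const double saved = w[i];
        w[i] = saved + h; const double ePlus = error(w);
        w[i] = saved - h; const double eMinus = error(w);
        w[i] = saved;                                   // restore before the next weight
        const double numeric = (ePlus - eMinus) / (2.0 * h);
        worst = std::max(worst, std::fabs(numeric - analyticGrad[i]));
    }
    return worst;
}
```

A mismatch of around 1e-8 or smaller suggests the derivatives are right; a wrong sign or a missing term usually shows up as a difference many orders of magnitude larger.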


@inq FYI

@thrandell wrote:

"I’m still baffled as to how a genetic algorithm can find a set of neural network parameters that allow a robot to navigate and avoid obstacles. I get how the history of actions provided by the recurrent connects help with things like getting stuck in a canyon, but the search space just seems so huge…"

The search space is huge! These algorithms depend on being able to "hill climb". So imagine you want to get to the highest point. You take one step in 8 directions and the one that is higher than the starting point is the one you select. You then repeat until there is no higher point than the one you are on. So you are not trying every position in the landscape. To select the weights randomly would be like sticking a pin in a landscape height map and hoping to hit the highest point.
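The hill-climbing idea above, sketched on a grid height map. This version is steepest ascent (it moves to the highest of the 8 neighbours rather than the first higher one it finds), and `height` is any function you supply; both are choices made for the illustration.

```cpp
#include <functional>
#include <utility>

// Look one step in each of the 8 directions, move to the highest neighbour if it
// beats the current spot, and stop when no neighbour is higher.
std::pair<int, int> hillClimb(const std::function<double(int, int)>& height,
                              int x, int y, int maxSteps = 10000) {
    for (int step = 0; step < maxSteps; ++step) {
        int bestX = x, bestY = y;
        double best = height(x, y);
        for (int dx = -1; dx <= 1; ++dx)
            for (int dy = -1; dy <= 1; ++dy) {
                if (dx == 0 && dy == 0) continue;   // skip the current spot
                const double h = height(x + dx, y + dy);
                if (h > best) { best = h; bestX = x + dx; bestY = y + dy; }
            }
        if (bestX == x && bestY == y) break;        // no higher neighbour: a peak
        x = bestX; y = bestY;
    }
    return {x, y};
}
```

It stops on the first peak it reaches, which may only be a local one; keeping a whole population of candidates, as evolutionary methods do, is partly a way to reduce the chance of getting stuck like that.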

The other thing about biological evolution is it would have to have taken place in working stages. Each stage worked in that it contributed to reproductive success but it could improve incrementally. The evolution of the eye is often used as a case in point. What you are trying to do is evolve a brain that knows what to do as opposed to building a single brain that can learn what to do in real time.

I read that the nets used by chatGPT use soft weights meaning after training they keep learning (changing their weights a process called adaption).

Trying to evolve a brain by running a set of weights on a single physical robot many times would be very time consuming. Biology used millions of examples running in parallel. With the simulated robot I can automate the process.

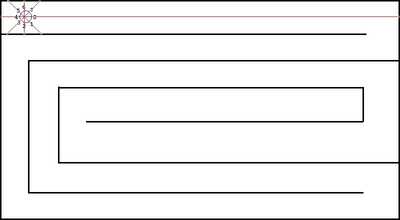

Giving it some thought I decided to change the plan as seen below. The goal was for the robot to travel the path to the end, turn around and go back again and repeat. It would be faster to actually hard code a solution using the distance values.


To try this experiment with one of my robots I needed to make a few changes:

- change out the battery for a constant source of power

- protect the IR sensors from all the hits that random motor speeds would generate in a small space

- find some way to log all the data generated by the genetic algorithm (at this point I’m thinking of swapping out the bumper for a micro SD breakout).

- shorten the distance that the IR sensors can detect. I have a feeling that because the maze that I’m using is small I may need to reduce the range of the sensors. Currently they can measure from 3” to 9.5” so they are ‘on’ most of the time. To shorten the range of the Infrared emitting diodes I would have to replace the 660 ohm resistors on the sensor board with something larger, like 1.2K ohms. I’m going to wait and see if it’s absolutely necessary before doing that.
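For a rough idea of what the resistor swap does, assuming each emitter is driven through a simple series resistor with a roughly constant forward voltage V_F, the LED current scales as 1/R, so going from 660 Ω to 1.2 kΩ gives about 55% of the original current whatever the supply voltage V_CC:

```latex
I_{\text{LED}} \approx \frac{V_{CC} - V_F}{R}
\qquad\Longrightarrow\qquad
\frac{I_{1.2\,\mathrm{k}\Omega}}{I_{660\,\Omega}} \approx \frac{660}{1200} = 0.55
```

How much that shortens the detection range depends on the emitter and receiver, so it would still need to be measured.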

So I scaled up a maze to fit the size of my swarm robot and picked up some silicone-covered 30 AWG wire and a cool slip ring from Adafruit. https://www.adafruit.com/product/736#tutorials

To see if I’m on the right track I decided to run a test of 80 individuals for 50 generations. I set up the code to run the test and apply the parameters from the fittest individual in the last generation to the ANN and run it in an infinite loop. That way I don’t need to monitor things very closely. A 50 generation run takes over 33 hours!
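For scale: 80 individuals × 50 generations is 4,000 trial runs, so a run of over 33 hours works out to roughly 30 seconds per trial. Below is a minimal generational genetic algorithm of the kind described, not Tom's actual code: each genome is a vector of network weights, `evaluate` stands in for one trial run and returns a fitness (higher is better), and the choices of keeping the fittest half unchanged and refilling with Gaussian-mutated copies (no crossover) are assumptions. Like the test run, it returns the fittest individual of the last generation.

```cpp
#include <algorithm>
#include <functional>
#include <random>
#include <utility>
#include <vector>

using Genome = std::vector<double>;   // one individual's network weights

// Requires populationSize >= 2. Calls evaluate() populationSize * generations times.
Genome evolve(const std::function<double(const Genome&)>& evaluate,
              int genomeSize, int populationSize = 80, int generations = 50,
              double mutationSigma = 0.1, unsigned seed = 1) {
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> init(-1.0, 1.0);
    std::normal_distribution<double> noise(0.0, mutationSigma);

    // Generation 0: random starting weights
    std::vector<Genome> pop(populationSize, Genome(genomeSize));
    for (auto& g : pop)
        for (auto& w : g) w = init(rng);

    std::vector<std::pair<double, int>> scored(populationSize);
    for (int gen = 0; gen < generations; ++gen) {
        // One trial per individual, then rank fittest first
        for (int i = 0; i < populationSize; ++i) scored[i] = {evaluate(pop[i]), i};
        std::sort(scored.begin(), scored.end(),
                  [](const std::pair<double, int>& a, const std::pair<double, int>& b) {
                      return a.first > b.first;
                  });
        if (gen == generations - 1) return pop[scored[0].second];   // fittest of last generation

        // Survivors: the fittest half, copied unchanged
        const int half = populationSize / 2;
        std::vector<Genome> next;
        for (int i = 0; i < half; ++i) next.push_back(pop[scored[i].second]);
        // Refill with mutated copies of randomly chosen survivors
        std::uniform_int_distribution<int> pick(0, half - 1);
        while (static_cast<int>(next.size()) < populationSize) {
            Genome child = next[pick(rng)];
            for (auto& w : child) w += noise(rng);
            next.push_back(std::move(child));
        }
        pop = std::move(next);
    }
    return pop.front();   // only reached if generations <= 0
}
```

On a physical robot, the number of `evaluate` calls is exactly what drives the run time, so population size and generation count trade directly against hours on the bench.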

It’s been amusing watching the behavior of the individuals as they ‘evolve’. Some do nothing, others just spin in a circle, some head for a wall. I find myself rooting for the ones that slowly move away from the walls, hoping that they make it through the selection process. LOL

Tom


I will have to go back over your posts in this thread and see if I can duplicate what you are doing but with a simulated version of the robots. I haven't tried any actual genetic algorithms to evolve the weights yet.

I am a bit scared to ask any questions as you might have answered them earlier.