The question has come up about whether or not a machine shows intelligence if it doesn't understand (explain) why it is doing something or how it works.

So how do you test for "understanding"?

The dictionary seems to be limited to giving synonyms as an answer.

Understanding means having a mental grasp.

https://en.wikipedia.org/wiki/Understanding

Maybe this is an example of understanding.

https://en.wikipedia.org/wiki/SHRDLU

"Why did you move the red block?" Answer: "To clear a space on which to put the blue block."

It can explain (understand?) its actions in terms of the goal result.

Another question might be "How did you stack the blocks?"

The answer would be an output of the actions required to stack the blocks from some given state.
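
To ground the "why" and "how" questions, here is a minimal sketch of a blocks-world planner that logs each action together with the goal behind it. It is not SHRDLU's actual implementation; the class `BlocksWorld` and its method names are hypothetical, chosen only for illustration. "Why" is answered by looking up the recorded reason for an action, and "how" by replaying the logged actions.

```python
class BlocksWorld:
    """Toy blocks world that logs every action with the reason it was taken."""

    def __init__(self, on):
        # `on` maps each block to what it rests on: "table" or another block.
        self.on = dict(on)
        self.log = []  # (action, reason) pairs in execution order

    def blocks_on(self, support):
        return [b for b, s in self.on.items() if s == support]

    def clear_top(self, block, reason):
        """Move whatever rests on `block` to the table, recording `reason`."""
        for upper in self.blocks_on(block):
            # Anything sitting on `upper` has to go first.
            self.clear_top(upper, f"to free {upper} so it can be moved")
            self.on[upper] = "table"
            self.log.append((f"move {upper} to table", reason))

    def put_on(self, block, target):
        """Achieve the goal 'block on target' (target must be a block)."""
        self.clear_top(block, f"to free {block} so it can be moved")
        self.clear_top(target, f"to clear a space on which to put {block}")
        self.on[block] = target
        self.log.append((f"move {block} to {target}",
                         f"to achieve the goal: put {block} on {target}"))

    def why(self, action):
        """Answer 'why did you do X?' from the recorded reasons."""
        for done, reason in self.log:
            if done == action:
                return reason
        return "I did not do that."

    def how(self):
        """Answer 'how did you do it?' as the ordered list of actions."""
        return [action for action, _ in self.log]


world = BlocksWorld({"red": "green", "green": "table", "blue": "table"})
world.put_on("blue", "green")
print(world.why("move red to table"))  # to clear a space on which to put blue
print(world.how())  # ['move red to table', 'move blue to green']
```

Nothing here requires the program to be conscious of anything: the explanation is just a lookup in the log of goals and actions.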

Of course I don't believe it has any conscious understanding.

So what is understanding?

This works by these actions having these effects.

Explain your actions!

I wanted this effect and thus implemented this action.

Actually most people probably don't really understand how a lot of things work. For example, how does a flush toilet work? (It uses the siphon effect.) Their answer might be: "It works by pushing a button." But at least they understand the goal of pushing the button. Is stating the goal showing an understanding of why you took a particular action? Is understanding revealed by showing the list of actions and effects?

So how do you test for "understanding"?

Scientists who study the early development of human children have already created many experiments to study and test how human understanding develops over time.

For me personally those methods and experiments will be more than sufficient for testing the understanding of any machine intelligence that I might personally create.

DroneBot Workshop Robotics Engineer

James

Have you ever seen any James Bruton videos? He has one up on vision recognition and other interfaces, controlled using basically an RGB lighting controller. It's very interesting.

Yes, I've seen his videos. I'm looking forward to using the face recognition software in OpenCV with the Jetson Nano. This isn't the same software and hardware he's using, but the result is basically the same. It returns the coordinates of a box where the face is located in the image.
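
As a rough illustration of what a robot can do with that box, here is a sketch that turns a face rectangle into a steering error for a pan/tilt camera. It assumes the box arrives as `(x, y, w, h)` in pixels, which is the form OpenCV's cascade detector reports; the function name `face_offset` and the normalisation to the range -1..1 are assumptions made for this example, not part of OpenCV.

```python
def face_offset(box, frame_width, frame_height):
    """Return (dx, dy): how far the face centre is from the frame centre.

    Each value is normalised to -1..1. Negative dx means the face is left
    of centre; negative dy means it is above centre (image y grows downward).
    """
    x, y, w, h = box
    face_cx = x + w / 2
    face_cy = y + h / 2
    dx = (face_cx - frame_width / 2) / (frame_width / 2)
    dy = (face_cy - frame_height / 2) / (frame_height / 2)
    return dx, dy


# A 100x100 face box centred in a 640x480 frame gives no steering error.
print(face_offset((270, 190, 100, 100), 640, 480))  # (0.0, 0.0)
```

The two numbers could be fed straight to pan and tilt servos as proportional corrections.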

I just got done watching that just before you posted it. I was going to post a new thread entitled, "Moving on from A.I. to A.C."

A.I. of course being Artificial Intelligence and A.C. being Artificial Consciousness.

Or, if we like we can call it A.S. for Artificial Sentience.

I don't think people are aware of how fast this technology is progressing. We should have a clue when the General Public is being offered their own ANN-development software and tutorial instructions on how to create self-learning machines. We are basically being offered the 'scraps' of the industry to play with.

In other words, if they are giving us these self-learning ANNs to play with you can rest assured that they already have their own highly protected technologies that go far beyond what we are seeing in the public domain.

Another thing that people should take note of is that China is aggressively pursuing A.I. in hopes of becoming the dominant global power in A.I. technology. And other countries aren't just sitting by waiting for China to grab that title. So the competition for the best A.I. technology is a major global race, not only between corporations but also between national militaries. So this technology is advancing at an exponential pace.

I posted this video in another thread:

They already have A.I. building even more powerful A.I. that is far beyond what humans can even design. So this is about to accelerate far beyond human expectations very quickly. Keep in mind that corporations and nation states all want to be there first. So if they can benefit from having A.I. design new A.I. you can bet your sweet bippy they'll do it. So beware of extremely accelerated advances in A.I. coming SOON to a robot near you.

Not everyone thinks these deep learning networks are going to become sentient, self aware, conscious (or whatever other word you may use for having subjective intelligent awareness).

https://www.wired.com/2017/04/the-myth-of-a-superhuman-ai/

https://www.wired.com/story/greedy-brittle-opaque-and-shallow-the-downsides-to-deep-learning/

Personally I am very sceptical as to any super sentient AI arising as this kind of hype has been going on since the beginning of AI.

My take is that we probably will be taken out by machines, but only because some idiot puts the AI "finger" on the nuclear button and says "play the game", and with no more intelligence than a chess-playing program it may make the choice to hit the button. Another scenario is that millions of human-seeking drones will be set loose like a swarm of killer bees and take us all out. In other words, killer machines may be the result of sentient humans giving decisions over to non-sentient machines.

In other words, killer machines may be the result of sentient humans giving decisions over to non-sentient machines.

I agree. I see this as being a far more likely threat than intelligent machines deciding to eliminate humans. Moreover, this is a threat that already exists today basically.

Not everyone thinks these deep learning networks are going to become sentient, self aware, conscious (or whatever other word you may use for having subjective intelligent awareness).

Yes, it's true that opinions on this vary widely. I think the point they were making in the video is that we may need to change our views on what we mean by terms such as consciousness and sentience. As they suggest in the video, these robots are basing decisions on how they are "perceiving" their own actions and their environment. As philosophers we can argue that this doesn't meet our philosophical standards for consciousness or self awareness, but these robots aren't likely to care about our philosophical opinions.

So even if we insist that they are just "Zombies", they could still pursue their own agendas. Whether they are having a subjective experience like humans is really a moot point as a practical matter.

Personally I am very sceptical as to any super sentient AI arising as this kind of hype has been going on since the beginning of AI.

But AI has come a long way since the beginning. In fact, when I was in college I took a course on AI. I was extremely interested in entering into the field and was very anxious to learn about AI. I took the course and was extremely disappointed. It was basically a course on "Expert Systems Programming". Something I had already learned how to do on my own. And to be honest, I personally felt that my own experience programming Expert Systems was actually quite a bit more than what the course taught. It was an extremely disappointing course.

But AI today is taking many different paths. I agree that AI based on ANNs alone is never going to result in any serious intelligence. But a lot of people who are developing AI are well aware of this. They use ANNs as a tool just like they use computers, and everything else. And they recognize that this is only a small part of the overall AI Architecture. Just as an example, take the robot arm they had there that was learning how to pick up the red balls and drop them into a jar. I can guarantee that there is a lot more going on there than just ANNs. The ANNs are just a way of organizing the data and serving as memories of how to do things. But ANNs alone are not the whole shebang.

Also regarding your perspective here:

Personally I am very sceptical as to any super sentient AI arising as this kind of hype has been going on since the beginning of AI.

What about the fact that AI is now designing better AI systems that even humans don't understand?

I mean, you say that this kind of hype has been going on since the beginning of AI, but AI designing itself better and faster than humans can do it is certainly a major difference from how AI started out.

Just because there may have been some unrealistic expectations early on is no assurance that machines won't be able to become self-replicating in ways that humans couldn't even dream of.

Did you watch the video "AI Child Created"? AI codes its own child. That's got to be a major departure from the beginnings of AI.

Did you watch the video "AI Child Created"? AI codes its own child. That's got to be a major departure from the beginnings of AI.

It was all over the place with rather vague but bold statements devoid of detail and lots of rubbery words. I could go through the whole video making pointed questions about what they were really talking about.

The reason for the hype is probably to attract funding.

It seems to me that all they are saying is that, while at the moment someone has to present hundreds of example images of bananas or cars to train a network (NASNet, the child), they have automated the process with a controller network (the parent). I assume the parent of the controller network was a human trainer? Like I say, it lacked detail.

The video seemed to allude to what is essentially evolving a network which is what I suspect they mean by "propose a 'child' model architecture" (make another variant network). Can we evolve an electronic brain? In theory probably. I think it can be done in working stages the way all our other technology has evolved.
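
To make "propose a child model architecture" concrete, here is a toy sketch of evolving a network description by mutation and selection. It is not the method from the video, which gave no such detail. The `score` function is a made-up stand-in for "train the child and measure its accuracy", and the names `mutate` and `evolve` are hypothetical.

```python
import random


def score(layers):
    """Stand-in for training a child and measuring accuracy (an assumption).

    Pretends the ideal network has three layers of 32 units each.
    Higher is better; 0 is a perfect match.
    """
    size_penalty = sum(abs(units - 32) for units in layers)
    depth_penalty = 20 * abs(len(layers) - 3)
    return -(size_penalty + depth_penalty)


def mutate(layers, rng):
    """Propose a child: resize one layer, or add or remove a layer."""
    child = list(layers)
    choice = rng.choice(["resize", "add", "remove"])
    if choice == "resize":
        i = rng.randrange(len(child))
        child[i] = max(1, child[i] + rng.choice([-8, -4, 4, 8]))
    elif choice == "add":
        child.append(rng.choice([8, 16, 32, 64]))
    elif len(child) > 1:
        child.pop(rng.randrange(len(child)))
    return child


def evolve(start, generations=200, seed=0):
    """Keep a child whenever it scores at least as well as its parent."""
    rng = random.Random(seed)
    best = list(start)
    history = [score(best)]
    for _ in range(generations):
        child = mutate(best, rng)
        if score(child) >= score(best):
            best = child
        history.append(score(best))
    return best, history


best, history = evolve([8])
print(best, history[0], history[-1])
```

The "parent" here is only a loop that proposes variants and keeps the better one, which is about as much as the description in the video commits to.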

Now regarding learning to change the weather video without input. If you knew how the ANN did this it may turn out to be a rather simple transformation (grass to snow) (sky to black but enhance car lights and reflections on road). That is the problem with our current ANNs: we don't know how their evolved algorithms work, and they can make major errors no human neural system would make.

That is the problem with our current ANNs: we don't know how their evolved algorithms work, and they can make major errors no human neural system would make.

I totally agree on this point. However, they aren't arguing for a case that they can duplicate human intelligence. They are simply arguing for a case that they are creating an Artificial Intelligence that is capable of creating even more complex Artificial Intelligence.

The fact that it can make errors makes it all the more dangerous.

~~~~~

On the concept of things like consciousness and sentience, my own personal view is that consciousness and sentience as humans experience it can never be achieved via a digital simulation. All that could ever amount to is a simulation of behaviors. We could argue that it would still constitute "intelligence", but perhaps not an actual awareness of being.

Also, I am not arguing that human-like awareness or consciousness is necessarily a good thing. After all there exist a lot of sentient humans who have very evil minds and agendas. So whether AI ever becomes conscious or sentient is a rather moot point in terms of how safe or dangerous it might be.

None the less, an AI that is capable of making its own decisions can clearly become a problem whether it has conscious intent or not. Heck just regular automated machines are often quite dangerous when they start to malfunction. An industrial hopper at a steel mill that pours molten steel onto the worker instead of into a crucible is obviously quite deadly, even though the machine itself had no intelligence or intent.

A machine that generates its own "intent" can be extremely dangerous. Perhaps even more dangerous if it has no conscious awareness. A machine that actually understands what it's doing would need to intentionally do something wrong. And then there would be the deep question of why it performed that action when it supposedly knew it was wrong.

So robots that don't understand what is wrong can obviously be a far greater threat than those that do.

On the concept of things like consciousness and sentience, my own personal view is that consciousness and sentience as humans experience it can never be achieved via a digital simulation. All that could ever amount to is a simulation of behaviors. We could argue that it would still constitute "intelligence", but perhaps not an actual awareness of being.

The neurological nature of a subjective experience is still unexplained. Given our current understanding it seems we could fully explain how a brain works without any need for it to have a subjective experience.

A favourite example is color. A machine can respond to and name the color of objects but is it having any subjective color experience (qualia) when it does so? We fully understand how a robot can separate red balls from blue balls and it in no way requires it to have any subjective experience of red or blue. What would our machines have to be doing internally for us to say "that process involves having a subjective experience of color".
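
The point is easy to see in code. A minimal sketch, assuming the camera supplies an average RGB reading for each ball: sorting reduces to comparing two numbers. The function names and the simple "red channel versus blue channel" rule are illustrative assumptions.

```python
def name_color(rgb):
    """Name a ball 'red' or 'blue' by comparing its red and blue channels."""
    r, g, b = rgb
    return "red" if r > b else "blue"


def sort_balls(balls):
    """Split a list of RGB readings into a red bin and a blue bin."""
    bins = {"red": [], "blue": []}
    for rgb in balls:
        bins[name_color(rgb)].append(rgb)
    return bins


print(sort_balls([(200, 30, 40), (20, 40, 210), (180, 60, 70)]))
```

The program "names" the color correctly, yet nothing in it looks like a candidate for a subjective experience of red or blue.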

This is also true of other human brains. We can infer they have subjective experiences but there is no objective way to tell if that is true or not unless one day we know what is happening when a neural network is "doing subjective experiences".

There have been a lot of interesting experiments on conscious vs. unconscious actions and maybe one day ...

In the meantime we can happily code our AI and label its behaviors as "seeing red", "intending to find light", "acting angry", "avoiding pain" and so on without any need for it to be conscious of color, intentions, emotions or pain.

In the meantime we can happily code our AI and label its behaviors as "seeing red", "intending to find light", "acting angry", "avoiding pain" and so on without any need for it to be conscious of color, intentions, emotions or pain.

Absolutely. In fact, this is what the fellow in the video entitled "How These Self-Aware Robots Are Redefining Consciousness" was attempting to address. He wasn't suggesting that philosophers need to redefine what they mean by consciousness, but rather from a purely practical sense we may need to redefine what we mean when we use these terms if we want to address the real issues with practical AI.

A robot that can behave in ways that emulate how conscious humans behave may be the only important aspect when considering AI.

The neurological nature of a subjective experience is still unexplained. Given our current understanding it seems we could fully explain how a brain works without any need for it to have a subjective experience.

Again, I agree. However, we already know that we are having a subjective experience. We know this from direct experience itself. So the question isn't whether a brain could function without subjective experience, but rather the question is, "How does it give rise to subjective experience?".

I believe that I have potential answers to that question. Obviously I'm in no position to provide compelling evidence for my ideas since, if that were the case, I would have already written up my paper on the topic and submitted it for review. I am confident enough in my ideas, though, to take a very strong position on what is required to obtain this result. And my answer in short is fairly simple. I claim that it can never be possible to create a subjective experience via a digital simulation. My position is that the only phenomenon that could give rise to this emergent phenomenon is analog feedback loops. And these cannot be simulated via a digital processor.

So while a thinking brain clearly does not need to have a subjective experience, I believe that an analog feedback loop can give rise to this phenomenon.

Do I want to build an AI that can actually have a genuine subjective experience? No, actually I don't. In fact, I would much rather have an intelligent robot that is not having any subjective experience at all.

Nonetheless, from a purely scientific and philosophical perspective, we as humans would probably like to have an understanding of exactly how our subjective experience is facilitated by our brain. And I would also imagine that once we learn how this is done there will most likely be humans who will want to build a truly sentient robot. Precisely why they would want to do that is beyond me, but once humans learn how to do something they will most likely do it whether it's a good idea or not.

In the meantime, the current direction that AI is going is far more likely to produce machines that simply act like they are having a subjective experience, but not really having one.

The bottom line is that we wouldn't be able to tell the difference from just interacting with them. We can't even determine for certain whether other humans we interact with are actually having a subjective experience. We can only assume that they are since we are having this experience ourselves. But even that is still just an assumption.

In the meantime we can happily code our AI and label its behaviors as "seeing red", "intending to find light", "acting angry", "avoiding pain" and so on without any need for it to be conscious of color, intentions, emotions or pain.

Absolutely. In fact, this is what the fellow in the video entitled "How These Self-Aware Robots Are Redefining Consciousness" was attempting to address.

Unfortunately, these are again bold but unclear statements about what is actually going on physically. They talk about goal spaces. Do they tell the robot arm where the ball is and then the robot arm uses its present position to move to a "closer" position until it reaches the ball? Does it know what a ball is? How did it learn how to grasp the ball? So much is not explained, as in the other video.

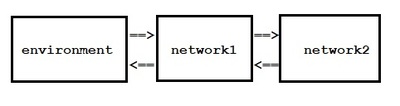
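
The simplest reading of the "move to a closer position until it reaches the ball" question can be written down directly. The sketch below is that naive scheme, not what the researchers in the video did (the video does not say). The names `step_toward` and `reach` are hypothetical, and the arm is reduced to a point in 2D that is told where the ball is.

```python
import math


def step_toward(pos, target, step=1.0):
    """Move at most `step` units in a straight line toward the target."""
    dx, dy = target[0] - pos[0], target[1] - pos[1]
    dist = math.hypot(dx, dy)
    if dist <= step:
        return target  # close enough to land exactly on the target
    return (pos[0] + step * dx / dist, pos[1] + step * dy / dist)


def reach(pos, target, step=1.0, max_steps=1000):
    """Repeat 'move closer' until the target is reached; return the path."""
    path = [pos]
    while pos != target and len(path) <= max_steps:
        pos = step_toward(pos, target, step)
        path.append(pos)
    return path


path = reach((0.0, 0.0), (3.0, 4.0))
print(path[-1], len(path))
```

Writing it out makes clear how little is involved: no learning, no notion of "ball", only a distance that shrinks.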

The idea of meta cognition (awareness of your own states) is not a new idea. Just as you can connect a network to the real world via sensory inputs and motor outputs to learn about that real world (as defined by its inputs) you can also connect another network to learn about that network.

What can a network learn? It can learn how its inputs change over time. It can learn how its inputs change with its actions (outputs).
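
As a deliberately tiny example of "learning how inputs change with actions", the sketch below keeps a running average of the change in one sensor reading that follows each action. It uses a lookup table rather than a neural network, which is a simplifying assumption; `ForwardModel` and its methods are hypothetical names.

```python
from collections import defaultdict


class ForwardModel:
    """Learns the average change in a sensor reading caused by each action."""

    def __init__(self):
        self.total = defaultdict(float)  # summed change per action
        self.count = defaultdict(int)    # number of observations per action

    def observe(self, before, action, after):
        """Record one experience: reading before, action taken, reading after."""
        self.total[action] += after - before
        self.count[action] += 1

    def predict(self, current, action):
        """Predict the next reading if `action` were taken now."""
        if self.count[action] == 0:
            return current  # no experience yet: assume nothing changes
        return current + self.total[action] / self.count[action]


model = ForwardModel()
model.observe(10, "forward", 12)
model.observe(12, "forward", 14)
model.observe(14, "back", 13)
print(model.predict(20, "forward"))  # 22.0
print(model.predict(20, "back"))     # 19.0
```

A second model of the same kind could take the first model's prediction errors as its input, which is one plain reading of a network learning about another network.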

The bottom line here is that, unless this is a section about the philosophy of AI, you have to ground what you are talking about in real software/hardware. That is the reason why, in the first post, I wrote about what "understanding" meant with the block-moving robot arm.

You must avoid word magic to pretend you understand some concept like self awareness using some arbitrary definition like "self awareness is nothing but the ability to self simulate". Just write the code and that is your theory and your definition. It may have nothing to do with human self awareness but it is a clear definition of what you are meaning by using the words.

What can a network learn? It can learn how its inputs change over time. It can learn how its inputs change with its actions (outputs).

I don't think these people are limiting themselves to just using neural networks. In fact, a neural network on its own can't do much of anything. All it amounts to is an extremely complex comparator. It's just basically a way of comparing a lot of inputs that determine a specific output. There necessarily also needs to be other computer algorithms involved that determine what to do after a specific configuration has been recognized. And those algorithms can themselves be dynamically modified by the robot. There's a lot more to it that's dynamically evolving than just the ANNs. The ANNs are simply a new tool that can be used to determine features of the real world to an extremely detailed degree.

They talk about goal spaces. Do they tell the robot arm where the ball is and then the robot arm uses its present position to move to a "closer" position until it reaches the ball?

I doubt that they were doing that, as that would hardly be worthy of reporting since they were already doing that in the 1950s.

Does it know what a ball is?

Let's put it this way. It can recognize what is and isn't a ball. Is that different from knowing what a ball is?

How does a human know what a ball is other than to have a description of the physical properties of a ball?

How did it learn how to grasp the ball?

From what they said it sounded to me like the robot learned how to grasp a ball from trial and error. Not unlike how a human baby learns to grasp a ball.
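
Trial-and-error learning of this kind can be sketched in a few lines. This is a toy hill climber, not the method used in the video: the "gripper" is a single number (its opening width), `grasp_error` is an invented stand-in for feedback from the real world, and the ball width of 4.0 is arbitrary.

```python
import random

BALL_WIDTH = 4.0  # arbitrary value, for illustration only


def grasp_error(opening):
    """Stand-in for real-world feedback: how badly the grip misses."""
    return abs(opening - BALL_WIDTH)


def learn_grasp(start=10.0, trials=500, seed=1):
    """Try random variations of the grip and keep only the ones that improve."""
    rng = random.Random(seed)
    best = start
    errors = [grasp_error(best)]
    for _ in range(trials):
        attempt = best + rng.uniform(-1.0, 1.0)  # try a random variation
        if grasp_error(attempt) < grasp_error(best):
            best = attempt                       # keep what worked better
        errors.append(grasp_error(best))
    return best, errors


best, errors = learn_grasp()
print(round(best, 3), round(errors[0], 3), round(errors[-1], 3))
```

The learner is never told the ball's width; it only gets feedback on how well each attempt went, which is the sense in which this counts as trial and error.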

It seems to me the more we tend to reduce this to questions about how a robot learns things we see extreme parallels with how humans learn them as well. After all, even humans have to learn to walk and talk by trial and error, along with copying from examples of seeing other people do these things. If a robot can learn in this same fashion then (other than the question of having a subjective experience while doing so) how is it any different from how a human learns to do things?

Let's not forget also that teaching a robot to do something cannot be considered "cheating". Because after all, that's what we do with humans all the time. Humans need to be trained on how to do things too. So if robots ever get to the point where they can learn how to do things without being trained, then they will have surely passed humans in terms of understanding.

Think about this too:

When a student first begins college they may not understand much of anything. But after being trained they are marveled at when they take it to the next step on their own.

This could be the case with AI building better AI. The original AI could have been trained to build new AI, but when it does this it does it far better than it was taught to do. That would be like a human student taking the subject to the next level leaving their professor in the dust. So you can't knock the AI just because it was originally taught by a human. What it does with what it has learned is the important question.

Learning machines have interested me for a long time, but I don't like hand-waving, vague, incomplete talk about what someone imagines their machine is doing. I want to open it up and see exactly what it is doing.

Remember the claims Bill makes about what DB1 is going to be able to do?

"This is a robot capable of becoming aware of its environment and “learning” tasks you assign to it."

So he will be defining exactly what he means by that with actual hardware and software.