Yes, I have a little robot base, but I can't sit my laptop on it, and the laptop is what I use to run my visual processing routines.

You already have a Raspberry Pi on it. Just connect your laptop to the Raspberry Pi via a WiFi dongle and you can have full control over the robot from your laptop.

I control my little robots from my laptop. I'm currently using an ESP8266-01 module to exchange information between the robot and the laptop. There's no need to have the laptop physically mounted on the robot.

I have full two-way communication. So as far as my laptop computer is concerned it is onboard the robot. It doesn't need to be there physically.
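For anyone who finds the WiFi side mysterious, here is a minimal sketch of what two-way communication can look like using plain TCP sockets from Python's standard library. It is not the ESP8266 code described above; it assumes the robot end can run Python (for example on a Raspberry Pi), and the `ack` reply format and the function names are made up for illustration. The demo runs both ends on one machine so it can be tried without any hardware.

```python
import socket
import threading


def handle_robot_side(server_sock):
    """Robot side: accept one connection and answer each command line with an ack."""
    conn, _ = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader, \
            conn.makefile("w", encoding="utf-8") as writer:
        for line in reader:  # loop ends when the laptop closes the connection
            writer.write(f"ack {line.strip()}\n")  # hypothetical reply format
            writer.flush()


def send_command(host, port, command):
    """Laptop side: send one text command and return the robot's one-line reply."""
    with socket.create_connection((host, port), timeout=5) as sock, \
            sock.makefile("r", encoding="utf-8") as reader, \
            sock.makefile("w", encoding="utf-8") as writer:
        writer.write(command + "\n")
        writer.flush()
        return reader.readline().strip()


def demo():
    """Run both ends on localhost; on a real robot the server would run on the robot."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    threading.Thread(target=handle_robot_side, args=(server,), daemon=True).start()
    reply = send_command("127.0.0.1", port, "forward")
    server.close()
    return reply


if __name__ == "__main__":
    print(demo())
```

On a real setup the laptop would call `send_command` with the robot's IP address instead of `127.0.0.1`.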

But the camera has to be on the robot.

I use the webcam built into the PC and also plug in more webcams using the USB ports.

WiFi coding is all a bit of a mystery to me. I wouldn't know how to write code to send and receive data over WiFi.

Are these suitable for such a project?

https://www.jaycar.com.au/wifi-mini-esp8266-main-board/p/XC3802

https://www.altronics.com.au/p/z6360-esp8266-mini-wifi-breakout-module/

Are these suitable for such a project?

I'm no expert on ESP modules. I'm not currently attempting to transfer huge amounts of video data between the robot and the laptop. Exactly what the limitations might be on that I can't say.

But the camera has to be on the robot.

Since you already have the Raspberry Pi on the robot, my approach would be to do as much video processing as you can within the rpi. And then just send a lesser amount of critical data over to the laptop for more detailed analysis.

Without knowing exactly how you are doing things it's difficult to offer any suggestions. When I do things like this my first focus is on reducing the amount of data exchanged as much as possible. Depending on what you are trying to do, you might be able to get by with sending over frames of video at the slowest rate possible.
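To put rough numbers on "reducing the amount of data", here is a small sketch that works out the bandwidth of uncompressed video. The resolutions and frame rates are illustrative assumptions, not measurements from anyone's robot, and real links would normally carry compressed frames (JPEG, for example), which are smaller than these raw figures.

```python
def raw_bandwidth_mbit(width, height, channels, fps):
    """Megabits per second needed to send uncompressed frames (1 byte per channel)."""
    bytes_per_frame = width * height * channels
    return bytes_per_frame * fps * 8 / 1e6


if __name__ == "__main__":
    # Assumed example: colour VGA at 10 frames per second.
    print(raw_bandwidth_mbit(640, 480, 3, 10))
    # Assumed example: small greyscale frames at 2 frames per second.
    print(raw_bandwidth_mbit(320, 240, 1, 2))
```

Dropping from colour VGA at 10 FPS to small greyscale frames at 2 FPS cuts the raw data rate by a factor of 60 in this example, which is the kind of saving worth trying before worrying about the WiFi module.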

In fact, going back to the idea of taking "baby steps" that's definitely where I would start. Start by analyzing single frames of video and then slowly increase the rate until you find where you might start hitting limitations. Then at least at that point you'll know precisely where you're at.
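One way to run that experiment without touching the camera settings is to keep the camera at its normal rate and simply skip frames before analysis. The sketch below is only an illustration of that idea; `select_frames` is a made-up helper that takes frame timestamps in seconds and returns the indices of the frames you would actually process at a chosen target rate.

```python
def select_frames(timestamps, target_fps):
    """Return indices of frames to keep so kept frames are at least 1/target_fps apart."""
    min_gap = 1.0 / target_fps
    kept = []
    last_kept_time = None
    for index, t in enumerate(timestamps):
        # Small tolerance so floating-point rounding does not drop a frame
        # that is exactly one interval after the previous kept frame.
        if last_kept_time is None or t - last_kept_time >= min_gap - 1e-9:
            kept.append(index)
            last_kept_time = t
    return kept


if __name__ == "__main__":
    one_second_at_30fps = [i / 30 for i in range(30)]
    print(select_frames(one_second_at_30fps, 10))
```

Start with a very low `target_fps`, check that the analysis still works, and raise it step by step until something breaks.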

Do you already have a video analysis program running on your laptop? If so, you could start there by seeing how far you can reduce frame rates before your video analysis begins to drop off and become ineffective. Then at least you'll know exactly what your limitations are.

I'm not currently running a camera and video analysis on my robots yet. I do have Raspberry Pis onboard, but I haven't gotten far enough along yet to be using cameras. I'm still working on ironing out my motor control circuits.

If you're ready to do video analysis from your robot you're way ahead of me.

I haven't even done the standard distance sensors yet. I'll be doing those before I move on to video sensing.

DroneBot Workshop Robotics Engineer

James

When I do things like this my first focus is on reducing the amount of data exchanged as much as possible.

What he said. Start easy, add levels of complexity one step at a time. This way you can see where the failure point is.

Since you already have the Raspberry Pi on the robot, my approach would be to do as much video processing as you can within the rpi.

He doesn't want the Pi to process the data; he wants his laptop to do it, which I believe (from the ROS classes) is the correct approach.

On the other hand, why not use the Pi to send the data? It's already there, and it saves on having to add another module.

On the third hand, if you're bound and determined to add another module, I've successfully used the ESP32-CAM to send live video. The video processor doesn't want a fast speed anyway. Once you start processing, it will slow down by itself to maybe 10 FPS or less. Remember, it's going to be processing each frame individually, so it can't handle too much data anyway.

If it were me, I'd rather use the Pi, but if you're bound and determined to add another module (which I recommend against), get the "AI-Thinker" version of the ESP32-CAM. The generic ones don't have good software compatibility.

I don't yet know how to capture images for processing using the RPi, and anyway it might be a tad slow.

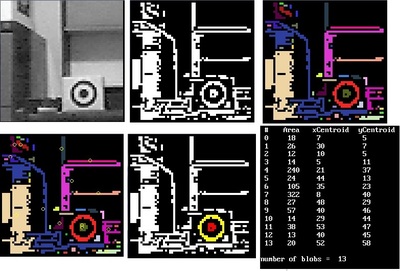

Vision is a problem I have thought about for a long time. Elsewhere I gave an example of tracking a simple target in real time. This returns the x,y position and its size gives a distance measure while the height to width ratio gives an angle to the target. With two targets you might use triangulation to compute a position. They call things like this fiducials.
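As a rough illustration of how size and height-to-width ratio can turn into numbers, here is a sketch using a simple pinhole camera model. It is not the tracking code mentioned above. It assumes a flat square target of known height, a known focal length expressed in pixels, and a target turned only about its vertical axis, so the apparent width shrinks by roughly the cosine of the angle. It cannot tell a left turn from a right turn, and it ignores perspective distortion.

```python
import math


def estimate_target(pixel_width, pixel_height, real_height_m, focal_length_px):
    """Estimate distance (metres) and viewing angle (degrees) of a square target.

    Assumptions: pinhole camera, square target, rotation about the vertical axis only.
    """
    # Pinhole model: apparent height in pixels is inversely proportional to distance.
    distance_m = focal_length_px * real_height_m / pixel_height
    # A square seen head-on has width == height; turning it narrows the width.
    ratio = min(1.0, pixel_width / pixel_height)
    angle_deg = math.degrees(math.acos(ratio))
    return distance_m, angle_deg


if __name__ == "__main__":
    # Assumed example values: 0.2 m target, 600 px focal length.
    print(estimate_target(50, 100, 0.2, 600))
```

The focal length in pixels would have to come from calibrating the actual camera; the 600 used here is just a placeholder.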

Let's say you have a remote controlled car. How do you control it? By visual feedback. You do not make use of on board sensors.

Motor control doesn't have to be complex. Forward, back, rotate left, rotate right? Imagine you have a remote controlled car. You can navigate it about the house using visual feedback, no other sensors required! Now replace yourself with an AI with vision.
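A sketch of how small that command set can be, assuming a two-wheel differential drive base (left and right motors driven independently). The command names and the `wheel_speeds` helper are invented for this example; the returned values are just signed speed fractions that a motor driver would turn into PWM.

```python
# Direction of (left wheel, right wheel) for each command: +1 forward, -1 reverse.
WHEEL_DIRECTIONS = {
    "forward": (1, 1),
    "back": (-1, -1),
    "rotate_left": (-1, 1),   # wheels turn in opposite directions to spin in place
    "rotate_right": (1, -1),
    "stop": (0, 0),
}


def wheel_speeds(command, speed=1.0):
    """Map a high-level command to (left, right) wheel speeds in the range -speed..speed."""
    if command not in WHEEL_DIRECTIONS:
        raise ValueError(f"unknown command: {command}")
    left, right = WHEEL_DIRECTIONS[command]
    return left * speed, right * speed


if __name__ == "__main__":
    print(wheel_speeds("rotate_left", 0.5))
```

Whatever is doing the vision, a person or a program, only has to pick one of these commands each time it looks at a frame.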

The robot will need a sense of touch, the details of which I am still experimenting with, but in theory the navigation and location can be done with vision alone.

Hopefully I can get some vision control demos up and running. This is my current experimental base using vacuum robot geared motors with encoders.