OK, so the overall plan is to have an AI chatbot running in an app on either a PC or a smartphone, and the robot is essentially an array of sensors with mobility.

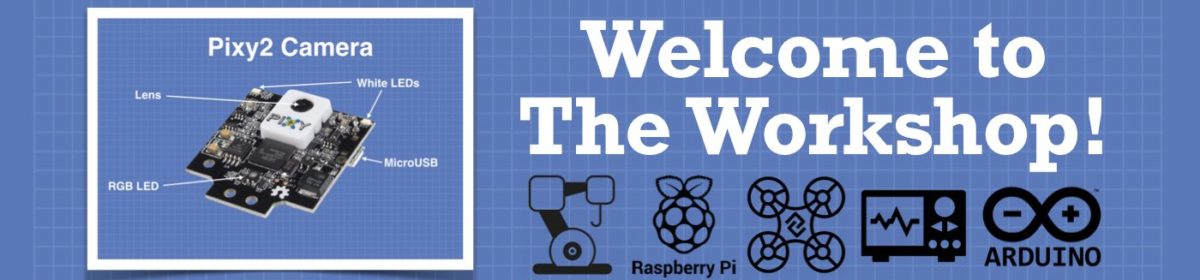

The robot has the ESP32 AI-Thinker with a camera, Wi-Fi and Bluetooth.

The Arduino has all the motor controls, and it could have other sensors hooked up to it, although I don't have any as of right now.

Eventually I want the AI chatbot to read in from all the sensors and use SLAM (Simultaneous Localization and Mapping) to make a map of the area it is in using the camera. It will also update that map as it moves, so if someone puts a box down on the floor it will know it is there.

From there, you give it object recognition for household furniture so it can determine which room of the house it is in.

Add object recognition for household items and you can ask it to get you a soda: it would use neural nets to figure out that the soda is kept in the fridge and the fridge is in the kitchen, then go to the fridge in the kitchen, get the soda and return it to you.

So the app talks to the ESP32 and gets the camera feed. If the ESP32 has a command for the Arduino or needs info from it, it's wired right to it.

The ESP32 is located right at the end of the arm on the horizontal wrist joint, overlooking the clasper, which can open, close, and twist right or left.

I am not a roboticist, so I'm talking out of my league here - bear that in mind. But I hope this makes some kind of sense to you while you're looking at the overall structure.

You have 6 steppers but you'll almost NEVER need to set targets for all of them at the same time and have them all reach their targets at the same time.

However, it makes perfect sense to pair up the wheels, since they will almost always be used in unison and will almost always be required to reach their target positions in sync. So pair them up, write command methods like "move wheels by( left steps, right steps )" and "turn body( angle in degrees, direction )", and then write code in the sketch to carry out those commands.
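Here is a rough sketch of what those two wheel commands could look like. It is plain C++ so it can be tried on a PC first; the steps-per-revolution, wheel diameter and track width are made-up numbers, and `moveWheelsBy`, `turnBody` and `stepsForDistance` are just illustrative names, not anything from an existing library. It assumes a two-wheel differential drive that turns on the spot by running the wheels in opposite directions.

```cpp
#include <cassert>
#include <cmath>

// All constants below are assumptions for illustration; measure your own robot.
constexpr double kPi            = 3.14159265358979323846;
constexpr double kStepsPerRev   = 2048.0;  // assumed steps per wheel revolution
constexpr double kWheelDiameter = 65.0;    // assumed wheel diameter in mm
constexpr double kTrackWidth    = 150.0;   // assumed distance between the wheels in mm

struct WheelSteps {
    long left;
    long right;
};

enum class Direction { Left, Right };

// Convert a distance along the ground (mm) into motor steps.
long stepsForDistance(double mm) {
    const double circumference = kPi * kWheelDiameter;
    return std::lround(mm / circumference * kStepsPerRev);
}

// "move wheels by(left steps, right steps)": here it only packages the targets.
// On the MEGA this is where the targets would be handed to the stepper code.
WheelSteps moveWheelsBy(long leftSteps, long rightSteps) {
    return WheelSteps{leftSteps, rightSteps};
}

// "turn body(angle, direction)": spin on the spot by driving the wheels in
// opposite directions. Each wheel travels an arc of radius trackWidth / 2.
WheelSteps turnBody(double angleDegrees, Direction dir) {
    assert(angleDegrees >= 0.0);  // the direction is given separately
    const double arcMm = (kTrackWidth / 2.0) * (angleDegrees * kPi / 180.0);
    const long steps = stepsForDistance(arcMm);
    // Turning right: left wheel forward, right wheel backward.
    return dir == Direction::Right ? moveWheelsBy(steps, -steps)
                                   : moveWheelsBy(-steps, steps);
}
```

The point is that the brain only ever says "turn 90 degrees right"; the step arithmetic stays on the board that owns the motors.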

Similarly, you can think of the waist (don't know what it's called in robotics, but it's whatever rotates the arm into position), upper arm and lower arm as being completely separate from the wheels, but it would look nice if all three moved in sync to a specified position together. Again, you could write methods like "move arm to( float body angle, upper arm angle, lower arm angle )" or "move arm to( float x, float y, float z )".
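One common way to get joints to arrive together is to give the joint with the longest trip the top speed and slow the others down in proportion. The sketch below shows only that arithmetic, in plain C++ for testing on a PC; `syncSpeeds` and the three-joint layout are assumptions for illustration. The AccelStepper library's `MultiStepper` class is intended for this kind of coordinated move, so it may be worth a look before writing your own.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdlib>

constexpr std::size_t kArmJoints = 3;  // waist, upper arm, lower arm (assumed order)

// Given how far each joint has to travel (in steps, sign = direction) and the
// fastest speed any joint may use (steps per second), return a speed for each
// joint so that every joint takes the same time to arrive.
std::array<double, kArmJoints> syncSpeeds(
        const std::array<long, kArmJoints>& distances, double maxSpeed) {
    assert(maxSpeed > 0.0);

    // The joint with the longest trip sets the duration of the whole move.
    long longest = 0;
    for (long d : distances) {
        longest = std::max(longest, std::labs(d));
    }

    std::array<double, kArmJoints> speeds{};  // all zero by default
    if (longest == 0) {
        return speeds;  // nothing needs to move
    }

    const double travelTime = longest / maxSpeed;  // seconds
    for (std::size_t i = 0; i < kArmJoints; ++i) {
        speeds[i] = std::labs(distances[i]) / travelTime;
    }
    return speeds;
}
```

For example, trips of 1000, 500 and 250 steps at a top speed of 200 steps per second all take 5 seconds, with the shorter trips running at 100 and 50 steps per second. This ignores acceleration, so real moves will only be approximately in step.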

Same thing applies to the wrist and fingers moving together.

So, I hope you can see that there's a real point to "lumping" some steppers together as a team and providing commands, so that the brain (wherever it is located) can send sensible commands which the MEGA or ESP can perform without the brain having to know how each command is executed.

That is, the brain shouldn't need to know anything about HOW the steppers are used (or even what steppers or their characteristics), it just needs to be told what commands are available for its use. The brain then calculates how far to move, in what direction and where to position the arm and uses those methods to tell the MEGA where the brain wants it.
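To make the "commands available to the brain" idea concrete, here is a minimal sketch of a text command parser. The command names `WHEELS` and `ARM`, the `Command` struct and `parseCommand` are all invented for illustration. It is written in desktop C++ with `std::istringstream` so it can be tested on a PC; the standard library streams are not available on the MEGA's AVR toolchain, so on the board the same idea would need something like `strtok` or `sscanf` instead.

```cpp
#include <cassert>
#include <sstream>
#include <string>

enum class CommandType { MoveWheels, MoveArm, Unknown };

struct Command {
    CommandType type = CommandType::Unknown;
    double args[3] = {0.0, 0.0, 0.0};
};

// Parse one line such as "WHEELS 200 -200" or "ARM 10 45 30".
// Anything unrecognised, or with missing numbers, comes back as Unknown.
Command parseCommand(const std::string& line) {
    std::istringstream in(line);
    std::string name;
    Command cmd;
    in >> name;

    if (name == "WHEELS") {
        // Expect two numbers: left steps, right steps.
        if (in >> cmd.args[0] >> cmd.args[1]) {
            cmd.type = CommandType::MoveWheels;
        }
    } else if (name == "ARM") {
        // Expect three numbers: body angle, upper arm angle, lower arm angle.
        if (in >> cmd.args[0] >> cmd.args[1] >> cmd.args[2]) {
            cmd.type = CommandType::MoveArm;
        }
    }
    return cmd;
}
```

Whatever receives the command then switches on `cmd.type` and calls the matching motor function, so the sender never needs to know which steppers are involved.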

The same applies to the ESP but I don't know enough about it to suggest methods for it to define. Maybe something like "look in direction( float angle )", "is path clear()", "what object is it()" etc.

It's not always easy, but if you can think about what you will want the brain to be able to tell (and ask) the MEGA and ESP, it'll save a lot of time experimenting in blind alleys that go nowhere.

On the other hand, blind alleys are always where you learn the most 🙂

Anything seems possible when you don't know what you're talking about.

In C, a single equal sign (=) assigns a value, whereas two equal signs (==) test for equality and return TRUE or FALSE. In some other languages, the context determines how the = sign is used. In an if statement you are testing whether something is TRUE or FALSE.

    int A = 4;      // assigns a value to A
    int B = 3 + 6;  // assigns a value to B
    if (A == B) {   // returns TRUE or FALSE
      Serial.print("IT IS TRUE");
    } else {
      Serial.print("IT IS FALSE");
    }

You have to crawl before you can run. You are trying to do something complicated by trial and error instead of doing things one step at a time with full understanding. Taking the shortcut can end up taking longer. For controlling stepper motors, just start with two motors with a simple dial on each to see if they are working as desired. I only use the Serial port to pass data between a PC and the Arduino. I do not have an ESP32-CAM, so I can't help with that one. I would google for a solution.

I have recently acquired some of the stepper motors you have been using, so I will probably get around to building a simple (small) "arm" to play around with coding them to move the "hand" from position to position. My actual interest was to use them to control a camera to follow visual targets.

Excellent, sounds like you have a solid plan (and it's ambitious enough to keep you busy for a very long time 🙂). Now you just have to decide what questions the brain should ask and what commands it will need to operate the "limbs".

As an extra sensor, I'd suggest an HC-SR04. It's simple to use, inexpensive and you could put one on the front base of your robot to detect small obstructions in your path and also to determine how far away the object is. The camera may be able to tell there's a wall but may not be able to tell you how far away it is before you bump into it.
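The HC-SR04 reports distance as the length of an echo pulse, so the conversion is just the speed of sound times the pulse time, halved because the sound travels out and back. Below is a small sketch of that arithmetic in plain C++. The function names and the 20 cm clearance used in the example are assumptions, and treating "no echo" as "path clear" is a design choice you may not want.

```cpp
#include <cassert>

// Speed of sound in air at room temperature, about 343 m/s = 0.0343 cm per microsecond.
constexpr double kSpeedOfSoundCmPerUs = 0.0343;

// Convert the echo pulse length (microseconds) into a distance in centimetres.
// The pulse covers the trip to the obstacle and back, hence the divide by 2.
double echoToCentimeters(unsigned long echoMicros) {
    return echoMicros * kSpeedOfSoundCmPerUs / 2.0;
}

// A possible "is path clear()" built on top of it.
bool isPathClear(unsigned long echoMicros, double minClearanceCm) {
    assert(minClearanceCm >= 0.0);
    if (echoMicros == 0) {
        return true;  // assumption: 0 means no echo came back, so nothing is in range
    }
    return echoToCentimeters(echoMicros) > minClearanceCm;
}
```

On the Arduino, the pulse length would normally come from `pulseIn()` on the echo pin, which returns 0 if it times out. A 1000 microsecond echo works out to roughly 17 cm, so with a 20 cm clearance the path would count as blocked.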

I have already followed a few simple chatbot tutorials in both PyCharm and VS Code, and was starting a new one on neural net chatbots, but I haven't finished it yet.

I'm finding it fairly simple to build a chatbot that takes in a command and calls the desired function. Now I just need to figure out how to get the motor functions working with it.

> As an extra sensor, I'd suggest an HC-SR04

If that is the sonar sensor, I have one. It came in the kit with the UNO, and I was thinking of that too! I also bought the sensor kit for Arduino, so I have all of those sensors to play with. I also bought a 3-pack of 3-axis gyro and accelerometer sensors that I want to put in there too. I could put all 3 of those on the arm to detect location and angles.
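For the angle part, an accelerometer at rest measures gravity, so the tilt of whatever it is mounted on can be estimated from the three axis readings. The sketch below shows one common form of that calculation in plain C++. The axis orientation, the sign convention and the names `Tilt` and `tiltFromAccel` are assumptions, so the signs may need flipping for a real mounting.

```cpp
#include <cassert>
#include <cmath>

struct Tilt {
    double pitchDeg;  // nose up/down, in degrees
    double rollDeg;   // lean left/right, in degrees
};

// Estimate tilt from accelerometer readings taken while the sensor is still.
// The units do not matter (g, m/s^2 or raw counts) as long as all three match.
Tilt tiltFromAccel(double ax, double ay, double az) {
    assert(ax != 0.0 || ay != 0.0 || az != 0.0);  // need some gravity reading
    constexpr double kRadToDeg = 180.0 / 3.14159265358979323846;
    Tilt t;
    t.pitchDeg = std::atan2(-ax, std::sqrt(ay * ay + az * az)) * kRadToDeg;
    t.rollDeg  = std::atan2(ay, az) * kRadToDeg;
    return t;
}
```

This only holds while the arm is not accelerating, and gravity alone cannot give rotation about the vertical axis, which is where the gyro readings would have to come in.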

> My actual interest was to use them to control a camera to follow visual targets.

That is exactly what this arm is doing. The camera is the ESP32 on the end of the arm, and it needs to communicate with the Arduino MEGA to control the stepper motors so they follow the object.

Sounds like we are on the same wavelength. I'm trying to build the functions for the motor controls right now. All I'm trying to do is set up functions on the MEGA and get the ESP to call them.
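Since the two boards are wired together, one simple way for the ESP to "call" a function on the MEGA is to send one line of text per command and have the MEGA collect bytes until it sees a newline. The sketch below shows just the collecting part, in desktop C++ with `std::string` so it can be tested on a PC. `LineReader` and the 64-character limit are assumptions for illustration; on the MEGA a fixed `char` buffer would be the more usual choice.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Collects received bytes into lines. Feed it one byte at a time.
class LineReader {
public:
    // Returns true when a complete, non-empty line is ready in line().
    bool feed(char c) {
        if (ready_) {          // previous line has been handed over; start fresh
            buffer_.clear();
            ready_ = false;
        }
        if (c == '\r') {
            return false;      // ignore carriage returns
        }
        if (c == '\n') {
            if (buffer_.empty()) {
                return false;  // skip blank lines
            }
            ready_ = true;
            return true;
        }
        if (buffer_.size() < kMaxLength) {
            buffer_.push_back(c);  // extra characters beyond the limit are dropped
        }
        return false;
    }

    // Only valid right after feed() returned true.
    const std::string& line() const {
        assert(ready_);
        return buffer_;
    }

private:
    static constexpr std::size_t kMaxLength = 64;  // assumed longest command
    std::string buffer_;
    bool ready_ = false;
};
```

On the MEGA side the loop would call `feed()` with each byte from `Serial.read()` while `Serial.available()` is greater than zero, and pass each finished line to whatever picks the motor function to run.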