I've only had two formal programming classes.

I swear by "Learn C++ for Windows Programming in 21 Days". 🤣 Learned it over the summer (60 days) of unemployment, went in for my first job interview and nailed it.

I am impressed. That was one of the many C++ books I used to teach myself C++ but it never gave me the depth of understanding of the Windows OS I thought I would need to actually get a job as a programmer.

With retro all the rage, have you published?

Computer games never really interested me and I never played them so I lack the motivation to put in the hard work. It was just a social thing as other programmers using FreeBASIC at the beginning were into writing games. Writing simple games is a good way to learn to program.

I thought looking at code and reams of data would stack up there with watching paint dry.

Actually I do find trying to read other people's code difficult. I like it explained in a human language first.

However the data from the scanner can be copied and pasted to generate images to inspect to figure out how to use it to navigate instead of the "pure" distance data I used. I can add a random variation to the pure data before thinking about how to use it to create a plan for the robot to use in navigation.
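As a rough illustration of adding random variation to the "pure" distance data, here is a minimal Python sketch. The function name, the Gaussian noise model, the `sigma`, `max_range` and `dropout` values, and the use of metres are all assumptions for the example, not anything taken from the simulation itself.

```python
import random

def add_range_noise(ranges, sigma=0.02, max_range=8.0, dropout=0.0, rng=None):
    """Return a noisy copy of a list of clean distances (assumed metres).

    sigma     - standard deviation of the Gaussian error added to each reading
    max_range - assumed sensor limit; readings are clamped to [0, max_range]
    dropout   - probability that a beam returns nothing (reported as max_range)
    """
    if rng is None:
        rng = random.Random()
    noisy = []
    for r in ranges:
        if rng.random() < dropout:
            noisy.append(max_range)  # a missed return reads as "nothing seen"
            continue
        value = r + rng.gauss(0.0, sigma)
        noisy.append(min(max(value, 0.0), max_range))
    return noisy

if __name__ == "__main__":
    clean = [1.0, 2.5, 4.0]
    print(add_range_noise(clean, sigma=0.05, rng=random.Random(1)))
```

Passing a seeded `random.Random` makes a noisy run repeatable, which helps when comparing two navigation ideas on the same "bad" data.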

I will work on a better more realistic simulation today using a house plan with furniture.

Yet it seems to be practical enough to be used by commercial robot vacuum cleaners?

I'm not aware of this, but interesting. Maybe they use more than one 'beam' to compensate for the very narrow horizontal band that mine uses or they are engineered to have a wider beam? It could be they are not used for navigation purposes, but just as a quick short range check to sense if an obstacle has moved in to its current heading. Possibly they are quite small squat bots so a narrow lidar beam is OK for them. Do you know how they use their 360 degree lidar sensor?

I've just had a google of some robo vacs. They are indeed very small and squat and some high end ones do use lidar to map the space, just as shown in the dronebot video. There is also a nice example I remember on the SparkFun website where a chappie drives a cart by looking at the output of a lidar, and the James Bruton YouTube channel has some examples.

On my bot, which was similar to the one dronebot showed roving his yard, my 360 lidar was placed on top and I had a good map of the surroundings at the 25 cm-ish level but, of course, it failed to map any objects at a lower level. They work OK within the narrow horizontal beam, but would need supplementing by a plethora of other sensors. As I'm not so interested in indoor bots I put off further experiments. If you are ever on a trip to the UK I will happily gift it to you and I'm sure you will be able to make more use of it than me.

I am impressed. That was one of the many C++ books I used to teach myself C++ but it never gave me the depth of understanding of the Windows OS I thought I would need to actually get a job as a programmer.

Thank you, but don't be... in those days (early 90's) all you had to have was a pulse.

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

Possibly they are quite small squat bots so a narrow lidar beam is OK for them.

Objects have to touch the ground at some point so you can map at some low level. If a high robot tries to go under a table you could have bumpers to stop it. The nearest lidar detected wall ahead might be 3 feet beyond that point of collision which means the bumper has hit some high obstacle 3 feet from that wall and that can be added to the map. The opposite could also be the case where some high scanner looks over a table at the far wall as a reference point to record the position of the collision (table) on the lidar created map.
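The "3 feet from that wall" idea can be sketched in a few lines of Python. This is only an illustration: the function name is made up, the wall hit point is assumed to be already known in map coordinates, headings are assumed to be measured counter-clockwise from the map's +x axis, and the offset between the bumper and the lidar is ignored.

```python
import math

def bumper_hit_from_wall(wall_x, wall_y, heading_deg, wall_range):
    """Locate a bumper collision by stepping back from a known wall point.

    wall_x, wall_y - map position of the wall point the forward beam is hitting
    heading_deg    - robot heading when the bumper triggered (assumed CCW from +x)
    wall_range     - lidar distance to that wall at the moment of collision
    """
    heading = math.radians(heading_deg)
    hit_x = wall_x - wall_range * math.cos(heading)
    hit_y = wall_y - wall_range * math.sin(heading)
    return hit_x, hit_y

if __name__ == "__main__":
    # Wall point at (10, 5) feet, robot driving along +x, lidar reads 3 feet.
    print(bumper_hit_from_wall(10.0, 5.0, 0.0, 3.0))
```

The returned point is where the unseen obstacle would be recorded on the lidar-built map.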

I notice the recently posted CATHI robot has its lidar set high as well as having a camera. What does it do when it hits a low lying object on the floor?

dunno, perhaps it falls over, better ask CATHI 😀

UPDATE

I have finished assembling all parts to make it motivate.

- It includes all the sensors required for balancing.

- It does not include the Time of Flight sensor, servo, head and shoulders. I prefer to get it balancing reliably before attaching the head.

- I am using the same InqPortal library and the program that was written for Inqling Senior. It uses the same GUI for driving.

- It is easily throttled around, back, forth, turn on a dime.

- It takes advantage of the micro-stepping of the A4988. I have it set to default to 1/16th micro-stepping. I believe this will be necessary to allow for critical balance.

- In this mode, it is very slow... maxing out at about 0.4 km/hr.

- Micro-stepping can be turned off to run in full-step mode. In that mode it is quite manic and very hard to control. Maybe the computer controlled mode will have better control. In full-step mode it can reach a top speed of almost 7 km/hr. Eventually, I should be able to train it to follow me, and at this speed it'll easily keep up.

- Full telemetry of the accelerometer, gyroscope and magnetometer is being pumped out to the GUI for evaluation.

- I've just dink'd around with it for a few minutes and will be checking the systems out next week.
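The two speed figures in the list above are roughly what you would expect if the step pulse rate is capped at the same value in both modes: at 1/16th micro-stepping each pulse moves the wheel 1/16th as far, and 0.4 km/hr x 16 is about 6.4 km/hr. A small Python sketch of that arithmetic follows. The pulse rate, wheel diameter and 200 steps/rev motor are placeholder assumptions, not measurements from this robot.

```python
import math

def top_speed_kmh(step_rate_hz, microsteps, wheel_diameter_m, full_steps_per_rev=200):
    """Ground speed for a stepper-driven wheel at a given step pulse rate.

    microsteps is 1 for full-step mode, 16 for 1/16th micro-stepping.
    """
    revs_per_s = step_rate_hz / (full_steps_per_rev * microsteps)
    metres_per_s = revs_per_s * math.pi * wheel_diameter_m
    return metres_per_s * 3.6  # m/s to km/hr

if __name__ == "__main__":
    RATE_HZ = 1500   # hypothetical maximum pulse rate
    WHEEL_M = 0.08   # hypothetical wheel diameter
    print("1/16 step:", round(top_speed_kmh(RATE_HZ, 16, WHEEL_M), 2), "km/hr")
    print("full step:", round(top_speed_kmh(RATE_HZ, 1, WHEEL_M), 2), "km/hr")
```

Whatever the real pulse rate and wheel size are, the ratio between the two modes stays at 16 as long as the pulse rate limit is the same.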

I have created a short video (2:35) but it is almost a Gig. I plan to get out tomorrow and should be able to upload and link to it.

VBR,

Inq

- The left Chrome instance is plotting the three Gyroscopic readings (X,Y,Z) from the GY-89 sensor.

- The top one is plotting the three Acceleration readings.

- The bottom one is plotting the three Magnetometer readings.

Configuring these histograms is part of the Admin (InqPortal library) and no specific Sketch coding is required.

So soon it will learn to walk on its own two wheels without assistance 🙂

Are you going to put the TOF on the robot's head and send that data to the PC for viewing?

Yes, it goes in the little head. So far, I'm back to using a servo... but I have issues.

- It's right above the Magnetometer and I'm guessing it'll influence it.

- It'll only turn +/- 90 degrees.

Yes, the data will go back to the PC, be graphed and Excel tabulated for more detailed analysis. Eventually, I'd like it to come back real time and generate a 3D representation like your game while it trudges along.

VBR,

Inq

The electronics store charges $109 for the RPi4 and that doesn't include a monitor, battery or charger. Not sure how it compares with the little laptop I was using with its built in webcam, keyboard, screen, battery, charger and a load of development software I am familiar with.

https://www.jaycar.com.au/raspberry-pi-4b-single-board-computer-4gb/p/XC9100

I doubt you can get the RPi4 at that price these days here in AUS!

Jaycar is all out of stock, and who knows when that price was last valid.

Even on eBay.com.au from China, you can't get it at that price at the moment. Australia is a banana republic right now, and about to implode!

Don't get me started on the economic politics of today and our foolish reliance on imports instead of being self-sufficient, even though that might be more costly in the short term!

As for computing power, I have the laptop to play with until an RPi becomes affordable or even available.

It will be interesting to see what kind of data you get from the TOF.

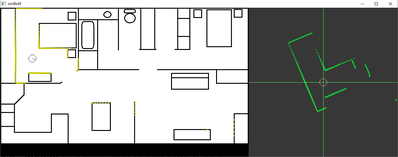

In the meantime I have been reading up about SLAM algorithms and wonder what kind of computing power must be in a robot vacuum cleaner to perform the task. I have updated the simulation to test out different algorithms to navigate and map a house. Although the "lidar" data is clean at the moment, I will add realistic noise to test those algorithms. Also the simulated robot has perfect odometry, which means I will have to add error to that as well to make sure the algorithms can work even if the robot is not exactly where it should be or even if it is moved physically by a human.
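One possible way to take away the perfect odometry is to keep two poses in the simulation: the one the robot believes (commands applied exactly) and the true one (commands applied with error). The Python sketch below is an assumption about how that could look. The function name, the turn-then-drive motion model and the error sizes are all made up for illustration.

```python
import math
import random

def drive(pose, distance, turn_deg, dist_err=0.0, turn_err_deg=0.0, rng=None):
    """Advance a pose (x, y, heading_deg): turn first, then move forward.

    dist_err     - std dev of the distance error as a fraction of the distance
    turn_err_deg - std dev of the turning error in degrees
    """
    if rng is None:
        rng = random.Random()
    x, y, heading = pose
    actual_turn = turn_deg + rng.gauss(0.0, turn_err_deg)
    actual_dist = distance * (1.0 + rng.gauss(0.0, dist_err))
    heading = (heading + actual_turn) % 360.0
    x += actual_dist * math.cos(math.radians(heading))
    y += actual_dist * math.sin(math.radians(heading))
    return (x, y, heading)

if __name__ == "__main__":
    rng = random.Random(42)
    believed = true = (0.0, 0.0, 0.0)
    for _ in range(20):
        believed = drive(believed, 0.5, 10.0)                   # what odometry reports
        true = drive(true, 0.5, 10.0, 0.05, 2.0, rng)           # where the robot really is
    print("believed:", believed)
    print("true:    ", true)
```

A human picking the robot up and moving it can be simulated by simply overwriting `true` while leaving `believed` alone.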

In the snapshot below the actual simulated world is on the left and the "lidar" data seen by the robot is on the right. When the robot turns the actual data on the right will rotate. My previous simulated lidar code I posted actually returns the x,y position of the hit point when in fact the only data you have is the direction and distance of the hit point so the x,y position has to be calculated before it is plotted.
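Turning a (direction, distance) reading into an x,y hit point is a polar to Cartesian conversion. Here is a minimal Python sketch of it. The function name and the angle convention (degrees, counter-clockwise from the +x axis, y pointing up) are assumptions; a screen with y pointing down would need the sign of the y term flipped.

```python
import math

def hit_point(robot_x, robot_y, robot_heading_deg, beam_angle_deg, distance):
    """Convert one lidar reading into the x,y position of the hit point.

    beam_angle_deg is measured relative to the robot's heading, so the
    plotted points rotate with the robot unless the heading is added in.
    """
    angle = math.radians(robot_heading_deg + beam_angle_deg)
    x = robot_x + distance * math.cos(angle)
    y = robot_y + distance * math.sin(angle)
    return x, y

if __name__ == "__main__":
    # Robot at the origin facing +x, beam 90 degrees to its left, hit at 2 units.
    print(hit_point(0.0, 0.0, 0.0, 90.0, 2.0))
```

Passing a heading of 0 for every reading gives the robot-centred view, where the data rotates as the robot turns.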

Yes, it goes in the little head. So far, I'm back to using a servo... but I have issues.

- It's right above the Magnetometer and I'm guessing it'll influence it.

- It'll only turn +/- 90 degrees.

As it's a 2 wheeled bot and thus will rock on its axis as the bot proceeds, I presume you will have to take its current tilt from the vertical into account when looking at the data received to produce a map. How are you going to incorporate that into your mapping? (that is presuming my presumer is working well 😎 ...)

Your presumer is mighty-fine. It ain't rock'n!

But my robot is going to be rock solid also! 🙄 🤨 ... said with total sarcasm in case the emoji didn't convey it well enough.

I've spent an enjoyable day today researching some balancing robots out there. Some only use an accelerometer and make some invalid assumptions. Some use a converted Drone library which sounds like way overkill. Some try to do it with only sensor data... using no smoothing, no filtering and certainly no Proportional-Integral-Derivative predictive ability. The point being... many of the YouTube examples we see are rocking and that may be because they are not fully optimized. Some even admit (as a post mortem) that using regular motors/encoders is a hindrance. Motors have very poor, non-linear response when the PWM is running in the 0.1% or 10% throttle range. I recall with my Chester robot, that at very low speed... one motor would work as expected while the other would stutter while not moving at all.
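For anyone following along, a textbook Proportional-Integral-Derivative loop looks something like the Python sketch below. It is a generic illustration, not the code from any of the robots mentioned, and the class name, gains and output limit are placeholders. For a balancer, the setpoint would be the upright angle, the measurement the current tilt, and the output a motor speed command.

```python
class PID:
    """Textbook PID controller with a clamped output (illustrative only)."""

    def __init__(self, kp, ki, kd, output_limit):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the very first sample

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt                      # I: accumulated error
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt  # D: rate of change
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.output_limit, min(self.output_limit, output))

if __name__ == "__main__":
    pid = PID(kp=20.0, ki=1.0, kd=0.5, output_limit=255.0)  # placeholder gains
    # Robot leaning 3 degrees back of upright, 10 ms loop time.
    print(pid.update(setpoint=0.0, measurement=-3.0, dt=0.01))
```

The derivative term is the "predictive" part: it reacts to how fast the tilt is changing rather than to how big it already is.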

I finally found a link that uses stepper motors, uses both the gyroscope and accelerometer (explaining why) and uses filtering, smoothing and PID to center on a solution. It also has a key feature I want... being able to re-balance after adding an off-center weight. 😎 Inqling 3rd is going to pick up stuff!
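One common way to combine a gyroscope and an accelerometer is a complementary filter: the gyro gives a smooth short-term angle but drifts when integrated, while the accelerometer has no long-term drift but is noisy and disturbed by the robot's own acceleration. The Python sketch below shows the general technique only. It is not taken from the thesis, and the axis assignment, the `alpha` value and the function names are assumptions.

```python
import math

def accel_pitch_deg(ax, az):
    """Pitch estimate from gravity alone (assumes x is forward, z is up)."""
    return math.degrees(math.atan2(ax, az))

def complementary_filter(prev_angle_deg, gyro_rate_dps, ax, az, dt, alpha=0.98):
    """Blend the integrated gyro rate with the accelerometer angle.

    alpha close to 1 trusts the gyro in the short term and lets the
    accelerometer slowly pull out the accumulated drift.
    """
    gyro_angle = prev_angle_deg + gyro_rate_dps * dt
    return alpha * gyro_angle + (1.0 - alpha) * accel_pitch_deg(ax, az)

if __name__ == "__main__":
    angle = 0.0
    # Gyro reports no rotation, accelerometer says the robot is tilted 45 degrees:
    for _ in range(500):
        angle = complementary_filter(angle, 0.0, 1.0, 1.0, 0.01)
    print(round(angle, 2))  # settles close to the accelerometer's 45 degrees
```

The filtered angle would then be the measurement fed to whatever control loop drives the wheels.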

I wanted to send him a thank-you, but it's an undergraduate thesis.

And even more telling... one of his references is... you guessed it... one of Bill's videos! Bill should be justifiably proud of his positive influence on the next generation! 👍 🖐️ https://www.diva-portal.org/smash/get/diva2:1462103/FULLTEXT01.pdf Us old farts here are just goofing off!

I'm hoping the author is a regular here! Maybe, he'll chime in and make us all experts! 😊 🤩

I've been modifying his code for my sensors (which are different), and I'm also using an ESP8266, which has a higher loop rate than an Arduino. I hope that between all these things, I'll have a more stable robot. Although, I never found a video of his working.

Now @byron - I did have a method to my madness! In the beginning, I did plan on having a separate pitch servo/stepper on the sensor and I was planning on making it adjust to stay level aka... Drone type camera stabilization. I finally thought... I'll be able to add the orientation data to the Math and just let it shotgun the whole area. 😋 Make sense?
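Adding the orientation data to the math could look something like the Python sketch below: take the Time of Flight distance, the servo pan angle and the body pitch, and rotate the reading into a level frame. This is a guess at the geometry rather than Inq's actual code. It assumes x forward, y along the axle, z up, positive pitch meaning a forward lean, the sensor sitting directly above the axle, and it ignores roll and heading.

```python
import math

def tof_point(distance, pan_deg, pitch_deg, sensor_height=0.0):
    """Return (x, y, z) of a ToF hit relative to the wheel axle, in a level frame.

    pan_deg       - servo angle, 0 = straight ahead (assumed CCW positive)
    pitch_deg     - body tilt from vertical, positive = leaning forward
    sensor_height - assumed distance of the sensor above the axle
    """
    pan = math.radians(pan_deg)
    pitch = math.radians(pitch_deg)
    # Hit point in the tilted body frame.
    bx = distance * math.cos(pan)
    by = distance * math.sin(pan)
    bz = sensor_height
    # Rotate about the axle (y axis) to undo the body pitch.
    x = bx * math.cos(pitch) + bz * math.sin(pitch)
    y = by
    z = -bx * math.sin(pitch) + bz * math.cos(pitch)
    return x, y, z

if __name__ == "__main__":
    # 2 m reading straight ahead while leaning 5 degrees forward, sensor 0.3 m up.
    print(tof_point(2.0, 0.0, 5.0, sensor_height=0.3))
```

With the robot rocking, each reading lands at a slightly different height, which is what lets a single beam "shotgun" an area instead of tracing one thin line.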

For me... this is the good part. The previous 8 pages, I file under the "No pain... no gain!" category.

VBR,

Inq
