@inq I am not sure if it helps, but the Tesla example is different in that your bot moves much more slowly and the map it needs to build is much less dynamic. So ideas that may not be good enough for Tesla etc. may be good enough for your use case. I suspect you know this, but thought I would mention it just in case.

First computer 1959. Retired from my own computer company 2004.

Hardware - Expert in 1401 and 360, fairly knowledgeable in PCs plus numerous MPUs and MCUs

Major Languages - Machine language, 360 Macro Assembler, Intel Assembler, PL/I and PL1, Pascal, Basic, C plus numerous job control and scripting languages.

Sure, you can learn to be a programmer; it will take the same amount of time as it would take me to learn to be a doctor.

@inq Just brainstorming. This reminds me of the follow-the-wall-with-your-right-hand maze solution; is that applicable here?

@inq FYI, I also use VNC exclusively now.

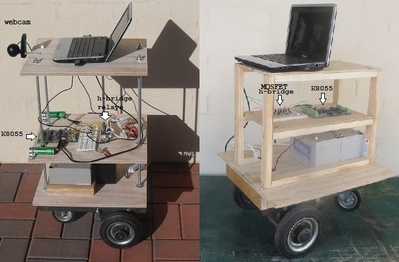

I just realized your avatar is a robot. I always thought it was a traveling workstation. How cool is that? Can you point me to a link about it? For example, how powerful a laptop, battery power, motors, etc.?

Sadly, one of the 24-volt windscreen motors died long ago and the robot base is no more. I connected it to the PC through a USB port using the K8055 interface, as there was no Arduino back then. I used a wireless keyboard to send commands to the PC to execute motor programs or just for simple remote control.

I had hoped to find a cheap electric wheelchair as a new base, but without any luck. The most recent was one for AU$800, but I felt I couldn't justify it, as I probably wouldn't get it doing anything useful. Building a real robot is now very much a pipe dream and of academic interest only, using a robot vacuum cleaner as a base for a proof of concept. However, I have done very little in the 3 years since joining this forum.

BTW, I've started using VNC Viewer for headless Raspberry Pis (since it's built in) and have clients on other RaspPis and on Windows. It works very well, much better than Windows Remote Desktop and/or XRDP. If you have a monitor on the remote machine, it stays active while being remote controlled... so you can use it as a teaching tool for someone at the remote end.

Yes, I noticed the term headless being used, which I assumed meant I could use my PC, with its screen, to remotely control an RPi that has no screen or keyboard of its own. However, I just hadn't gotten around to doing it. After a flurry of activity programming the RPi to turn on LEDs and read button switches, it now collects dust.

https://www.circuitbasics.com/access-raspberry-pi-desktop-remote-connection/

I now see your point... If I find a wall and move along it (keeping the scanner in the same orientation), the next "frame" at 15 Hz will still see the same wall, with only a couple of inches of new wall added at the far end.

No, I actually meant that you keep scanning 360 degrees, like lidar, to see the whole room. There is no need to figure out the position and orientation from wheel encoders or to follow any walls.

You should be able to recognize a straight wall, and its orientation to the robot's direction, by looking at the distance numbers. I could probably work out the algorithms for doing this. I was going to use sonar, which extends beyond the 4 m you mention, but just haven't gotten around to it.
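As a rough illustration of recognizing a straight wall from the distance numbers, here is a minimal Python sketch (not code from this thread). It assumes each reading is an (angle in degrees, distance) pair with 0° along the robot's heading, converts the readings that fall on a suspected wall to x,y points, and fits a line to them by total least squares. The hypothetical `fit_wall` helper returns the wall's angle relative to the heading, the perpendicular distance from the robot to the wall, and an RMS residual; a small residual suggests the points really do form one straight wall.

```python
import math


def scan_to_points(readings):
    """Convert (angle_deg, distance) pairs to robot-frame (x, y); x points along the heading."""
    return [(d * math.cos(math.radians(a)), d * math.sin(math.radians(a)))
            for a, d in readings]


def fit_wall(points):
    """Total-least-squares line fit through the points.

    Returns (angle_deg, distance, rms):
      angle_deg - wall direction relative to the robot's heading, folded into [0, 180)
      distance  - perpendicular distance from the robot (origin) to the fitted line
      rms       - RMS distance of the points from the line (small = straight wall)
    """
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points)
    syy = sum((p[1] - my) ** 2 for p in points)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    # Direction of greatest spread of the points = direction of the wall.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    # Unit normal to the wall.
    nx, ny = -math.sin(theta), math.cos(theta)
    distance = abs(mx * nx + my * ny)
    rms = math.sqrt(sum(((p[0] - mx) * nx + (p[1] - my) * ny) ** 2 for p in points) / n)
    return math.degrees(theta) % 180.0, distance, rms
```

Points taken across a corner give a large residual, which is one way to tell a single wall from two walls meeting.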

@inq Just brainstorming. This reminds me of the follow-the-wall-with-your-right-hand maze solution; is that applicable here?

That crossed my mind, except possibly always turning left (Nascar). 🤣

However, I also need to make sure it doesn't get stuck circling an island. I thought of driving it around the library (or a warehouse) and realized that if it started next to a shelving unit, it might never get away. I must make it able to sense that it has returned to zero (its starting point) and then turn in the opposite direction.
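The idea of sensing a return to the start can be sketched on a simple grid. The following Python sketch is a hypothetical abstraction, not Inqling Jr's code: `#` cells are walls or shelving, headings are the four compass directions, and `follow_wall` keeps a wall on the chosen hand. It reports a loop as soon as the robot re-enters a (row, col, heading) pose it has already occupied; because the rule is deterministic, a repeated pose means it would circle the same boundary forever, whether that is the room's outer wall or an island. What to do once a loop is detected (turning the opposite way, or a random change of direction) is left to the caller.

```python
# Hypothetical grid abstraction: '#' = wall or shelving, anything else = free.
# Headings: 0 = north, 1 = east, 2 = south, 3 = west (row index grows southward).
DELTAS = [(-1, 0), (0, 1), (1, 0), (0, -1)]


def is_free(grid, r, c):
    return 0 <= r < len(grid) and 0 <= c < len(grid[r]) and grid[r][c] != "#"


def follow_wall(grid, start, heading, hand="left", max_steps=10_000):
    """Follow a wall with the chosen hand until a pose repeats or max_steps runs out.

    Returns (path, looped). looped is True when the robot re-enters a
    (row, col, heading) pose it has already been in, i.e. it is circling.
    """
    side = 3 if hand == "left" else 1           # 3 = quarter turn left, 1 = quarter turn right
    preference = [side, 0, (side + 2) % 4, 2]   # hand side first, then straight, other side, back
    r, c = start
    seen = {(r, c, heading)}
    path = [(r, c)]
    for _ in range(max_steps):
        for turn in preference:
            h = (heading + turn) % 4
            nr, nc = r + DELTAS[h][0], c + DELTAS[h][1]
            if is_free(grid, nr, nc):
                r, c, heading = nr, nc, h
                break
        else:
            return path, False                  # boxed in: no free neighbour at all
        path.append((r, c))
        if (r, c, heading) in seen:             # same pose again: the motion now repeats
            return path, True
        seen.add((r, c, heading))
    return path, False
```

Tracking every pose rather than only the starting one matters because the robot may pass back through its starting cell facing a different direction.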

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

@inq OR if you return to the start, just throw in a random change of direction and go from there.

I had hoped to find a cheap electric wheelchair as a new base, but without any luck. The most recent was one for AU$800, but I felt I couldn't justify it, as I probably wouldn't get it doing anything useful. Building a real robot is now very much a pipe dream and of academic interest only, using a robot vacuum cleaner as a base for a proof of concept.

You were doing it long before it became (relatively) easy with the Arduino/RasPi world. I remember back in the '90s wanting to interface my computers with the real world. Even though I was relatively flush in my career, I could never justify the thousands of dollars for a PC-based board to do what a Raspberry Pi does (with the CPU built in) for less than $10. Now, even total hardware noobs like me can make something almost usable.

I saw your posts about repurposing a robot cleaner. I got an early Roomba that went belly up, and iRobot didn't honor their own warranty. I kept the beast thinking I'd like to use it as you suggest. I kept it for years, but finally pitched it in my last move, when I was severely downsizing. With my lack of hardware skills, I found it too challenging to figure out which wire was which, and I'd never know whether a part was broken or I was just misusing it.

I find it easier to just cookbook things together from other people's work. Besides, I don't think I could find a broken Robo Vac around here to save money. I don't feel that much pain from the pieces I've got in Inqling Jr.

I've tallied it up and, considering I splurged on the ToF sensor at $25, I've got a total of about $65 in it. That's even counting the plastic and the miscellaneous hardware. I buy a lot of things in 3s, 5s and even 10s if I think I'll use them in some other project or smoke 'em. So that total isn't accurate for someone who only wanted to buy singles.

VBR,

Inq

You were doing it long before it became (relatively) easy with the Arduino/RasPi world.

I remember back in the '90s wanting to interface my computers with the real world. Even though I was relatively flush in my career, I could never justify the thousands of dollars for a PC-based board to do what a Raspberry Pi does (with the CPU built in) for less than $10. Now, even total hardware noobs like me can make something almost usable.

The electronics store charges $109 for the RPi4, and that doesn't include a monitor, battery or charger. I'm not sure how it compares with the little laptop I was using, with its built-in webcam, keyboard, screen, battery, charger and a load of development software I am familiar with.

https://www.jaycar.com.au/raspberry-pi-4b-single-board-computer-4gb/p/XC9100

Since learning to program and interface electronics to my first computer, a TRS-80 (back in 1980), I have done so with every computer I have owned up to today. I didn't have a career in electronics or programming; it is just a self-taught occasional hobby.

Besides, I don't think I could find a broken Robo Vac around here to save money.

I actually bought the robot base I am using for $100 new, as it was being replaced by more clever robots. That is a lot cheaper than buying inferior parts from a robot shop! The cheapest I can find at the moment is the $89 Mistral Robovac. It is smaller than the usual vacs and its wheels are not at the exact center, so I am not sure how that works.

https://auspost.com.au/shop/product/mistral-robovac-white-81447

The electronics store charges $109 for the RPi4

I bought all my RaspPis before the Big Gouge! I got an RPi4 w/ 8 GB for $55 and six RaspPi Zero Ws for $5 each (the old single-core ones). I just looked on eBay... and see they're going for $55 (USED)!!!

What the hell is going on out there in the world?

Fortunately, my ESP8266s are still only $3 apiece!

What the hell is going on out there in the world?

I just paid $107 to fill up my old Toyota Camry so compared with that price ...

The title of the thread was robot mapping, vision and autonomy, subject matter for any robot. Realising it with a self-balancing robot is fine, and indeed I think a Segway-style robot base would fit well in a domestic environment. But it was a side issue to the title of the thread, and to what I thought the thread was about, which could apply to any robot base.

That's true, but I was questioning your motives based on your following statement:

The reason I wouldn't bother with a self-balancing robot is because it complicates things and my interest was AI, not the ability to balance.

Your negating comment is quite clear in its intention.

BTW... when was this topic about your interest in AI, as opposed to the OP's interest in the ability to balance a robot?

Cheers

I feel the self-balancing aspects are completely secondary and are only there to get a head start on features I want for Inqling 3rd. As self-balancing is readily done by other Internet robots, I consider it on the same level as using an A4988 to drive the stepper motors. The balancing code, if I choose to download others' work, is nearly plug-and-play in the grand scheme of things. At some point going forward, I will need to roll my own, as the standard versions available make some assumptions that are invalid for my future needs.

The primary goals for this project (this thread) are precisely: Robot Mapping, Vision, Autonomy (MVA). I have spent only two weeks on the chassis design, printing and electronic design, and hope to get power turned on this weekend if time permits... balancing and moving under R/C control next week.

The fact that I haven't even broached the MVA aspects so far may make this thread seem unrelated. That will be corrected in due course for Inqling Jr.

The bulk of the time going forward will be spent purely on software for the MVA aspects: vision with the ToF sensor, mapping and massaging the data, and finally turning it on at a random location in a pre-mapped area, letting it decide where it is, and telling it to go to some other location of a human's choice. I expect there will be much experimentation and trial and error, and that the coding time will far exceed the two weeks completed so far. I expect multiple months. Once in that phase, I plan to show all those failings. Some will be for comic relief; for others, I hope to get constructive feedback from people who have done this kind of thing before.

Also note: if balancing becomes an issue, it is a simple 3D print to put a tail-skid on the current design and eliminate any balancing code requirements. If I feel the balancing is affecting progress on the MVA, I'll kick it to the side of the road in a heartbeat.

BTW... when was this topic about your interest in AI, as opposed to the OP's interest in the ability to balance a robot?

My post was not about my interest in AI. I thought it was about Inq's interest in AI, from the title "robot mapping, vision and autonomy", which I put under the umbrella of a machine showing intelligent behavior.

Unfortunately, I have never built a self-balancing robot, although I think it would be a great project, so I have found Inq's project interesting to follow.

My post was not about my interest in AI. I thought it was about Inq's interest in AI, from the title "robot mapping, vision and autonomy", which I put under the umbrella of a machine showing intelligent behavior.

I am also very interested in AI and read the extensive thread here on the forum where you and @robo-pi intellectually debated the subject and its definition. I noted your very strict definition of AI and consciously used "Autonomy" in the title here because of it. 🤣

I may regret this, but I would probably use a far weaker definition (as a baby step toward yours).

A program that takes in external data (sensor or Internet data) and makes a decision (no matter how mundane) AND also uses that data to adjust its decision process, so that future incoming data may arrive at a different answer... is AI.

With this simplistic definition, Inqling Jr is using AI. It'll "sense" geometric data of its surroundings, adjust some internal model of boundaries, and make decisions about how to go to a specific point. It will combine memory of the environment with its current surroundings as it moves through them to verify that it is where it expects to be. It will also adjust its internal model if it finds new obstructions.
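One common way to keep an "internal model of boundaries" that changes when new obstructions turn up is an occupancy grid. The Python sketch below is an assumption about how that could look, not Inq's design: each range reading is traced with Bresenham's line algorithm, the cells the beam passed through get evidence of free space, and the cell where it stopped gets evidence of an obstacle, accumulated as clamped log-odds. The increments, clamp limits, cell size and 0.7 threshold are illustrative values only.

```python
import math

# Illustrative log-odds constants (assumptions; a real sensor would need tuning).
L_OCC, L_FREE, L_MIN, L_MAX = 0.9, -0.4, -4.0, 4.0


def bresenham(x0, y0, x1, y1):
    """Grid cells on the segment from (x0, y0) to (x1, y1), both ends included."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


class OccupancyGrid:
    """width x height cells of cell_size metres; log-odds 0 means 'unknown' (p = 0.5)."""

    def __init__(self, width, height, cell_size):
        self.w, self.h, self.cell = width, height, cell_size
        self.logodds = [[0.0] * width for _ in range(height)]

    def to_cell(self, x, y):
        return math.floor(x / self.cell), math.floor(y / self.cell)

    def _add(self, cx, cy, delta):
        if 0 <= cx < self.w and 0 <= cy < self.h:
            v = self.logodds[cy][cx] + delta
            self.logodds[cy][cx] = max(L_MIN, min(L_MAX, v))

    def update(self, robot_x, robot_y, bearing_deg, distance):
        """Integrate one range reading taken at (robot_x, robot_y) along a world-frame bearing."""
        hit_x = robot_x + distance * math.cos(math.radians(bearing_deg))
        hit_y = robot_y + distance * math.sin(math.radians(bearing_deg))
        ray = bresenham(*self.to_cell(robot_x, robot_y), *self.to_cell(hit_x, hit_y))
        for cx, cy in ray[:-1]:
            self._add(cx, cy, L_FREE)   # beam passed through: evidence of free space
        self._add(*ray[-1], L_OCC)      # beam stopped here: evidence of an obstacle

    def occupied(self, cx, cy, threshold=0.7):
        p = 1.0 - 1.0 / (1.0 + math.exp(self.logodds[cy][cx]))
        return p > threshold
```

Because evidence accumulates, a box dropped into a known corridor flips from free to occupied after a few consistent readings, while a single spurious echo does not. A reading at the sensor's maximum range (no echo) should probably only clear cells, which this sketch does not handle.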

It's as big a task as I am able at this stage in my learning curve.

VBR,

Inq

It'll "sense" geometric data of its surroundings, adjust some internal model of boundaries, and make decisions about how to go to a specific point. It will combine memory of the environment with its current surroundings as it moves through them to verify that it is where it expects to be. It will also adjust its internal model if it finds new obstructions.

And if you study the input from the sensors, you might be able to work out the code to do all that.

Probably worth starting another thread when you get to that stage.

Are you going to program this yourself at the low level, or are you going to use SLAM (simultaneous localization and mapping) libraries to do the magic for you?

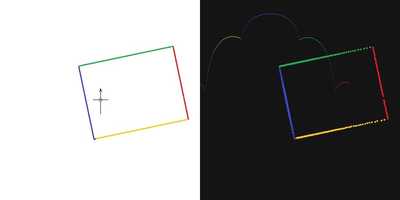

I program at a low level, meaning I write my own solutions. For example, let's say the robot is in a rectangular box and wants to know its orientation relative to that box and its position within it.

It scans 360 degrees using, say, sonar or lidar to find its distance from the walls and other objects. Using that data, it can compute its current position and orientation.

On the left of the image is the simulation. The robot scans 360 degrees and plots the distance to the wall for each angle. I coloured the walls to make them easier to see, although I guess returning the colour as well as the distance is an option. On the right is a plot of the distance and relative position of the obstacle (a wall in this case) to the robot.

So the closest yellow point is 88 pixels away and the closest blue point is 33 pixels away, which gives the position of the robot relative to those two walls. But are they walls? The x,y positions of any three points can be checked to see whether they lie in a straight line. The angle of that line determines the orientation of the robot relative to that wall. From the four closest measurements, the dimensions of the box (room?) can be determined, and these might be one of the attributes useful in identifying which room the robot is in.
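The rectangular-box reasoning can be sketched in Python. This is an illustration under stated assumptions, not robotBuilder's simulation: the room is an empty rectangle, the scan holds one distance per degree with 0° along the robot's heading, and the hypothetical `simulate_scan` stands in for real sonar or lidar. The closest reading is taken to be perpendicular to the nearest wall, so its bearing gives the robot's orientation to that wall; three points around it are checked for collinearity; and the readings at 90° steps give the distances to all four walls and hence the room's dimensions.

```python
import math


def simulate_scan(width, height, x, y, heading_deg):
    """One distance per degree (0 = robot heading, counter-clockwise) from (x, y)
    to the walls of an empty width x height box; a stand-in for a real sweep."""
    scan = []
    for a in range(360):
        t = math.radians(heading_deg + a)
        dx, dy = math.cos(t), math.sin(t)
        hits = []
        if dx > 1e-12:
            hits.append((width - x) / dx)
        elif dx < -1e-12:
            hits.append(-x / dx)
        if dy > 1e-12:
            hits.append((height - y) / dy)
        elif dy < -1e-12:
            hits.append(-y / dy)
        scan.append(min(hits))
    return scan


def to_xy(scan, a):
    """Robot-relative (x, y) of the reading at bearing a degrees; x points along the heading."""
    t = math.radians(a % 360)
    d = scan[a % 360]
    return d * math.cos(t), d * math.sin(t)


def collinear(p, q, r, tol=1.0):
    """True if q lies within tol of the line through p and r."""
    cross = (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])
    return abs(cross) / math.dist(p, r) <= tol


def locate_in_box(scan, spread=5):
    """Assumes an empty rectangular room.

    Returns (bearing_to_nearest_wall_deg, [four wall distances at 90-degree steps],
    (room_dim_a, room_dim_b)), or None if the nearest readings are not a straight wall.
    """
    a = min(range(360), key=lambda i: scan[i])  # closest reading is perpendicular to its wall
    if not collinear(to_xy(scan, a - spread), to_xy(scan, a), to_xy(scan, a + spread)):
        return None
    d = [scan[(a + k * 90) % 360] for k in range(4)]
    return a, d, (d[0] + d[2], d[1] + d[3])
```

For example, `locate_in_box(simulate_scan(200, 100, 60, 40, 10))` reports the nearest wall at a bearing of 260° and wall distances of 40, 140, 60 and 60, giving a 100 by 200 room. Furniture or doorways would break the empty-rectangle assumption, so a real robot would need to check more than three points.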

Once it has its coordinates it can then use the map and the goal coordinates to compute the shortest path.
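For the shortest-path step, a breadth-first search over a grid map is one simple option; Dijkstra or A* would also work. The Python sketch below assumes a hypothetical map of strings in which `#` marks blocked cells and the robot moves only between the four neighbouring cells. It returns the list of cells from the start to the goal, or `None` if the goal cannot be reached.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid ('#' = blocked).

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#" and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

BFS minimizes the number of grid steps; if diagonal moves or varying costs (e.g. staying clear of walls) matter, Dijkstra or A* is the natural replacement.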

Non-player characters in computer games need this kind of AI to decide where to move next. Essentially they are simulated robots in a simulated world, but they still need to know where they are and which direction to move in to reach some goal position, just like a real robot. The logic is the same. Think of soccer-playing robots: their goal is to get to the ball, on the side opposite the goal, and give it a good kick.