I'm also not sure how much ROS will be a substitute for A.I.

My understanding of how ROS works is that it's basically a middleman between the intelligence layer and the "action" layer.

Looking at some of the code I'm using, the action items are basically just "left motor" or "right motor", with no regard for which motor controller is being used.

So it's kind of using its own MQTT-like messaging to act as a PUBLISHER (following what Bill is doing, I'm assuming the communication here will be via I2C), and the Arduino connected to the motor controller is the SUBSCRIBER.

Then the sensors, which will probably be connected to the Arduino, will send data (again via I2C) to the intelligence layer (not sure about this one, maybe the Pi 4?), with the Arduino now acting as the PUBLISHER and the intelligence layer as the SUBSCRIBER. The intelligence layer then decides which action to take, at which point it becomes a PUBLISHER again, sending messages to the motors via the Arduino.

See? Simple. Probably completely wrong, but simple nonetheless 🙂
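To make the publisher/subscriber round trip described above concrete, here is a minimal in-memory sketch. It is not ROS, MQTT, or I2C code; it only models the message flow. The Broker class, the topic names "sensors/range" and "motors/cmd", and the 20 cm obstacle threshold are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# Hypothetical in-memory broker standing in for ROS topics or an MQTT broker.
class Broker:
    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[dict], None]) -> None:
        self._subs[topic].append(callback)

    def publish(self, topic: str, msg: dict) -> None:
        # Deliver the message to every subscriber of this topic.
        for callback in self._subs[topic]:
            callback(msg)

broker = Broker()
motor_log: List[dict] = []  # stands in for commands forwarded to the motor controller

# Intelligence layer (e.g. the Pi 4): subscribes to sensor data, publishes motor commands.
def intelligence(msg: dict) -> None:
    if msg["distance_cm"] < 20:  # hypothetical obstacle threshold
        broker.publish("motors/cmd", {"left": -0.5, "right": 0.5})  # turn away
    else:
        broker.publish("motors/cmd", {"left": 0.5, "right": 0.5})  # drive forward

# Arduino side as SUBSCRIBER: only knows "left" and "right", not which controller is used.
def arduino_motors(msg: dict) -> None:
    motor_log.append(msg)

broker.subscribe("sensors/range", intelligence)
broker.subscribe("motors/cmd", arduino_motors)

# Arduino side as PUBLISHER of sensor readings.
broker.publish("sensors/range", {"distance_cm": 50})
broker.publish("sensors/range", {"distance_cm": 10})
print(motor_log)
```

The point of the pattern is that neither side calls the other directly; each only knows topic names, which is what lets the action layer stay ignorant of the specific motor controller.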

Yes, GoogLeNet is used by the Nano.

But it is good; it recognises things in great detail, including Indian dresses like the "kurta"!

But if there are many objects in the surroundings and it tries to recognize them all, the processor gets super hot and the system shuts down automatically.
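One way to avoid hitting that thermal shutdown is to check the chip temperature inside the recognition loop and skip frames when it runs hot. The sketch below reads the standard Linux sysfs thermal zones (reported in millidegrees Celsius); which zones the Nano exposes, and the 70 °C limit, are assumptions to check on your own board, not NVIDIA's figures.

```python
import glob
import os
from typing import List

def parse_millidegrees(text: str) -> float:
    """Linux sysfs thermal zones report temperature in millidegrees Celsius."""
    return int(text.strip()) / 1000.0

def read_temps_c(base: str = "/sys/class/thermal") -> List[float]:
    """Return the temperature (deg C) of every readable thermal zone under `base`."""
    temps: List[float] = []
    for path in sorted(glob.glob(os.path.join(base, "thermal_zone*", "temp"))):
        try:
            with open(path) as f:
                temps.append(parse_millidegrees(f.read()))
        except (OSError, ValueError):
            continue  # skip zones that can't be read or parsed
    return temps

def should_skip_frame(temps: List[float], limit_c: float = 70.0) -> bool:
    """Hypothetical throttle rule: pause recognition while any zone exceeds limit_c."""
    return any(t > limit_c for t in temps)

if __name__ == "__main__":
    temps = read_temps_c()
    print(temps, "skip this frame" if should_skip_frame(temps) else "run recognition")
```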

Some funny output too... I was wearing a striped T-shirt and it output "Prison Dress" LOL

My understanding of how ROS works is that it's basically a middleman between the intelligence layer and the "action" layer.

That's my current impression as well. And I simply have no need for a middleman in my own personal robotics projects. All the middleman would amount to for me would be a whole lot of unnecessary bloatware to bog down the system. I'm far better off just writing all the communications software myself.

But I agree that ROS may actually be a paramount feature in an industrial setting where technicians may need to interface between devices they don't know much about. ROS would make interfacing those modules easy for the technicians.

As an example, in Bill's DB1 project, to use ROS, he would need to install ROS on his motor controller chips, and then again on his Arduino Mega, and then again on his Raspberry Pi, etc. Why bother with all that extra software when in this particular case it's actually easier to just write the communications methods directly?
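For comparison, writing the communications "directly" can be as small as agreeing on a fixed byte frame between boards. This sketch encodes a two-motor speed command with an XOR checksum; the 4-byte layout, the 0xAA start marker, and the -100..100 speed range are hypothetical choices, not Bill's DB1 protocol. The resulting bytes could be sent over I2C or serial with whatever library each board already uses.

```python
import struct
from typing import Optional, Tuple

START = 0xAA  # hypothetical start-of-frame marker

def encode_motor_cmd(left: int, right: int) -> bytes:
    """Pack two motor speeds (-100..100) into a 4-byte frame: start, left, right, checksum."""
    if not (-100 <= left <= 100 and -100 <= right <= 100):
        raise ValueError("speeds must be in -100..100")
    body = struct.pack("<bb", left, right)  # two signed bytes
    checksum = body[0] ^ body[1]
    return bytes([START]) + body + bytes([checksum])

def decode_motor_cmd(frame: bytes) -> Optional[Tuple[int, int]]:
    """Return (left, right), or None if the frame is malformed or fails the checksum."""
    if len(frame) != 4 or frame[0] != START:
        return None
    body, checksum = frame[1:3], frame[3]
    if (body[0] ^ body[1]) != checksum:
        return None
    left, right = struct.unpack("<bb", body)
    return left, right

print(encode_motor_cmd(50, -50).hex())  # aa32cefc
```

A real link would also need to resynchronize on a continuous byte stream (0xAA can also appear as a speed value), which is the kind of plumbing a middleware layer otherwise takes care of for you.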

I could be wrong about all this. I'll have to wait and see how things unfold in the DB1 project.

But as it appears to me right now, ROS is just a lot of extra baggage that simply isn't needed. My goal is to remove as much software as possible, not to bog my systems down with tons of software I don't even need.

Just my thoughts for whatever they're worth. Like I say, I could have this all wrong. I'll have to wait and see how things unfold.

DroneBot Workshop Robotics Engineer

James

But if there are many objects in the surroundings and it tries to recognize them all, the processor gets super hot and the system shuts down automatically.

Yes, they still have a lot of bugs to work out. Obviously they must have found a way to deal with a large number of images for self-driving cars.

I also noticed in a review by Explaining Computers that every time there was nothing in the scene, the Nano reported "Jellyfish". This is another known problem with ANNs: they always have to output something. I would think they could also train it to recognize a blank background. But maybe not?
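The "Jellyfish" behaviour follows from how a classifier's final softmax layer works: it turns raw scores into probabilities that always sum to 1, so the top class always wins something, even for an empty scene. This sketch shows that, plus a simple confidence-threshold workaround; the labels, scores, and 0.5 cutoff are made up for illustration, and the alternative suggested above (training on blank backgrounds) is not shown.

```python
import math
from typing import List, Sequence

def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(logits: Sequence[float], labels: Sequence[str], min_conf: float = 0.5) -> str:
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    # The argmax always names *some* class; the threshold lets us say "nothing" instead.
    return labels[best] if probs[best] >= min_conf else "nothing recognized"

labels = ["jellyfish", "tabby cat", "kurta"]
print(classify([0.2, 0.1, 0.0], labels))  # near-uniform scores -> nothing recognized
print(classify([0.1, 4.0, 0.3], labels))  # one clear winner -> tabby cat
```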

RE: HEADLESS remote graphical access to nano from laptop

I run the Jetson Nano completely headless from my Wi-Fi-connected Windows 10 laptop. This includes viewing the Jetson graphical desktop and all GUI programs, like Visual Studio Code and all the cv2 camera ML Python programs. It took me many hours of research, but here is how I did it; it is dead simple:

1. Install the remote desktop server on the Nano from a Nano terminal: sudo apt install xrdp

2. Install Microsoft Remote Desktop (from the Microsoft Store) on your Windows 10 machine.

3. Configure the Remote Desktop settings from step 2 (straightforward), then run Remote Desktop. MAKE SURE YOU ARE NOT ALREADY LOGGED INTO THE NANO WHEN YOU CONNECT REMOTELY - it will fail if you are.

4. This setup runs very fast with almost no latency, but I have an i7 Windows laptop. My only remote connection issue is getting the audio from the Nano onto my laptop; I need to dig deeper into the settings to get remote audio, but it's not really needed for ML with the camera. And yes, it is using the Nano's GPU cores, not the CPU, for cv2 ML.
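If Remote Desktop refuses to connect, a quick first check is whether xrdp is actually listening on the Nano. This small sketch, run from the laptop, just tries a TCP connection to port 3389 (xrdp's default RDP port). It only shows the port is reachable, not that a login will succeed; for example, it won't catch the "already logged in" problem from step 3.

```python
import socket

def rdp_port_open(host: str, port: int = 3389, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and unknown hostnames
        return False

# Example (hypothetical address; use your Nano's IP or hostname):
# print(rdp_port_open("192.168.1.50"))
```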

Random notes I wish I had learned sooner about the nano:

1. Install Visual Studio Code on the Nano (called code-oss), as shown on Paul McWhorter's YouTube channel, to use as your Python IDE.

2. I don't bother with wireless on the Nano; I just plug it into Ethernet on my router. But I still access it over Wi-Fi from the laptop.

3. As you discovered, Paul McWhorter's YouTube channel on the Nano is fantastic. He does not run the Nano headless with a graphical interface; he uses a mouse, keyboard, and monitor. I find that very limiting. My headless remote connection works great.

4. Connect a cheap SSD to one of the Nano's USB 3.0 ports. While you still need the SD card to boot, the SSD can hold the root filesystem and handle the majority of the boot process. You also get much faster, more reliable data transfer and storage. SD cards are a weak link on the Nano (and the Raspberry Pi). The JetsonHacks YouTube channel describes how to do it; very worthwhile.

5. The Jupyter notebook server is built into the Nano and can also be used as a graphical interface from a remote Windows machine: you access the Nano through a Jupyter notebook from your laptop. It works well too, but I prefer Visual Studio Code (code-oss).

6. Keep a backup SD card image for your Jetson; SD cards get corrupted easily and have a limited life.
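Note 6 can be partly automated. A minimal sketch, assuming a Linux machine with the SD card inserted but not mounted: it copies the raw card (or an existing .img file) in chunks and hashes both the source and the copy, so matching digests mean the backup is byte-identical. The device path /dev/sdX is a placeholder; reading a raw device needs root, and the destination file is overwritten, so double-check both paths. Tools like dd do the same copy; the hashing is the extra safety step.

```python
import hashlib
from typing import Tuple

CHUNK = 4 * 1024 * 1024  # read/write 4 MiB at a time

def _sha256_of(path: str) -> str:
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(CHUNK), b""):
            h.update(chunk)
    return h.hexdigest()

def backup_image(src: str, dst: str) -> Tuple[str, str]:
    """Copy src (an SD card device or .img file) to dst; return (src_sha256, dst_sha256)."""
    src_hash = hashlib.sha256()
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        for chunk in iter(lambda: fin.read(CHUNK), b""):
            src_hash.update(chunk)
            fout.write(chunk)
    # Hash the copy in a separate pass. The OS page cache may serve this read,
    # so this verifies the copy's contents, not the health of the storage media.
    return src_hash.hexdigest(), _sha256_of(dst)

# Example (run as root with the card unmounted; /dev/sdX is a placeholder):
# print(backup_image("/dev/sdX", "jetson-nano-backup.img"))
```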

Sorry if this doesn't make sense. I have spent many hours researching how to set up and get the full potential of my Jetson nano. Good luck if you persist with this project.

Thanks for the detailed reply, Carter. I was hoping to go headless right off the bat, but ran into the problem that I couldn't even access the Nano over SSH because of the license agreement on the Nano: it required agreement to the license before it would even allow SSH access. So I went out and bought a keyboard and monitor for it. Now I'm addicted to the large 22" HDMI monitor anyway.

However, I am thinking about buying a second Nano. If I do that I'll definitely want to try going headless on the second Nano as you suggest.

Based on what you've described, it sounds basically the same as going headless on the Raspberry Pi. I already have all my Raspberry Pis set up for headless operation, so I'll give this a shot when I purchase my second Nano.

As you discovered, Paul McWhorter's YouTube channel on the Nano is fantastic.

Yes, I'm really glad he chose this topic. I like the way he approaches the lessons and covers every detail so that no one is left behind. I'm looking forward to seeing what he does with this.

Just finished lesson 11 on Paul McWhorter's YT Jetson channel...getting very interesting now. And all done headless with my laptop from the back yard deck. Good luck!

I've watched all the lessons up to this point, but for reasons too complicated to get into I haven't been able to actually follow along on the Nano. So I'm going to need to play a lot of catch-up. But I think that should go pretty quickly because I'm already familiar with Python, Matplotlib, NumPy, etc. I just haven't had time to sit down and actually install all this software. But that's about to happen very soon. So I should be able to catch up to lesson 11 very quickly, and I'm looking forward to that because I'm anxious to see how the Raspberry Pi camera works. I haven't even tried my camera out yet.

By the way I have my camera mounted on the top of the Nano case which isn't actually very convenient for me. I'm wondering if there is a way to have the camera attached via a much longer and perhaps more flexible cable?

I'd like to be able to move the camera around more freely.

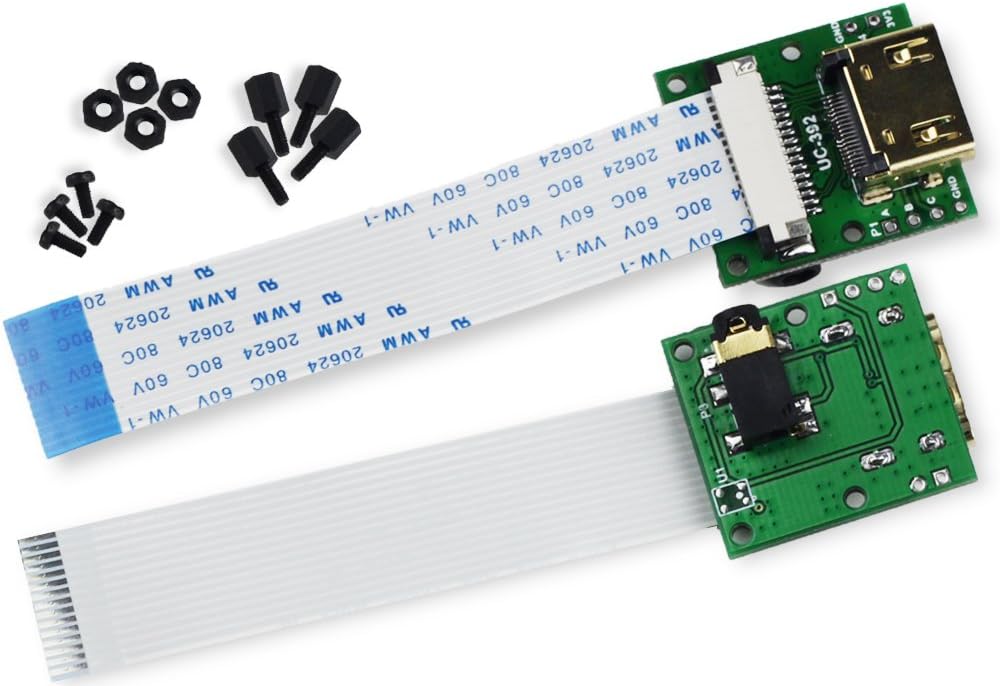

I just found this: I guess this would be the best way to go. Mount one of these to the Jetson Nano and the other to the camera, and then just use an HDMI cable in between.

HDMI extension cable adapter for Raspberry Pi Camera

This is what I use; it works fine with any Raspberry Pi camera and the Jetson Nano or RPi.

Lesson 11 is where it starts to actually get into ML. Very exciting. Good luck!

This is what I use; it works fine with any Raspberry Pi camera and the Jetson Nano or RPi.

That's a very economical solution. I ended up ordering the conversion kit to HDMI along with a very flexible 15' long cable. It's overkill to be sure, but I'll certainly have a lot of freedom in moving the PiCam around. I'll be able to put it on a tripod anywhere in the room. So that might prove to be useful. But it also cost me about $30 for the whole shebang.

Lesson 11 is where it starts to actually get into ML. Very exciting. Good luck!

I still have a lot of catching up to do, but I did watch lesson 11 just now, and I see at the end he promises to cover recognizing specific individuals. That's one of the main reasons I'm interested in the Jetson Nano, so I'm really glad to hear he'll be covering that particular topic. Hopefully I'll be all caught up by the end of this week. I have to go out and cut firewood right now, though.

Two of my fun intended uses of Jetson ML are to (1) continuously read the digits of my water meter and text me if usage is abnormal (I have had underground water pipe leaks), and (2) recognize each of my two cats and instantly text me with which one is eating at the cat dish. Useless, but entertaining. It's helpful to have people sharing ideas; it keeps motivation higher. DroneBot Workshop is great for that. Good luck!
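The "text me if usage is abnormal" part of idea (1) can be kept separate from the digit reading. Assuming the ML step already yields (time, meter total) readings, a minimal rule-based check might look like this. The units, thresholds, and the "flow never drops to zero" leak rule are hypothetical, and the texting step is left out.

```python
from typing import List, Sequence, Tuple

def abnormal_usage(readings: Sequence[Tuple[float, float]],
                   max_rate: float = 10.0,
                   min_leak_rate: float = 0.05,
                   leak_intervals: int = 12) -> List[str]:
    """readings: (time_minutes, meter_total) pairs in time order (hypothetical units).

    Flags two patterns: a burst above max_rate per minute, and flow that stays at or
    above min_leak_rate for leak_intervals consecutive intervals (a possible slow leak).
    """
    alerts: List[str] = []
    steady = 0  # consecutive intervals with nonzero flow
    for (t0, v0), (t1, v1) in zip(readings, readings[1:]):
        rate = (v1 - v0) / (t1 - t0)
        if rate > max_rate:
            alerts.append(f"high flow {rate:.2f}/min at t={t1:g}")
        steady = steady + 1 if rate >= min_leak_rate else 0
        if steady == leak_intervals:  # alert once when the streak reaches the limit
            alerts.append(f"continuous flow for {leak_intervals} intervals ending t={t1:g}")
    return alerts

# Example: a reading every 5 minutes with a constant trickle of 0.5 units per interval.
print(abnormal_usage([(i * 5, i * 0.5) for i in range(14)]))
```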

I finally got a remote desktop working on ubuntu, and, as it turns out, it's remote desktop

So far, I've only gotten it fully working on Ubuntu 16.04 on my Pi 3B; it's still giving me a hard time on the Jetson Nano, which is running (the same as yours, I think) NVIDIA's version of 18.04.

Here's the path I followed...

First, I installed xrdp on the Ubuntu machine:

sudo apt-get install xrdp
sudo systemctl enable xrdp

Then start Remote Desktop on your Windows machine and type in the credentials (I selected "Remember me").

When I installed xrdp, it generated an RSA key, which I think might be the "key" to the problem. On the Pi, it just works. It's a tad slow, but it wasn't meant to push all that video data. On the Nano, though, it starts to log me in, I get the NVIDIA logo, and it looks good video-wise, nice graphics and all; then the RDP session just quits and I'm back to Windows.

At first I tried logging out of the Nano desktop, thinking it could only handle one session at a time, but that didn't change anything. Now I'm trying to figure out whether there's an RSA key issue. I might be on the right path with that or not. I have no idea what I'm doing.

Wait...

I got it !!!

Run these 2 commands, then log out of your Nano desktop and try RDP again (DON'T hit delete on any errors that pop up), and poof! You've got your desktop!

echo mate-session > ~/.xsession

sudo apt-get install mate-core

OK. I just tried logging into the desktop on the Nano while the RDP session was connected and, to nobody's surprise, it didn't let me in. So it seems that on 18.04 you can only have one person logged into the desktop at a time, whereas on the Pi 3B running 16.04 it DID allow me to log in remotely AND locally.

Weird, but cool, it works.

Yay!

Booo! OK, it's not perfect. I can't get to any of my programs; the dock is gone. I can start a terminal session by pressing Ctrl+Alt+T, so I did, and then typed

gnome-shell-extension-prefs

And turned on the dock and rebooted

Aaaand that broke something. Now I've got no dock locally either, so maybe don't follow these instructions

Looks like much more work is required for me to get the system back to functional

Anyway. It looks like it works unless you mess with the shell extension thingy, then it goes all kerflooey on ya

And it was looking so positive too

Well, I reinstalled GNOME, which is apparently what was broken, and now RDP doesn't work anymore.

I'll just go back to doing what I was doing