Hi @robotbuilder & @inq,

From the little I have read, I think the gesture recognition is aimed more at the swipe-left, swipe-right, etc. type of gesture (compared with, say, recognising when someone is happy or sad), probably in situations where the swiping 'object' is fairly close to, and at a relatively fixed distance from, the sensor ... i.e. as an alternative to a touchpad/touchscreen ... but without actually touching anything ... avoiding problems such as surface contamination, etc.

Perhaps @inq could use it in a way reminiscent of airport ground crew directing an aircraft, to train Inqling where the beer is kept and where it needs to be delivered? 😀

.........

I think Inq has the right idea for getting the best out of this sensor ... i.e. keeping it static relative to its host, Inqling's body/head.

Whether it is worth having more than 1 sensor to give more visibility to the sides, and even behind is a question that will arise .. I wouldn't go down that route now, but try to allow for it when designing mechanical structures, etc.

I would also allow for at least one other sensing method to be built in later, at least for the pathway in front, in case this sensor fails to spot an obstacle.

Best wishes all Dave

@robotbuilder I think we are saying the same thing, but differently? MPEG works because the majority of a scene is unchanged from one frame to the next, so it doesn't have to be recorded again. It is at least theoretically possible, under the right circumstances, that a single frame contains only 1 piece of data. At night it could literally be a byte; in daytime or artificial light it's more, in order to encode color data. That unchanging TV screen idea was replaced by MPEG, which accomplished the same thing (low data) but with better visuals.

First computer 1959. Retired from my own computer company 2004.

Hardware - Expert in 1401 and 360, fairly knowledgeable in PC plus numerous MPUs and MCUs

Major Languages - Machine language, 360 Macro Assembler, Intel Assembler, PL/I and PL1, Pascal, Basic, C plus numerous job control and scripting languages.

Sure you can learn to be a programmer, it will take the same amount of time for me to learn to be a Doctor.

@inq I think you are using pixel differently than I understand it. A pixel is the smallest unit and is actually just a light sensor that, when scanned out, returns a number from 0 to 255. Color comes from the fact that a single cell has a filter that blocks all but red, and when we read its light level that value is assigned to the R of the RGB; a similar thing happens for the Green and Blue. Keeping it simple, a single pixel of the 64 you have can only see 256 levels of light intensity. There is no possible way to subdivide that up into 4 objects. I hope this is a language issue, because if it isn't, you are badly informed.

From the little I have read, I think the gesture recognition is aimed more at the swipe-left, swipe-right, etc. type of gesture (compared with, say, recognising when someone is happy or sad), probably in situations where the swiping 'object' is fairly close to, and at a relatively fixed distance from, the sensor ... i.e. as an alternative to a touchpad/touchscreen ... but without actually touching anything ... avoiding problems such as surface contamination, etc.

I think the Google Pixel 4 had something like this, but NOBODY cared about swiping in the air above it. Party trick value only... considering the power usage, they quickly got smarter.

Perhaps @inq could use it in a way reminiscent of airport ground crew directing an aircraft, to train Inqling where the beer is kept and where it needs to be delivered?

I think I'd rather yell at it! Too much effort to hand wave.

I would also allow for at least one other sensing method to be built in later, at least for the pathway in front, in case this sensor fails to spot an obstacle.

Not enough room in Jr. Fortunately, it's light, plastic, and even at 7 mph it'll likely disintegrate before hurting anything. Unlike...

... hitting you at 7 mph! Ouch!

VBR,

Inq

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

@inq I think you are using pixel differently than I understand it. A pixel is the smallest unit and is actually just a light sensor that, when scanned out, returns a number from 0 to 255. Color comes from the fact that a single cell has a filter that blocks all but red, and when we read its light level that value is assigned to the R of the RGB; a similar thing happens for the Green and Blue. Keeping it simple, a single pixel of the 64 you have can only see 256 levels of light intensity. There is no possible way to subdivide that up into 4 objects. I hope this is a language issue, because if it isn't, you are badly informed.

It is totally my fault for using pixel in this way. People intuitively understand a grid pattern of pixels. I think ST (the company) uses SPAD array, zone and elements. Other references on the Internet have yet other names for them. I chose to use the terminology "pixel" like a camera pixel. Just like a camera, this sensor averages the values within the FoV for that "pixel". The only difference is that they are distances and not color averages. Since it is using interference patterns to measure distance, it can effectively see multiple objects (up to 4).

I'm not misinformed, but don't take my word for it; you can read the manual just as well as I can. @davee bookmarked it above somewhere, or it's a simple download via the Arduino IDE library for the SparkFun sensor. It also includes all this documentation from ST.

Conceptually, I'm trying to wrap my mind around it to be similar to "Double Slit" experiments where interference patterns occur and are measurable with human scale devices.

@inq I was pretty sure you knew what a pixel was, I was just trying to discover if you were using that word differently than I.

This might make things worse... Interference patterns comparing the illumination going out and what is reflected back.

In ST Documentation where it talks about the multi-target option:

4.10 Multiple targets per zone

The VL53L5CX can measure up to four targets per zone. The user can configure the number of targets returned by the sensor. The selection is not possible from the driver; it has to be done in the ‘platform.h’ file. The macro VL53L5CX_NB_TARGET_PER_ZONE needs to be set to a value between 1 and 4. The target order described in Section 4.9 Target order will directly impact the order of detected target. By default, the sensor only outputs a maximum of one target per zone. Note: An increased number of targets per zone will increase the required RAM size.

I have tried this out and have seen 2 targets identified within one of the 8x8 zones (pixels). Remember... the FoV of a pixel is 5.625 degrees and light is coming from all areas of that pixel. If an object fills only half of the FoV, the other half (the background) is returning light too. Just like a camera pixel. The difference: the camera pixel averages the light... This sensor can take the closest or the strongest as the returned value. User's choice. If more than one target is opted for, it will return them in order of closest or strongest.
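To make the "closest or strongest" ordering concrete, here is a minimal Python sketch. It is not the ST driver API: the `Target` record, the `order_targets` function and the signal values are all made up for illustration. The only figure taken from the post is the 5.625 degree per-zone FoV, which is used to estimate how wide a patch one zone covers at a given distance.

```python
import math
from dataclasses import dataclass

ZONE_FOV_DEG = 5.625  # per-zone field of view quoted in the post


@dataclass
class Target:
    """Hypothetical record for one target seen in a zone (not an ST type)."""
    distance_mm: float
    signal: float  # arbitrary return-strength units, assumed for illustration


def order_targets(targets, order="closest", max_targets=4):
    """Sort one zone's targets by distance or by signal and keep up to max_targets."""
    if order == "closest":
        ranked = sorted(targets, key=lambda t: t.distance_mm)
    elif order == "strongest":
        ranked = sorted(targets, key=lambda t: t.signal, reverse=True)
    else:
        raise ValueError("order must be 'closest' or 'strongest'")
    return ranked[:max_targets]


def zone_footprint_mm(distance_mm, fov_deg=ZONE_FOV_DEG):
    """Width of the patch one zone covers on a surface facing the sensor."""
    return 2.0 * distance_mm * math.tan(math.radians(fov_deg) / 2.0)


# A chair leg in front of a wall, both inside the same zone.
zone = [Target(distance_mm=1800.0, signal=90.0), Target(distance_mm=600.0, signal=35.0)]
closest_first = order_targets(zone, order="closest")
strongest_first = order_targets(zone, order="strongest")
footprint_at_1m = zone_footprint_mm(1000.0)
```

At 1 m a single zone covers a patch roughly 98 mm wide, so an object narrower than that will normally share its zone with whatever is behind it.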

Hi @inq,

I hope you understood my beer related comments were a little tongue-in-cheek ... I guess I am imagining beer serving capability as the 'Inq test' ... along the same lines as the 'Turing Test' ... but more useful. Sadly, Alan T didn't live long enough to see machines which presently at least approach meeting his test .. I doubt if Alan T could have imagined being able to develop a machine to pass the 'Inq test' ...Of course, no cheating with a keg, etc.! 😀

...........

I can see gesture communication being useful in the right situation .... as to whether Inqling is a 'right situation', I'll leave you to ponder.

I think I'd rather yell at it!

I can understand the desirability of that approach ... but to me it implies speech recognition if it is to be effective. This project is becoming more complex, interesting and potentially expensive by the hour!!

-----------

The later comments referring to two sensor types and multiple Lidar sensors were made just after reading

Inqling the 3rd will be a full height robot and having to know the distances of everything in front of it from 10 cm height to 2 meters above the floor will be necessary.

Sorry if I have got mixed up with your fast growing family ...

I assume Inqling the 3rd will have more room for sensors ... and inertia .... If Inqling the 3rd does 7 mph, which is near to 9 minutes a mile ... that is a respectable running speed for the 'average' adult on a longer run, especially in later life.

I can understand your present neat little 'ankle-height' model is pushed for space and doesn't present a great risk, but Inqling the 3rd sounds a different proposition ...

Best wishes, Dave

Hi @inq,

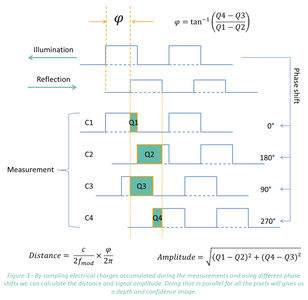

Sorry, I haven't taken the time to follow up on the reference from which you show a clip above, but the diagram is confusing me ... assuming the x-axis for the diagrams is time ... but sadly lacking any values or units.

My understanding is that the new data rate was about 15 times a second, say 67 milliseconds

But the light travel time is maximum of around 30 nanoseconds

So how is it showing illumination and reflection times with a mark-space ratio of about 1:1?

What would be 'typical' values for the x-axis in this diagram?

Is the illumination modulated at (say) 30 MHz?
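The mismatch between those two time scales can be checked with a few lines of Python. This is only arithmetic on the figures quoted above; the 4 m maximum range is an assumption used to reproduce the "around 30 nanoseconds" figure.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def round_trip_time_s(distance_m):
    """Time for light to reach a target and come back."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


frame_rate_hz = 15.0                  # update rate quoted above
frame_period_s = 1.0 / frame_rate_hz  # about 67 ms

max_range_m = 4.0                     # assumed maximum range
max_round_trip_s = round_trip_time_s(max_range_m)

# How many maximum-range round trips fit into one frame period.
round_trips_per_frame = frame_period_s / max_round_trip_s

print(f"frame period:    {frame_period_s * 1e3:.1f} ms")
print(f"max round trip:  {max_round_trip_s * 1e9:.1f} ns")
print(f"ratio:           {round_trips_per_frame:.2e}")
```

The frame period is millions of times longer than a single round trip, so a diagram with a 1:1 mark-space ratio cannot be drawn to the scale of the frame rate; it would have to represent the much faster modulation.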

Has your deep dive found some explanation of the physics of the device that is worth reading?

Best wishes, Dave

3D Mapping was/is the ONLY thing I am after with this sensor. Pattern recognition is a goal for a later generation bot.

To be of any use a map has to be used and that means recognizing a plan shape (a pattern).

As the sensor data pours in how are you going to convert that data to a static global 3d map?

How is that map going to be represented in the computer?

What about smaller 3d shapes like 3d objects on a bench?

How are you to use that map with a current input pattern?

What is the robot's goal/s?

I hope you understood my beer related comments were a little tongue-in-cheek ... I guess I am imagining beer serving capability as the 'Inq test' ... along the same lines as the 'Turing Test'

😆 Of course I did. Beer is important. 🤣

I like that... "Inq Test". Might have to coin that, much better than "Turing Test" or "Acid Test".

I can see gesture communication being useful in the right situation .... as to whether Inqling is a 'right situation', I'll leave you to ponder.

Yeah!... if you're Mute or a Navy Seal. I'm neither. 🤣

I can understand the desirability of that approach ... but to me it implies speech recognition if it is to be effective. This project is becoming more complex, interesting and potentially expensive by the hour!!

Well... only on the second robot and they have small baby step goals. The 3rd will be incremental and is still pretty malleable until Jr succeeds at all goals or crashes and burns.

- Senior - Movement and RC control/telemetry via WiFi

- Jr - Mapping, Self-balancing

Total cost for Jr should be right at $50 US when all said and done. (Batteries are salvaged)

I can understand your present neat little 'ankle-height' model is pushed for space and doesn't present a great risk, but Inqling the 3rd sounds a different proposition

Nah! I'm a cheapskate. Tall doesn't mean big... think beanpole and absolute minimum-design. But, if self-balancing is achieved in Jr, 3rd will be able to get up from a prone (fell down) position.

VBR,

Inq

Hi @inq,

Sorry, I haven't taken the time to follow up on the reference from which you show a clip above, but the diagram is confusing me ... assuming the x-axis for the diagrams is time ... but sadly lacking any values or units.

My understanding is that the new data rate was about 15 times a second, say 67 milliseconds

But the light travel time is maximum of around 30 nanoseconds

So how is it showing illumination and reflection times with a mark-space ratio of about 1:1?

What would be 'typical' values for the x-axis in this diagram?

Is the illumination modulated at (say) 30 MHz?

Has your deep dive found some explanation of the physics of the device that is worth reading?

Best wishes, Dave

Well... "deep dive", when you said it, made me feel like I had over-stated it. I by no means understand how the electronics are measuring the "interference pattern", but it says the integration time is the time during which the modulated light is shone and the sensor's calculations are run. The default is 5 ms, but yes, the modulation is far faster, and somehow the electronics measure the differences between the signal going out and those coming back (interference patterns) to get not just one, but up to 4 targets within each pixel's FoV. My simplistic view of the electronics world is that this is a seriously beefed-up Wheatstone Bridge. Measuring the differential is more accurate. The excerpt above shows graphically (and with an equation) what the electronics must be doing to integrate over the 5 ms duration. I've played with different values, but need to get back to the gym to test distances. I barely have a 1 meter blank wall at the house.

That excerpt came from this link and it was a better primer than the ST documentation.

https://www.mouser.com/pdfdocs/Time-of-Flight-Basics-Application-Note-Melexis.pdf
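For readers who want the textbook relationship behind a modulated (continuous-wave) time-of-flight measurement, here is a small Python sketch. It assumes the generic phase-shift model, where distance is proportional to the phase lag between the emitted and received modulation. Nothing in this thread establishes that the ST sensor works this way internally, and the 30 MHz figure is only the "say" value from Dave's question.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def distance_from_phase_m(phase_rad, modulation_hz):
    """Distance implied by a phase lag, for generic continuous-wave ToF."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range_m(modulation_hz):
    """Distance at which the phase wraps past 2*pi and readings alias."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


modulation_hz = 30e6  # hypothetical value taken from the question above

max_range = unambiguous_range_m(modulation_hz)
half_cycle = distance_from_phase_m(math.pi, modulation_hz)

print(f"unambiguous range at 30 MHz: {max_range:.2f} m")
print(f"distance for a phase lag of pi: {half_cycle:.2f} m")
```

Under this model a 30 MHz modulation wraps at about 5 m, which is in the same ballpark as the ranges discussed here. That is suggestive, but it is not evidence about the actual device.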

VBR,

Inq

3D Mapping was/is the ONLY thing I am after with this sensor. Pattern recognition is a goal for a later generation bot.

To be of any use a map has to be used and that means recognizing a plan shape (a pattern).

As the sensor data pours in how are you going to convert that data to a static global 3d map?

How is that map going to be represented in the computer?

What about smaller 3d shapes like 3d objects on a bench?

How are you to use that map with a current input pattern?

What is the robot's goal/s?

Wow! I believe your comments are meant to be thought provoking or encouragement; but you're coming across like an employer trying to hold me to your specifications or your time-table... or someone that is just waiting for me to fail. This is a hobby and I'm doing it for fun... There is no end-game for a product!

I can't even tell you if the steps I am taking now will bear fruit and be incorporated into my bots. In this thread, I am merely giving my observations about a sensor... period. I or anyone else might use this information to make an informed decision if they also want to use it. I have already outlined many issues that will eliminate it from other people's consideration. @will is gone already. Even @davee (I think) said he is more interested in outside bots, so this is a total non-starter for his needs, but he is along for the ride anyway. For me, this sensor is cheaper and appears to be able to do what I want it to do. We will see.

To be of any use a map has to be used and that means recognizing a plan shape (a pattern).

I consider that a minimum requirement. Not, a goal. I need 3D mapping. 2D alone is not my interest.

As the sensor data pours in how are you going to convert that data to a static global 3d map?

Over many years, and many projects - academic (true ray tracing), professional (Finite Element Method programs) and hobby (3D graphics body rotation) I have written matrix/vector libraries, that do transforms for displacement and rotations. I'll need to dig them up, but I'm not considering the Mathematics to convert the angle/distance vectors the sensor gives to a global 3D Cartesian map to be even a speed-bump. The two issues on this subject that do concern me:

- Moving the bot to another location and relying on motor steps and maybe the magnetometer for keeping the datum consistent. Many of your old posts on the forum convince me that the build-up of error will be fatal. And that doesn't even address wheel slippage. I hope that I can "mesh" the data of two overlapping views of the same portion of a wall. That is definitely a TBD and that is why I brought up the statistics of coordinate scatter.

- I have little doubt that the conversion process to a 3D map will be easily handled by the WiFi'd computer. But in my mind, autonomy means no outside help. I question whether a RaspPi will be able to keep up once it is on a future bot.
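As an illustration of the angle/distance to global Cartesian conversion mentioned above, here is a minimal Python sketch. It is not Inq's library. It assumes an 8x8 grid spanning 45 degrees (8 zones of 5.625 degrees), treats each reading as a range along the zone's centre ray, and uses a flat-floor pose of x, y and heading. If the sensor actually reports perpendicular distance instead of radial range, the first function would need to change. All function and parameter names are hypothetical.

```python
import math


def zone_to_sensor_xyz(row, col, range_mm, zones=8, fov_deg=45.0):
    """Convert a zone index and range to sensor-frame x (forward), y (left), z (up)."""
    step = math.radians(fov_deg / zones)
    centre = (zones - 1) / 2.0
    azimuth = (centre - col) * step    # positive to the left
    elevation = (centre - row) * step  # positive upwards, row 0 assumed at the top
    x = range_mm * math.cos(elevation) * math.cos(azimuth)
    y = range_mm * math.cos(elevation) * math.sin(azimuth)
    z = range_mm * math.sin(elevation)
    return (x, y, z)


def sensor_to_world(point, bot_x_mm, bot_y_mm, heading_rad, sensor_height_mm):
    """Rotate a sensor-frame point by the bot's heading and translate to world coordinates."""
    x, y, z = point
    world_x = bot_x_mm + x * math.cos(heading_rad) - y * math.sin(heading_rad)
    world_y = bot_y_mm + x * math.sin(heading_rad) + y * math.cos(heading_rad)
    world_z = sensor_height_mm + z
    return (world_x, world_y, world_z)


# A reading straight ahead (fractional indices pick the optical axis),
# taken while the bot sits at (1000, 2000) facing 90 degrees.
straight_ahead = zone_to_sensor_xyz(3.5, 3.5, 500.0)
world_point = sensor_to_world(straight_ahead, 1000.0, 2000.0, math.radians(90.0), 100.0)
```

Each new bot position only changes the pose arguments, which is exactly where the accumulated odometry error described in the first bullet enters the map.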

How is that map going to be represented in the computer?

In the first cut of Jr it will be a point cloud as your referenced links on this or Jr's thread have shown examples.

What about smaller 3d shapes like 3d objects on a bench?

Not part of Jr's goals. I think you have on multiple occasions brought up the sentiment, "Walk before running."

How are you to use that map with a current input pattern?

It is my belief (hope) that with statistics (TBD), I can calculate geometric planes out of the point cloud, thus converting potentially thousands of points into a plane. As an example (that I gave before), an empty rectangular room would have 5 such planes: 4 walls and the floor. Using well-established game hit-tests on these planes will establish where the bot is within a room and allow it to plan and implement a move through a complex space to a target location in another room.
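One common way to turn a patch of point cloud into a plane is a least-squares fit: take the centroid, then find the direction in which the points scatter least and use that as the plane normal. The Python sketch below does this with a simple power iteration so that it needs no external libraries. It is an illustrative technique only, not the statistics Inq has in mind (which are still TBD), and it assumes the points passed in already belong to a single surface.

```python
import math


def fit_plane(points, iterations=200):
    """Return (centroid, unit_normal) of the least-squares plane through 3D points."""
    n = len(points)
    centroid = [sum(p[i] for p in points) / n for i in range(3)]

    # Scatter (unnormalised covariance) matrix of the points about the centroid.
    cov = [[0.0] * 3 for _ in range(3)]
    for p in points:
        d = [p[i] - centroid[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                cov[i][j] += d[i] * d[j]

    trace = cov[0][0] + cov[1][1] + cov[2][2]
    if trace == 0.0:
        raise ValueError("points are all identical; no plane can be fitted")

    # The plane normal is the eigenvector of cov with the smallest eigenvalue.
    # Power iteration on (trace * I - cov) converges to that eigenvector.
    shifted = [[(trace if i == j else 0.0) - cov[i][j] for j in range(3)]
               for i in range(3)]
    v = [1.0, 1.0, 1.0]  # arbitrary start; must not be perpendicular to the normal
    for _ in range(iterations):
        w = [sum(shifted[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return centroid, v


def distance_to_plane(point, centroid, normal):
    """Signed distance from a point to the fitted plane."""
    return sum((point[i] - centroid[i]) * normal[i] for i in range(3))


# Fifteen points on a wall at x = 2000 mm reduce to one centroid and one normal.
wall = [(2000.0, float(y), float(z))
        for y in range(0, 5000, 1000)
        for z in range(0, 3000, 1000)]
centroid, normal = fit_plane(wall)
bot_to_wall = distance_to_plane((1500.0, 800.0, 100.0), centroid, normal)
```

The signed distance from the bot to each fitted plane is the kind of quantity a hit-test would work with. The scatter of the residuals about the plane also gives a direct measure of the coordinate scatter mentioned earlier.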

What is the robot's goal/s?

They have never wavered - https://forum.dronebotworkshop.com/user-robot-projects/inqling-junior-robot-mapping-vision-autonomy/#post-30744

VBR,

Inq

Hi @inq,

Thanks for the updates.

Good to hear Inqling the 3rd will be slim and healthy ... otherwise it might decide to drink your beer instead of serving it 😉 ... nevertheless it sounds like it could give anyone coming too close a nasty poke in the eye.

More seriously, I had a quick squint at the Melexis link ... it left me wondering if it was based on the same generic design model as the ST device ... I am not saying it is markedly different .. indeed it would make sense if they were rather similar ... and there is a possibility they even share some internal parts, as I have the impression the ST device is an assembly of parts, not just a silicon chip with wiring attached .... so I am just raising the possibility that they might be different .. and if so, confusion is inevitable if it is not allowed for.

You might need some scuba gear or even a bathyscaphe for the next level of deep diving! 😀

Best wishes, Dave