Have you viewed this video?

No, but I have bookmarked it to watch it at the library... maybe Monday??? Keep them coming though. A page of posts... mostly without pictures... seems to come in, in about a minute. Sending, surprisingly, is usually pretty reliable (as long as I don't add pictures)... I only have to repost about 25% of the time. I hit your link before I started this post and, 5 minutes later, the browser is still showing the light blue background. If I was a true masochist at heart, I could watch the video at one frame per minute. 🤣

3 lines of code = InqPortal = Complete IoT, App, Web Server w/ GUI Admin Client, WiFi Manager, Drag & Drop File Manager, OTA, Performance Metrics, Web Socket Comms, Easy App API, All running on ESP8266...

Even usable on ESP-01S - Quickest Start Guide

A rotating sensor does what... a couple hundred samples per rotation and rotates how many RPM... I have to inspect or throw away 90% of that data.

Once you have "looked around" with your VL53L5CX sensor you will have even more data to inspect and throw away.

If I want anything above/below the horizontal plane of the device, I have to angle it and half of the data is totally useless.

I wouldn't cross that bridge before I came to it; you might find you can navigate to a goal location with a 2D plan.

AGAIN... this is totally at the conceptual, brain-storming level of thought.

The devil is in the detail. If you start with the sim bot you can get an idea of what the issues are, and they are applicable to 3D as well.

As far as the data... you all convinced me it was not cheating to do things off-bot.

Connected by a wire or by wireless what is the difference? No cheating involved.

Heck... a plain rectangular room of 4 wall planes (without furniture, etc) would take a whopping 96 Bytes Total! I feel even a measly Arduino Uno could handle driving around with this kind of map system!

You can store a rectangular room with two numbers, width and length. A TOF measuring device should also show doors and windows to identify walls.
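To put numbers on the two storage ideas above, here is a rough sketch. The plane layout (a point plus a normal, six 32-bit floats per wall) is only my assumption about how 96 bytes could come about; the thread does not say how the planes are stored. The function names are made up for illustration.

```python
import struct

# Assumed layout (not stated in the thread): each wall plane is a point on
# the plane plus a normal vector, i.e. six 32-bit floats.
PLANE_FORMAT = "<6f"   # px, py, pz, nx, ny, nz
# The two-number alternative: just width and length as 32-bit floats.
RECT_FORMAT = "<2f"


def pack_planes(width, length):
    """Pack the four walls of a width x length room as point + normal."""
    walls = [
        (0.0, 0.0, 0.0, 1.0, 0.0, 0.0),       # wall at x = 0, facing +x
        (width, 0.0, 0.0, -1.0, 0.0, 0.0),    # wall at x = width, facing -x
        (0.0, 0.0, 0.0, 0.0, 1.0, 0.0),       # wall at y = 0, facing +y
        (0.0, length, 0.0, 0.0, -1.0, 0.0),   # wall at y = length, facing -y
    ]
    return b"".join(struct.pack(PLANE_FORMAT, *w) for w in walls)


def pack_rectangle(width, length):
    """Pack the same room as just its two dimensions."""
    return struct.pack(RECT_FORMAT, width, length)


print(len(pack_planes(4.0, 3.0)))     # 96
print(len(pack_rectangle(4.0, 3.0)))  # 8
```

The plane form costs more but is not tied to axis-aligned rectangular rooms, which is presumably why you would pay for it.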

Measure the perpendicular position of the robot from each wall to get its coordinates (one rotatable narrow beam will do that). Shortest lengths flag the direction of the beam is perpendicular to the wall.
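The shortest-length idea can be tried on paper before touching hardware. This is a minimal simulation, assuming an empty rectangular room, an ideal narrow beam with no noise, and a robot whose 0 degree direction is already lined up with one wall. The room size, robot position, and function names are all made up for the example.

```python
import math


def ray_distance(x, y, angle, width, length):
    """Distance from (x, y) to the first wall hit along angle (radians)."""
    dx, dy = math.cos(angle), math.sin(angle)
    hits = []
    if dx > 1e-12:
        hits.append((width - x) / dx)   # wall at x = width
    elif dx < -1e-12:
        hits.append(-x / dx)            # wall at x = 0
    if dy > 1e-12:
        hits.append((length - y) / dy)  # wall at y = length
    elif dy < -1e-12:
        hits.append(-y / dy)            # wall at y = 0
    return min(hits)


def scan_room(x, y, width, length, steps=360):
    """One full rotation of the beam, one reading per step."""
    return [ray_distance(x, y, math.radians(i * 360 / steps), width, length)
            for i in range(steps)]


def local_minima(scan):
    """Readings that are no longer than both neighbours (circular scan)."""
    n = len(scan)
    return {i: scan[i] for i in range(n)
            if scan[i] <= scan[i - 1] and scan[i] <= scan[(i + 1) % n]}


scan = scan_room(1.0, 2.0, width=4.0, length=3.0)
minima = local_minima(scan)
# The minimum facing the x = 0 wall gives x, the one facing y = 0 gives y.
robot_x, robot_y = minima[180], minima[270]
print(sorted(minima), robot_x, robot_y)
```

If the robot's heading is not aligned with the room, the four minima still appear 90 degrees apart, just shifted, and that shift is the heading. Furniture and open doors would add extra minima that this sketch ignores.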

Have you seen laser measuring tapes?

@inq My mistake. The $10.70 was just for some cable or something, the actual Lidar is $105

First computer 1959. Retired from my own computer company 2004.

Hardware - Expert in 1401, and 360, fairly knowledgeable in PC plus numerous MPU's and MCU's

Major Languages - Machine language, 360 Macro Assembler, Intel Assembler, PL/I and PL1, Pascal, Basic, C plus numerous job control and scripting languages.

Sure you can learn to be a programmer, it will take the same amount of time for me to learn to be a Doctor.

Hi @inq

re: I hope it didn't sound like I was presenting a silver bullet....

No you didn't .. so don't worry about that!

So far as I could see, you recognised that it was a device that was claiming to have useful properties ... but obviously, such claims, will be biased towards the advantages, etc. ... converting that into a system that meets a real requirement is a challenge.

If a single, static ultrasound can provide about the same capability, then it would not be an attractive buy. But if it needs more than 1 ultrasound, or an ultrasound on a mobile scanning platform, to match it, then I would see it as at least a competitive solution worthy of consideration.

But to give something real consideration, you need to actually understand what it can do ... reading app notes and the like is a start, but to do it properly, you have to do what you are doing ... try it for real. As I said before, 90% of research goes nowhere, but still has to be done to even stand a chance of finding the other 10%.

..........

I have the impression you are looking at this from two 'market' perspectives .... as a 'one-off' for your personal Inq-ling family members, and for the wider group that you are teaching. And that you naturally feel more 'cost conscious' with the wider group.

Previously, a quick look at Aliexpress suggested that the ..5X version of the sensor, which you have been looking at, may be absent, leaving the likes of Adafruit, etc. to charge £20-£25 (presumably roughly US$1 = £1), but Aliexpress has boards/modules with the earlier members of the family, ...0X and ...1X, which look a lot cheaper ... say around £7 for the ...1X, and £1 to £5 for the ...0X.

ST data on the ...0X is minuscule, but it appeared to be a single pixel job, making it roughly the same as an ultrasound.

There seemed to be more documents on the ...1X, but maybe less 'friendly' than for the ..5X. I haven't had time to read them, but first impression is that it had a similar 8X8 array. (Yes, I would have expected that to be headline on the spec .... but I didn't see it there!)

Some of the modules come complete with an ST processor.

As always, there is a lot of hype on Aliexpress, so even after reading the small print, being misled is possible, but it might be worth a glance or two. I guess at least some of the ...5X design started on the ...1X, so whilst I would expect the ...1X to be inferior, it might be good enough ... the trick may be matching the capability to the requirement.

These are just 1st ones I spotted ... maybe better deals, etc. if you look..

....0x https://www.aliexpress.com/item/1005001621959010.html

....1x and ... 0x https://www.aliexpress.com/item/1005003517022396.html

https://www.aliexpress.com/item/1005004617319580.html

The last supplier has options with a little optical cover ... this might have some optical filtering built in, but it isn't discussed in the advert.

Just a thought ... I take no responsibility for leading you astray.

Alternately, you could get that keg of beer, but then you would have to start looking at temperature control ...

Best wishes, Dave

I can think of experiments I would do with it.

Bring them on... If I can, I'll try it.

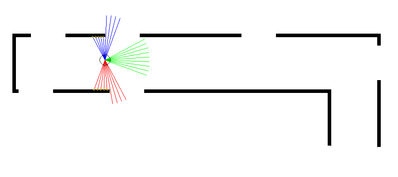

Well one suggestion was to navigate a passage. In the example below I have used 3 sensors but one sensor that can be turned to the left, front or right would do the same thing. The robot could travel down the passage noting its position using the doorways and although I haven't shown them in the map it could determine if a door was open (and probably by how much). It can use the TOF to keep at a fixed distance from one of the walls although I would probably put the sensor and laptop on a trolley and move it down the passage myself recording the distance values on the way to see if those values could be used to navigate the passage. Note I have limited the distance the rays can reach. Note that corners are "shape features" if they can be seen by the sensor.

When the robot isn't within 4m or less of an obstacle it will be driving blind. So perhaps following "walls" is a good start to making a map. Go from obstacle to obstacle. But you need to figure out the numbers seen with the actual shape and orientation of the obstacles to decide how to use them in code.

For when/if you get back to the robot project.

In order to map the robot's relative position to absolute coordinates the robot has to know its current position and orientation within that coordinate system. This means as it moves about it has to estimate as close as possible what direction it is travelling and how far. In your case you can use odometry based on pulses to the stepper motors. So I would suggest an important first step would be to have such a navigation system working (dead reckoning) and test how accurate it actually is. There is also the option to add gyroscope, accelerometer and compass sensors to help calculate the distance and direction.

An important function of a SLAM algorithm is to correct the position and orientation of the robot when it sees the same location again. I remember a robot that would push itself flat against any wall it came in contact with to accurately reset its direction with that wall and position somewhere along that wall.

So a first good experiment would be to find out how accurately the robot can navigate using the stepper motors alone. Give it a path such as say a rectangle and see how close it returns to its starting position and orientation.
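For anyone wanting to try the rectangle test on paper first, here is a minimal dead-reckoning sketch for a two-wheel differential drive. The steps per revolution, wheel diameter, and track width are hypothetical numbers, not Inqling Jr's, and the model assumes no wheel slip at all, so it only shows the error that comes from whole-step rounding.

```python
import math

STEPS_PER_REV = 200      # hypothetical full steps per wheel revolution
WHEEL_DIAMETER = 0.065   # metres, hypothetical
TRACK_WIDTH = 0.15       # metres between the wheels, hypothetical
M_PER_STEP = math.pi * WHEEL_DIAMETER / STEPS_PER_REV


def update_pose(pose, left_steps, right_steps):
    """Advance (x, y, theta) by a pair of signed wheel step counts."""
    x, y, theta = pose
    dl = left_steps * M_PER_STEP
    dr = right_steps * M_PER_STEP
    d = (dl + dr) / 2                  # distance moved by the robot centre
    dtheta = (dr - dl) / TRACK_WIDTH   # change of heading
    x += d * math.cos(theta + dtheta / 2)
    y += d * math.sin(theta + dtheta / 2)
    return (x, y, theta + dtheta)


def drive_rectangle(side_a, side_b):
    """Drive a rectangle with whole steps only and return the final pose."""
    turn_steps = round((TRACK_WIDTH / 2) * (math.pi / 2) / M_PER_STEP)
    pose = (0.0, 0.0, 0.0)
    for side in (side_a, side_b, side_a, side_b):
        steps = round(side / M_PER_STEP)
        pose = update_pose(pose, steps, steps)             # straight leg
        pose = update_pose(pose, -turn_steps, turn_steps)  # 90 degree left turn
    return pose


x, y, theta = drive_rectangle(1.0, 0.5)
closing_error = math.hypot(x, y)
heading_error = abs(2 * math.pi - theta)
print(closing_error, math.degrees(heading_error))
```

Even with perfect traction the robot does not land exactly back on its start, because a 90 degree turn is not a whole number of steps with these dimensions. Comparing this calculated closing error with what the real robot does on the floor would show how much of the error is slip.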

Well one suggestion was to navigate a passage. In the example below I have used 3 sensors but one sensor that can be turned to the left, front or right would do the same thing. The robot could travel down the passage noting its position using the doorways and although I haven't shown them in the map it could determine if a door was open (and probably by how much).

Ok... I thought you had experiments at the sensor level like I am doing in this thread. Once I stop doing stand alone sensor experiments, I'll return to the Inqling Jr. thread for integration and testing like your suggestion.

This means as it moves about it has to estimate as close as possible what direction it is travelling and how far. In your case you can use odometry based on pulses to the stepper motors. So I would suggest an important first step would be to have such a navigation system working (dead reckoning) and test how accurate it actually is. There is also the option to add gyroscope, accelerometer and compass sensors to help calculate the distance and direction.

I had similar thoughts about sensors. Already have the gyro/acc/mag sensors. Still need to explore how far away from the steppers the mag has to be before it can reliably get Earth magnetic fields. The one in the current base unit of the Inqling Jr IS swamped by the stepper motor magnetic field. I'll have one in the head unit and see if it can be relied on.

I don't plan on spending any time on stepper motor based counting algorithms. They're just too limiting - must be on a smooth surface with good traction. I'll do it from the get-go with an Inertial Navigation System (INS) using the sensors in combination with land-marks identified from the mapping. I picked that old school as an acid-test obstacle course. As seen... those power-slides of Inqling Jr would totally wipe out counter based systems and limiting to crawl speed... would totally bore me to death. Also at that school... the hallways are all hard-wood floor that have lots of gaps and the boards are cupped and bowed and just driving Inqling Jr over it causes it to stagger around like a Saturday night drunk. IMO, counting steps is a waste of coding time and CPU time. I currently don't see benefits to the learning curve of a system that must be replaced eventually with an INS system anyway and I don't see any commonality that the latter is an enhancement on the former. Do you have some thoughts this bias may be wrong?

VBR,

Inq


I personally have no experience with inertial navigation systems.

I will wait and see if you can correlate the sensor data with actual obstacles. I can't do it as I don't have the sensor.

@davee, @robotbuilder, et al - I've finished my obligations for my Library's user group project Ralf at least till the next meeting in September. So, I'm back on this sensor. Before I start integrating it into Inqling Jr, I need to understand it better. Comparing to the one value a HC-SR04 gives you, and not even counting the 64 pixels worth of data... it has those 8 values for each pixel and other assorted data.

I've now studied (more than scanned the last time) the document you found @davee and it has created more questions than answered. 🙄 I'm still in the 1 step forward - 3 steps back phase.

The first thing I notice is that some of the defaults set by the company (ST) have been overridden by SparkFun's library wrapper. Neither set seems to be optimum for my eventual use. I can see setting some of the parameters for precision during the mapping phase and setting them differently in the roaming, obstacle avoidance phases.

Integration Time

I'll start with what I thought would be the easiest. It is Integration Time in section 4.6. It was before the Sharpener you found. They do not actually say what it does... just its default and how to set it.

I found this on the Internet and it's helping... somewhat. The definitions help, the Math is something I choose to not dig into unless necessary. It's painful! 😉

https://www.mouser.com/pdfdocs/Time-of-Flight-Basics-Application-Note-Melexis.pdf

Although not for this specific sensor, it seems to indicate:

- Indirect Time of flight technology works by illuminating a scene using modulated light and measuring the phase delay of the returning light after it has been reflected by the objects in the scene.

- Integration time: The duration of the photo-charge collection by the sensor and also the duration of the time when the modulated light is sent with the illumination. Also called “exposure time”.

- Modulation frequency: The frequency at which the illumination and the TOF pixels are modulated.

- Integration time setting: Higher values will get better distance images, but at the cost of more power consumption in the sensor and the illumination, and a lower frame rate.
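The phase-delay definition above boils down to one formula. For a modulation frequency f and a measured phase delay phi, the round trip gives d = c * phi / (4 * pi * f), and the phase wraps at c / (2 * f). A small sketch of that arithmetic follows. This is the textbook indirect ToF relation from notes like the Melexis one; whether the VL53L5CX works this way internally is not established here, and the 20 MHz figure is just an example value.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def phase_to_distance(phase_rad, mod_freq_hz):
    """Distance for a measured phase delay (light travels out and back)."""
    return C * phase_rad / (4 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Distance at which the phase wraps past 2*pi and readings alias."""
    return C / (2 * mod_freq_hz)


f_mod = 20e6  # hypothetical modulation frequency, Hz
print(unambiguous_range(f_mod))
print(phase_to_distance(math.pi, f_mod))  # half of the unambiguous range
```

Seen this way, integration time is not part of the distance formula at all. It only sets how long the pixel collects light to estimate the phase, which fits the "better images, more power, lower frame rate" trade-off in the list above.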

I was having trouble understanding what integration time had to do with a send-receive time measurement. Seeing that it's based on these phase graphs helps.

The saga continues...

VBR,

Inq


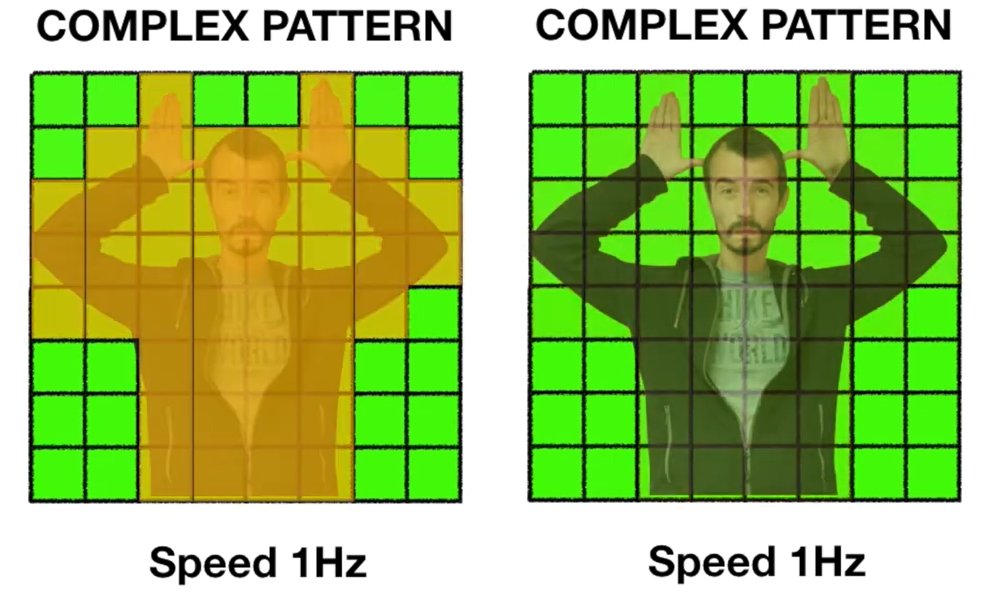

Some things like gesture recognition seem to be better done with a normal camera, which doesn't require a fixed distance to cover just the right set of "pixels". Motion detection and the direction of motion is also easy with a normal camera. I now see very little value in the sensor for robot navigation and mapping when compared with the lidar or the sensor lidar it uses.

The idea that more data is better really is wrong; it only creates more computations. The kind of data this sensor returns doesn't make the computations on the data simple. I don't see any practical use for this sensor in a fast moving robot apart from obstacle avoidance.

Just take the example below (assuming you can get it sending you that data). No face recognition and I doubt even the ability to recognise it is a human. With an ordinary camera you get face recognition and the size of the face will return a distance measure.

You need to always ask yourself what data do I really need to achieve a particular set of goals. Let me assure you again, more data is not necessarily better. People who haven't kept up with what we know about the human visual system might be surprised at how most of what they "see" is actually generated in the brain's imagination; the detail is an illusion. The brain simply can't process that much detail.

@robotbuilder I didn't know that about the brain, so our own visual system is very much like MPEG encoding. I wonder if the MPEG inventor knew that and it inspired the invention.


The human brain doesn't record our visual inputs. "MPEG compression works by encoding only the information that changes between successive video frames, rather than encoding all the information in each frame." So given the first frame all the other frames can be reconstructed in some or complete detail.

We however do not encode all the detail in a series of frames that can be replayed.

Something I found interesting was that in old movies each frame could be enhanced by removing noise using the information from the previous and following frames making them better than the original.

@robotbuilder YUP, that's what I meant if I didn't state it clearly enough. Didn't know about the old movie noise though.


I now see very little value in the sensor for robot navigation and mapping when compared with the lidar or the sensor lidar it uses.

The idea that more data is better really is wrong; it only creates more computations. The kind of data this sensor returns doesn't make the computations on the data simple. I don't see any practical use for this sensor in a fast moving robot apart from obstacle avoidance.

Pattern Recognition

Didn't buy this thing for it. Never said it was something I cared about. Early on, I said it couldn't tell the difference between a ball and a fist and a head. 3D Mapping was/is the ONLY thing I am after with this sensor. Pattern recognition is a goal for a later generation bot, and @zander successfully convinced me ages ago that image recognition was better handled by an ESP32-CAM.

Mapping

I guess that is where we have a difference of opinion and I'll just move my own way. Although I agree completely with you that too many points is a waste of time... I totally differ on which one is giving too many points. I'd rather have 8x8 so I can map 3D space than have 5000 in a disk 5 inches above the floor. I'm not building a vacuum cleaner.

Anyone can build an encoder robot that bumps into the walls and does a floor plan. Having a $99 device that gives me a room plan in 1/15th of a second is not a useful improvement. Adding all the stuff to make it tilt and do the calculations for that tilt is exactly the same coding that is required to handle the 3D space this thing will give without moving.

Don't get me wrong, I think the rotating ones are technologically cool... especially the ones using wireless power and communications. But, I think they solve a problem that doesn't interest me - building vacuum cleaners and 2D floor plans. Inqling the 3rd will be a full height robot and having to know the distances of everything in front of it from 10 cm height to 2 meters above the floor will be necessary. An 8x8 grid would require little to no movement. A rotating disk... would be painful to implement and evaluate the tens of thousands of results.
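A quick way to sanity-check the 10 cm to 2 m requirement is to work out how tall a slice of wall the fixed grid sees at a given distance. This sketch assumes a square field of view of 45 degrees per axis and a sensor pointing level and centred on the span; both are assumptions for the arithmetic, so check the datasheet figure before relying on it.

```python
import math

FOV_DEG = 45.0  # assumed field of view per axis, degrees


def coverage_m(distance_m):
    """Height (or width) of the patch seen on a flat wall at distance_m."""
    return 2 * distance_m * math.tan(math.radians(FOV_DEG / 2))


def min_distance_for_span(span_m):
    """Closest distance at which the field of view covers span_m."""
    return span_m / (2 * math.tan(math.radians(FOV_DEG / 2)))


span = 2.0 - 0.10  # from 10 cm to 2 m above the floor
print(coverage_m(4.0))              # patch height at the 4 m range limit
print(min_distance_for_span(span))  # stand-off needed to see the whole span
```

Under these assumptions the full span only fits in one frame from a couple of metres back, so anything closer than that would still need some tilting or a second sensor to see both 10 cm and 2 m.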

High Speed Navigation / Obstacle Avoidance

Again, not why I bought it, but another mode I discovered in this deeper dive is perfect for high-speed navigation. This sensor can point straight ahead. No need to rotate or scan back and forth. Each of the 64 pixels can have a threshold set. The pixels pointing at the floor can be set closer so the floor itself doesn't trigger, while the ones above floor level can be set further out. When any one of the 64 pixels' thresholds is triggered, an interrupt is sent. IOW, I don't have to write one line of code that keeps checking every pixel... every frame to see if it is about to run into anything. Just wait for an interrupt... get the quadrant of the obstacle and adjust accordingly. Sounds pretty trick to me.
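The threshold values still have to be chosen, and that can be tried offline on recorded frames. The sketch below is a plain software model of the idea, not the sensor's or any library's API: the row ordering (row 0 at the top), the threshold distances, and the function names are all assumptions for illustration. On the real device the comparison happens in the sensor and only the interrupt reaches your code.

```python
GRID = 8  # 8x8 pixels


def build_thresholds(floor_rows=2, floor_mm=300, clear_mm=1000):
    """Per-pixel trigger distances; the bottom rows are assumed to see floor."""
    return [[floor_mm if r >= GRID - floor_rows else clear_mm] * GRID
            for r in range(GRID)]


def triggered_quadrants(frame_mm, thresholds):
    """Quadrants holding at least one pixel closer than its threshold."""
    quads = set()
    for r in range(GRID):
        for c in range(GRID):
            if frame_mm[r][c] < thresholds[r][c]:
                quads.add(("upper" if r < GRID // 2 else "lower",
                           "left" if c < GRID // 2 else "right"))
    return quads


thresholds = build_thresholds()
frame = [[2000] * GRID for _ in range(GRID)]  # nothing nearby
frame[7][0] = 500   # floor seen at 500 mm: beyond the 300 mm floor threshold
frame[1][6] = 800   # something at 800 mm, up and to the right
print(triggered_quadrants(frame, thresholds))  # {('upper', 'right')}
```

Running logged frames from a drive through this kind of model would show whether the floor rows ever trip falsely on ramps or bumpy boards before committing the values to the sensor.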

Multiple Targets Per Pixel

Not exactly sure what to do with this feature or even if I will use it. But it is possible to set it up to detect and give you the distance for up to 4 objects in each pixel. You can have it give them to you in strength order or distance order.
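One possible use: closest-first ordering suits obstacle avoidance, while strongest-first might suit mapping, where a faint near return (an edge or something thin) could be less interesting than the solid wall behind it. The sketch below only shows the two orderings on made-up data. The tuple layout and the function name are hypothetical and do not reflect how the sensor or any library reports targets.

```python
def order_targets(targets, by="distance"):
    """Order one pixel's targets, given as (distance_mm, strength) tuples."""
    if by == "distance":
        return sorted(targets, key=lambda t: t[0])                # closest first
    if by == "strength":
        return sorted(targets, key=lambda t: t[1], reverse=True)  # strongest first
    raise ValueError("by must be 'distance' or 'strength'")


# Made-up example: a weak near return, a medium one, and a strong far wall.
pixel = [(1850, 40), (620, 15), (2400, 90)]
print(order_targets(pixel, "distance")[0])  # (620, 15)
print(order_targets(pixel, "strength")[0])  # (2400, 90)
```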

VBR,

Inq

BTW - I did buy one of the 12 meter static Lidars, in case I decide the marginal 4 meter range of this thing is a hindrance. I'd just have to do more mechanical stuff to achieve the same thing.


My response, though, was that it wasn't like MPEG, which encodes all the details from every frame. We can build up details in a "global representation", but at any given current frame we see very little detail; instead we have to move our eyes around to see any real detail in a small area. This was once suggested as a means by which only the detail within that small area need be shown on a TV screen. This would require monitoring what part of the screen you were looking at. The viewer would experience the whole screen as being high definition when it was only HD in the small area being directly viewed.

You might like to google "change blindness" to show how we can miss even large details.