One thing I like to do when possible is to understand how an algorithm works, which means being able to program it myself. My hope is to program my robot to navigate and locate objects by vision alone.

In the experiment below, the outer diameter of the target is 4 inches. The maximum stable distance was 138 inches (11.5 feet) from the target, at which point the enclosing rectangle had a width of 11 pixels. The closer you are, the more accurate the distance measurement given by the pixel height of the target. Note that rotating the target 45 degrees around its base leaves its height unchanged, so its height-to-width ratio gives a measure of the angle to the robot.
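
To make the distance and angle idea concrete, here is a minimal Python sketch assuming a simple pinhole camera model, where apparent size is inversely proportional to distance. The calibration constants come from the experiment above, treating the 11-pixel measurement at 138 inches as the height of a face-on target. The function names are illustrative and are not taken from the original program.

```python
import math

# Calibration point from the experiment: a 4-inch target measured 11 pixels
# at 138 inches. Assumes a pinhole camera and a face-on target, so the
# 11-pixel width equals its height at that distance.
TARGET_SIZE_IN = 4.0
CAL_PIXELS = 11.0
CAL_DISTANCE_IN = 138.0

# Effective focal length in pixels: f = pixels * distance / real size.
FOCAL_PX = CAL_PIXELS * CAL_DISTANCE_IN / TARGET_SIZE_IN


def distance_from_height(pixel_height):
    """Estimate the distance (inches) to the target from its height in pixels."""
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    return FOCAL_PX * TARGET_SIZE_IN / pixel_height


def view_angle_deg(pixel_width, pixel_height):
    """Estimate how far a round target is turned away from the camera.

    Turning a disc about its vertical axis by angle a shrinks its apparent
    width by cos(a) while its height stays the same, so a = acos(w / h).
    """
    ratio = min(pixel_width / pixel_height, 1.0)  # clamp measurement noise
    return math.degrees(math.acos(ratio))


print(distance_from_height(22))  # 69.0: twice the pixels, half the distance
print(view_angle_deg(10, 20))    # about 60 degrees
```

Under the same model, doubling the image resolution at the same field of view doubles `FOCAL_PX`, so the 11-pixel limit would be reached at roughly twice the distance.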

So perhaps the robot could move toward the target until, at some given distance, it rotates to find another target to move toward?
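
One hypothetical way to express that behavior is a two-state loop, sketched here in Python. The 24-inch stop distance, the mode names and the string commands are placeholders and not part of any real robot API. `target_distance` would come from something like a pixel-height distance estimate.

```python
from enum import Enum


class Mode(Enum):
    SEARCH = "search"      # rotate in place looking for a target
    APPROACH = "approach"  # drive toward the visible target


STOP_DISTANCE_IN = 24.0  # hypothetical "close enough" distance in inches


def next_action(mode, target_distance):
    """Return (new_mode, command) for one control step.

    target_distance is None when no target is visible in the current frame.
    The commands are plain strings standing in for real motor commands.
    """
    if mode is Mode.APPROACH:
        if target_distance is None or target_distance <= STOP_DISTANCE_IN:
            return Mode.SEARCH, "rotate"  # lost it, or arrived: look for another
        return Mode.APPROACH, "forward"
    # SEARCH: start approaching any target that is still far enough away.
    if target_distance is not None and target_distance > STOP_DISTANCE_IN:
        return Mode.APPROACH, "forward"
    return Mode.SEARCH, "rotate"


mode = Mode.SEARCH
for seen in [None, 100.0, 60.0, 20.0, None]:
    mode, command = next_action(mode, seen)
    print(mode.name, command)
```

This sketch does not tell one target from another. A real version would need to recognize which target it is looking at, so that it does not simply head back to one it has already visited.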

Hopefully I will be able to get it to recognize "natural" indoor landmarks and features. For example, the edge between the ceiling and a wall can orient the robot and give a measure of its distance from the wall. Other things that stand out might be door knobs (which also tell you whether the door is shut) or light switches. Paintings and photos hanging on the wall can also serve as recognizable landmarks.

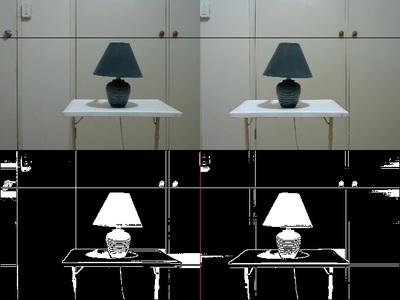

These images were captured by the program as it processed them to locate the target and return its visual properties. The images are 320x480, so a higher resolution might make it possible to see the target at a greater distance.

@casey

> One thing I like to do when possible is to understand how an algorithm works, which means being able to program it myself.

That's a nice goal to work towards, and I'm sure a very rewarding one to achieve... it interests me too!

> Hopefully I will be able to get it to recognize "natural" indoor landmarks and features.

I have thought about this previously, and mentioned this in another post. This sounds like there is a need for at least one, and potentially multiple origins to navigate against. My math isn't so great, but I'd guess that mapping vectors to and from these origins would provide great accuracy in location... just a thought!

Almost forgot... what camera and tools did you use in this example?

Cheers!

@casey

> Almost forgot... what camera and tools did you use in this example?
>
> Cheers!

The language I have been using is FreeBASIC and I use escapi.dll to capture images.

https://sol.gfxile.net/escapi/index.html

I am using the laptop's built-in camera and two Logitech C270 webcams:

https://www.logitech.com/en-au/product/hd-webcam-c270

escapi.dll finds each camera and gives it a number.

FreeBASIC is a low-level language comparable to C++ but with a BASIC dialect. It has C++-style options, including an inline assembler. The reason I chose it over C++ 13 years ago was that it was easy to read and use, compiled to fast executables, and had a built-in graphics library. This included bitmaps for easy image manipulation with fast direct access to pixels.

The algorithm I used in the above example converts the image into binary blobs and returns some measurements for each one, namely its area in pixels, a bounding box around it, and its centroid coordinates. What it does not do is return a shape description (e.g. circle, rectangle, letter B and so on), which is why I queried the Pixy2's claim of shape recognition. Color following is fairly easy to implement. The algorithm for recognizing this particular target amounts to comparing the centroids of all the blobs to see whether any are close enough to be declared a match, and it mostly works very well.
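
A rough Python sketch of the same kind of pipeline follows. It assumes a binary image stored as a list of rows of 0/1 values and finds blobs with a 4-connected flood fill. Two blobs "match" when their centroids lie within a hypothetical `tolerance` of 2 pixels. This illustrates the idea and is not the original FreeBASIC code.

```python
from collections import deque


def find_blobs(image):
    """Label 4-connected blobs of 1-pixels in a binary image (list of rows).

    Returns one dict per blob with its area in pixels, bounding box
    (min_x, min_y, max_x, max_y) and centroid (cx, cy).
    """
    h, w = len(image), len(image[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y in range(h):
        for x in range(w):
            if image[y][x] != 1 or seen[y][x]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            queue = deque([(x, y)])
            seen[y][x] = True
            area = sx = sy = 0
            min_x, min_y, max_x, max_y = x, y, x, y
            while queue:
                px, py = queue.popleft()
                area += 1
                sx += px
                sy += py
                min_x, max_x = min(min_x, px), max(max_x, px)
                min_y, max_y = min(min_y, py), max(max_y, py)
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if 0 <= nx < w and 0 <= ny < h and image[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            blobs.append({"area": area,
                          "bbox": (min_x, min_y, max_x, max_y),
                          "centroid": (sx / area, sy / area)})
    return blobs


def matching_centroids(blobs, tolerance=2.0):
    """Return index pairs of blobs whose centroids lie within `tolerance` pixels."""
    pairs = []
    for i in range(len(blobs)):
        for j in range(i + 1, len(blobs)):
            (ax, ay), (bx, by) = blobs[i]["centroid"], blobs[j]["centroid"]
            if (ax - bx) ** 2 + (ay - by) ** 2 <= tolerance ** 2:
                pairs.append((i, j))
    return pairs
```

Concentric shapes, such as a ring around a dot, produce separate blobs that share a centroid. This is exactly what `matching_centroids` picks up, even though neither function knows anything about shape.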

I have been exploring Python and Processing as possible programming options in the future.

The target example was written many years ago, and over the last 10 years I haven't really revisited image processing much. When a motor on my robot base burnt out I also lost interest in the robot project, but recently, with the Arduino and reading about Bill's DB1 project, I thought: why not give it another go?

This forum is essentially about electronic hardware projects, but for anyone working on the DB1 or their own robot, once you have finished building the mechanical and electronic side your next question will be: how are you going to program it, and to do what? This is why I assumed Bill would want to use the ROS framework, with all its prewritten code, to navigate using the LIDAR and so on.

My robot is simply a PC with a USB connection to an Arduino Mega, which acts as an interface to the motors and sensors, so I am already facing the hard part of robot building: how to program it!

> Hopefully I will be able to get it to recognize "natural" indoor landmarks and features.
>
> I have thought about this previously, and mentioned this in another post. This sounds like there is a need for at least one, and potentially multiple origins to navigate against. My math isn't so great, but I'd guess that mapping vectors to and from these origins would provide great accuracy in location... just a thought!

Let us say we have a set of colored beacons. The robot (or its head) rotates around and notes the direction of each beacon relative to its own heading. It knows exactly where the beacons are in absolute space (its internal map), so from those positions and the measured angles it has to calculate its own absolute position and orientation in that map. There must be a position and rotation in absolute space where the angles between the beacons match the ones it now sees.

I haven't given a solution to this problem, for isn't solving problems the fun part of programming?

Our robot starts at location 320,240, as in fig. A.

Now we have moved the robot to coordinates 442,137 in absolute space and rotated it left by 40 degrees. The robot doesn't know that and has to work out its location and rotation from the angles between two or more beacons. We can see the absolute position of the robot in fig. B, but the robot sees the positions of the beacons relative to itself, as shown in fig. C.

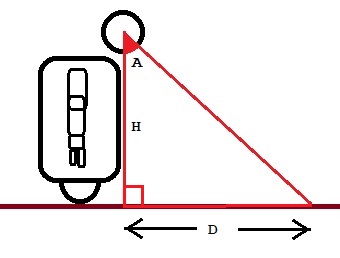
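
For anyone who wants to check their own answer, here is one possible approach sketched in Python. It uses a brute-force grid search over positions. The beacon coordinates are hypothetical because the real ones are only in the figures. The map is assumed to be y-up with angles measured counter-clockwise, so "rotated left by 40 degrees" is a heading of +40°. With screen coordinates, where y points down, the sign of the angles flips. `to_robot_frame` produces the kind of view shown in fig. C, and `localize` recovers the pose from bearings alone.

```python
import math

# Hypothetical beacon positions in the absolute (map) frame; the real ones
# are in the figures. Map is y-up, angles counter-clockwise from +x.
BEACONS = [(100.0, 100.0), (540.0, 80.0), (560.0, 400.0), (90.0, 420.0)]


def wrap(angle):
    """Wrap an angle in radians into [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def to_robot_frame(x, y, heading, beacons):
    """Beacon positions as (forward, left) offsets seen from a robot at (x, y)."""
    c, s = math.cos(heading), math.sin(heading)
    return [(c * (bx - x) + s * (by - y), -s * (bx - x) + c * (by - y))
            for bx, by in beacons]


def bearings(x, y, heading, beacons):
    """Angle to each beacon relative to the robot's heading (radians)."""
    return [wrap(math.atan2(by - y, bx - x) - heading) for bx, by in beacons]


def localize(observed, beacons, width=640, height=480, step=2):
    """Grid-search the pose (x, y, heading) that best explains the bearings.

    For each candidate (x, y), the best heading is the circular mean of
    (absolute bearing - observed bearing); the candidate with the smallest
    squared angular error wins.
    """
    best = None
    for x in range(0, width + 1, step):
        for y in range(0, height + 1, step):
            diffs = [math.atan2(by - y, bx - x) - o
                     for (bx, by), o in zip(beacons, observed)]
            heading = math.atan2(sum(math.sin(d) for d in diffs),
                                 sum(math.cos(d) for d in diffs))
            err = sum(wrap(d - heading) ** 2 for d in diffs)
            if best is None or err < best[0]:
                best = (err, x, y, heading)
    return best[1], best[2], best[3]


# Robot moved to (442, 137) and rotated left by 40 degrees, as in fig. B.
seen = bearings(442, 137, math.radians(40), BEACONS)
x, y, heading = localize(seen, BEACONS)
print(x, y, round(math.degrees(heading), 1))
```

With the 2-unit grid, the answer lands within a grid step of the true pose. A finer second pass around the best candidate would refine it. With only three beacons, positions on the circle through them are ambiguous, which is one reason to use more.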

If a feature is at a known height above or below the robot's webcam, the robot can estimate how far away it is by triangulation. A feature might be a colored object, a line between wall and floor or between wall and ceiling, and so on. Good unchanging features would probably be on the ceiling. Corners of ceilings could be detected by running an edge-detection algorithm, computing the angles of the lines, and finding where they meet.
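
The triangulation step can be sketched in Python as follows. The sketch assumes a pinhole camera with a known focal length in pixels and a known optical-center row. It also assumes the feature sits at a known height difference from the camera, such as a ceiling line. The parameter names are illustrative.

```python
import math


def distance_to_feature(pixel_row, height_diff, focal_px, center_row, tilt_deg=0.0):
    """Horizontal distance to a feature at a known height above/below the camera.

    pixel_row: image row of the feature (rows increase downward).
    height_diff: feature height minus camera height; positive for a ceiling
                 feature, negative for a floor feature. Result uses the same units.
    focal_px: focal length in pixels; center_row: row of the optical center.
    tilt_deg: upward tilt of the camera (0 for a level camera).
    """
    elevation = math.atan2(center_row - pixel_row, focal_px) + math.radians(tilt_deg)
    if height_diff * math.tan(elevation) <= 0:
        # Feature on the horizon, or on the wrong side of it for its height.
        raise ValueError("feature direction is inconsistent with height_diff")
    return height_diff / math.tan(elevation)


print(distance_to_feature(40, 50, 400, 240))  # about 100 inches
```

For example, take a level camera with a focal length of 400 pixels and a center row of 240. A ceiling edge 50 inches above it that appears at row 40 is about 100 inches away horizontally. Features close to the horizon give poor estimates because the tangent approaches zero.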

@casey

> I have been exploring Python and Processing as possible programming options in the future.

Thanks for the information on how you're working with it.

Have you looked into other libraries?

There are a number of good open source ones, such as Magick and OpenCV.

Cheers!

OpenCV may be useful to grab images from a video source but I like to understand how visual processing works and perhaps invent some of my own solutions.

I guess OpenCV has a function that takes in beacon angles and spits out the absolute location and orientation of the robot?

I actually enjoy low-level programming, as it is easy for my poor old brain to understand what is going on. With high-level programming you can certainly write some good code even if you haven't a clue how a library method does its magic. If, however, there is a problem with no library method to solve it, you are back to the old ways of coding.

> OpenCV may be useful to grab images from a video source but I like to understand how visual processing works and perhaps invent some of my own solutions.

You can use OpenCV to program as low-level as you like. It simply reads in the frames for you as an array of pixels (in Python, a NumPy array of rows × columns × color channels). Once you have the array you can do whatever you want with the information.
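
To illustrate that kind of low-level access, here is a sketch that converts a frame to gray levels one pixel at a time. In Python, `cv2.VideoCapture(0).read()` returns a success flag and a NumPy array with the channels in B, G, R order. To keep the sketch runnable without OpenCV installed, it works on a plain nested list of (B, G, R) tuples instead. The BT.601 weights are the ones OpenCV documents for its BGR-to-gray conversion.

```python
def to_gray(frame):
    """Convert rows of (B, G, R) tuples into rows of 0-255 gray levels.

    Uses the BT.601 weights 0.299 R + 0.587 G + 0.114 B, visiting each
    pixel explicitly rather than calling a library routine.
    """
    gray = []
    for row in frame:
        gray_row = []
        for b, g, r in row:
            gray_row.append(round(0.299 * r + 0.587 * g + 0.114 * b))
        gray.append(gray_row)
    return gray


# A tiny 2x2 "frame": black, white, pure red, pure blue (in B, G, R order).
frame = [[(0, 0, 0), (255, 255, 255)],
         [(0, 0, 255), (255, 0, 0)]]
print(to_gray(frame))  # [[0, 255], [76, 29]]
```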

NVIDIA just came out with a new version of the Jetson Nano that has two camera connectors instead of just one. This would allow you to treat the video as stereo vision and do distance and perspective calculations with it. Of course, you'll need to know the physical positions of the cameras relative to each other.
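
The core of the stereo distance calculation is the standard relation Z = f · B / d: depth equals the focal length in pixels times the baseline, divided by the disparity. Below is a minimal Python sketch. It assumes two parallel cameras whose images are already rectified, so the same feature appears on the same row in both. The focal length in the example is hypothetical, and the 4.5-inch baseline is the webcam spacing mentioned later in this thread.

```python
def depth_from_disparity(x_left, x_right, focal_px, baseline):
    """Depth of a point seen by two parallel, rectified cameras.

    x_left / x_right: column of the same feature in the left and right image.
    focal_px: focal length in pixels (assumed equal for both cameras).
    baseline: distance between the camera centers; depth is returned
    in the same units.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("feature should appear further left in the right image")
    return focal_px * baseline / disparity


print(depth_from_disparity(330, 300, 400, 4.5))  # 60.0 inches
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters much more for distant objects (small disparity) than for near ones.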

Also, to do those kinds of calculations truly efficiently you'd probably want to use quaternion mathematics, which makes the rotation calculations far simpler. Since OpenCV has Python bindings, you can also use NumPy to work with the video arrays. Of course, you can always use good old Euclidean geometry too.
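
For reference, here is what rotating a vector with a quaternion looks like, as a minimal stdlib-only Python sketch with quaternions stored as (w, x, y, z) tuples. For a robot moving on a flat floor, a single heading angle is usually enough. Quaternions mainly help with full 3D orientation, such as a tilting camera head, because composing two rotations is just one multiplication.

```python
import math


def quat_from_axis_angle(axis, angle):
    """Unit quaternion (w, x, y, z) for a rotation of `angle` radians about `axis`."""
    ax, ay, az = axis
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    s = math.sin(angle / 2) / norm
    return (math.cos(angle / 2), ax * s, ay * s, az * s)


def quat_mul(a, b):
    """Hamilton product a * b of two quaternions."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def rotate(q, v):
    """Rotate 3D vector v by unit quaternion q using q * v * conj(q)."""
    w, x, y, z = q
    conj = (w, -x, -y, -z)
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, *v)), conj)
    return (rx, ry, rz)


# A 90-degree turn to the left about the vertical (z) axis.
q = quat_from_axis_angle((0.0, 0.0, 1.0), math.pi / 2)
print(rotate(q, (1.0, 0.0, 0.0)))  # approximately (0.0, 1.0, 0.0)
```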

Exactly what you do with the video information is entirely up to you. You can program as low-level as you like. Or you can take advantage of libraries that do mundane things for you so that you can move on to doing higher level recognition. It's all up to you and what you want to do with it.

This is what makes it so exciting. If it were nothing more than a bunch of pre-programmed libraries that did everything for you, it wouldn't be very exciting, at least not for a hobbyist. For someone who's starting up a robotics business, though, using canned software to the hilt is most likely the best way to go.

It all depends on what you want to do.

DroneBot Workshop Robotics Engineer

James

Yes, I understand you can capture raw video with OpenCV, which is why I was spending time with Python and Processing, as they are both easy to use with OpenCV and the Arduino. OpenCV does have stereo vision support, although Shinsel of Loki robot fame found its performance lacking. Years ago I spent a lot of time trying to generate depth maps from two cameras. The easiest solution appears to be the Kinect? There are videos showing how to use the Kinect with Processing or Python and/or with ROS.

The issue with writing your own code to handle the raw data is speed. How fast would your own algorithms run if written in Python or Processing?

At the moment I have two identical webcams plus the laptop webcam. The two webcams are 4.5 inches apart. You may notice the right image is brighter than the left image, for reasons I don't know for sure. Software could measure the difference and adjust the brightness of one of the images so they match. The two images below are the output of the local threshold function that turns them into two binary images (blobs).
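
The brightness correction and the local threshold could be sketched like this in Python, assuming grayscale images stored as lists of rows of 0-255 integers. Matching the mean level with a simple offset is one possible choice; a multiplicative gain is another. The local threshold here is a mean-of-neighborhood version with hypothetical `radius` and `c` parameters. It is not necessarily how the original function works.

```python
def mean_level(gray):
    """Average gray level of an image given as rows of ints."""
    return sum(sum(row) for row in gray) / (len(gray) * len(gray[0]))


def match_brightness(src, ref):
    """Shift src's gray levels so its mean matches ref's mean (clamped to 0-255)."""
    offset = round(mean_level(ref) - mean_level(src))
    return [[min(255, max(0, v + offset)) for v in row] for row in src]


def local_threshold(gray, radius=1, c=0):
    """Mark a pixel 1 if it is brighter than its neighborhood mean minus c.

    The neighborhood is the (2*radius+1)^2 window, clipped at the borders,
    so the threshold adapts to uneven lighting across the image.
    """
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            y0, y1 = max(0, y - radius), min(h, y + radius + 1)
            x0, x1 = max(0, x - radius), min(w, x + radius + 1)
            window = [gray[yy][xx] for yy in range(y0, y1) for xx in range(x0, x1)]
            if gray[y][x] > sum(window) / len(window) - c:
                out[y][x] = 1
    return out


left = [[10, 20], [30, 40]]
right = [[30, 40], [50, 60]]  # same scene, but brighter
print(match_brightness(right, left))  # [[10, 20], [30, 40]]
```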

I have also been working on an artificial eye.

The problem I see with your image detection is that it doesn't see objects inside objects.

I would love to work with you especially in order to add distance calculation to my A.Eye.

See page 16 for a demo of the A.Eye detecting even overlapping images:

https://aidreams.co.uk/forum/general-ai-discussion/outline-from-gadient-mask/225/

here is the current alg:

Team Fuki

I haven't done much on this subject for some time. I would suggest you look at the free OpenCV, or at RoboRealm, a simpler product (although it is not free).

https://www.roborealm.com/