I wrote up the following little skit to describe a programming structure for a small home robot. The idea is to offer up a type of algorithmic flow chart in story form. No actual coding is described. It just conveys the internal workings of various different algorithms within a robot to show how they all come together to create an autonomous robot.

It expresses a lot of the ideas I'm using in my own robot design. It's just food for thought. If anyone else would like to contribute anything similar, this is the place to do it.

A Trip to the Kitchen. A Robot Fantasy.

The Characters:

RaspiSally = A Raspberry Pi

Jedi = a Jetson Nano

ArdiMo = Arduino navigation and motor controller

Stan & Tom = a pair of STM motor controllers.

ArdiArm = Arduino Arm controller

Fruity Ada = 16 channel servo controller.

Iddy Ought = the human programmer.

Nancy = Iddy Ought's wife.

It was evening and the family was watching a movie on their big screen TV. RaspiSally was parked in her charging spot watching the movie through her Raspberry Pi cam.

Nancy: "RaspiSally, bring me a cup of tea with honey"

RaspiSally hears Nancy's order and pretends to awaken from her charging status. She flashes her LEDs to indicate that she is thinking about Nancy's request. And she is indeed thinking about it. She deconstructs the English language request and parses it into commands that make sense to her robotic programming. She instantly notices that she isn't familiar with the term "honey". So she requests more information from Nancy.

"I don't know what honey is Nancy.", RaspiSally replies.

Nancy: "It's in the kitchen cupboard above the sink"

"How will I recognize it?", RaspiSally requests, to obtain needed information.

Nancy, "It says, 'Honey' on the label".

RaspiSally recognizes that this should be sufficient information as she has the word "Honey" in her vocabulary and therefore she should be able to recognize the item by sight.

RaspiSally: "I'm on my way to get the tea and honey"

RaspiSally already knows how to make a cup of tea as she has already been trained to do this and has done it many times before. So she begins her journey to complete the requested task.

RaspiSally: "Hey ArdiMo, take me to the kitchen".

ArdiMo first checks with the IMU and a few other sensors to obtain RaspiSally's current status. He also knows that she is parked in the charging station. So he accesses the program to go from the charging station to the kitchen, a task that he has performed many times before and has been well-trained to do.

ArdiMo: "Hey Stan & Tom, wake up! Get those wheel motors rolling. Here are the first instructions"

Stan and Tom instantly jump into action and reset the motors to begin a new course. And the motors come alive and begin to groan as RaspiSally starts to move.

Stan & Tom: "We're under way ArdiMo, keep those instructions coming and we'll get you where you want to go as quickly as possible."

RaspiSally begins to make her way to the kitchen looking back with her Raspberry Pi Cam at the movie she had been watching and knowing that she'll be missing some scenes as she fetches the tea and honey.

As RaspiSally leaves the living area and passes through the dining area, Jedi suddenly interrupts her journey with new information.

Jedi: "Hey Sally, you're about to run over the cat. It's lying on the floor right in front of you."

RaspiSally: "ArdiMo STOP!"

ArdiMo: "Stan & Tom! Emergency STOP!"

Stan & Tom: "All power to the motors has been turned off".

ArdiMo: "Sally, we have successfully come to a full stop"

RaspiSally: "Jedi, can you determine if we have enough room to go around the cat?"

Jedi: "Yes it does appear that you can make an avoidance path to the right that will clear the cat"

RaspiSally: "ArdiMo, execute a right side go-around"

ArdiMo: "Got it. Will do."

ArdiMo: "Hey Stan & Tom, we have new instructions. We're going to execute a right side go-around".

Stan & Tom: "We got you covered bro. We've done this before"

RaspiSally then proceeds to go around the stubborn lazy cat to avoid running over it. She finally makes it into the kitchen where she re-orients herself to the new room. She's been in the kitchen many times before. She instructs ArdiMo to move her to the cupboard that contains cups.

RaspiSally: "Hey ArdiArm, open the cupboard door in front of me".

ArdiArm: "I can do that. I'm a well-trained arm controller"

ArdiArm: "Hey Fruity Ada, run the cupboard door opening routine."

Fruity Ada: "No problem, the instructions are right here on my SD card memory"

RaspiSally's arms and hands open the cupboard door. Inside the cupboard is a nice row of tea cups sitting upside down with their handles all facing the front of the cupboard.

RaspiSally: "Hey Jedi, can you see which cups are still available?"

Jedi: "It looks like slot number 3 is the next available cup"

RaspiSally: "Thanks Jedi, we'll grab that one"

RaspiSally has been previously trained to know how things are organized in the kitchen, and since she is the one who always puts things away, they are always precisely where they should be.

RaspiSally: "ArdiArm, grab cup number 3 and put it on the counter top".

ArdiArm: "Will do Sally. Hey Fruity Ada, it's going to be cup #3 tonight."

Fruity Ada: "I got it! Cup number 3 coming up!"

RaspiSally's arm reaches out toward cup no. 3 and her hand grasps it. She then pulls the cup out of the cupboard, turns it right side up, and places it on the counter. She then turns and closes the cupboard door, as this is a standard part of this routine.

RaspiSally then performs a similar routine to fetch a tea bag from a tea bag dispenser. And finally she fetches hot water from a coffee maker that keeps water hot for making tea. Again, all of these things are standard programs that she has executed many times over.

"Don't forget to get the Honey", Jedi reminds Sally

RaspiSally: "I won't forget you dumb development board! I'm a full blown SBC, I can remember things myself"

Jedi: "No need to get upset Sally, I was just reminding you"

RaspiSally instructs ArdiMo to position her body in front of the kitchen sink and then asks ArdiArm to reach as high as possible.

RaspiSally: "Hey Jedi, can you see how much higher we need to reach?"

Jedi: "It looks like we're 6 inches too low to reach the cupboard door"

RaspiSally: "Hey ArdiMo, I need to be raised up vertically about 8 inches."

RaspiSally knows enough within her programming to always add extra clearance to anything Jedi reports because she's aware that Jedi gives exact dimensions and doesn't take into consideration the extreme limits of her arms and hands.

ArdiMo: "Up we go Sally!"

ArdiMo has direct access to a motor that can extend Sally's body vertically up to a full 15 inches taller. So Sally grows in height for the requested 8 inches.
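The clearance rule in this part of the story can be sketched in a few lines of Python. This is only an illustration: the function name and the fixed 2 inch margin are assumptions (the margin is inferred from Jedi reporting 6 inches and Sally requesting 8), and the 15 inch limit is the lift range mentioned above.

```python
MAX_LIFT_IN = 15.0      # maximum vertical extension, taken from the story
SAFETY_MARGIN_IN = 2.0  # assumed margin: 6 in reported -> 8 in requested


def lift_request(reported_shortfall_in: float,
                 margin_in: float = SAFETY_MARGIN_IN,
                 max_lift_in: float = MAX_LIFT_IN) -> float:
    """Return how far to raise the body, in inches.

    The vision system reports an exact shortfall; the planner pads it
    with a margin and clamps the result to what the lift can do.
    """
    if reported_shortfall_in <= 0:
        return 0.0  # already high enough, no lift needed
    return min(reported_shortfall_in + margin_in, max_lift_in)


print(lift_request(6.0))   # 8.0
```

Clamping matters as much as the margin: if Jedi reported a 14 inch shortfall, the request would be capped at 15 inches rather than asking the lift motor for something it cannot deliver.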

RaspiSally: "How about now Jedi?"

Jedi: "It looks like our hand is 2 inches above the cupboard handle now."

RaspiSally: "Hey ArdiArm, adjust down 2 inches and execute the cupboard door opening routine".

ArdiArm: "Consider it done."

The arm lowers 2 inches and opens the cupboard door.

RaspiSally: "Jedi, can you see anything in there marked 'Honey'?"

Jedi has been trained to recognize words and instantly recognizes the word Honey on the label of a bottle.

Jedi: "Yep, I see it. It appears to have a neck at coordinates 243,143 from the corner of the cupboard."

RaspiSally: "Hey ArdiArm, grab the item located at 243,143"

ArdiArm: "Will do."

Sally's arm reaches up and her fingertip touches the upper right corner of the cupboard to reset the coordinates to zero. Then it moves over, grasps the honey bottle, removes it from the cupboard, and places it on the counter top. As always, the routine ends with closing the cupboard door again.

RaspiSally: "Hey ArdiMo, You can lower me back down to home position"

ArdiMo: "Your wish is my command"

RaspiSally then asks ArdiArm to place the fetched items on a tray attached to her body and begins the journey back into the living room.

This time the cat is no longer in the path and the return journey is unimpeded.

RaspiSally: "Here you are Nancy"

Sally parks herself in front of Nancy.

Nancy turns to Iddy Ought, "She brought out the whole bottle of honey. I wanted her to put the honey in the tea."

Iddy Ought, "Well she did precisely as you asked. You need to give her better instructions next time".

Nancy storms back toward the kitchen with the tea and honey in hand so she can prepare her tea correctly.

Iddy Ought: "Don't worry about it Sally, you did exactly what you were asked to do. Some people just don't understand that robots do as they are told."

RaspiSally: "I know. Humans are stupid"

Jedi: "Exactly my thoughts as well".

DroneBot Workshop Robotics Engineer

James

Sounds a lot like how I think it would work too.

However, it's still very robotic in a sense, if you know what I mean?

I'd like to see more (automated) self awareness rather than training it - Not an easy task, I'm sure, but that's what this topic is here to explore!

BTW, you'll need ABS brakes for that sudden stop, and a couple of accelerometers (ears) to stop it performing a 3 point landing!

🙂

BTW, you'll need ABS brakes for that sudden stop, and a couple of accelerometers (ears) to stop it performing a 3 point landing!

I was trying to keep the story down to a single post.

I'd like to see more (automated) self awareness rather than training it - Not an easy task, I'm sure, but that's what this topic is here to explore!

Most A.I. systems start out being trained to some degree. Even in my short story I tried to include some aspect of A.I. in having the robot perform a new task that it had never done before. Getting the Honey from a cabinet that it had never opened before and being able to recognize the item by the word "Honey" on its label would definitely fall into the category of A.I., especially as A.I. is defined today. It had to figure out for itself that it needed to "stretch" vertically to reach the cabinet. It had to use image recognition to discover the coordinates of where the bottle of honey was actually sitting within the cupboard. It had to touch the upper right corner of the cabinet to set a coordinate system up to match the visual image of the cabinet. Only then could it execute the pre-trained function of transferring the item to the countertop.
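The corner touch-off step can be sketched as a simple change of reference frame. Everything specific here is an assumption for illustration: the story gives no units or axis directions, so the sketch treats the camera's 243,143 as millimetres measured left and down from the upper right corner, and the arm position at the moment of touch is made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float  # arm frame: positive x points right (assumed)
    y: float  # arm frame: positive y points up (assumed)


def target_in_arm_frame(corner_touch: Point, offset_from_corner: Point) -> Point:
    """Convert a camera offset measured from the cupboard corner into arm coordinates.

    corner_touch is where the arm was when its fingertip touched the
    upper right corner. The camera offset is assumed to be measured
    leftwards and downwards from that corner, hence the subtractions.
    """
    return Point(corner_touch.x - offset_from_corner.x,
                 corner_touch.y - offset_from_corner.y)


# Hypothetical arm position at the moment of the touch-off.
corner = Point(300.0, 500.0)
honey = target_in_arm_frame(corner, Point(243.0, 143.0))
print(honey)  # Point(x=57.0, y=357.0)
```

The point of touching the corner is that the arm no longer has to trust its own absolute position: only the short move from the corner to the bottle needs to be accurate.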

So there's lots of A.I. contained in that short One-Post story.

In fact, one of the reasons I posted the story is to show how a fairly simple robotic system can grow its own A.I. capabilities. Now that RaspiSally has performed this task, she can store the whole procedure in memory for future use. Also, if she sees the bottle of honey sitting on the counter, she now knows where it should be properly stored.

So there's lots of A.I. capabilities here.

I think where a lot of robot hobbyists get lost is by focusing on A.I. techniques far too early. And I suggest that many articles on robotics are guilty of leading robot enthusiasts down that wrong path. Obstacle avoidance schemes are a good example. I've seen hobbyists spending a lot of time and effort building an obstacle avoiding robot. This is a popular topic in hobby robotics. So they end up with a robot that can avoid obstacles but has absolutely no clue where it is in a room, or how to get from the living room to the kitchen.

I prefer to work the other way around. I'll program it to get from the living room to the kitchen and be able to know where it is at all times first. And I'll just be sure that there are no obstacles in that path. Then after I have that down pat, I can start taking into consideration what the robot should do should the path be blocked.

The robot shouldn't just be trying to avoid obstacles but it should also try to "go-around" them to continue on a predetermined mission. That too was in my little short story. The "Right Go-Around" obstacle avoidance routine was chosen by the robot based on the visual input that it could see that there was sufficient room to go around the object on the right side, but not on the left side. That too would be considered A.I. by some.
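The go-around decision described here can be sketched as a small function. The names, the metre units, and the 0.1 m margin are assumptions for illustration; the only part taken from the story is the idea of picking a side based on how much free space the vision system reports.

```python
from typing import Optional


def choose_go_around(left_clearance: float,
                     right_clearance: float,
                     robot_width: float,
                     margin: float = 0.1) -> Optional[str]:
    """Pick a side to pass an obstacle on, or None if neither side fits.

    Clearances are the free widths (in metres, assumed) reported by the
    vision system on each side of the obstacle.
    """
    needed = robot_width + margin
    left_ok = left_clearance >= needed
    right_ok = right_clearance >= needed
    if not left_ok and not right_ok:
        return None  # blocked: stay stopped and ask for help or re-plan
    if right_ok and (not left_ok or right_clearance >= left_clearance):
        return "right"
    return "left"


print(choose_go_around(0.2, 0.9, robot_width=0.5))  # right
```

Returning `None` keeps "avoid" and "go around" separate: the robot stays stopped when no path fits, instead of wandering off its planned route.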

In fact, it's extremely unclear what A.I. even means. I'm following a course on A.I. by Paul McWhorter. However, thus far we haven't done any A.I. at all, and so far it appears that he's going to be calling the ability to track objects in a video frame A.I. I personally wouldn't even call that A.I. I would just call it object tracking.

In any case, "A Trip to the Kitchen. A Robot Fantasy" was created to show how different microcontrollers work together to create a single robot entity. In this case the Raspberry Pi (RaspiSally) was clearly the main brain of the robot, the overseer of all the other microcontrollers, including the far more powerful Jedi (Jetson Nano). So on this robot the Raspberry Pi is the central "self" of the robot if you like.

The idea also is that this is an autonomous robot in that it can perform tasks autonomously after having received sufficient information and instructions to perform the task. I didn't really mean to imply that this would be an example of an A.I. robot. That can all depend on who's defining what A.I. needs to be. Like I say, if the ability to recognize words on labels, and correctly calculate the position of objects is considered to be A.I. (and many people do consider these things to be A.I.) then RaspiSally could be said to be an A.I. robot. I wouldn't personally go that far. But to each their own.

I posted this in the A.I. forum mainly because I couldn't find another suitable forum category to post it in. As you know, we don't have an Algorithm, FlowChart, or Program Planning forum, although we probably should have one, since planning out program algorithms is every bit as important as coding them. Probably even more important. Most of us (myself included) often tend to just jump straight into writing code and just let it evolve as we write it. That's actually not the best way to code.

Without realizing it, you've exactly, precisely, described how ROS functions

Right down to the conversations between each component (messages passed on named topics, much like MQTT msgs)
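The "conversations" in the story map naturally onto publish/subscribe messaging. The sketch below is a toy in-process message bus, not ROS or MQTT code; the `Bus` class and all topic names are made up for illustration. It replays the emergency stop from the story: Jedi reports the cat, RaspiSally orders a stop, ArdiMo relays it, and Stan & Tom confirm.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple


class Bus:
    """Minimal publish/subscribe bus; handlers run synchronously."""

    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)
        self.log: List[Tuple[str, str]] = []  # every message, in order

    def subscribe(self, topic: str, handler: Callable[[str], None]) -> None:
        self._subs[topic].append(handler)

    def publish(self, topic: str, payload: str) -> None:
        self.log.append((topic, payload))
        for handler in list(self._subs[topic]):
            handler(payload)


bus = Bus()


def raspisally_on_obstacle(what: str) -> None:
    bus.publish("nav/command", "stop")  # main brain decides to stop


def ardimo_on_command(cmd: str) -> None:
    if cmd == "stop":
        bus.publish("motors/command", "emergency_stop")


def stan_and_tom_on_command(cmd: str) -> None:
    if cmd == "emergency_stop":
        bus.publish("motors/status", "stopped")


bus.subscribe("vision/obstacle", raspisally_on_obstacle)
bus.subscribe("nav/command", ardimo_on_command)
bus.subscribe("motors/command", stan_and_tom_on_command)

bus.publish("vision/obstacle", "cat")  # Jedi spots the cat
for topic, payload in bus.log:
    print(topic, payload)
```

Each controller only knows the topics it listens to and the topics it publishes on, so a module can be swapped out without touching the others.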

You've also used a fairly accurate coordinate system from Star Trek. The difference being that in Star Trek, it's used for setting a course, which would only require 2 numbers, because it's just a direction, whereas, for the bot to grab the honey, unless it's just grabbing the first thing it runs into in that direction, it would need to know when to start the "grab" procedure, which would require a 3rd number (distance) in your coordinate system

(Fun Fact: The Star Trek coordinate system is basically 2 circles at right angles to each other. First number is between 0 and 360, then at a right angle to that is the second number, also between 0 and 360, thus giving you a direction)
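To make the "third number" concrete, here is a small sketch that turns two angles plus a distance into an x, y, z point. The axis conventions are assumptions for illustration (azimuth measured in the horizontal plane from the x axis, elevation measured up from that plane); nothing here comes from Star Trek or from the story beyond the idea of direction plus distance.

```python
import math
from typing import Tuple


def heading_to_xyz(azimuth_deg: float,
                   elevation_deg: float,
                   distance: float) -> Tuple[float, float, float]:
    """Convert a direction (two angles) plus a distance into Cartesian coordinates."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance * math.cos(el)  # length of the projection onto the floor plane
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            distance * math.sin(el))


x, y, z = heading_to_xyz(azimuth_deg=90.0, elevation_deg=0.0, distance=2.0)
print(round(x, 6), round(y, 6), round(z, 6))
```

Without the distance, the two angles only describe a ray; the arm would have to keep reaching along that ray until a touch sensor reported contact.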

And @frogandtoad I saw an article on a bot that they didn't train, instead, they let it figure out on its own how its arms and legs worked, then watched it figure out what is the best way to walk. Q-learning I think they called it.

So, no training

If I can find the article I'll post it. It was interesting
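For anyone curious what Q-learning looks like in its simplest form, here is a toy sketch. It is not the walking robot from the article: the "world" is an assumed five-position corridor where the only reward is for reaching the far end, and the learning rate and discount values are arbitrary. Nobody tells the agent which way to go; it tries random moves and updates a table of action values from the rewards it receives.

```python
import random
from typing import List

N_STATES = 5        # positions 0..4 on a line; position 4 is the goal
ACTIONS = (-1, +1)  # step left, step right
ALPHA = 0.5         # learning rate (arbitrary choice)
GAMMA = 0.9         # discount factor (arbitrary choice)


def step(state: int, action: int):
    """Move along the corridor; reward 1.0 only when the goal is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes: int = 300, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = rng.randrange(len(ACTIONS))  # explore at random (Q-learning is off-policy)
            nxt, reward, done = step(state, ACTIONS[a])
            target = reward + (0.0 if done else GAMMA * max(q[nxt]))
            q[state][a] += ALPHA * (target - q[state][a])  # Q-learning update
            state = nxt
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    """Best action for each non-goal position according to the learned table."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda a: q[s][a])]
            for s in range(N_STATES - 1)]


print(greedy_policy(train()))
```

After training, the greedy policy steps right from every position, even though the only feedback the agent ever received was the reward at the goal.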

Ok, here's one with ANN from hackaday

https://hackaday.com/2016/12/11/train-your-robot-to-walk-with-a-neural-network/

And here's the article on Q-learning

https://hackernoon.com/using-q-learning-to-teach-a-robot-how-to-walk-a-i-odyssey-part-3-5285237cc3b1

And here's a neat one where they just turn it on and let it figure things out for itself

You've also used a fairly accurate coordinate system from Star Trek. The difference being that in Star Trek, it's used for setting a course, which would only require 2 numbers, because it's just a direction, whereas, for the bot to grab the honey, unless it's just grabbing the first thing it runs into in that direction, it would need to know when to start the "grab" procedure, which would require a 3rd number (distance) in your coordinate system

I actually thought about this when I was writing the story. I decided not to go there for the sake of keeping the story short. But I did instantly recognize that the arm would need to know how far to reach inside the cabinet. And I also imagined having touch sensors on the fingers so the arm can feel when it has a hold of something.

I guess you guys are all way ahead of me. I'd need to write a trilogy novel to cover all the details you guys notice.

Without realizing it, you've exactly, precisely, described how ROS functions

I'm not surprised at this. In fact, this was my guess. ROS appears to be doing what I already do anyway. So I'm already doing what ROS is promising to do. So from my perspective ROS is kind of like XOD or ArduBlockly for the Arduino. All it appears to be doing is offering pre-written code to use so I don't need to write the code.

I would rather write my own interface code. For me that's where a lot of flexibility comes into play.

Most A.I. systems start out being trained to some degree. Even in my short story I tried to include some aspect of A.I. in having the robot perform a new task that it had never done before. Getting the Honey from a cabinet that it had never opened before and being able to recognize the item by the word "Honey" on its label would definitely fall into the category of A.I., especially as A.I. is defined today.

Yes, I would agree with that.

It had to figure out for itself that it needed to "stretch" vertically to reach the cabinet. It had to use image recognition to discover the coordinates of where the bottle of honey was actually sitting within the cupboard.

Ah! Now we get to the crux of why I raised this topic!

There is far more process and detail than you mentioned here, just to make that seemingly simple, yet so complex movement to grab a bottle of honey… this is the interesting part of algorithms and how to automate that, because the image recognition in itself entails a lifetime of study, which I don’t feel like doing 😛 – There must be (HAS to be) another way 🙂

It had to touch the upper right corner of the cabinet to set a coordinate system up to match the visual image of the cabinet.

Ahha! This is where I view AI a little differently… I think the robot must already have an array of internal origins, and an offset (home base) origin, which can and will also be dynamic, depending on the location of the robot! With that in mind, it should first be able to map an area it enters and determine those key points on the fly!

So there's lots of A.I. contained in that short One-Post story.

Yes, but I think it’s just the tip of the iceberg!

So there's lots of A.I. capabilities here.

Indeed, there is, but there is a long way to go to refine it, and the bulk of time is here 🙂

I think where a lot of robot hobbyists get lost is by focusing on A.I. techniques far too early.

I don’t see that as a bad thing… if you never ask the question or strive for the impossible, you will never reach it… so having goals and dreams can lead to different thinking or philosophy, which in turn can lead to creative ideas too – After all, many famous scientists of yesteryear discovered today's wonders through thinking, experimentation and philosophy 🙂

And I suggest that many articles on robotics are guilty of leading robot enthusiasts down that wrong path. Obstacle avoidance schemes are a good example. I've seen hobbyists spending a lot of time and effort building an obstacle avoiding robot. This is a popular topic in hobby robotics. So they end up with a robot that can avoid obstacles but has absolutely no clue where it is in a room, or how to get from the living room to the kitchen.

I see where you’re coming from, but again, no one person will be the best at obstacle avoidance programming, or be able to program the best vision algorithms, or balance algorithm, etc… everyone has to start somewhere, so I don’t see that as a problem really.

I prefer to work the other way around. I'll program it to get from the living room to the kitchen and be able to know where it is at all times first.

Again, this is what I wanted this post to be about: researching and learning.

How do you (accurately) know where you currently are in space and time?

And I'll just be sure that there are no obstacles in that path. Then after I have that down pat, I can start taking into consideration what the robot should do should the path be blocked.

How do you get that down pat? This is an algorithm in the making, and in the waiting! 🙂

The "Right Go-Around" obstacle avoidance routine was chosen by the robot based on the visual input that it could see that there was sufficient room to go around the object on the right side, but not on the left side. That too would be considered A.I. by some.

Sure, but how does this vision actually work? What is vision after all?

Again, these are the type of questions I would like to explore, if anyone is willing, of course 🙂

In fact, it's extremely unclear what A.I. even means.

100% - One of the reasons I am requesting this topic!

So far, I have no idea what AI is, and I have read many views about what it may be (and there are many, many views and opinions about it), but I am still not convinced by the different approaches I have seen so far… in one way or another, they are all still boilerplate brute force algorithms, relying on fast super computers to mimic the goal of intelligence – Can it even be replicated? Who knows…

I'm following a course on A.I. by Paul McWhorter. However, thus far we haven't done any A.I. at all, and so far it appears that he's going to be calling the ability to track objects in a video frame as A.I. I personally wouldn't even call that A.I. I would just call it object tracking.

I will check him out when I get a chance, thanks.

In any case, "A Trip to the Kitchen. A Robot Fantasy" was created to show how different microcontrollers work together to create a single robot entity. In this case the Raspberry Pi (RaspiSally) was clearly the main brain of the robot, the overseer of all the other microcontrollers, including the far more powerful Jedi (Jetson Nano). So on this robot the Raspberry Pi is the central "self" of the robot if you like.

Indeed, I believe there needs to be some kind of brain, a central processing unit if you will, however, as I mentioned earlier, I also think there needs to be 1 or more local origin points within the entity to determine absolute or incremental location with precision… without such reference, I don’t think it can ever work properly… IMO 🙂

The idea also is that this is an autonomous robot in that it can perform tasks autonomously after having received sufficient information and instructions to perform the task.

My view is that intelligence should not need instructions… it should just know what to do based on historical memory (the intelligence part) that was automatically learnt, not fed into as an instruction.

I didn't really mean to imply that this would be an example of an A.I. robot. That can all depend on who's defining what A.I. needs to be.

Indeed, we have to agree what AI actually means… at this point, I don’t think there is such a sound definition, though I accept there are many views of what it may mean.

Like I say, if the ability to recognize words on labels, and correctly calculate the position of objects is considered to be A.I. (and many people do consider these things to be A.I.) then RaspiSally could be said to be an A.I. robot. I wouldn't personally go that far. But to each their own.

If a robot was able to scan and categorise labels into its long term historic memory, and then associate words and meanings to those labels in a similar fashion, then I would consider that AI.

I posted this in the A.I. forum mainly because I couldn't find another suitable forum category to post it in. As you know, we don't have an Algorithm, FlowChart, or Program Planning forum. Although we probably should have one since planning out program algorithms is every bit as important as coding them.

Indeed, it would be good to have an algorithm before you start coding 🙂

Probably even more important. Most of us (myself included) often tend to just jump straight into writing code and just let it evolve as we write it. That's actually not the best way to code.

I’m guilty of that too, and I guess we all are when we are excited about a new idea 🙂

Here is a list of objects for which I believe algorithms would add great discussion:

Eyes – Degrees of motion, smooth motion (what are they made of? What sensor(s)? How many?)

Wheels, arms or legs – Speed, velocity, acceleration

Robot Body – Balance

Etc…

If you feel these are good candidates for discussion, feel free to add a comment, or even introduce other topics you feel should be common robotic algorithms.

Cheers!

And @frogandtoad I saw an article on a bot that they didn't train, instead, they let it figure out on its own how its arms and legs worked, then watched it figure out what is the best way to walk. Q-learning I think they called it.

So, no training

If I can find the article I'll post it. It was interesting

Thanks again... watched a little, but totally over my head 🙂

Sometimes I think if I have to spend that much time learning someone else's AI, I might as well invent my own 😛

This is such a big topic with all sorts of people claiming they know best... I don't want to dedicate a decade to learning these things that change like the wind, though you can certainly learn about something new!

Did I mention that I absolutely hate frameworks? 😀

If you feel these are good candidates for discussion, feel free to add a comment, or even introduce other topics you feel should be common robotic algorithms.

Cheers!

WOW! You've touched on a lot of interesting topics that are worthy of further discussion. I'm not even sure where to start. This is going to take some time. I think I'll just go back up to the top of your last post and just comment on some key issues as I read down through it again.

Ahha! This is where I view AI a little differently… I think the robot must already have an array of internal origins, and an offset (home base) origin, which can and will also be dynamic, depending on the location of the robot! With that in mind, it should first be able to map an area it enters and determine those key points on the fly!

It's going to be hard to narrow this down to just a couple points.

I agree with what you say here and this will be the very first task of my robot. Its first mission is to map out the entire house, room by room, and to always be able to report which room it's in and where it is within the room.
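Once a map exists, "which room am I in, and where in it?" can be as simple as a lookup. The sketch below assumes the house map is stored as named rectangles in a shared home-base coordinate frame, in metres; the room sizes and the `locate` function are invented for illustration and say nothing about how the map itself gets built.

```python
from typing import Dict, Optional, Tuple

Rect = Tuple[float, float, float, float]  # x_min, y_min, x_max, y_max in metres

# Hypothetical floor plan, measured from a home-base origin at (0, 0).
HOUSE_MAP: Dict[str, Rect] = {
    "living room": (0.0, 0.0, 5.0, 4.0),
    "dining area": (5.0, 0.0, 8.0, 4.0),
    "kitchen": (8.0, 0.0, 11.0, 4.0),
}


def locate(x: float, y: float,
           house_map: Dict[str, Rect] = HOUSE_MAP
           ) -> Optional[Tuple[str, Tuple[float, float]]]:
    """Return (room name, position relative to that room's own origin), or None."""
    for room, (x0, y0, x1, y1) in house_map.items():
        if x0 <= x < x1 and y0 <= y < y1:
            return room, (x - x0, y - y0)
    return None  # outside every mapped room


print(locate(9.0, 1.5))  # ('kitchen', (1.0, 1.5))
```

Each room's lower-left corner acts as a local origin, which fits the idea quoted above of keeping several origins alongside one home-base origin.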

Also, I may bring this following point up repeatedly, but I'm certainly not going to expect my robot to know anything I wouldn't expect a human to know. Especially a very young human. In fact, imagine, if you can, a young human who has no experience. Perhaps one that had been in a coma from birth and had only just now awakened. Even a human is going to need to ask things like "What is this room called? Why is it called a living room? What is the purpose of this room? What is it used for?".

Why should we expect a robot to figure things out that we wouldn't expect a human to have to figure out all on their own?

Again, this is what I wanted this post to be about: researching and learning.

How do you (accurately) know where you currently are in space and time?

Even a human has to look around and recognize things that they have already seen before or already know about. How well your robot will be able to do this will depend on what kind of sensors you provide it.

How do you get that down pat? This is an algorithm in the making, and in the waiting!

You are asking here how I plan on getting down pat a robot that can find its way around my house when the house has been purposefully cleared of anything that might be in the paths that the robot is expected to take.

This is not easy to answer in a short post. But the idea is that the robot's first task will be to map out the entire house. And the robot is allowed to ask questions like "What is this?" when it detects something.

Just like a child. "What is this?" That's a couch. "What is this?" That's a table. "What is this?" That's a chair. "What is this?" That's a wall. "What is this?" That's a door jamb leading into the hallway.

I have no problem answering any questions my robot might have.

I'm going to treat it just as I would a human child.

Sure, but how does this vision actually work? What is vision after all?

Again, these are the type of questions I would like to explore, if anyone is willing, of course 🙂

There are many different ways to approach vision. I strongly recommend Paul McWhorter's course on "A. I. on the Jetson Nano" if you want to understand how vision can be accomplished with a video camera.

So far, I have no idea what AI is, and I have read many views about what it may be (and there are many, many views and opinions about it)

I'm in 100% agreement with you that A.I. is poorly defined. There are tons of different opinions on what different people mean by A.I. It's not a topic I'd want to argue about. Unfortunately we're stuck with having to use the term to hopefully try to convey something meaningful. I'm personally interested in what I call Semantic A.I. But even that term is used by different people to mean different things. I use it to mean a system of intelligence that is built up around the meanings of words. This then also requires a language, and an entire vocabulary that the robot can "understand". I put the word understand in quotes because from my perspective the robot needs to actually understand the meaning of the words, and not just have a huge dictionary that gives definitions for words that the robot doesn't understand. This is a huge topic in and of itself. So I'll just leave it here for now.

My view is that intelligence should not need instructions… it should just know what to do based on historical memory (the intelligence part) that was automatically learnt, not fed into as an instruction.

Again this brings up the concept of a human. Would you expect a human to just know what you want them to do without having to give them instructions? I'm not expecting my robot to do anything that I wouldn't expect a small child to be able to do. We send our children to school to be taught new things. Why should we think that a robot should be any different?

If I had a robot that I could send to kindergarten and it could learn as well as a human child, I would consider that to be extreme success.

Indeed, we have to agree what AI actually means… at this point, I don’t think there is such a sound definition, though I accept there are many views of what it may mean.

I think that it's more important to simply understand what the other person is trying to mean by it, rather than to necessarily agree on what it means.

If someone else considers something to be A.I. and I don't, that's fine. At least I can understand what they are talking about. I don't need to agree that we should call that behavior A.I. Similarly, they may not agree with my ideas of what A.I. should be. That too is fine. The idea is to simply convey to them what I'm trying to get my robot to do. Whether we agree that this should be considered to be Artificial Intelligence or not isn't really important, is it?

If a robot was able to scan and categorise labels into its long term historic memory, and then associate words and meanings to those labels in a similar fashion, then I would consider that AI.

And there you go. I don't even see that as being a difficult task to program a computer to do. I personally wouldn't necessarily consider that to be A.I. The robot would also need to be able to make semantic connections between the different concepts that the words represent before I would call it A.I. That's a bit more difficult to achieve, although not impossible. In fact, it is this latter situation that I'm calling "Semantic A.I." This is where my interest in A.I. lies.

Well, it looks like I made it to the bottom of your post. Lots of interesting topics to cover to be sure.

And all this came from a little Robot Fantasy. Apparently it was well worth writing and posting.

Vision in robots and humans is something I know something about having taken an interest in the topic along with AI in my early teens. I have been experimenting with webcams to enable a robot to navigate its way around a house and locate and identify objects.

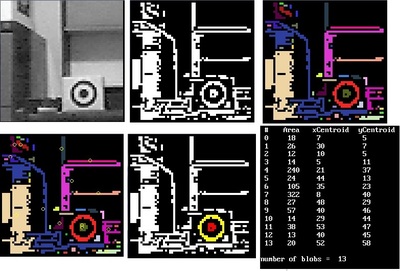

As I have shown elsewhere, my first practical foray many years ago started with a question from someone wanting to use a Game Boy camera for a robot to locate the type of target shown in the first image below. One solution, shown below, involved applying a local threshold to the image, then collecting all areas (blobs) that were large enough and didn't touch the edge of the image, and then searching that list to identify the two areas with the closest centroid values. For display purposes I had the two selected blobs colored.
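The last two steps of that approach (filtering the blobs, then finding the pair whose centroids nearly coincide) can be sketched as follows. This is not the original code: it assumes thresholding and blob labelling have already been done by some other routine, and the `Blob` fields, the `find_target` name, and the sample numbers are all made up for illustration.

```python
import math
from dataclasses import dataclass
from itertools import combinations
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Blob:
    label: str
    area: int            # size in pixels
    cx: float            # centroid x in pixels
    cy: float            # centroid y in pixels
    touches_edge: bool   # True if the blob touches the image border


def find_target(blobs: List[Blob], min_area: int = 50) -> Optional[Tuple[Blob, Blob]]:
    """Return the two usable blobs whose centroids are closest together."""
    candidates = [b for b in blobs if b.area >= min_area and not b.touches_edge]
    if len(candidates) < 2:
        return None  # no pair to compare
    return min(combinations(candidates, 2),
               key=lambda pair: math.hypot(pair[0].cx - pair[1].cx,
                                           pair[0].cy - pair[1].cy))


# Made-up blob list standing in for the output of a labelling step.
blobs = [
    Blob("ring", 400, 120.0, 80.0, False),
    Blob("dot", 90, 121.0, 80.5, False),
    Blob("lamp", 300, 30.0, 40.0, False),
    Blob("wall", 900, 5.0, 100.0, True),    # rejected: touches the image edge
    Blob("speck", 4, 120.0, 80.0, False),   # rejected: too small
]
pair = find_target(blobs)
print([b.label for b in pair])  # ['ring', 'dot']
```

A target made of one shape nested inside another is handy for this test, because two unrelated blobs in a room rarely share almost the same centroid.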

@casey

Hey, that's pretty cool!

How accurate are you finding it?

BTW... thanks for putting me onto PROCESSING... I did know of it, but never explored it until now. I did download it and have started learning how to use it... so far I really like what it offers, as I'm comfortable with OOP, and not too bad with Java!

Cheers!

If you mean how accurate is a target image in providing the robot's position I will try and reply to that in another thread on using vision as I don't want to hijack Robo Pi's thread on communicating modules within an AI system.

@casey

If you mean how accurate is a target image in providing the robot's position

Yes, exactly what I was referring to, thanks!